Mark Scanlon discusses his research at DFRWS EU 2017.

Scanlon: My name is Mark Scanlon from University College Dublin. As [Owen] said, this paper is co-authored with a PhD student of mine and a colleague of mine in the school.

The point of this project is to try and make digital forensic challenges for a variety of different use cases. So to just give a quick overview of what we’re talking about, I’m going to talk about some existing techniques that are used for creating digital forensic challenges and talk a little bit more about the motivation behind the work that I’m presenting here, talk about some of the design considerations for the tool that we’ve developed, talk about EviPlant itself, and then go on and talk about some future work.

First and foremost, Digital Forensic Challenges – I’m sure there are a lot of academics in the room, a lot of people involved in training and education. So one thing that you’d be familiar with, with digital forensics education, is trying to create or curate challenges to give to a class. So it’s a pretty arduous task, right? You create a virtual machine, you emulate the criminal activity, you end up with an image that’s pretty big and you’re trying to distribute that image amongst your entire class. If you’re teaching [online] or a [01:20], then you have to try and distribute that over the internet, which adds a whole other level of complexity to the whole issue, as you are all familiar with here. You can’t rely on the quality of Wi-Fi connections.

[But challenges are also] used for proficiency testing. I think they’re going to be used more and more for proficiency testing. So kind of ongoing maintenance of your qualification or proving your skills so that you can stand up in court. But it’s also used then for forensic tool testing and validation. So if you did create a new forensic tool, you can submit it to some of the standardization organizations, like NIST, and they can run some tests against it and see if your tool is forensically sound or not.

So when you’re creating digital forensic challenges, there are a number of characteristics that are necessary. Obviously, I’m coming at this from an educational perspective, but obviously, it’s applicable to all of the other things I just mentioned as well. The first is that there should be an answer key – so obviously, if you’re creating something, you should know what the challenge is, what are people meant to find.

And there should be some realistic wear and depth to the image. So it’s not sufficient to just have a brand new virtual machine, you install Windows, you emulate some activity, and that’s the only stuff that’s there. You need to have some wear and tear there as well, right? And that takes time. So if you’re there trying to create these images and you’re trying to make it realistic, it could take you weeks or months to build up that amount of wear and tear, or your poor graduate student will be tasked with doing that.

Realistic background data and noise – one of the key skills for a forensic investigator is to be able to differentiate between pertinent and non-pertinent information. So the only data that’s on the image shouldn’t just be the data that’s relevant to the answer, right?

And lastly is sharing and redistribution. Ideally… well, I mentioned already, you have to share it amongst the entire class. So we’ve seen already, it’s relatively complex to try and share even a few gigabytes within a cohort of people. And from a remote scenario, that gets even more complex. But this also means sharing and distribution amongst educators or amongst people who use these challenges. So because it takes so long to create these things, we should share this stuff more. But people hang on to what they have for their own classes, and they don’t worry about everybody else.

A number of existing approaches for creating these challenges – the first is manual. That’s kind of what I described already. This approach normally starts off with creating a virtual machine. You install an operating system or you might have a virtual machine to run with. And then you emulate the activity.

So you use the virtual machine, you log on to various services, you download things, you install things, you uninstall things, whatever you’re trying to teach that particular week, or whatever the challenges you’re trying to create. The nice thing about this is that you’re not waiting for something else to happen – you’re in control of your own destiny, so to speak. So you can emulate whatever activity you need to teach your class. It’s obviously very time-consuming – it can take weeks or months, as I mentioned. And another advantage of this is that the precise evidence is known. Because normally, what I do in this scenario is I will effectively write a script for what I’m going to do, and that script is effectively the answer that the students have to find.

The next approach is trying to add a little bit of automation, for want of a better phrase. So using honeypots – set up a honeypot, install Windows XP Service Pack [nothing], and wait for everything to attack it and wait for traffic to build up, capture the network logs, whatever you want to do. So you’re recording the activity of those who are trying to attack that system, but the majority of those attacks are automated, so the difficulty is trying to create attacks whereby it’s actually like emulating the manual of attack. So some guy is actually targeting your system, it’s not just an automated kind of port scanning attack.

The next approach is to buy second-hand equipment. We’ve kind of seen that at some of the workshops yesterday. Second-hand equipment is a very fruitful source of information. You can buy second-hand hard drives off eBay, you can buy some USB keys that are made in China, and you can have some naturally occurring evidence on those devices. So buying a second-hand hard drive, the guy might not have even formatted it; maybe he did. There’s information that you can get from that. Obviously, you enter into some interesting legal quandaries there, and maybe ethical considerations. So yes, you can find this stuff that the guy that’s selling this drive isn’t familiar, isn’t aware that you can retrieve this information from him. So there’s some data protection issue.

Another approach is automated scripting. So the idea here is that you write a script to be able to perform actions. One of the simplest examples of this is macro recording – recording the keyboard interactions and the mouse interactions for a system to form whatever it is, and then you can replay that event. So you can literally replay the event on a virtual machine, and you can see what happens. The nice thing about automation is that, obviously, you can introduce some randomness. So the idea of sharing a different challenge potentially with each person in the class. So the guy looking over his friend’s shoulder at what he’s doing, he might be looking to get a different answer than the guy thinks. And you also have instructor-based kind of storytelling – so you can curate this as you like, you can have a story that you’re trying to get the guys to figure out, and you can potentially then have a different story for everybody in the class. You can build up a corpus of events, you can piece those events together as you like, and you can end up with some nice challenges.

So the motivation behind this work is … first of all, it’s hard, it’s arduous to create these images. It’s kind of a waste of time. So you typically have digital forensics experts, digital forensics educators, the standardization agencies, they’re creating these images, and that takes an awful lot of time. It’s a waste of expert time creating these challenges to test the tools, to test the students, whatever it is. So our whole premise is to try and reduce this time through the methodology I’m about to talk through. And the idea here is to plant evidence into an image, and then use that image for whatever you need it for.

So on to EviPlant. Some design considerations that we had for the tool. First of all, ease of creation – that’s the fundamental point here. We should be able to configure stuff, let it run, and we end up with a nice image to use for whatever purpose we have. Efficient distribution – so I teach on an online Masters course for law enforcement, and it’s a nightmare trying to distribute images. We have guys from all over the world on the course, some people have very slow internet access, some people only have internet access from specific times, and they’re using a whole variety of different devices, and it gets very difficult to share information, even relatively small pieces of information, to people efficiently. And efficient injection – so I’ll talk a little bit more about the injection process in a while, but the idea is that planting the evidence shouldn’t take too long, you should be as efficient as possible.

Operating system compatibility – so again, the tool should run on anything, because people are using a wide variety of devices. And mobile compatibility I think is important as well. When I refer to mobile compatibility here, I mean creating and curating, planting evidence on mobile devices [and mobile devices …] it shouldn’t just be stuck to the standard Windows and Linux.

The premise of the system is that we have or we create base images. Base images are virtual machines, VHDs, whatever, and you basically install the operating system, Next, Next, Next, Next, and then shut it down. So that’s your base operating system you’ve got up and running. So you create it for whatever set of operating systems you’re interested in. You then can emulate the activity that you want. So you can log on to the service, you can install the files, you can do all that, whatever you need to emulate for the students to find or the tools to find. Now, you probably listened to [09:35], didn’t he just say that that’s what’s wrong with the system? But bear with me, okay?

And then, the resultant images … so you start off with that base image, you boot it up, you do your thing, you shut it down, and then what we have is a diffing tool – diffing, kind of coming from the Unix “diff” command. So the idea here is that we can diff those two images and find all of the artefacts that are on Image B that are not on Image A. As a result, we can package them together. They are all the changes.
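
The diffing step described here can be sketched roughly as follows. This is only an illustration, not EviPlant's code: the images are stood in for by plain dictionaries of path to content, and the names `manifest` and `diff_images` are hypothetical. The real tool works on disk images rather than in-memory dictionaries.

```python
import hashlib


def manifest(files):
    """Map each path to the SHA-256 of its content (files: dict of path -> bytes)."""
    return {path: hashlib.sha256(data).hexdigest() for path, data in files.items()}


def diff_images(base, modified):
    """Return every artefact that is new or changed in `modified` relative to `base`.

    Both arguments are hypothetical stand-ins for parsed images: dict of path -> bytes.
    """
    base_hashes = manifest(base)
    changed = {}
    for path, data in modified.items():
        if base_hashes.get(path) != hashlib.sha256(data).hexdigest():
            changed[path] = data  # content is treated purely as binary
    return changed


# Image A: the freshly installed base. Image B: the same machine after the emulated activity.
image_a = {"/Windows/notepad.exe": b"binary", "/Users/u/ntuser.dat": b"v1"}
image_b = {
    "/Windows/notepad.exe": b"binary",
    "/Users/u/ntuser.dat": b"v2",
    "/Users/u/Downloads/tool.zip": b"payload",
}
package = diff_images(image_a, image_b)
```

Only the changed registry hive and the new download end up in `package`; the untouched system file is left to the base image.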

So the idea here, with these evidence packages, is that you have all of the files, all of the artefacts. It doesn’t matter what they are, they could be partial files, they could be entire files. It doesn’t matter what the content is, it’s just treated like binary information. So you have all of the files, the artefacts themselves, and you have all of the metadata associated with them. This is grouped together in an evidence package. You can create packages and you can build up a set of packages for wear and tear, for depth, for different personas, different user activities, different behaviors, and different case types, what you’re targeting that particular week or whatever.

So there are different types of packages that you could use with this system. The first we’re kind of calling a black box package. This is basically the basic output from that diff I mentioned. So you diff your two images, this black box package contains all of the stuff that’s changed. You don’t need to look in it, you don’t need to know what’s in there. You just know that all of the changes that you’ve performed are contained within that package. All emulated activities start off as a black box package. But over time, what we’re trying to do is start to open up that package and see what’s in there, and begin to reverse engineer … that mightn’t be the correct term, but begin to figure out all the stuff that’s in that package and see what it is, and break it down into its individual components.

So when we do that, when we do actually reverse engineer those packages, we’re able to look at the files contained therein, we’re able to look at all of the metadata associated with it, and what we can do then is start to manipulate some of the standing stuff that’s in there. So if we wanted to tell a story and we were emulating some browsing activity and some Skype call logs and chat messages, we can start to manipulate those databases behind those various browsers or instant messaging tools, and we can build up a story, we can build up a thread of activity. And the idea here is that we can then … if we have enough packages, we can kind of build up a story. So we say that we want this persona, this is the type of thing we’re trying to teach or test for, this is the sort of wear and tear I want, this is the level of difficulty I want to make this challenge – so you can make more and more stuff. And something that we’re working on right now is the idea of introducing dependencies. So if you do need to include this package, well, maybe you need some previous packages that are already there from previous acquisitions.

The planting phase – when we start off here, what we have is basically a blank staging area, right? We copy our base virtual machine image into that staging area, and then what we do is we take all of the artefacts that we have from that particular evidence package, and we place them in the image at the appropriate offsets. So what happens there now is that we end up with an image that has all of the files, all of the artefacts in the correct place. Right now, what we have is it will simply overwrite something. So if something else is there in that place, it’ll overwrite it. So it’ll end up with an image that is not a forensically sound copy to your VHD that you have when you perform the action. But it’s analytically sound for the purpose of education, training, tool testing.
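
As a rough illustration of the planting phase, the sketch below copies a base image into a staging buffer and overwrites bytes at the offsets recorded in a package. The function name `plant` and the `(offset, data)` layout are assumptions made for the example; a real image would be a file on disk rather than a 16-byte buffer.

```python
import io


def plant(base_image: bytes, artefacts):
    """Copy the base image into a staging buffer and write each artefact at its offset.

    `artefacts` is a hypothetical list of (offset, data) pairs taken from a package.
    """
    staging = io.BytesIO(base_image)  # stands in for the staging copy of the base VM image
    for offset, data in artefacts:
        staging.seek(offset)
        staging.write(data)  # simply overwrites whatever was there before
    return staging.getvalue()


base = bytes(16)  # a tiny all-zero "base image"
result = plant(base, [(4, b"EVID"), (10, b"XY")])
```

The base image itself is never modified, so the same base can be reused for any number of packages.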

We’re approaching forensically sound reconstruction. We’ve done it with some small devices, but not with entire operating systems just yet. But the idea here is that you end up with something that’s a viable challenge.

So ideally, what we have here is that we end up with a whole host of different evidence packages. As I say, we’d have packages for different personas, we’d have packages from other users of this system, so it’ll kind of be a share and share alike sort of idea. And you can group these packages, as many of them as you like together, to build up a complex challenge.

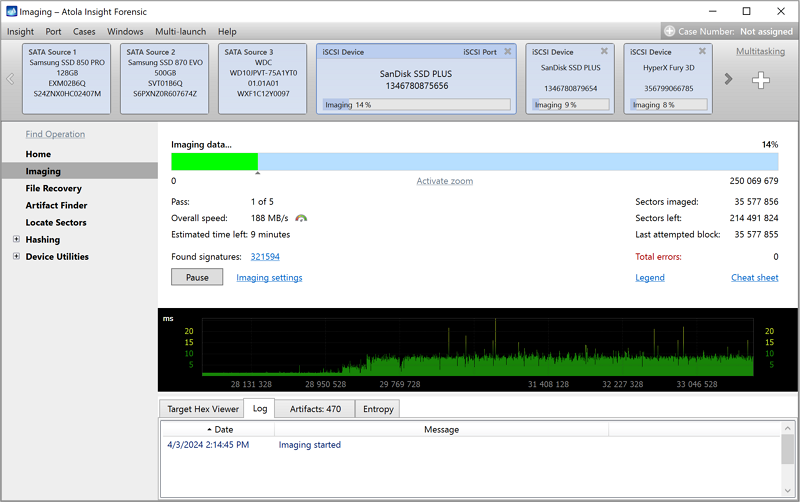

Okay, so far, we have a prototype of this system developed. It’s developed in Python, and we’re making heavy use of the pytsk library for Python, which is a Python wrapper for the SleuthKit, if you’re not familiar with it. The nice thing about the pytsk library is that obviously it does a lot of the work for us in terms of accessing the various artefacts on a disk. We can mount images, we can extract all of the metadata, we can extract as much information as we want. And we’re also dealing with, as part of this, we’re dealing with unallocated space. So if there is any unallocated space on the virtual drive, we’ll acquire that. We don’t treat it as anything different – it’s just binary content. In terms of the black box package … but it’s just binary content that we take. And everything is just … right now it’s added into a kind of data store, it’s a deduplicated data store on a database associated with …
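
A deduplicated data store of the kind mentioned here could look something like the sketch below, which keys each piece of binary content by its SHA-256 hash in SQLite. The schema, table names, and the `add_artefact` helper are assumptions for illustration; the talk does not describe the actual database layout.

```python
import hashlib
import sqlite3


def open_store():
    """Create an in-memory store; the schema is hypothetical."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE blobs (sha256 TEXT PRIMARY KEY, content BLOB)")
    db.execute("CREATE TABLE artefacts (path TEXT, byte_offset INTEGER, sha256 TEXT)")
    return db


def add_artefact(db, path, byte_offset, content):
    """Record an artefact's metadata and store its content only once per unique hash."""
    digest = hashlib.sha256(content).hexdigest()
    db.execute("INSERT OR IGNORE INTO blobs VALUES (?, ?)", (digest, content))
    db.execute("INSERT INTO artefacts VALUES (?, ?, ?)", (path, byte_offset, digest))
    return digest


store = open_store()
add_artefact(store, "/a/logo.png", 4096, b"same bytes")
add_artefact(store, "/b/logo_copy.png", 8192, b"same bytes")
# Unallocated space is not treated differently: it is just more binary content.
add_artefact(store, "unallocated", 12288, b"\x00\x01residue")

blob_count = store.execute("SELECT COUNT(*) FROM blobs").fetchone()[0]
artefact_count = store.execute("SELECT COUNT(*) FROM artefacts").fetchone()[0]
```

Three artefacts are recorded but only two blobs are stored, because two of them share identical content.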

All metadata then, as well, is stored inside of that database, and we can use that to modify the images as we like. And what we’ve done to test it out is we’ve created those base images that I mentioned. So they’re just standard [AR] operating system installs, nothing else on them. We use those as our base image. We’ve tried out a variety of different emulated activities, we’ve performed those diffs, and we’ve ended up with a variety of different evidence packages.

We then [tried] the reconstruction side that we just mentioned. So there’s two methods for reconstruction, and one is better than the other and one is harder than the other. So logical injection – logical injection is what we have up and running right now in our prototype. For that to run, we have a trace on the base image that we’ve ran the injection to. We’re trying to eliminate this as much as we can by going down the physical injection route, which I’ll talk about in just a second. So right now, yes, you can know, by looking at our image, that we have reconstructed that image. But what I would argue is that doesn’t matter for the purposes that we are creating these images, right?

We’ll get to that point – we’ll get to the forensically sound reconstruction, but right now we have analytically sound reconstruction, which is a nice term.

Then what we did was we ran our evidence packages back in, we took an acquisition of that virtual drive using EnCase, and we were able to recover the activity that we had, the browsing stuff, the IM messages, whatever we did, we were able to recover that stuff – as you might expect. That’s not a surprising outcome from us injecting exactly what we took from the original disk.

We’re trying to get to the point of physical injection. What I mean by physical injection is being able to directly manipulate the kind of NTFS partition. So you’re not relying on the base images – sorry, the modified base images – providing all of the information. So we’re able to actually use stuff from a variety of different places, and just physically [DB] them into place where they should be, and then modify the MFT appropriately, so that it’s still accessible.

So there’s a number of benefits that you get from a system like this – from an educational standpoint. There’s some other benefits as well, in just a second.

The first is that what I find with my class, with forensics, is that because it’s so hard to make these images, there’s a number of things that happen. First of all, you have a limited set of examples that you can provide students to work with. And plagiarism is rife. I mentioned that I teach regular undergrads, computer science students forensics, and I teach law enforcement. There’s no difference, they’re all the same. There’s plagiarism that takes place. So as soon as you create an image, you share it with the class, the answers to that image are given to next year’s class, right? So that image that you’ve just created, if you were using it for a test, for an exam, you end up with people cheating. And that’s very, very hard to trace, because everybody has the same answer. This is part of the problem with the existing model.

What this allows you to do is potentially create, have on-the-fly generation – which we’re nearly there with on-the-fly generation – on-the-fly generation of practice images, so then a class, if there’s a student there, you’d have the nerd that wants to get 100 per cent, he can practice as much as he likes, he can keep generating new challenges, new images, see how he gets on. The nice thing is that you can have effectively automated correcting here as well, because you know the answers, you know what you’ve put in what location.

And helps to eliminate plagiarism – so there’s two scenarios with that. First of all, in a kind of lab-based exam scenario, where you have people sitting beside each other, everybody in that room could potentially [be presented] with a different challenge, whereby you’re testing the same skills, the same techniques, but the answers are actually different for everybody in the class. In a remote scenario, now that enables you to actually have a similar exam.

So right now, I don’t do this. I don’t send large hard drive images around the world. It can’t be done. So in a remote scenario, you could have an exam where people are presented with a challenge, and they’re all in different time zones, they’re all around the world, you don’t have to coordinate an exam. So you can send out different challenges to everyone in the class, and it’s no good even if they talk to each other, because the answers are different, the techniques that they need to use are probably slightly different, but the fundamental skills are the same that you’re trying to assess.
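
One way to hand each student a different challenge that tests the same skills is to pick packages from a shared pool using a per-student seed. The sketch below assumes this approach; the package names, the `assign_packages` helper, and the seeding scheme are all hypothetical and are not described in the talk.

```python
import random


def assign_packages(student_id, pool, count, course_seed="forensics-exam"):
    """Pick `count` packages for one student, reproducibly, from a shared pool."""
    rng = random.Random(f"{course_seed}:{student_id}")  # same student always gets the same set
    return sorted(rng.sample(sorted(pool), count))


# Hypothetical package names; each would be one evidence package.
pool = ["browsing-a", "browsing-b", "chat-a", "chat-b", "usb-a", "usb-b"]
challenge_for_alice = assign_packages("alice", pool, 3)
```

Because the choice is reproducible from the student identifier, the instructor can regenerate the matching answer key at marking time.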

Okay, on to assessment. From an assessment standpoint, it’s actually fairly straightforward. We know the answer, because we’ve just used the answer to create the challenge. So you can get to the point where you have effectively automated assessment, and the interesting one here is that you can replay case challenges. So if some guy had an interesting technique that he followed to be able to figure out a case, what you can do is you can present that case at any point back to somebody else, probably in the same institution, for data protection reasons, and you can actually replay that case. So what information did he have at that point and what technique did he follow to be able to solve it?
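
Since the answer key is simply whatever was planted, automated marking can be sketched as a set comparison. The `grade` function and the artefact paths below are illustrative assumptions, not part of the tool as presented.

```python
def grade(answer_key, submitted):
    """Compare a student's reported artefacts against the planted ones."""
    found = answer_key & submitted
    return {
        "found": sorted(found),
        "missed": sorted(answer_key - submitted),
        "false_positives": sorted(submitted - answer_key),
        "score": len(found) / len(answer_key),
    }


# Hypothetical artefact identifiers for one generated challenge.
answer_key = {"/Users/u/History.db", "/Users/u/chat.db", "/Users/u/Downloads/tool.zip"}
submitted = {"/Users/u/History.db", "/Users/u/chat.db", "/Windows/notepad.exe"}
report = grade(answer_key, submitted)
```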

Outside of education for a second – obviously that’s where my expertise is, but you also have proficiency testing. So I started out by saying that I think proficiency testing is kind of a hot topic. It’s starting to become somewhat commonplace, but I think it’ll be far more commonplace in the next five or ten years. So in a controlled environment, you can do the same thing. You can have in-house proficiency testing on the fly. Automated tool testing and validation – so right now, as I mentioned, if you did submit your tool to the standards agencies for validation, it’s a manual process. It goes into a queue and there’s a manual process to try and evaluate that tool. But you can effectively get to the point whereby you have automated validation of tools, so that you’re using frequent hashing, you know the answer set, the student is now just an automated tool that has to find the same piece of information, and you’re able to validate that process that is not interfering with the original evidence in any way.

Point-in-time reconstruction – this is something that I’ve literally spent the last few weeks at. This is where we’re at – point-in-time reconstruction. And point-in-time reconstruction means if you were analyzing a cloud instance, let’s say. So you’re on the hypervisor level, you have access to the VMs, so everything’s perfect from that perspective. And you take a snapshot, you take a diff of that image. You can take frequent diffs of that image. So effectively, what you can do then is reconstruct that cloud instance at any point in time, or construct multiple different time points, provide them to a class, provide them for a challenge, and say, “What’s happened over time?” So even if somebody has securely deleted stuff, if someone has manipulated the information, performed some counter-forensic techniques on the data, you should be able to replay it and see exactly when that happened. And then maybe you can find some traces about who was logged in or what techniques did they use.
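
Point-in-time reconstruction can be pictured as replaying timestamped diffs on top of a base state and stopping at the moment of interest. In this sketch the state is a dictionary of path to content, a value of `None` marks a deletion, and the name `reconstruct` is hypothetical.

```python
def reconstruct(base, diffs, at_time):
    """Apply every diff with timestamp <= at_time, in order, to a copy of the base state."""
    state = dict(base)
    for timestamp, changes in sorted(diffs, key=lambda d: d[0]):
        if timestamp > at_time:
            break
        for path, data in changes.items():
            if data is None:
                state.pop(path, None)  # the file was deleted in this interval
            else:
                state[path] = data
    return state


base = {"/var/log/auth.log": b"boot"}
diffs = [
    (1, {"/tmp/dropper.sh": b"#!/bin/sh"}),
    (2, {"/var/log/auth.log": b"boot;login root"}),
    (3, {"/tmp/dropper.sh": None}),  # attacker cleans up
]
before_cleanup = reconstruct(base, diffs, at_time=2)
after_cleanup = reconstruct(base, diffs, at_time=3)
```

The deleted script is still visible in `before_cleanup`, which is the point made above about replaying counter-forensic activity.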

This can also be used then for malware life-cycle analysis. So in terms of the attack vector, its behavior when it installs, how it gets the payload, where it gets it from. So you can take frequent snapshots, because the diffs are very small each time.

In terms of future work, where we’re at – we have the black box thing up and running. It’s going well. You can reconstruct an image. It’s analytically sound, as I mentioned. We’re starting to open those packages. Some of the stuff is easier to deal with than others. My examples that I gave there, of instant messaging, chat transcripts and browsing histories, they’re all SQLite databases normally. So that’s fairly straightforward to manipulate, so you’re literally able to curate your own browsing history, curate your own chat logs.
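
Curating a browsing history in a SQLite database might look like the following sketch. The `visits` table is a made-up schema for illustration only; real browsers use their own, more complex schemas, and the URLs and timestamps here are invented.

```python
import sqlite3

history = sqlite3.connect(":memory:")
# Hypothetical schema: one row per visit, with a Unix timestamp.
history.execute("CREATE TABLE visits (url TEXT, title TEXT, visited_at INTEGER)")

# The "story" the instructor wants students to uncover, in any insertion order.
story = [
    ("https://example.com/search?q=secure+delete", "Search", 1490000300),
    ("https://example.com/", "Home", 1490000000),
    ("https://example.com/download/tool", "Download", 1490000600),
]
history.executemany("INSERT INTO visits VALUES (?, ?, ?)", story)
history.commit()

# Reading the records back by timestamp gives the timeline a student would reconstruct.
timeline = [row[0] for row in history.execute("SELECT url FROM visits ORDER BY visited_at")]
```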

Artefact collision resolution – so if you do have multiple packages, especially with the timeline stuff, you have to come up with a policy for what you want to happen when there’s a collision. So if you think about any particular artefact on a disk, over time, that artefact will … some of them will stay the same, but a lot of them will change and update. So what do you do when you encounter two artefacts that want to be in the same place, so that your story is able to be told? If they’re reversed packages, you can figure that out. You might be able to actually just interweave the entries. If they’re black box packages, right now what we do is overwrite. We just overwrite whatever’s there. Yes, it’s not perfect, but that’s the solution. So we’re trying to come up with a policy for that. It’s mainly for the black box packages.
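
The two collision behaviours described here can be contrasted in a short sketch: black box content is overwritten, while reversed packages whose records are understood can be interwoven. Both function names and the `(timestamp, text)` record layout are assumptions for the example.

```python
def resolve_black_box(existing: bytes, incoming: bytes) -> bytes:
    """Black box packages: the incoming artefact overwrites whatever is there."""
    return incoming


def resolve_reversed(existing_rows, incoming_rows):
    """Reversed packages: interweave understood records, dropping exact duplicates.

    Rows are hypothetical (timestamp, text) tuples, e.g. chat messages.
    """
    return sorted(set(existing_rows) | set(incoming_rows))


chat_a = [(1, "hi"), (3, "bye")]
chat_b = [(2, "how are you"), (3, "bye")]
merged_chat = resolve_reversed(chat_a, chat_b)
overwritten = resolve_black_box(b"old registry hive", b"new registry hive")
```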

And the last is physical injection, as I mentioned. So the idea of actually physically injecting the evidence in the appropriate place on the disk, and there’ll be no trace in the resultant image. You would be able to achieve forensically sound reconstruction of a disk using that method. We’ve achieved forensically sound reconstruction of a disk, not from the evidence packages, but on a different data deduplication project. So we’re looking to combine those two things together, that we’re able to curate and create images, and create challenges, using EviPlant.

That’s all I have. And I’d like to invite any questions.

[applause]

Audience member: Mark, thank you. [That thing’s] very useful. We are also in the education, so definitely it’s a challenge to make challenges. I imagine that generating or emulating the user activity may be – or if you want to script it, if you’re correctly inserting records in SQLite, I can also see that it might be difficult to do it at all the places at the right time. So how do you solve that? Do you, for example, keep track of the Windows event logs, so that as a whole it makes sense?

Scanlon: Yeah, to get the entire story reconstructed … first and foremost, just using the black box model, [25:33] the frequent injections, but if you think about the black box now, the simple thing, you just emulate the activity – which is basically what we’re doing right now, but we’re sharing the entire disk image. Right now it doesn’t matter what all that stuff is doing. If it’s just a black box, we just overwrite what’s there. The image is still bootable, all of the stuff is still there if you really wanted to do that. So from the black box, simple A and B, two images, that works perfectly fine. When you have the multiple evidence packages, you now need to start to reverse every single possible vector to show that that activity has happened. And we’re approaching that.

Audience member: [26:10] because you have [page] A and you want to go to B, and then, to get there, you emulate it.

Scanlon: Yeah.

Audience member: So you inject. But for instance, it might take time. So in a real scenario, it will take a week before something else happens, and …

Scanlon: Yeah. So when we have the reverse packages, we can actually build that timeline as you would like. So the more packages that we have that [were reversed], the more we can build that timeline to suit what we like. And even right now, it doesn’t matter if … we can inject some black box packages that effectively we don’t need to know anything about, we just put them in there. And we can have some reverse packages. So both of those things are not exclusive. You can have them both working together. And in that scenario, we do the black box stuff first, and then the reverse stuff, because it seems to make more sense.

But yeah, the goal of this project, to get there, is to look at every single vector to build the story. It’s easier with the black box stuff. To be honest, that’s fairly straightforward. That’s basically the existing stuff that we’re doing anyway, creating challenges. But we now have a small [thing] to distribute.

So to put that in perspective, you create a 10-gig Windows 10 install, or whatever, and you install some applications and you do all that kind of stuff. If you keep your RAM size on the virtual machine down, the size of the package that you now have to distribute to your students is in the order of hundreds of megabytes, as opposed to being the entire 10 GB. And again, if you’re in a remote scenario, the students or whatever just download the base images once, and then they can run multiple evidence packages into them week by week to have different challenges or whatever they want to do.

Audience member: Thank you.

Scanlon: Yeah, cool. Yeah.

Audience member: From a law enforcement perspective, I think you mentioned that [27:57] there could be [a couple of other] ways to detect tools like that being used.

Scanlon: Yeah, well, right now there are traces in the resultant images to prove that EviPlant has been used. We don’t see it as an issue for what I need in September. So yeah, something like this could be used for planting evidence – in the black box planting scenario, they would have to have a snapshot of the machine at a certain time, then perform their actions, whatever they want to do, or curate their own evidence package and inject it. But [they need to access it] at a pretty low level. It’s not really different than someone having physical access to a machine and taking out the disk and doing something to it.

So yeah, it’s something that’s crossed our minds. We’re not at that point trying to solve that problem of framing somebody for whatever crime it is, but … yeah. We’re aware of it, but we don’t have a solution to it right now, we’re not quite at that stage.

Audience member: Do you think that students might end up diffing the base image against the [29:07] version to basically find all the evidence?

Scanlon: [chuckles] Well, actually, the evidence package that we give them contains all of the information that they need to find. I mean, it has to, right? So we do that diffing for them, and we give them the evidence package, and all of the answers are in there. So yeah, the smart guys in the class will just look at the evidence package, and not look at the entire image. But that’s why we need a comprehensive set of packages being injected simultaneously, with that wear and tear, with that non-pertinent information in there as well. And the more that you have, the more realistic it is, but also the less you look at the image and the answer file is on the desktop.

I should just say actually, just if I could – if anybody is interested in this, I’m looking to roll out a sort of [alpha alpha] test of this for the coming semester in September. If anybody is interested, feel free to talk to me or send me a mail. You’d be most welcome to try that out.

Audience member: I have one question [30:07].

Scanlon: Yeah.

Audience member: Do you see [a sort of] purpose to use it commercially? So for the recruitments of new staff for forensic purposes, that they can basically do these type of assignments and [30:18].

Scanlon: Yeah. It’s not something I thought of, but it can turn to proficiency testing, because you can create these images with a specified level of difficulty, you can make them as complex as you require … So yeah, absolutely. The idea of … we all have the standard images that you get, that are standard, all the manufacturers release them, the standard images. We all have those, the answers are online everywhere for all of those things. So yeah, the idea of creating a never-before-seen challenge or test – sure, it would test the proficiency of someone [on the fly] to see how good they are [or not], yeah. Yes, lots of [30:55].

Host: One more? One last question.

Scanlon: Yeah.

Host: [31:03] actually, two questions.

Scanlon: Yeah.

Audience member: Are there any limitations in the [traces] that you can put on the image? And the other one is: Can you actually separate all the artefacts? Because I think [some intersections] or can you put this one and … does it still work?

Scanlon: Yeah, so the first question – we haven’t encountered a limit on whatever we can do. So it’s just a matter of time. The diff obviously gets longer the more stuff you do. The injection gets longer the more stuff that you’re injecting there. But we haven’t encountered a limitation for anything.

Even creating virtual machines with 16 gigs of RAM and a massive page file and all that sort of stuff – it injects just as well as it would. And the second question, in terms of overlapping stuff – in terms of the first scenario, we’re just using a black box solution, that will overwrite whatever is there. It’s a perfectly [beautiful] image, Windows is happy with it. It’s not forensically sound, as I say. There are traces that we’ve been there. But that image, if you were injecting multiple packages … so right now what we’re doing is we’re overwriting what’s there. So this is another reason why it’s analytically sound, because we may well have to slice or fragment the file. If we’re putting in a big file and there was a small file in that particular location before, we now need to actually fragment out the file for it to fit in the resultant image. So yeah, there’s … we haven’t encountered these limits right now. We’re working on the black box thing, we’re moving towards the reverse engineering stuff. We have some stuff reversed already. But this is an ongoing project, yeah.

Host: Okay. Thanks, Mark.

Scanlon: Thanks.

[applause]

End of Transcript