by Martino Jerian, CEO and Founder, Amped Software

In every branch of forensic science, we have to fight with the falsehoods introduced by the popular series à la CSI (hence the properly called CSI effect), but probably this belief is the strongest in the field of forensic image and video analysis. From endless zooming from satellite imagery, to enhancing the reflection of a reflection of a reflection, to identifying faces or fingerprints at an unbelievable pace, we very often have to explain, even to “the experts”, what is science and what is fiction.

This is complicated also by the fact that sometimes we are able to extract information from images and videos where at a first glance there is absolutely nothing visible. However, very often we can’t do anything to improve images that to that average person, don’t look that bad.

The New Google Algorithm

To make things worse, news headlines often contribute to the misinformation about these technologies.

Of course, I am referring to the recent news about Google’s work on Super Resolution.

- The Guardian: Real life CSI: Google’s new AI system unscrambles pixelated faces

- CNET: Google just made ‘zoom and enhance’ a reality — kinda

- Ars Technica: Google Brain super-resolution image tech makes “zoom, enhance!” a real thing

The actual research article by Google is available here.

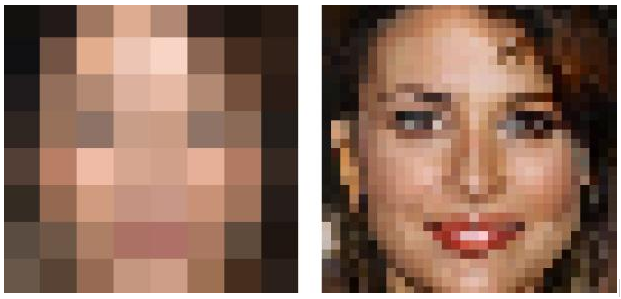

This is one example of the images provided in the article.

The results are amazing, but this system is not simply an image enhancement or restoration tool. It is creating new images based on a best guess, which may look similar but also completely different than the actual data originally captured.

What Is Forensic Science?

Now, let me give you some more background on what forensic science is and isn’t and how this relates to our case.

Forensic science, as the words say, is the use of science for legal matters. To properly speak about a scientific examination, we have to follow the three pillars of the scientific method: accuracy, repeatability and reproducibility.

If we are not working under these constraints, we are not doing scientific work.

- Accuracy means that the work must be correct, and as much as possible, free from errors or bias introduced by the tools, the methodology, the context of the investigation, or the examiner’s expectations. During the analysis of forensic evidence, it is very difficult to be completely objective, and confirmation bias by the examiner must especially be taken into account as much as possible.

- Repeatability means that the examiner must be able to repeat (even after some time) the same analysis and get the same results.

- Reproducibility means that another examiner – of relevant competence, following the work done and explained by the first, should be able to reproduce the same results.

If we consider digital images and video, there are countless papers describing very interesting approaches to image enhancement but are not suitable for forensics. They can be very good to enhance creative photography, but cannot be applied to evidence without destroying its value.

So, how can an algorithm fail to be acceptable for forensics for each of the points mentioned above?

Accuracy

We cannot use algorithms that introduce some bias, most often because they add new information which does not belong to the original image, such as adding new details. This is in contrast with proper enhancement or restoration techniques. While often used in an interchangeable manner, there is an important difference between image enhancement and restoration.

- Image enhancement is a kind of process used to improve the visual appeal of an image, enhancing or reducing some feature already present in the image (for example correcting the brightness).

- Image restoration is a kind of process where we try to understand the mathematical model which describes a specific defect and, inverting it, tries to restore an image as much as possible close to a hypothetical original without the defect (for example correcting a blurred image or lens distortion).

In both cases, in general, the process does not add new data to the image, but relies only on what is already there, just processing according to some predefined algorithm. For this reason, we will never be able to obtain a readable license plate from three white pixels, even if we receive this request very often. We can only show better what’s already in the image or video, we cannot – and must not – add new data into the evidence.

Repeatability

Another category of algorithms which are not suitable for forensics are those which are not repeatable, like those based on generating a random sequence of values to try. However, some of these algorithms properly give very similar (even if different) results in normal situations. So, they may be used with a pseudo-random approach. In laymen’s terms, computer are not actually able to generate random numbers, but only pseudo-random sequences. If we keep the so-called “seed” fixed, we can always reproduce the same sequence and thus always getting the same repeatable result.

Reproducibility

Finally, algorithms must be known and all of the involved parameters must be available. We must be able to describe the process with sufficient details to let a third-party person of relevant skills to reproduce the same results independently. So, a “super-secret-proprietary” algorithm is not suitable for forensic work.

Google’s Algorithm Vs. Forensic Science

How does the new Google algorithm fit into this framework? Not very well, it turns out. It does not tick the box on accuracy and without that, any further discussion is useless. Let’s understand why.

Google is not the first to try to do image enhancement via deep learning, but probably the one which got the most attention and the most interesting results. However, the concept is pretty simple: first of all, the system must be trained to know what a nice picture of a face looks like. Then, when we have a low quality picture, the system will try to create a new picture based on a best guess, according to the training dataset. Let me repeat again what I wrote at the beginning of the article: this system is not simply an image enhancement or restoration tool. It is creating new images based on a best guess, which may look similar but also completely different than the actual data originally captured. This algorithm is not enhancing pictures, it is creating them.

The new picture will be the result of a combination of the image under analysis with the training dataset (of course, external to the case). In any real case, the faces used for training the system will be different from the face you are trying to enhance and identify.

I’ve stated that the use of this algorithm is not good because the faces used for training the network will be different from that of the suspect. So, what if we use pictures of the suspect for performing the training? It is very likely that the output of the algorithms will create an image similar to that of the suspect since the program has been optimized to do so. And this will be the case even if the suspect is not involved and the actual person in the image under analysis is another one. The wrong use of this technology could convict an innocent person. And let’s not even start on statistics of different facial features and racial bias…

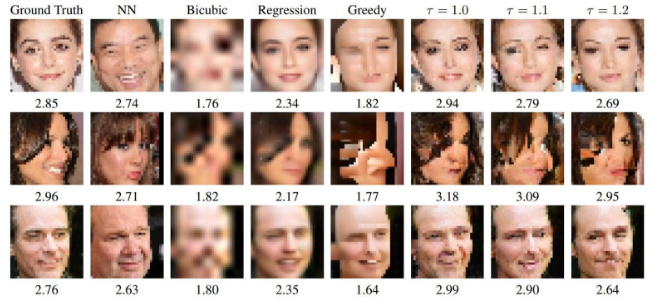

Let’s look at some examples now (from Google’s research article).

This figure from the article is very representative and interesting, as it compares different methods. Depending on the algorithms, the results may be better or worse, but in general you can notice two things:

- Much better quality, compared to the standard Bicubic

- A big difference in facial features between the Ground Truth and the results of the algorithms

For the sake of completeness, also the result of the Bicubic is not very faithful to the original but at least there are so little details that it will probably be useless for identification. While many of the others give plain wrong results. Maybe similar, but still different.

So, the question is this: is it better to have nothing or to have plain wrong evidence? It’s simply better to have no information at all than to have wrong or misleading information. Relying on fake details can easily exculpate a guilty person or convict an innocent one

Other Algorithms

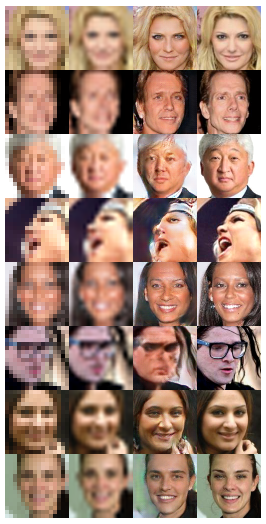

As mentioned, Google is not the first to try this, nor is the last. Some time ago, I reviewed a GitHub project called “srez”. From the project page:

Image super-resolution through deep learning. This project uses deep learning to upscale 16×16 images by a 4x factor. The resulting 64×64 images display sharp features that are plausible based on the dataset that was used to train the neural net.

Here’s a random, non cherry-picked, example of what this network can do. From left to right, the first column is the 16×16 input image, the second one is what you would get from a standard bicubic interpolation, the third is the output generated by the neural net, and on the right is the ground truth.

Even in this case, the results are visually awesome, but look at the faces in the third and fourth columns: even with this small thumbnail size, you will see that they are very different. The female in the last row even looks like a male in the reconstructed image. Imagine a case where you have to compare the features of the face in the third column, with a different picture of a suspect.

So, very similar considerations to those of the Google algorithm.

The Media

Coming back to the media coverage of the “new” algorithm, of course, all the above-mentioned articles try to juggle with the sensational headlines and the more technical savvy opinion, but they should make it more clear.

For example, in the article published in The Guardian: “Google’s neural networks have achieved the dream of CSI viewers everywhere: the company has revealed a new AI system capable of “enhancing” an eight-pixel square image, increasing the resolution 16-fold and effectively restoring lost data.”

This system is not “restoring” lost data! it is creating new data based on some set expectations. Lost data is lost forever!

Luckily at the end, it adds: “Of course, the system isn’t capable of magic. While it can make educated guesses based on knowledge of what faces generally look like, it sometimes won’t have enough information to redraw a face that is recognisably the same person as the original image. And sometimes it just plain screws up, creating inhuman monstrosities. Nontheless, the system works well enough to fool people around 10% of the time, for images of faces.”

Also, the article published in Ars Technica starts with some CSI promises, but at the end states: “It’s important to note that the computed super-resolution image is not real. The added details—known as “hallucinations” in image processing jargon—are a best guess and nothing more. This raises some intriguing issues, especially in the realms of surveillance and forensics. This technique could take a blurry image of a suspect and add more detail—zoom! enhance!—but it wouldn’t actually be a real photo of the suspect. It might very well help the police find the suspect, though.”

What About Investigative Use?

In the end, most of the articles agree that the method shouldn’t be used for forensics, but some add that it could still be a valuable investigative tool. I don’t agree at all. This kind of algorithm should be avoided in all phases of investigations.

While most of the experts I’ve discussed this with agree with me, I still had discussions with some of them that think the results of these algorithms can be useful for investigations as an identikit or facial composite.

I want to go against every claim:

Claim 1: it may be your only resource as an investigative tool when you don’t have anything.

Reply: you have something, even if very little, in your original image. Any additional information that this method gives you is just fake information which might make you take a wrong turn in your investigation. Again, trust the little information that you have. It is better to have little, but good information, than a lot of wrong information.

Claim 2: it may be useful for the investigative phase and then drop it as evidence.

Reply: this assumes the expert is careful in using the proper tool depending on the context and that he is aware of the context. This is often not true, since technicians and analysts are frequently requested to process images not knowing how these images will be used. Are we sure that if this tool is available, it will not be abused, that the user will have the proper knowledge about when to use it and when not to? What happens when an image goes out of the lab and into the wild? Even if you know that the enhancement is needed only for investigative purposes, what do you do if this then turns into key “evidence”?

There is one final comment against the “use it only in certain situations”. Forensic labs (should) have strict procedures for avoiding contamination, and following proper standard operating procedures (“SOPs”) and workflows. If you need to do some chemical analysis and you need to have a sterile environment, you will not let people come into your lab without covering their shoes and following proper cleaning procedures, as this not only affects the current evidence but also the credibility and the reliability of the whole lab, contaminating also other evidence that could be around at the same time. So, why not be so careful with images and videos as well? These are not just “photos” and “movies”, they are evidence just like biological traces with DNA or data from mobile devices. Everything possible must be done to carefully collect, preserve and analyze them.

Conclusions

In this article I have explained why, in the context of the scientific method, many algorithms are not suitable for forensic use on images and videos. The media is often leaning towards sensational headlines which make people (and often experts) have the wrong expectations. Improvements in technologies, deep learning, and image processing have been huge in the latest years, but in a forensic setting, just because something looks good, it does not mean it is good.

About Martino Jerian

Martino Jerian, CEO and Founder, Amped Software (www.ampedsoftware.com)

Martino Jerian founded Amped Software in 2008, with the mission to create revolutionary products that could become the standard tools for working with images and videos during investigations and for forensic applications. Amped Software products are used by the world’s leading top forensic labs, law enforcement, government, military, and security organizations. Martino Jerian has worked as a forensic video and image analyst and during his career he has evaluated hundreds of cases.

Have you seen the 1948 movie “Call Northside 777”? James Steward plays a reporter who at the end of the movie enlarges a photograph to the point that he can read the date on a newspaper being held by a boy in the background of the photo. It may very well be the first example of the CSI effect.