Keith: Hey, this is Keith Lockhart from Oxygen Forensics training. I have Amanda Mahan with me from our team. Welcome to a series of webinars about making the world a safer place.

So our first in the series is using technology to address an illicit image investigation, and all the different problems that we typically run into and what we can do with Oxygen Forensic Detective to tackle those problems. Because Amanda and I are going to be your guides and conversation pieces through this webinar and all the subsequent ones, it makes sense that we take a second to introduce ourselves.

So my name is Keith Lockhart, if I haven’t met you in the world. I came to Oxygen about a year ago to grow training and build some new fun things around the new release of Detective and the old version. And I have a previous computer forensic history, which is kind of an interesting conversation point because I meet more and more mobile forensic folks that don’t have a computer forensic history. And that’s a crazy assumption I’m trying to break. But it also lends a lot of different thought processes about how I go about teaching technology and opinions I have about technology. And sometimes it’s great and sometimes I smash my head through the wall.

So I spent the previous 16 years at AccessData running training with FTK, which is a tool that’s based on a database. And I mention that because it’s super lucky for me that Oxygen Forensic Detective is based on a database, which means we can really tackle some of those problems that you’ll see us outline in this webinar and others in a really analytical, efficient fashion that does us a world of justice.

And I happen to have a ton of thought around that being a database technology as we go. So I wanted to give that brief introduction, and Amanda’s on here with me as well. I want her to tell us a little bit about herself. And you know, I have a law enforcement background prior to the computer technology world, and did some instruction at a place called the National White Collar Crime Center. So it’s been a long, interesting run of, hey, let’s teach technology from an investigative mindset to help get jobs done in a better, more efficient, maybe much more cost-efficient way than some of the methods that have been out there before. So I bring that.

Amanda, if you don’t mind, tell us your background and what you were doing before you got here and what you’re doing now.

Amanda: Awesome. Okay. My name’s Amanda Mahan. I’ve been with Oxygen as a contract instructor for almost two years now. And in, let’s say, September, what was it? September of last year, 2019, I started full time with Oxygen. Previously I was a digital forensic analyst with a county sheriff’s office in Alabama. I just happened to be the first one there, and began the computer forensic and cell phone forensic lab there.

And shortly after I started there, I joined in with law enforcement ICAC task force. So that was a huge step for our county and working joint with the city, we were able to accomplish a lot. The reason I’m with Oxygen I believe began right then. The only software we could afford was Oxygen. And it just so happened it was the most robust, in my opinion. And I use it all of the time. So that is where I gained my passion for the software.

Keith: No, no, no. I mean, so to be clear, Amanda, the real reason you’re here is because you and I got together last April to go through some Detective training. What happened?

Amanda: True, true, true.

So it was my second class teaching for Oxygen at headquarters, and back in the back row is my new boss, Keith. I’d never met him before and he was the most interruptive student I think I’ve ever had. It is absolutely true. But at the end of the day the discussion turned to, hey, what do you think about working here full time? Let’s rebuild some of this material. Let’s make this robust, let’s make this work. And so eventually we did.

So instead of having to examine child exploitation material, I now have this huge opportunity to teach thousands of people how to do what I used to do, using Detective. And if I have that opportunity to spread the word, to teach, to make the world a better place while not having to view these images myself, I am on board. So I was really excited to join the team.

Keith: You know, we’ve been doing this together for probably full time, about six months or so, Amanda. And I think I did an interview one time where I said, this fills my love tank as an educator. You just get this [indecipherable] to try to help people do a better job. And if you lived through something that was bad, make it so somebody else doesn’t have to. Yeah. So that’s great. I’m super glad we got together. I’m super glad you came on with Oxygen and we’re making some cool stuff.

So anyway, if you’re gonna spend all this time with us listening to these webinars, it’s only fair that we at least introduce ourselves. So when we do make the wisecracks, you kind of have a background on why. So anyway, our first one is the illicit image investigation.

And you know, Amanda and I were talking about this and we came up with a set of problems that we’re going to try to address: some of the big ones that she ran into routinely and that we hear out in the industry, especially as you kind of figure out what Detective can do and people start thinking, Oh, but can you make it do this, and can you make it do that?

And I would say one of my most common responses to questions like that in class, because it’s a database technology, is, Oh yeah. You know, 95% of the time, my answer is, it’s a database. We can probably figure out how to do that.

So the first one on the list is tackling large data sets. And I’ll introduce the conversation just to say that if you’re a Detective user and you haven’t made the leap to version 12 over version 11, I mean, it’s been a good six months: October, November, December, January, February, March, quite a few months.

At this point, you’ve got to do this. And primarily I would say, with relevance to this bullet, the 32-bit architecture of Detective in the version 11 world just does not handle big data like the 64-bit architecture of version 12 does. I mean, it’s night and day. And the fact that we can do concurrent extractions and imports, I mean, that’s great, but only if you have the backbone to support that.

I mean, for instance, four or five GrayKey dumps at a time would kill 11, and 12 just kind of sits back and says, what else you got? So it’s that type of analogy I want to put in front of us when we talk about tackling large data. And Amanda, I know you have the tool and want to show a couple of things like that to really exemplify it. But I want to give that background only because, Amanda, you might remember my first experience with the job was getting somewhere [indecipherable]. That’s not cool though. So that stuck in my head. I’m glad this is one of our top problems to address because I always have that in the back of my mind. Let’s make someone not have that bad day. So perfect. Amanda, I’m going to just kill this presentation so you can take over the screen and we will start like that.

So, okay, Amanda, here’s your screen. Have at it.

Amanda: All right. So one of my biggest problems when I walked into any house on a search warrant was, Oh my gosh, I’ve got a half a dozen phones or more, a couple of computers. I’ve got all kinds of maybe some memory cards, thumb drives. What am I going to do with all this data?

So most of my investigations always ended up being on the cell phone. I want to show you that ingesting all of this data, doing all of these extractions concurrently, is not as difficult as you think. So I just want to show you right now how many devices I have set up to extract. You can see I have an iPhone, a Samsung, a memory card and a Huawei phone. And I can do this all at once, which is a huge time saver. And as soon as I’m finished, I can pull all of this data straight over into Detective to be parsed, and then I can do my analysis.

Keith: So I would consider you’re showing off Amanda, but you’re not, I mean that’s actually… I’ve watched you do this and just say, you know what? I don’t have time for this. I’m gonna get them all going and I’ll just minimize them out of the way while I still work, and when they’re done, I’m going to turn around and import them all at the same time while I’m still doing other stuff. So I would accuse you, but it’s actually really valid what you have on the screen right there. So yeah. Okay, go on.
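As a rough illustration of why starting every extraction at once saves time, here is a minimal Python sketch. It does not reflect how Detective itself schedules work; `extract` is a hypothetical stand-in that just sleeps, and the device names are placeholders.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def extract(device: str) -> str:
    """Hypothetical stand-in for a long-running device extraction."""
    time.sleep(0.1)  # pretend this is the slow part
    return f"{device}: extraction complete"

devices = ["iPhone", "Samsung", "memory card", "fourth phone"]

started = time.perf_counter()
# Start every job at once instead of waiting for each one in turn.
with ThreadPoolExecutor(max_workers=len(devices)) as pool:
    results = list(pool.map(extract, devices))  # map() keeps the input order
elapsed = time.perf_counter() - started

for line in results:
    print(line)
print(f"elapsed: {elapsed:.2f}s for {len(devices)} jobs")
```

Run one after another, the four simulated jobs would take about four times as long as a single job; run together, the total is close to the length of the slowest one.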

Amanda: Cool. All right. So once we’ve finished with all of our extractions on all the devices and we know that’s probably where most of our problem solving is anyway, doing cell phone extractions. So now that I’ve shown you that you can do all of these extractions at the same time, let me show you some more information that will help you, and it’s in our configuration settings.

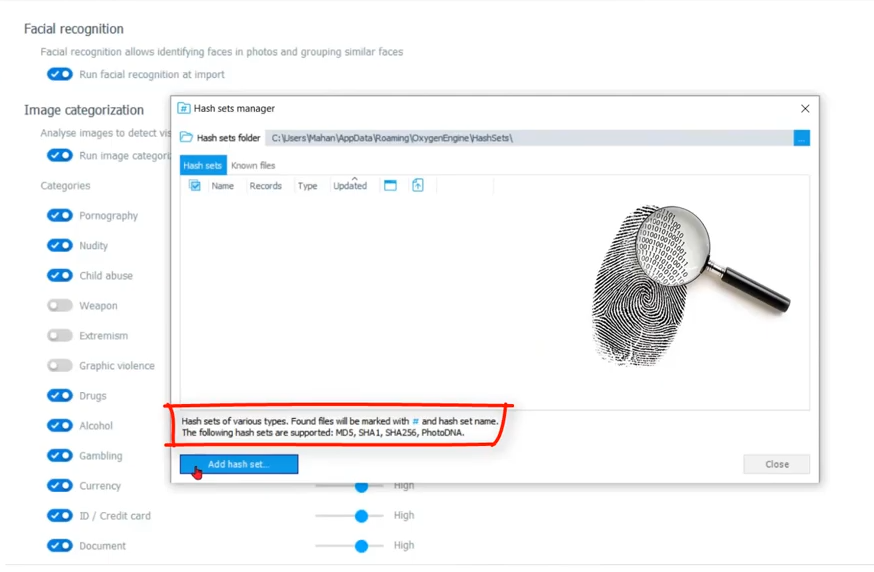

If we navigate to our menu on our home screen and we go to our options, we can see in our advanced analytics that we already have something called image categorization, which can come into play big time. It can help cull your data; it will flag all of those images that you’re looking for straight away.

Keith: So Amanda, let me just stop for a second because I want to make sure I’m tracking with what you’re saying as well. So from a large data standpoint, we get happy because we can extract a ton of devices concurrently and then pull them back into the tool while we’re still working, all at the same time, and in much greater fashion than we could in the 32-bit world versus the 64-bit one. Okay.

So Amanda, before you leave this configuration screen, I just want to make sure I’m tracking and everybody listening is as well. So we’re taking the image categorization algorithms, and rather than you doing all the, you know, let me sit down for an hour and go look at everything that would be pornography or nudity or drugs or alcohol, let the tool do that, right? Let those algorithms go do something repetitive.

And I mean, menial is probably not the right word, but your subjective thought process can be applied much more effectively in other ways in the case. Let the tool do this, even to include facial recognition or categorization at some point: who are these people, and they’re in pictures with these people, and I’ve been trying to put a face and a name to that one right there. So yeah, I mean, you’re not even importing your data yet, but you’re configuring what it looks like for the tool to get busy and help, you know, make things available for you and save your time. Okay.

Amanda: Remember how much data I said you’re having to bring in? I mean, let the tool do some of that work for you. And not only are you running image categorization, you have control over what you’re going to run. Let’s say that you don’t need to look for weapons, extremism, or graphic violence, but you want to make your thresholds for pornography, nudity, and child abuse lower so it pulls in more images for you. Then you can make the determination whether or not this is truly a child abuse image or nudity or pornography.
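The effect of lowering a threshold can be sketched in a few lines of Python. This is only an illustration of the idea, not Detective’s categorization engine: the `Score` record, the category names, and the confidence values are all made up for the example.

```python
from dataclasses import dataclass

@dataclass
class Score:
    file_name: str
    category: str
    confidence: float  # 0.0 to 1.0, as a hypothetical classifier might report it

def flag_images(scores, thresholds):
    """Keep scores that meet their category's threshold.

    Categories missing from `thresholds` are switched off entirely.
    """
    return [
        s for s in scores
        if s.category in thresholds and s.confidence >= thresholds[s.category]
    ]

scores = [
    Score("IMG_0001.jpg", "nudity", 0.42),
    Score("IMG_0002.jpg", "nudity", 0.91),
    Score("IMG_0003.jpg", "weapons", 0.88),
]

strict = flag_images(scores, {"nudity": 0.80})  # high bar, fewer hits
wide = flag_images(scores, {"nudity": 0.40})    # lower bar, wider net

print([s.file_name for s in strict])
print([s.file_name for s in wide])
```

Leaving "weapons" out of the threshold table is the equivalent of unticking a category that is irrelevant to the case, while the lower "nudity" threshold pulls in the borderline image for a human to judge.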

Keith: So you’re casting a wider net, you know, so you can apply your own subjectivity to it if you have to, and maybe not even casting a net for some of those things that are irrelevant at the moment for this particular investigation. That’s super good stuff to know.

Amanda: Right. And you can always wait to run your image categorization until after you have imported your device. What we’re looking at now are your configurations upon import. So you’ve done your extraction, you’re going to pull this extraction into Detective, and what we’re doing right here is telling it, I want you to go ahead and start working for me. Find all these images that I’m interested in seeing, the ones that I need to analyze.

Keith: Yeah, literally. So I mean, this segues right into the next one, though we absolutely need this kind of technology for the larger data sets. Our next big bullet to tackle was finding things of interest, and we’re setting up the tool to get busy on our behalf. So yeah, please continue.

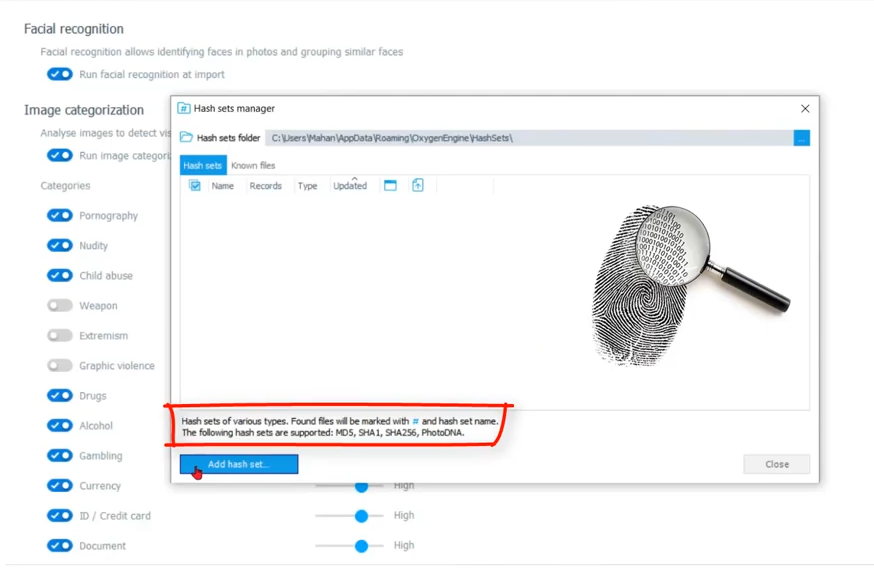

Amanda: Okay, so that’s not all that we can do though. Let’s navigate back to our options screen, and look here: at the bottom you’ve got hash sets and keywords that you can run against this data as you import it. Let’s have a look at our hash sets at this point. If I participate in Project VIC, this is where I can put that hash set to run against the data as it imports. So you can also find, yeah…

Keith: Let me just clarify for everyone just to [ensure] we’re on the same page. If you put your mouse down on that little text at the bottom with MD5, SHA-1, right up there above that button. If you’re watching this and don’t know what a hash is, that’s not an uncommon issue. Let’s take a set of data, run a math algorithm against it and get a result. And you’ll hear this referenced as the digital fingerprint for a file, because if you change the slightest bit of data in that file, the result changes.

It’s about content. It has nothing to do with the name, nothing to do with dates, creation, modified, whatever. If you change the content and run the same math, your resulting value is different. Completely different. So much so that it’s like the digital fingerprint, and some of those algorithms are MD5, SHA-1, SHA-256, and the PhotoDNA algorithms that are right there in that little text content.

And you know, back in my day there were HashKeeper sets of adjudicated illicit images where people would say, Hey listen, here’s the value. It’s not the file. I’m not going to make you look at the file. But if you get the same math using the same algorithm I did, ding, ding, ding, good for you. So I just want to make sure that everybody’s on that same page of what a hash value is for us and how it’s significant, especially when we’re trying to configure the tool to do more work for us. So there you go. I’ll leave it at that.
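The "digital fingerprint" idea is easy to try with Python’s standard `hashlib` module. This is a minimal sketch using made-up byte strings in place of real files; it covers only the cryptographic algorithms named above (PhotoDNA is a separate, proprietary image-matching technology and is not part of `hashlib`).

```python
import hashlib

ALGORITHMS = ("md5", "sha1", "sha256")

def fingerprints(content: bytes) -> dict:
    """Hash the file content only; the name and timestamps are never an input."""
    return {name: hashlib.new(name, content).hexdigest() for name in ALGORITHMS}

original = b"example image bytes"
renamed_copy = bytes(original)    # same content, imagine it under another file name
altered = original[:-1] + b"!"    # a single byte changed

same = fingerprints(original) == fingerprints(renamed_copy)
different = all(
    fingerprints(original)[name] != fingerprints(altered)[name]
    for name in ALGORITHMS
)

print("identical content gives identical hashes:", same)
print("one changed byte changes every hash:", different)
```

Because only the bytes are hashed, an examiner can share the value instead of the file, and anyone who computes the same value with the same algorithm has found the same content.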

Amanda: All right, so at this point, if we want to import a hash set, this is where we can do this, and it doesn’t have to be Project VIC. Again, it can be any hash set that you are concerned with, and it can be and [indecipherable] you can see the different types that you can import here. So let’s go ahead and import a Project VIC hash set, and I just have a small one here to demo for you.

Now that we’ve imported it, we can set it to run against every device that comes into Detective: every device that we’ve either imported or that we’ve extracted and are pulling over into Detective. And we also have the ability to run known files, which are already in here for you. These hash sets are already listed. You can run them against any Android or iOS device and basically flag those files as not relevant, just to make your reports less cumbersome.

Keith: So Amanda, on the one side of the coin, in the example we’re using Project VIC data, and that’s stuff I want to know about right away, right? Show me that stuff. Bring it to my attention. We certainly want to be alerted to these right away. By comparison, those other ones you were just showing are the known file hashes, which were, what, iOS and Android, I think. Yeah, look, if we can eliminate those from consideration because they are legitimately the operating system files, hey, more power to us. Again, we’re using the tool for deductive reasoning: removing things that aren’t relevant allows more things that are relevant to bubble up more effectively. Right. Okay, Amanda?
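The two-sided use of hash sets described here, alerting on one list and ignoring another, can be sketched as a simple triage function. This is an assumption-laden illustration, not Detective’s matching logic: the file names, contents, and the choice of MD5 are all placeholders.

```python
import hashlib

def md5_hex(content: bytes) -> str:
    return hashlib.md5(content).hexdigest()

def triage(files, alert_hashes, known_hashes):
    """Sort files into alert / ignore / review buckets by hash-set membership."""
    buckets = {"alert": [], "ignore": [], "review": []}
    for name, content in files.items():
        digest = md5_hex(content)
        if digest in alert_hashes:      # e.g. an imported set of flagged values
            buckets["alert"].append(name)
        elif digest in known_hashes:    # e.g. known operating system files
            buckets["ignore"].append(name)
        else:                           # everything else still needs a human
            buckets["review"].append(name)
    return buckets

# Placeholder data standing in for an extraction and two hash sets.
files = {
    "DCIM/IMG_0001.jpg": b"flagged content",
    "System/framework.bin": b"stock os file",
    "Downloads/unknown.jpg": b"something new",
}
alert_hashes = {md5_hex(b"flagged content")}
known_hashes = {md5_hex(b"stock os file")}

buckets = triage(files, alert_hashes, known_hashes)
print(buckets)
```

Checking the alert set first is a deliberate choice in this sketch: a file that somehow appears in both lists should still be surfaced rather than silently dropped.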

So I think from a hash perspective, you know, we’ve got the algorithms working one side of the fence for us, and on the hash side, hopefully we’re importing values that can get rid of stuff we don’t care about, so the tool can more effectively show us the stuff we do care about.

But before we leave hashing, I want to jump back to something. Can you hit that add hash set button down there? I just want to jump back here because of things you’ve said to me in the past. Like, Oh, get me out of this. I’m so sick of my brain having to look through things like this. But here, right? You know those two options right there. I think you called it one time saving your brain. What are those doing? If you’ve got those right there, what happens?

Amanda: So if I don’t want to see the images that Project VIC has told me are bad images, I have the option to turn that off in my interface. And I also have the option right here to make sure the thumbnails don’t show up in a report. So I’m not printing any of that material.

Keith: Yeah. Hey, heaven forbid you distributed [indecipherable]. I mean, I’m thinking about this from the reviewer standpoint. I mean, it’s super common. You know the technical folks are doing one thing and they’re turning data back around to people to help with review cause there’s so much of it and I think the last thing I really want to do is subject them to things like that if they don’t want to see it. I mean, but that’s some of the value of the hash is look, if this is flagged as a match, you don’t have to look at it. Right.

So if by nature we have a thumbnail view for pictures, that’s just a great way to look at them. But you know what, if they’re these, don’t put the thumbnail up there. I think there’s intrinsic value on both sides of the sanity check with that, for a reviewer and for someone like you who was doing the work all the time. So I just wanted to touch on those before we left hashing. I appreciate it.

Amanda: Exactly. It is the brain saver, is what this is. If these have already been verified as CSAM, child sexual abuse material, then why have to look at it again when you’ve already got the verification that that’s exactly what it is? And remember how we talked about a hash being like DNA for an image. As long as that hash value is the same, it’s going to be the same image on the other end. Absolutely.

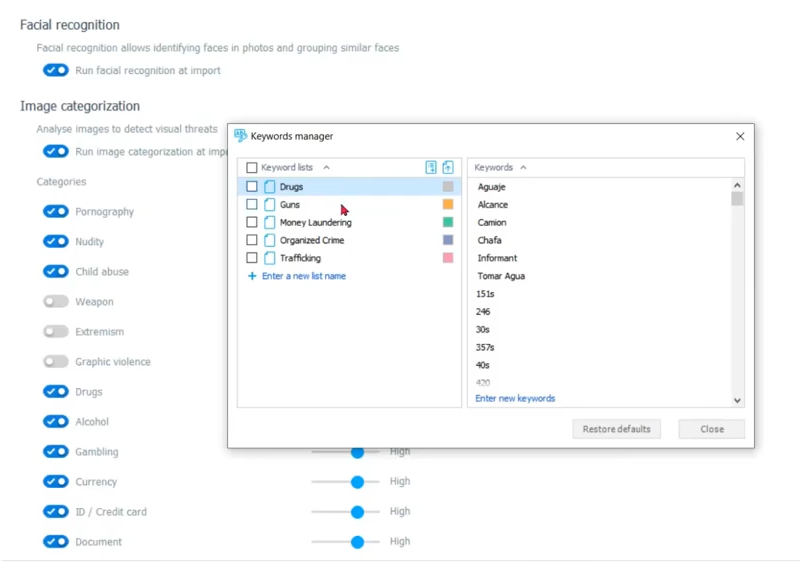

So another tool that Detective has, let’s go back to our configurations, is the keywords. There are already some keyword lists built in for you, but here we can actually enter a new list or we can import a list, depending on whatever kind of case you’re working. You probably already have your own keyword lists. Import them here and let this work for you.

Keith: I mean, and we’re talking about text files, right Amanda? Just lists of text.

Amanda: That’s right. Just a text file. And you can build your own keyword list right here inside of Detective. You can name it and add or take away keywords, just as you can take away keywords from the lists that are already populated for you, or enter new ones.

Keith: Yeah. You know, my days were largely narc related from a dope perspective and I miss that tremendously. So much fun. But I could remember other instances importing keyword lists that were, you know, slang, teen slang, you know, the numbers and the symbols that mean, Oh, call me back in five minutes. My mom’s watching over my shoulder, or something like that.

Amanda: Yeah. And that’s always changing.

Keith: Exactly. And you know, again, I’m all about putting up the red flags, the more red flags I can go investigate as soon as my data’s done. I mean, the faster I get to work. Right. And if I can, if I understand what you’re saying here, we can pull this list in just like we had the hash values and hopefully see things waiting for us on the other side when our data imports complete.

Amanda: That’s right. It’s just another tool in our toolbox to do the work for us before we have to get over and start the analytics.
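A keyword list as a plain text file, one term per line, can be applied to message text in a few lines of Python. This is a minimal sketch of the general idea, not Detective’s keyword engine; the terms and messages below are neutral placeholders.

```python
def load_keywords(text: str) -> list:
    """Parse a plain-text keyword list: one term per line, blanks ignored."""
    return [line.strip().lower() for line in text.splitlines() if line.strip()]

def find_hits(messages, keywords):
    """Return (message index, matched keywords) for every message with a hit."""
    hits = []
    for index, message in enumerate(messages):
        lowered = message.lower()
        matched = [word for word in keywords if word in lowered]
        if matched:
            hits.append((index, matched))
    return hits

# Placeholder list, standing in for the contents of an imported .txt file.
keyword_file = """
codeword1

CodeWord2
"""

messages = [
    "see you at practice",
    "CODEWORD1, call me back in five",
    "got the codeword2 and codeword1 thing",
]

keywords = load_keywords(keyword_file)
hits = find_hits(messages, keywords)
print(hits)
```

The match here is a case-insensitive substring test, which is the simplest possible choice; real slang lists often need whole-word or pattern matching to avoid false hits.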

Keith: Perfect. Okay. So during that simultaneous import, we’re using algorithmic work and some human interaction, by providing known hash values and keywords, to help us go find these things before we even get access to our case. Right? Before that data is even imported, we’ve got a lot of work being done on our behalf.

Our next big problem set that we’re trying to address is the distribution chain, or something more along the lines of, well, once we get in there, who made these things? Who are they giving them to, if they are, and who has them if they didn’t make them, or who’s possessing them? Right? And with that, I think we’d like to say, you know what? Let’s go look at the case. Let’s see what happens with all these things in place and start finding where they are. And once we find them, what can we find out about them?

Once we find these images, what can we find out about them relative to the analytics of who’s made them where, how they get here, where are they gone? All that stuff. Right? Okay.

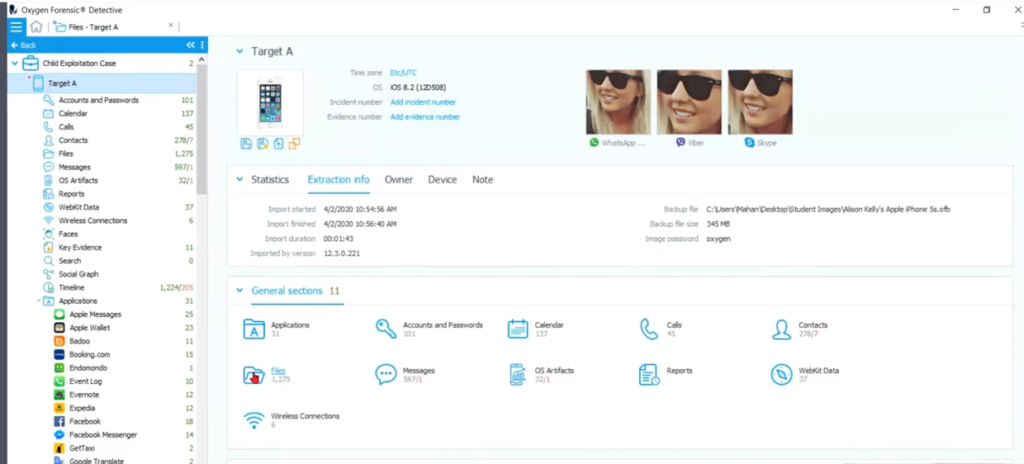

So Amanda, we’re inside Detective now with the idea that we’ve laid out the upfront work that we’re hoping Detective did for us. You know, we had image categorization, Project VIC values, other hash values, maybe keyword lists. Anything we can do to get a step up.

And that segues to our next set of bullets, which is kind of a combination, you know, creation, distribution, possession, who made this, who might they be giving these things to or receiving them from? Are they making it, do they have it and are they actually sending? So I say we just start on the right hand side of the lowest hanging fruit. Does this extraction have anything bad in it? And you know, based on all the things we outlined in the last 20 minutes, answer that question for us, Amanda, based on some of the things we should or should not see after our import.

Amanda: All right, so let’s say we’ve got our target A’s device and we want to navigate into that. The first thing we want to see is, do they possess any of those images that we’re looking for? So what I would do here is, as soon as I have target A’s device parsed and pulled over into Detective, I would immediately go to the file section and look for all of the images there. And remember, we have done image categorization already, so we should see the results of that. We’ve pulled in that Project VIC JSON, and now we can see how it compares to the information that we have in this device. So I want to go to the file section and see exactly what hits I get compared to the Project VIC JSON that I imported.

Keith: Hold on right there, Amanda. When you say you’re going to the file section, and I can see colorful things there, you’re in the general sections area, I guess, of the tool, on this file section. So I just want to make sure that when we talk about this, look, if we’re trying to see the files themselves in our extraction, guess what? Go to the files section.

But Amanda, you and I joke about this, but I know you’re going to find it hard to believe. If you want to go see the calls from this extraction, where would we go? And I emphatically throw that out there, the calls section, right? Same thing for messages or any one of these. So if it’s your first time watching Detective or you’re trying to figure out a way to get the data more quickly, that’s what these sections are designed to do is scoot you along right where you’re trying to go.

And in this case, I mean, it’s like I’m clamoring to see results from our upfront work, from our categorizations, our Project VIC hash comparisons or anything else like that, so we’re jumping right to the file section, where, as we will see, there are all kinds of file breakdowns and categorizations waiting to show good or bad or indifferent. So when she says she’s going to a section, I want to make sure we’re all talking about the same definition of section, especially when it comes to files. So sorry to interrupt you, Amanda. I just wanna lay that out for everybody.

Amanda: That’s okay. Yeah, and in the file section we can filter straight to any camera shots or images. And that’s really what I’m interested in. So here we go. Immediately I see that I have hits from my Project VIC JSON, and I see what category they are under the Project VIC category. Now this is in the center of the screen, the meat of your information, the grid section. If you look in our column one, which is on the left-hand side of your screen, we’re looking at target A’s device.

And if I click on that device, I’m looking at all files within the section in the second column, which is your center column. Down below, on the bottom of this section, if I click on anything with a duplicate, it’s going to show me where all of these duplicates are located. So I’m all about the eye candy.

Keith: Amanda, by duplicate you, you clicked on that one and said it was a duplicate. Why?

Amanda: Well, if you look at the top of your screen in the center, you can see that we have a dropdown menu right here and we’re looking at all files. We can look at files just with duplicates or without duplicates or we can show de-duplicated files. Right now I’m looking at all files, but I can still see which files have duplicates and how many. Some have two and some have three.
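The duplicate counts in that view come down to grouping files by hash value. Here is a minimal sketch of that grouping in Python, assuming SHA-256 and using made-up paths and contents; it is an illustration of the concept, not how Detective stores or displays duplicates.

```python
import hashlib
from collections import defaultdict

def group_duplicates(files):
    """Map each content hash to the paths sharing it, keeping groups of 2+."""
    groups = defaultdict(list)
    for path, content in files.items():
        groups[hashlib.sha256(content).hexdigest()].append(path)
    return {digest: sorted(paths) for digest, paths in groups.items() if len(paths) > 1}

# Placeholder extraction: the same picture bytes appear in three places.
files = {
    "DCIM/IMG_0001.jpg": b"picture A",
    "Messages/attachment_17.jpg": b"picture A",
    "Cache/thumb_0001.jpg": b"picture A",
    "DCIM/IMG_0002.jpg": b"picture B",
}

duplicates = group_duplicates(files)
for digest, paths in duplicates.items():
    print(f"{len(paths)} copies: {paths}")
```

A "de-duplicated" view would keep one path per hash, while a "with duplicates" view is exactly the groups this function returns.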

Keith: So anything with that little icon, which would probably have a number bigger than one, that’s indicating a duplicate for us. Okay.

Amanda: Right, right. And right next to it, this symbol indicates that this is an embedded file. So this could be a thumbnail. So let’s look at one of these images that Project VIC says is child abuse material. And of course this is a mock JSON, because we’re not going to use a real one in this demo. So as you can see, we’re going to assume that this is an illicit image. Cool. All right, so this is going to be our illicit image.

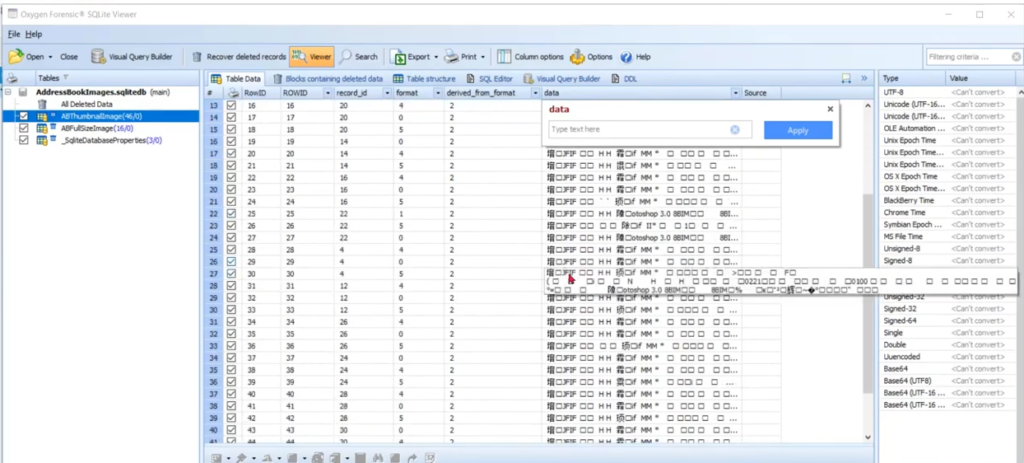

And if we look down at the bottom of the screen in your center column, you can see that there are three files that match. How do we know that they match? Well, they have the same hash value. So we have a match, and if we scroll back to the left, remember that this indicates it’s an embedded file. So let’s click on that embedded file and look in the column on your right. This is column three here, and we can see exactly where this file is coming from. Where is it embedded?

And we can see here in the full path that this embedded image is located in the address book images SQLite database. To make this a little easier for us, let’s go to the database tab and locate that database. And I see it right here. If we double-click on this, we’re going to open up our SQLite viewer, and in here we can dig down and find that image that we were looking for.

Keith: So Amanda, so you just — just because the magic is happening before my eyes — you just double click on that database, pull it open in the viewer, which you know, as far as one of my favorite things is like, wow, I don’t have to go get another one cause this was already integrated and I just fired it up right from within the file section.

And I remember you showing me this before. You’re going to go look at the thumbnail image table inside here. And I know that one’s in there, right? Yeah, I’m pretty sure it was there. And I don’t mean to drive, but I get excited, because when I go there, if I look at the data column over there, which is like the sixth column over, you know, I’m the computer forensic background guy, right? And if I see JFIF in my world, I’m like, pictures!

So not only are we digging down inside this database with the viewer, but I’m looking at those thinking to myself, you know what? I want to see what these are, and look at that. This drives me crazy. I mean good crazy. What I think you just did, Amanda, was say, Hey listen, I found a Project VIC responsive file, and here it is.
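Spotting "JFIF" in a data column works because JPEG files start with the bytes `FF D8 FF`, and JFIF-flavored JPEGs carry the text `JFIF` at byte offset 6. The sketch below scans a BLOB column for that signature using Python’s built-in `sqlite3`. The table and column names are hypothetical (they are not the real address book schema), and the sample blob is a hand-built header, not a viewable picture.

```python
import sqlite3

JPEG_START = b"\xff\xd8\xff"  # start-of-image marker plus the next marker byte

def is_jfif(blob) -> bool:
    """True if the blob begins like a JPEG and carries the JFIF identifier."""
    return (
        isinstance(blob, bytes)
        and blob.startswith(JPEG_START)
        and blob[6:10] == b"JFIF"
    )

# In-memory database with a made-up table, standing in for a real extraction.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE thumbnails (id INTEGER PRIMARY KEY, data BLOB)")

fake_jfif = b"\xff\xd8\xff\xe0\x00\x10JFIF\x00" + b"\x00" * 16
conn.executemany(
    "INSERT INTO thumbnails (data) VALUES (?)",
    [(fake_jfif,), (b"not an image",)],
)

jfif_rows = [
    row_id
    for row_id, data in conn.execute("SELECT id, data FROM thumbnails")
    if is_jfif(data)
]
print("rows holding JFIF image data:", jfif_rows)
conn.close()
```

JPEGs written by cameras often use an Exif header rather than JFIF, so a broader scan would test only for the three-byte start marker.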

Matter of fact, there are several versions of it, which is going to take us to the next branch up in the low-hanging-fruit tree, from possession to maybe distribution or creation. If there are multiple copies, that might be multiple good charges for us. But you found one that was embedded inside something else, which happens to be a database, and now you’re inside the database going to find the actual embedded version of it. I think it’s at the bottom; last time you showed me, it was further down the list. But the point is that whole thing we just outlined, how you got us from, wow, I didn’t know anything, to using some of my upfront technology to give me red flag hits when I finally got into the case, and in five minutes finding an embedded version of one of those red flag hits inside a database. Hmm. You can start tapping your fingers together, saying, excellent. You’ve got a lot of stuff in front of you here.

Amanda: Right. And once you know where you’re looking, continue looking in that same file path and that same database. You may find something that maybe Project VIC hasn’t hit on for you yet. So keep digging.

Keith: So I think that, sorry, go ahead.

Amanda: No, go ahead.

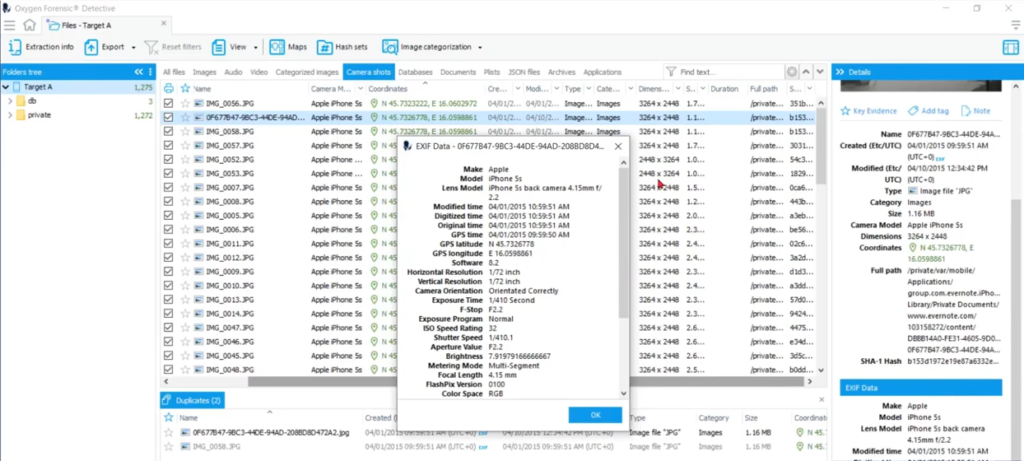

Keith: So yeah, keep digging. And I would say, because I’ve already started climbing the ladder based on that previous conversation, go back to the file section, Amanda. I saw a tab back there that I want to make sure we talk about, because if I go to our third bullet there, if I go left to [indecipherable], I’m just going to throw out my premise that if I’m taking pictures with the camera on my phone, more than likely those pictures are going to have the exif information about the camera on my phone attached to the picture. That exif information, and yeah, that tab right there, camera shots. I mean, what is the camera shots thing doing for us, Amanda? It has its own tab. What’s the point of that?

Amanda: Well, this tells me that this likely came from this camera that we’re looking at. And look at all this exif data. We have geo coordinates, our creation date, modified date, the file path.

Keith: You know what? Go down to the bottom of column three, Amanda. And you’re gonna have to scroll down, I think, just because of our screen real estate. Yeah. Click show the full information on that stuff.

Because you know, and I think of the whole sexting case, if I can find a lot of copies of the pictures all over the community. But then I find the one that has the exif data, I’m blaming that person for having taken the picture because all that stuff gets stripped out when you start sending it around. But yeah, if I can say the original picture has this, maybe tie it back to the device, you know, I’m cooking with gas that day or something. You know, my day is going to be a little better if I can start finding more corroborative information like that.

So I just want to make sure we touched on that tab because, again, it’s pre-categorization by the tool for us based on exif information, which probably says it came from the camera. And of course, looking at the time, we can put somebody somewhere with geo coordinates. You know, we’ll come back some other day and start putting people on the map with regard to this. But you can click right on one of those and be on the map in a heartbeat. If I take us out there, though, we’ll never come back. So yeah, I wanted to make sure we touched on that.

Amanda: It’s like finding a piece of gold when you find a coordinate inside of one of these images. And here you can find the make and the model of the device that took this image. That’s very important because, like you said, with social media now, almost every application that you’re going to use is going to strip that exif data from the image. So if it’s an incoming photo, it’s likely not going to have any of this information. This is telling me this is an image taken with this device. Next step: is it an outgoing image? Maybe we have distribution then. So that’s something we really need to pay attention to.
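Whether a JPEG still carries exif data can be checked without any third-party library, because that data lives in an APP1 segment near the start of the file whose payload begins with `Exif` followed by two zero bytes. The sketch below is a simplified segment walker under stated assumptions: it only reports presence or absence, does not decode make, model, or coordinates, and the two sample byte strings are hand-built headers rather than real photos.

```python
import struct

def has_exif_segment(jpeg: bytes) -> bool:
    """Walk the JPEG header segments and report whether an Exif APP1 exists.

    Simplified: assumes every segment before the image data has a length field.
    """
    if not jpeg.startswith(b"\xff\xd8"):          # must begin with start-of-image
        return False
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:                     # not at a marker, give up
            return False
        marker = jpeg[pos + 1]
        if marker == 0xDA:                        # start of scan: headers are over
            return False
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        payload = jpeg[pos + 4:pos + 2 + length]  # length counts its own 2 bytes
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return True
        pos += 2 + length
    return False

# Hand-built headers: one as a camera might write it, one as an app might re-save it.
camera_original = (
    b"\xff\xd8" + b"\xff\xe1" + struct.pack(">H", 12) + b"Exif\x00\x00" + b"TIFF"
)
stripped_copy = (
    b"\xff\xd8" + b"\xff\xe0" + struct.pack(">H", 16) + b"JFIF\x00" + b"\x00" * 9
    + b"\xff\xda\x00\x02"
)

print("camera original has exif:", has_exif_segment(camera_original))
print("stripped copy has exif:", has_exif_segment(stripped_copy))
```

Presence of the segment supports, but does not by itself prove, that a given device took the picture; the make, model, and timestamps inside it still have to be tied back to the device.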

Keith: Oh, you know where I want to go for that. Hold on one second. Okay, Amanda. So we’re in our third bullet and we just hit some great ideas from the low-hanging-fruit possession standpoint, especially looking at duplicates and embedded things, and who knows where you’re going to find the things you really want in all of your extracted data. And we even talked about some creation stuff, looking at exif, and you know, having to hold me back from going out to the map because I see coordinates and I just have to click on them. So take my focus away from that. Hey, what’s that right there, by the way? That little purple tag right there.

Amanda: So this was put here by our image categorization and this tag actually lets us know that this may be associated with that.

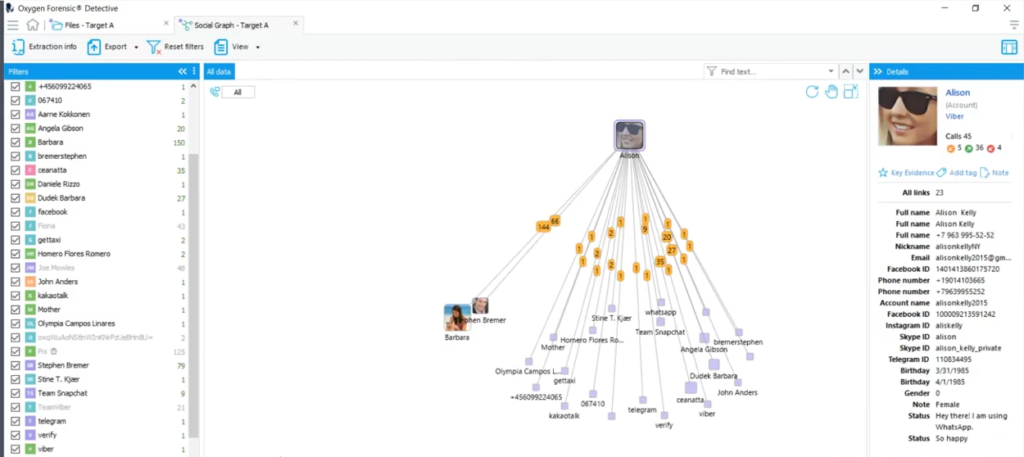

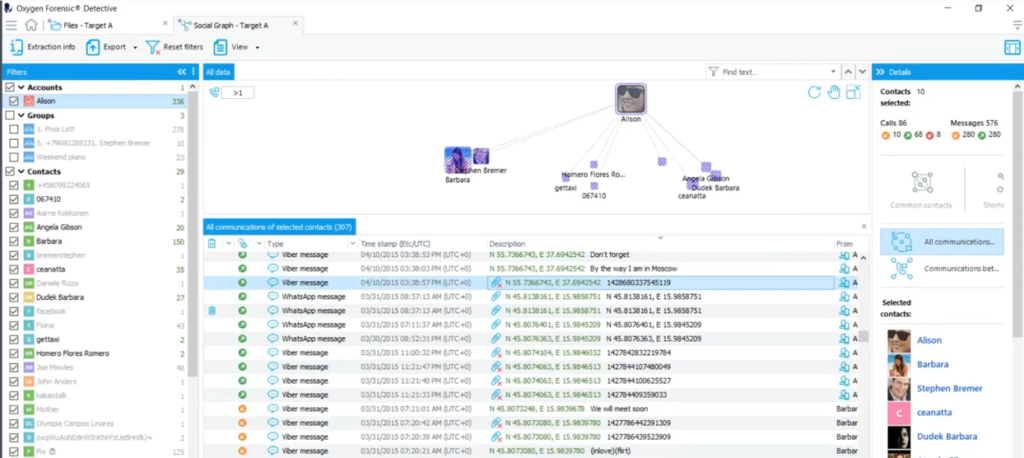

Keith: [Inaudible]. Sorry, that was just a great thing to point out while we’re looking right there. Yeah. So let’s go look at the social graph. And you know, if you’re not familiar with the social graph as a viewer, this is one of the analytic tools available to a user that is, I don’t know, the evolution of taking a picture, putting it on the wall and tying a string from it to another picture on the wall to try to show some type of association between this person and that person.

So in this scenario, as we’re going to pretend, what we have available to us is account-based information with contact-based information and the source of the communication between the accounts and contacts, and even the type, whether it’s a phone call or a message. And this is the whole, again, the old-school put pictures together and tie strings between them, or, you know, the Analyst’s Notebook view of who’s talking to who and how.

Really, Amanda, just to understand that, go down to the bottom of your column one and turn off the messages. We only want to look at calls between everybody in the social graph. Oh, interesting. Or, you know, turn the messages on and turn off calls, where we just want to see the messages. I mean, this looks like more messages than anything in this device.

It looks like more messages and this is because of that database, you know, capability where we can just tick things off and on and really filter through it. So let’s pretend I’m not worried about groups right now even though in this kind of investigation I might be all about groups, but just to help clean things up as we’re looking at things, I’m going to turn off the groups with Amanda doing just that click box right there.

And now if we pull out the Alison large account with the sunglasses in the middle of that group over there and just, you know, do something like that. So that’s when you’re looking at that with the human eye that’s pleasing to say, Oh, this one person must be talking to all those other people and this is the truth thing. And I want to do a couple of things to help us visualize, Hmm, maybe this person is talking to these people and there is the chance that we might find that this person is sending some of those graphics or those images that we are so interested in right now to other people.

So the first thing I’m going to do to help clean up a little bit of our chaos is in the contact section over there in the first column, where Amanda has kind of expanded. There are numbers there that indicate the number of communications between the account at the top and the contacts in that list. And look at some of the ones with the number one, like, I don’t know, KakaoTalk or Telegram or Viber or WhatsApp. Are those really your contacts? I mean, are you best friends with Viber or WhatsApp as a person or a contact, Amanda? So let’s go up to the view dropdown in our toolbar up there and make sure we’re looking at the communications pane. You know, it’s off by default so we have more real estate for our graph, essentially. But okay, here are the actual communications.

Amanda, if you go double click on WhatsApp in column one there, just as a fun trick to narrow down just to the WhatsApp. Oh, there’s the one WhatsApp message between our target and WhatsApp. And the description is your WhatsApp code is blah blah. Okay. That looks like one of those, you know, verification code messages. Who cares about that one, is what my point is there. So Amanda, go up and turn all the contacts back on again. Perfect. Thank you.

And let’s look at, let’s double click on, I don’t know, Facebook right in the middle, ’cause I’m best friends with Facebook, my favorite contact in my entire life, and I look at it and 600 is a conference. Okay, I’m just going to go out on a limb and say a ton of those one-offs, you know, might be things I don’t want to see.

So turn all the contacts back on, Amanda, and we’re going to use that little filter at the top that says ‘all’. And, you know, by the number of communications is what’s going on here. So if you bump that up from greater than zero to greater than one, it grays all those ones out, kind of filters them out of our view. So in essence, we’re just down to the real people, the real contacts. I mean, Gett Taxi, there’s two. Apparently that’s the app for going and getting taxis, and obviously having communications with them, but, you know, we can go through them all. But this helps us organize some of the chaos.
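The "greater than one" filter Keith describes amounts to counting communications per contact and hiding the one-offs. A minimal Python sketch of that idea follows, assuming the communications have been exported as simple (contact, type) pairs. The function name `real_contacts`, the record layout, and the sample names are hypothetical and are not part of the tool.

```python
from collections import Counter


def real_contacts(communications, threshold=1):
    """Keep only contacts with more than `threshold` communications.

    communications -- iterable of (contact, kind) pairs, where kind is
                      something like "message" or "call"
    """
    counts = Counter(contact for contact, _kind in communications)
    return {contact: n for contact, n in counts.items() if n > threshold}


# Hypothetical sample data: two service accounts that each sent a single
# verification-style message, and two contacts with repeated traffic.
sample = [
    ("Service A", "message"),
    ("Service B", "message"),
    ("Taxi App", "message"),
    ("Taxi App", "message"),
    ("Contact X", "message"),
    ("Contact X", "call"),
    ("Contact X", "message"),
]

kept = real_contacts(sample)
print(kept)
```

Raising `threshold` narrows the view further. As in the graph, a service account with repeated traffic (the taxi app here) still survives the filter, so the result still needs a human look.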

So Amanda, while you’re here, sweep everybody. Just left-click on your mouse and grab the whole... yes, yes, yes. And as that happens, that selects everybody and all of their messages down below.

Now I’m trying to find ones like right there. Stop, or go past and then go back. See how it makes me drive? Like, oh, stop right there. Look at that one. Look at the Viber message toward the bottom, and you’re laughing ’cause you know I’m all excited.

Not only is there a paperclip, which is generally indicative of what? An attachment, an image. Not only is it there, but it’s got an X on it, which generally means what? It might be deleted and recovered, and it’s sitting right there with coordinate information. So before I go absolutely nuts on that, and I won’t, I promise, just go up, Amanda, and sort on the description column for me, because that is my goldmine: Viber, WhatsApp, a bunch of messages with attachments that may or may not have been deleted that I can go look at later, and now I’m hitting on that.

Hmm. Did some of these pictures maybe leave this device, or did maybe other ones arrive this way? I mean, yeah, they’re on the phone. That’s great. How’d they get there? Or where did they go if they were taken here? These are the things we’re trying to figure out when we have the data at our fingertips.

What do these designators mean? What does this social graph do for us? How does it indicate communication between people? How do we even know these things on here are valuable?

Maybe I can show that the same picture was sent out seven different times, and that’s seven new charges for me to play with. Anyway, Amanda, I just want to take that minute and go through some of the social graph capability so we could finish off that third bullet on possession, distribution, creation and, you know, several variations of those in between. What else would you add to that, Amanda?
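One way to picture the "same picture sent out seven different times" idea is to hash each outgoing attachment and group the sends by hash. The Python sketch below assumes messages have been exported as dictionaries with `direction`, `recipient`, and `attachment` (raw bytes or `None`) keys. Those field names and the function `group_sends_by_hash` are hypothetical, and this is not a description of how Detective matches files.

```python
import hashlib
from collections import defaultdict


def group_sends_by_hash(messages):
    """Map the SHA-256 of each outgoing attachment to the list of recipients."""
    groups = defaultdict(list)
    for msg in messages:
        data = msg.get("attachment")
        if msg.get("direction") != "outgoing" or data is None:
            continue  # only outgoing messages that carry an attachment
        digest = hashlib.sha256(data).hexdigest()
        groups[digest].append(msg["recipient"])
    return dict(groups)


# Hypothetical sample: the same bytes sent to two recipients, one
# different file, and one incoming message that should be ignored.
picture = b"example image bytes"
messages = [
    {"direction": "outgoing", "recipient": "Contact X", "attachment": picture},
    {"direction": "outgoing", "recipient": "Contact Y", "attachment": picture},
    {"direction": "outgoing", "recipient": "Contact Z", "attachment": b"other file"},
    {"direction": "incoming", "recipient": "Target", "attachment": picture},
    {"direction": "outgoing", "recipient": "Contact X", "attachment": None},
]

for digest, recipients in group_sends_by_hash(messages).items():
    print(digest[:12], len(recipients), recipients)
```

Exact hashing only matches byte-identical files. An app that recompresses or strips metadata on send produces a different hash, so a miss here does not rule out distribution.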

Amanda: Well, let’s not forget that Detective has a pretty good system of maps set up, so if we were interested to see if one of these geo coordinates is somewhere around our victim, then we would definitely follow up on these coordinates just by clicking on one, which would take us out to a map, which I hope we’re going to get to show them later, in another one.

Keith: Click on it. Can’t take it. Click on one of them. Yeah, because you’re segueing perfectly into the next bullet, which is inter-agency corroboration, and that bullet is the way it is because, you know, we’re going to play the part of the agency who goes to the next county and says, listen, we got this guy, or this girl, we got this target. I’ll be fair to everyone. We got this target, and it just so happens they say something like, yeah, we were going to ask you the same thing. Here’s these things on a place or a map in our county. Got anything like it? And I see you’ve clicked on one coordinate and taken it out to a largely zoomed-in satellite view on a map, and wow, I just want to go nuts there, but I won’t. We’ll come back to that.

I think we’ll pause at part one right here. And when we come back for part two, we’ll figure out how to start comparing between this map and that map and this device and that device, and see how that changes our communication patterns or maybe our distribution patterns, or matches on, hey, this person has this, and so does that person over there. You know, so we start our conversation with how do we even get to this stuff, whether it’s one device or many devices. And then once we find it, how do we place culpability or exculpability around it? And then once we’ve done that, how do we give it to somebody? I mean, we’ll work together with other people as our corroboration part, but how do we then report on all of this and make it make sense to whoever it is that matters in our world? Okay. Amanda, what else would you close this out with before we come back for part two later?

Amanda: I would say that it’s also easy to take data from other agencies and import it right here. For example, if you have a CDR, we can overlay that on the information that we already have from your target A’s device.

Keith: Amanda, you’re supposed to give them information that lets us get out of the video today, and now I just want to go stay in the video. Nice. Now. Perfect. You’re obviously setting us up for next time as well. Okay, everybody, listen, thanks for watching. This is part one in what we consider to be an ongoing, long-term series of whatever kind of fun we can get into in the tool to help you guys use it better day to day. So I would say thanks, Amanda, for the time helping out with our thing here, and everybody keep on learning. We will see you next time.