By Marco Fontani, Forensics Director at Amped Software

It is increasingly common nowadays that an investigation involves imagery from Social Media Platforms (SMPs), such as Facebook, Instagram, Twitter, etc., or from messaging apps such as Messenger and WhatsApp. Indeed, the marriage of digital images, smartphones, and social media platforms made it so that billions of images are shared daily (6.9 billion on WhatsApp alone, and 1.3 billion on Instagram according to Photutorial [1]).

Sometimes, images are acquired from social media profiles as part of the investigation, in which case their SMP of provenance is known (or rather, the last SMP that hosted the image is known; the image could have been elsewhere before).

In some other cases, you may be facing a bunch of pictures and you have literally no idea where they come from: are they original? Are they from SMPs? What device took them?

Moreover, it is becoming more and more clear even to the general public that images found on the web and SMPs are not always trustworthy. Is there a way we can check the authenticity of shared images? How are they different from the ones never sent to any SMP?

In this article, we’ll try to answer some of these questions and show you how Amped Authenticate can help investigate images from SMPs.

What Happens When You Upload an Image to a Social Media Platform

We have all witnessed a steady increase in the quality of images captured by smartphones. The first iPhone was released 15 years ago, but it really feels like a century has passed since then. Initially, smartphones could capture 2-megapixel images with their single camera, while today’s devices have broken the 100-megapixel barrier and rarely come with fewer than three cameras.

While these ultra-sharp pictures are very nice to see, they are quite large in file size and cannot be conveniently shared on SMPs. For this reason, the vast majority of SMPs will reduce the size of images as part of the uploading process. While every platform has its own rules [2], the most common operations include:

1. Downscaling images to a fixed resolution, usually below 2 megapixels;

2. Removing all or most metadata, sometimes adding new fields;

3. Re-compressing images to the JPEG format, usually with average quality;

4. Renaming files according to some SMP-specific pattern.

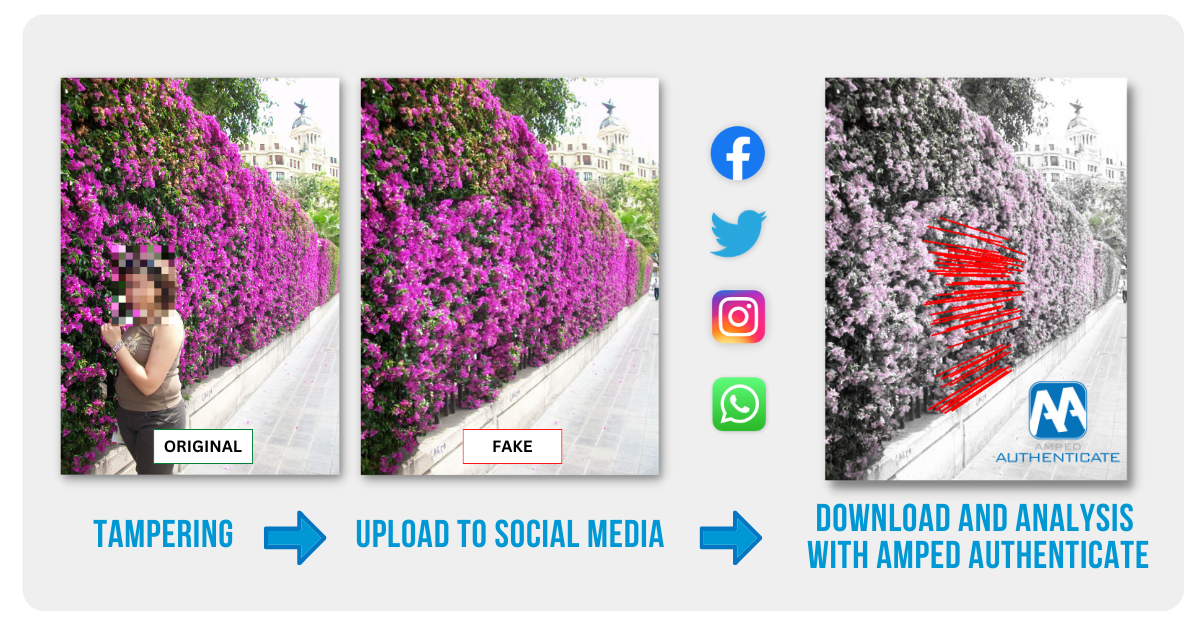

While these operations seem innocent and beneficial to the normal user (they make sharing a picture faster and less data-consuming), they act as a “counter-forensic” or “laundering” process when it comes to detecting manipulations. Many image forgery detection algorithms that are highly effective on full-sized manipulated images become much less reliable when applied to social media images, just because of the downscale-and-recompress process [3].

In terms of signal processing, indeed, downscaling means decimation or interpolation, and compression means quantization of values: all these terms suggest that the produced image has less information than the original, and most of the remaining information has been “re-computed” from the original pixels.
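To make this loss of information concrete, here is a minimal Python sketch on a toy grayscale grid. The 2x2 averaging and the uniform quantization of pixel values are simplifying assumptions made for illustration: real platforms use their own resampling filters, and JPEG quantizes DCT coefficients rather than pixels. The function names are hypothetical.

```python
from typing import List

Image = List[List[int]]  # toy grayscale image, one integer per pixel


def downscale_2x(img: Image) -> Image:
    """Halve width and height by averaging each 2x2 block (integer average)."""
    out = []
    for r in range(0, len(img) - 1, 2):
        row = []
        for c in range(0, len(img[0]) - 1, 2):
            block_sum = img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]
            row.append(block_sum // 4)
        out.append(row)
    return out


def quantize(img: Image, step: int) -> Image:
    """Snap every value to the nearest multiple of `step` (lossy)."""
    return [[round(v / step) * step for v in row] for row in img]


original = [
    [10, 12, 200, 202],
    [14, 16, 204, 206],
    [50, 52, 90, 92],
    [54, 56, 94, 96],
]
# Same picture, but the pixels of the top-left 2x2 block have been rearranged.
tampered = [
    [16, 10, 200, 202],
    [12, 14, 204, 206],
    [50, 52, 90, 92],
    [54, 56, 94, 96],
]

small_original = downscale_2x(original)        # [[13, 203], [53, 93]]
small_tampered = downscale_2x(tampered)        # identical: the local change is gone
compressed = quantize(small_original, 10)      # [[10, 200], [50, 90]]
```

Two different inputs end up as exactly the same small image, and quantization then collapses the surviving values further. Any trace that lived in the fine pixel detail of the top-left block is no longer recoverable from the output.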

With all of this in mind, it should be quite clear that a decent image authenticity analysis cannot ignore whether the investigated image comes from an SMP or not. We are thus left with two open questions:

1. How to identify images that come from an SMP?

2. How to analyze images that come from an SMP, that is, what kind of analysis can still be done on them?

How to identify images that come from an SMP

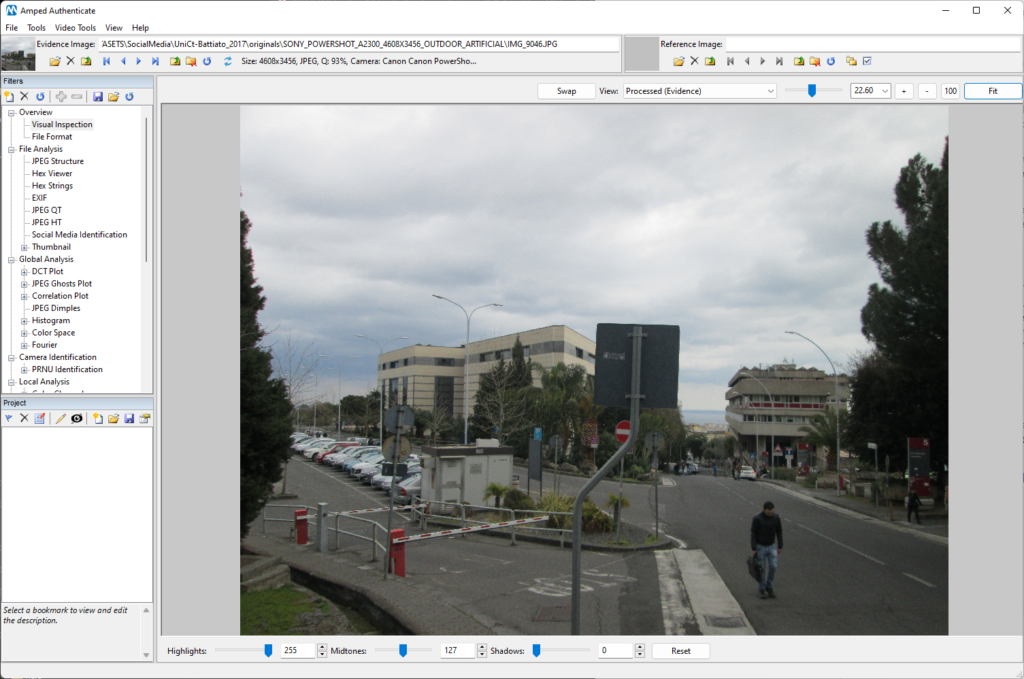

We already mentioned that uploading to an SMP causes lots of changes to a digital image. Let’s have a look at it with a practical example. We’ll use one image from the public dataset made available by the University of Catania and presented in a scientific paper [2]. Here’s what the original image looks like once loaded in Amped Authenticate.

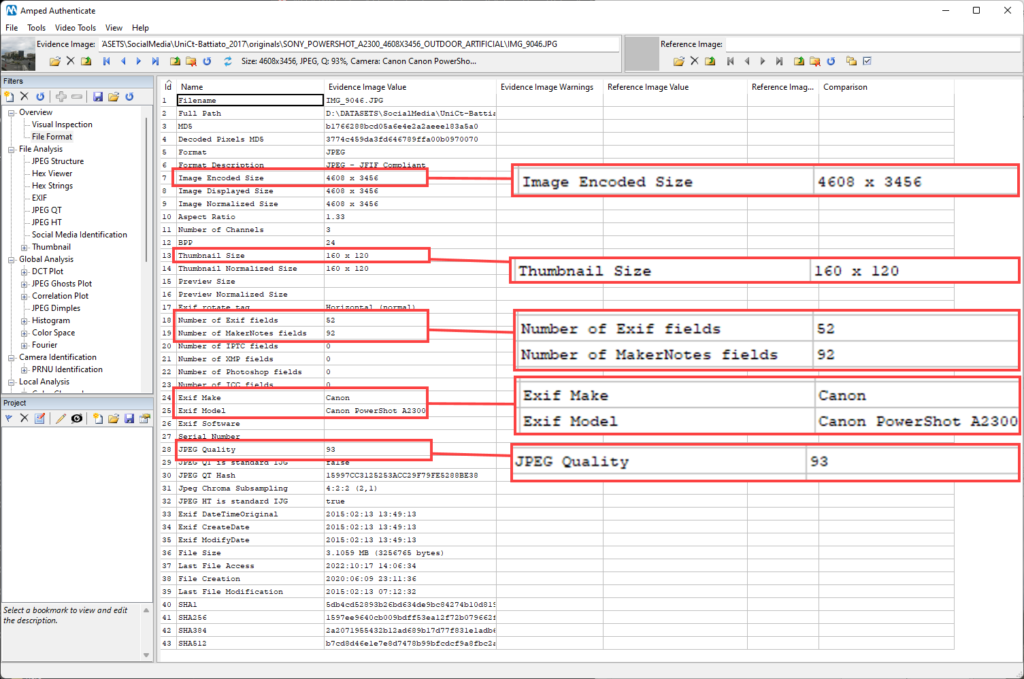

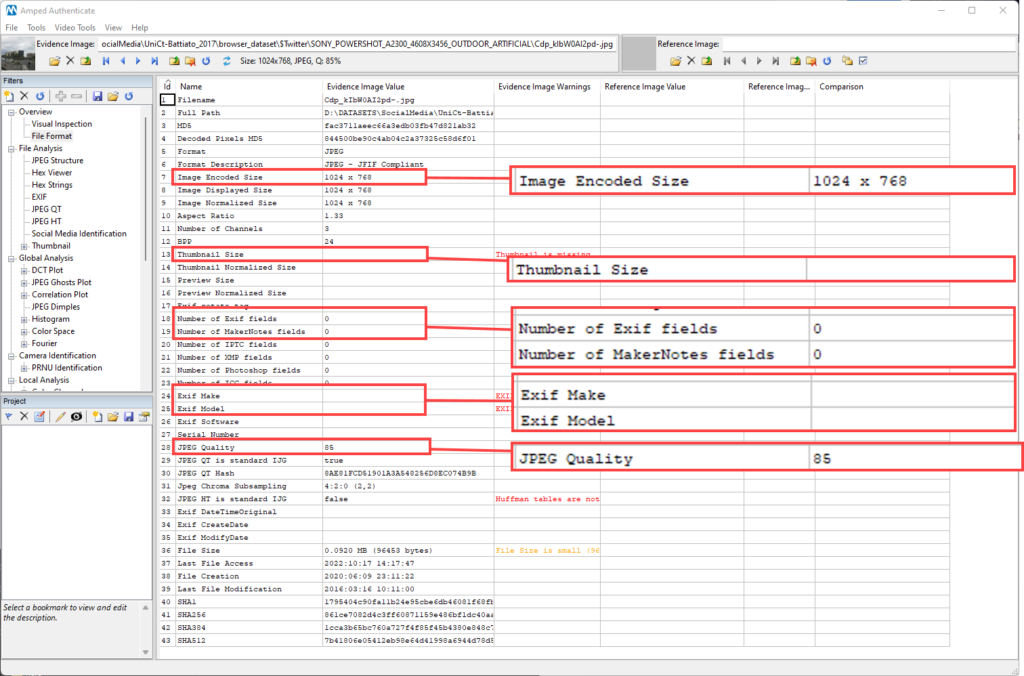

And here is what Authenticate’s File Format filter shows us (we’ve magnified some relevant parameters):

As we can see, the original image has a pixel resolution of 4608 x 3456, it contains a thumbnail picture and a load of Exif and MakerNotes metadata, including information about the make and model of the camera, and it is saved at a rather high JPEG quality (93/100).

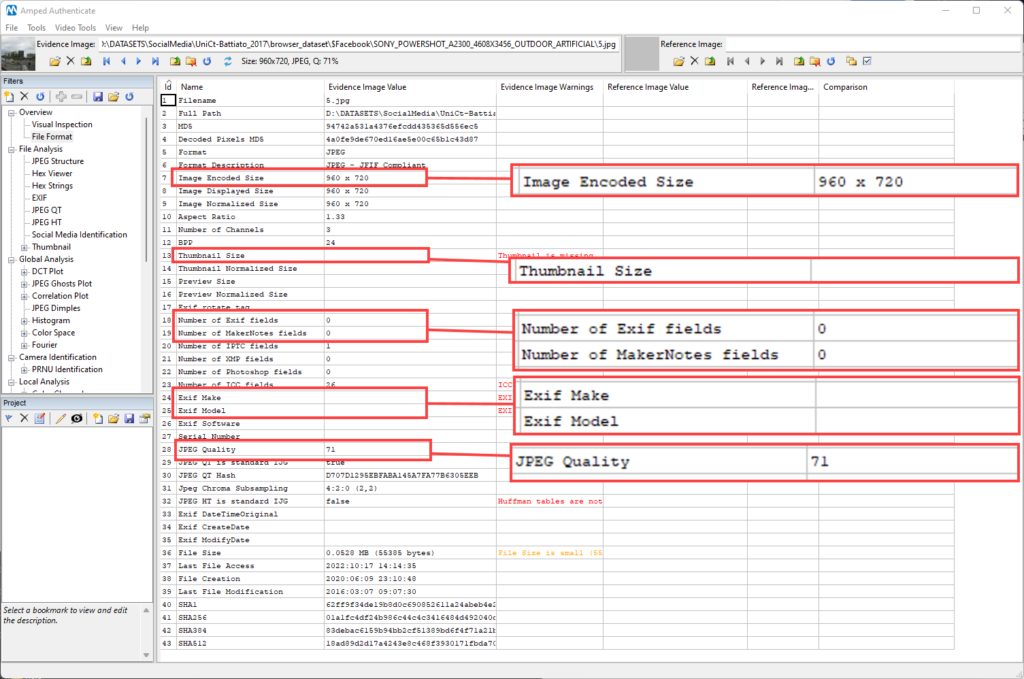

Now, let’s see what happens when the image is uploaded to and then downloaded from Facebook (the dataset dates to 2017, so a few things may have changed in the meantime).

As expected, the image has been downscaled, the original metadata and the thumbnail have been removed, and the JPEG quality has been lowered to 71.

Let’s try again, this time uploading to Twitter:

We see a similar behavior: most metadata is gone, and the image has been scaled and re-compressed. However, the size and compression quality are different from those applied by Facebook.

It often happens in image forensics that a processing operation acts as a nuisance on one side but also as a new source of evidence on the other side. In our case, the fact that each SMP processes the image makes the analysis harder; however, it also introduces new processing traces that could be used to understand that the image has “traveled through” an SMP. And since different platforms tend to use slightly different processing parameters [2, 3], we may be able to identify one or a few specific SMPs that are compatible with the current properties of an image.

Amped Authenticate’s Social Media Identification filter builds upon this idea. It implements the approach proposed by Giudice et al. in a scientific work [2] to provide a nearest-neighbor classifier that extracts a set of features from the analyzed image and compares them against a dataset of thousands of pictures downloaded from SMPs; when a match is found, the corresponding SMP is marked as compatible with the questioned image.

The considered image properties include pixel resolution, the amount of metadata, some values of the JPEG quantization tables, and more. Besides that, Amped Authenticate analyzes the filename of the picture to detect whether it matches a known SMP-specific naming pattern [2]. Currently, the database covers the following SMPs: Facebook, Flickr, Google+, Instagram, Messenger, Telegram, Tinypic, Tumblr, Twitter, and WhatsApp.
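The general idea of matching a questioned image against reference signatures can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Authenticate’s implementation nor the exact method of [2]: the feature set, the scaling factors, the distance threshold, the platform names, and every number in `REFERENCE_DB` are made up for the example.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical feature vector:
# (long side px, short side px, metadata entries, three quantization table values)
Features = Tuple[float, ...]

# Made-up reference signatures; a real database would hold thousands of them.
REFERENCE_DB: List[Tuple[str, Features]] = [
    ("platform_a", (2048, 1536, 4, 5, 3, 3)),
    ("platform_a", (960, 720, 4, 5, 3, 3)),
    ("platform_b", (1200, 900, 2, 3, 2, 2)),
    ("platform_c", (1600, 1200, 0, 6, 4, 4)),
]

# Assumed per-feature scales so that no single feature dominates the distance.
SCALES: Features = (4000.0, 4000.0, 50.0, 16.0, 16.0, 16.0)


def distance(a: Features, b: Features) -> float:
    """Scaled Euclidean distance between two feature vectors."""
    return math.sqrt(sum(((x - y) / s) ** 2 for x, y, s in zip(a, b, SCALES)))


def compatible_platforms(
    features: Features,
    db: List[Tuple[str, Features]] = REFERENCE_DB,
    max_distance: float = 0.05,
) -> Dict[str, float]:
    """Return every platform with a reference close enough, and its best distance."""
    best: Dict[str, float] = {}
    for platform, reference in db:
        d = distance(features, reference)
        if d <= max_distance and d < best.get(platform, math.inf):
            best[platform] = d
    return best


questioned = (2048, 1536, 4, 5, 3, 3)        # looks like a platform_a download
camera_original = (4608, 3456, 60, 2, 1, 1)  # full resolution, rich metadata

print(compatible_platforms(questioned))       # {'platform_a': 0.0}
print(compatible_platforms(camera_original))  # {}
```

Returning a dictionary rather than a single label reflects the fact that an image may be compatible with more than one platform, or with none at all.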

Let’s see the Social Media Identification filter in action! This is a picture saved from Facebook of my wife’s favorite shop:

The Social Media Identification filter sits under the File Analysis category. A simple click over it is enough to run the analysis, which is amazingly fast.

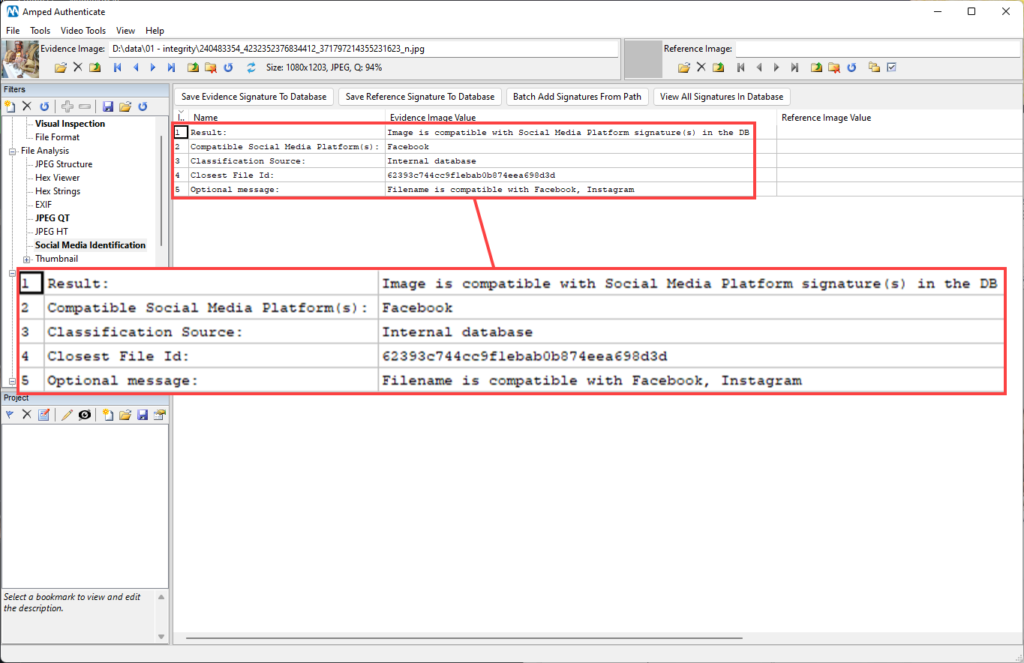

As expected, the filter has identified that the image is compatible with an SMP, specifically Facebook. The Classification Source tells you that the matching image belonged to Authenticate’s internal database. You can also create your own database of signatures by using the buttons above the table; it’s a very simple procedure, fully explained in the software manual. These signatures are kept separate from Authenticate’s own, and if a match is found among them, the reported source will be the User Database.

The Closest File ID is an identifier that points to the matching file in Authenticate’s database. These files are not shipped together with the software, but they are kept safe on Amped servers; single files can be provided upon request for reproducibility purposes.

Finally, the Optional Message may contain information about whether the filename of the questioned image matches one or more of the known naming patterns. In our case, we see that the image filename matches both Facebook’s and Instagram’s naming patterns.

It is not uncommon for an image to match more than one SMP. This can happen because SMPs may use similar processing parameters, which also depend on the app version that was used to upload the picture, whether the upload came from a computer or a smartphone, whether the phone runs Android or iOS… you see, there’s a wide variety of settings!

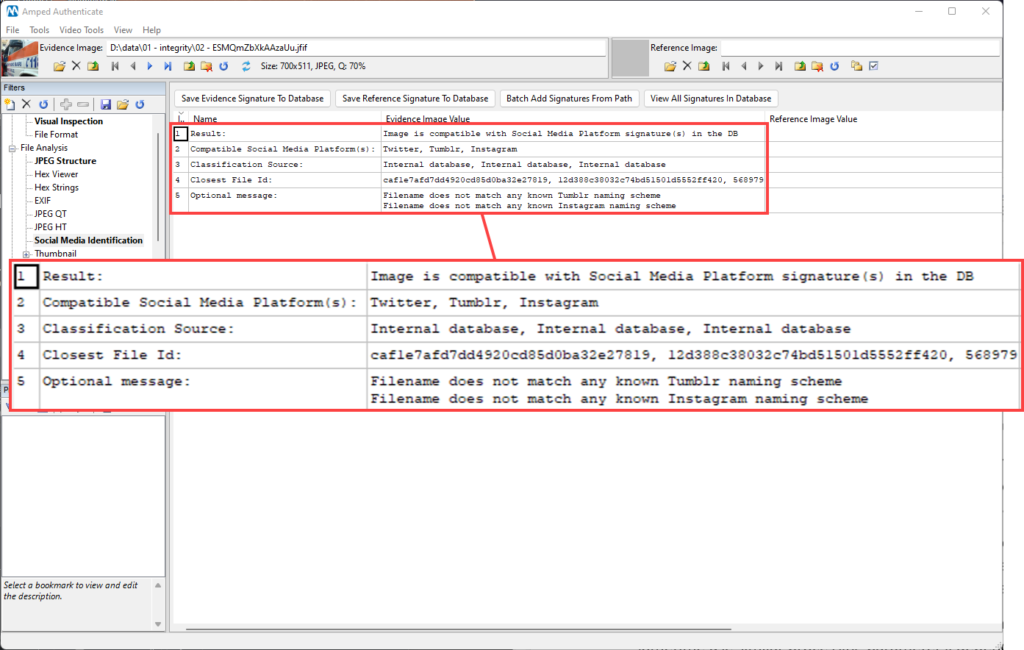

For example, the picture below matches several SMPs in Authenticate’s database; however, the Optional Message tells us that the image’s filename does not match any known Instagram or Tumblr naming pattern. Of course, it makes sense to rely on this message only if you know that the image was not renamed before the analysis.
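A filename check of this kind boils down to pattern matching. The Python sketch below shows the mechanism with two regular expressions that are loosely modeled on commonly seen download names; they are illustrative assumptions, not the patterns stored in Authenticate’s database or listed in [2], and the pattern labels are hypothetical.

```python
import re
from typing import Dict, List

# Illustrative patterns only (assumptions made for this example).
NAMING_PATTERNS: Dict[str, re.Pattern] = {
    # e.g. IMG-20170321-WA0007.jpg
    "whatsapp_like": re.compile(r"^IMG-\d{8}-WA\d{4}\.jpe?g$", re.IGNORECASE),
    # e.g. 12345_67890_13579_n.jpg
    "facebook_like": re.compile(r"^\d+_\d+_\d+_[no]\.jpe?g$", re.IGNORECASE),
}


def matching_patterns(filename: str) -> List[str]:
    """Return the labels of all naming patterns the filename is compatible with."""
    return [label for label, pattern in NAMING_PATTERNS.items() if pattern.match(filename)]


print(matching_patterns("IMG-20170321-WA0007.jpg"))  # ['whatsapp_like']
print(matching_patterns("12345_67890_13579_n.jpg"))  # ['facebook_like']
print(matching_patterns("DSC_0042.JPG"))             # []
```

An empty result only means that the current name fits none of the listed patterns; as noted above, a renamed file carries no information about where it came from.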

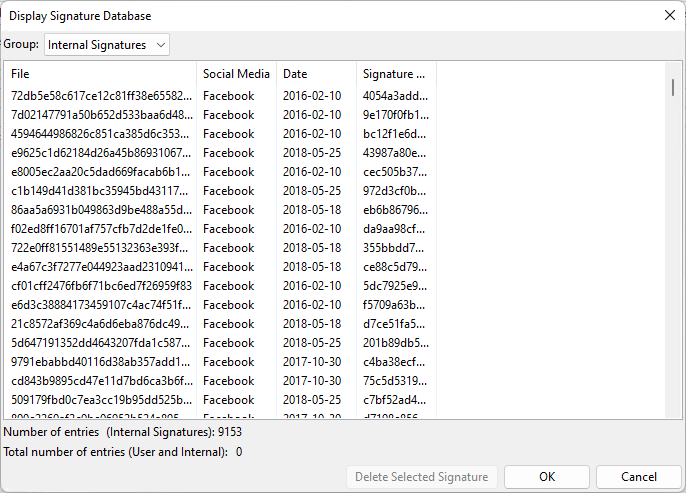

If you’re curious about which SMPs are featured in Authenticate’s database, simply click on the View All Signatures in Database button. You’ll get a list of reference files and their associated SMP. As you can see below, we have over 9,000 reference files in our database!

As mentioned before, using the Save Evidence Signature to Database button, or the more practical Batch Add Signatures From Path button, you can extend the database by adding new signatures for known SMPs or even add new SMPs.

Before moving on, an important remark is in order: the Social Media Identification filter is based on a reference database and a signature matching system. As such, it may give false positives (an image mistakenly marked as originating from an SMP when it does not), or false negatives (an image that comes from a known SMP is not recognized as such). This is, to some extent, unavoidable.

How to analyze images that come from an SMP

If you have reached this point, you know that uploading or sending an image via an SMP causes significant processing of its pixels, metadata, and file properties. You also know that Amped Authenticate’s Social Media Identification filter helps you check whether an image appears to come from one of a variety of SMPs.

These two things together are highly important for the rest of the analysis. If you know that an image has been downloaded from an SMP, then you should deduce that the performance of some forgery detection algorithms could be severely impacted.

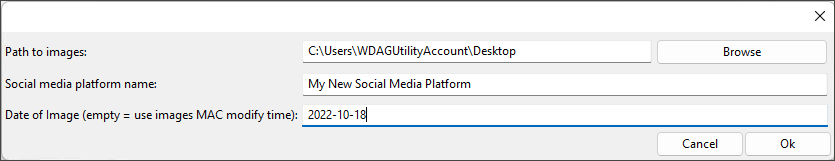

Let’s try with an example: we have a picture of a water bomber helicopter from which a malicious user removed the water tank.

Running the image through Authenticate’s Local Analysis filters indeed reveals some anomalies in the region below the helicopter: the JPEG Dimples Map filter is the winner here!

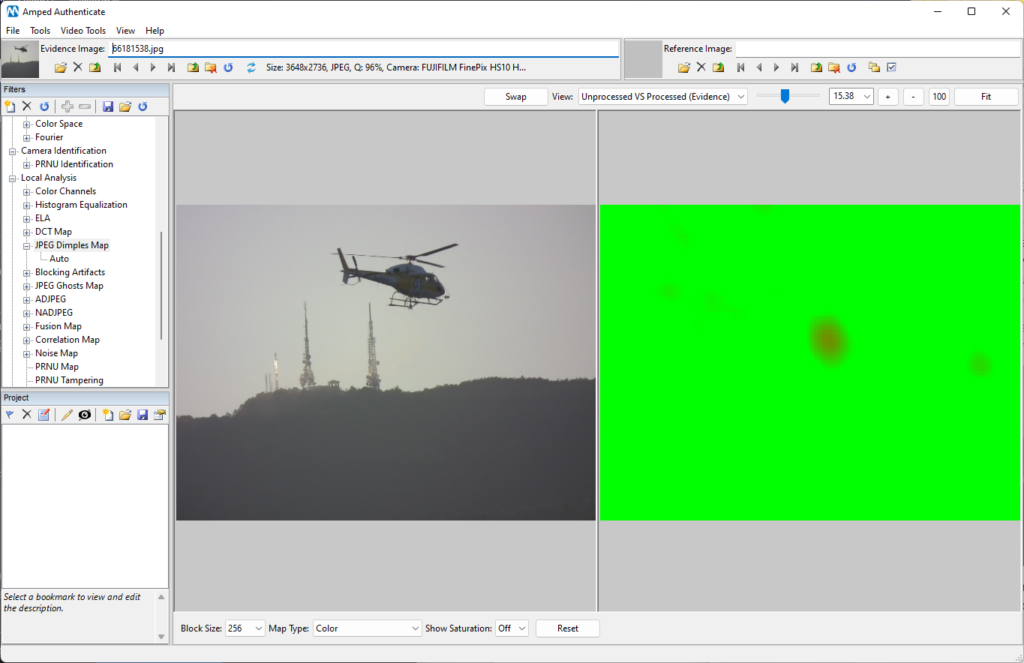

However, if we upload the image to Twitter, download the “Twitter-processed” version, and run the filter again, we’ll notice that the algorithm is no longer able to find any JPEG dimples anywhere in the image. When no statistical trace is found, the software shows a black frame, as in the example below.

The point of this example is: when carrying out forgery identification, you should consider that some algorithms or filters are more likely than others to keep working after the “SMP treatment”. For example, filters based on double JPEG compression analysis, such as ADJPEG, NADJPEG, and JPEG Ghosts Map, to name a few, are expected to be less reliable when working with images from SMPs.
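To give an intuition of the kind of statistical trace that double-compression filters look for, the toy Python sketch below quantizes a range of integer values once, and then twice with two different steps. It illustrates the well-known double quantization effect in general terms, not the actual ADJPEG or NADJPEG algorithms; the steps 5 and 3 and the integer range are arbitrary assumptions.

```python
from collections import Counter
from typing import List


def quantize(values: List[int], step: int) -> List[int]:
    """Return the quantization index round(v / step) of every value."""
    return [round(v / step) for v in values]


def dequantize(indices: List[int], step: int) -> List[int]:
    """Map quantization indices back to reconstructed values."""
    return [i * step for i in indices]


def empty_bins(indices: List[int]) -> List[int]:
    """List the histogram bins between min and max that received no value."""
    counts = Counter(indices)
    return [b for b in range(min(indices), max(indices) + 1) if counts[b] == 0]


# Stand-in for the magnitudes of one DCT coefficient across many blocks.
coefficients = list(range(300))

# Compressed once with step 3: every histogram bin is populated.
single = quantize(coefficients, 3)

# Compressed with step 5, decompressed, then compressed again with step 3.
double = quantize(dequantize(quantize(coefficients, 5), 5), 3)

print(empty_bins(single))      # []
print(empty_bins(double)[:4])  # [1, 4, 6, 9]
```

The periodic gaps in the second histogram are the fingerprint of the first compression. When a platform resizes the image before compressing it again, neighboring pixels are mixed together and this kind of regular pattern tends to be smeared out, which is consistent with the reduced reliability mentioned above.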

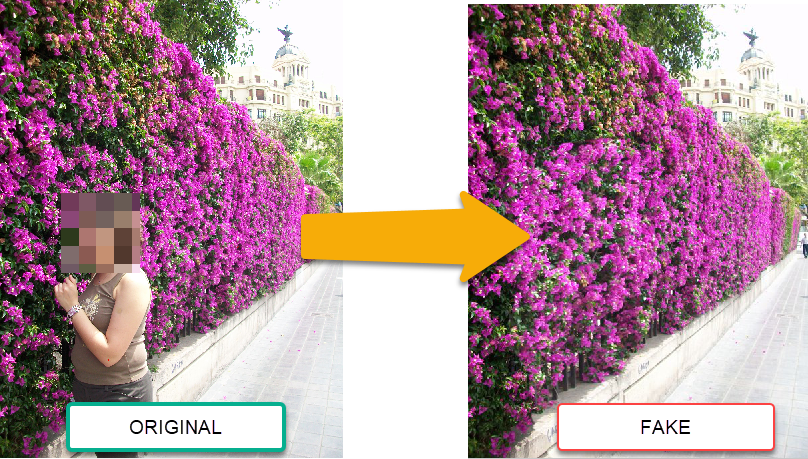

Other algorithms sit somewhere in between: their reliability may be negatively impacted by the reduction in size and quality, but this may not completely impair the analysis. A notable example is the Clones Keypoints filter. Let’s take for example this image where a subject has been removed and replaced by the nearby flowers.

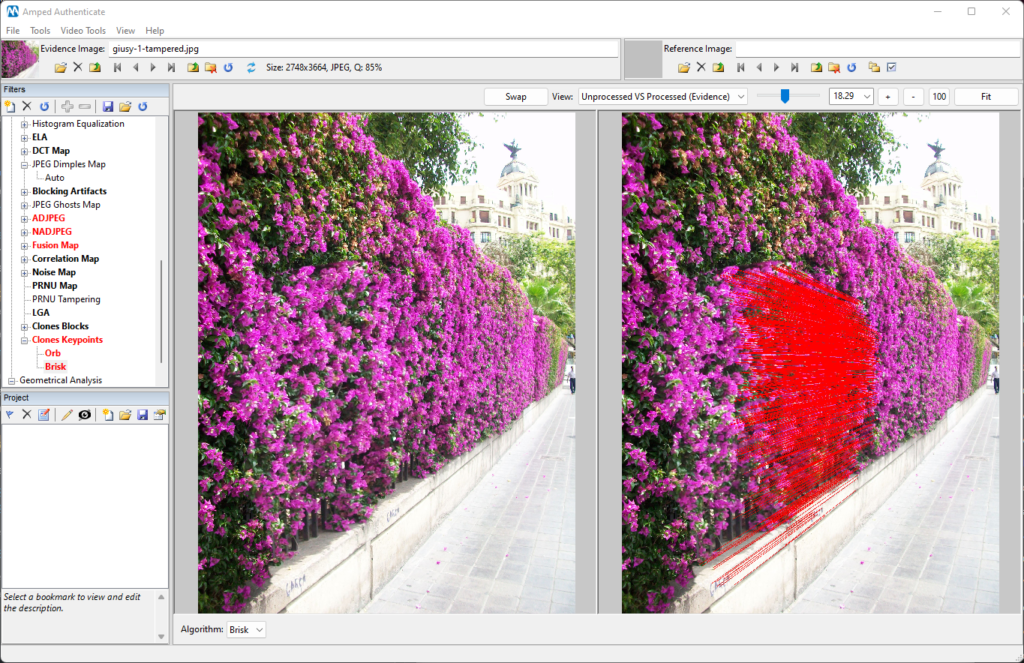

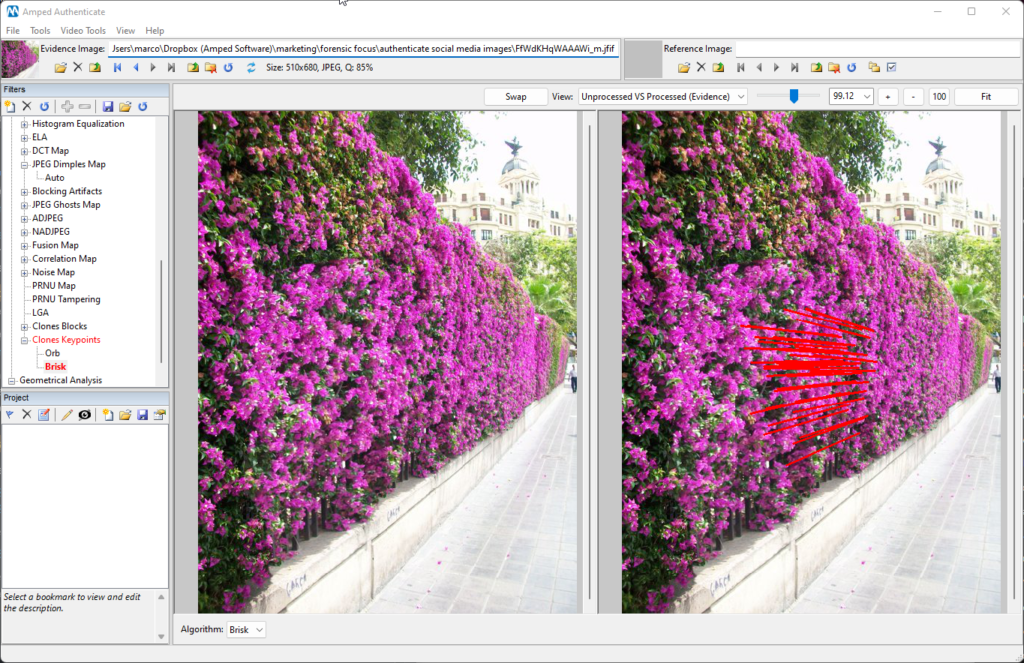

The Clones Keypoints filter does an excellent job revealing the cloning, which is extremely hard to spot with the naked eye.

If we pass the forged image through Twitter, and then compute the same filter again, here’s what we get:

As you can see, despite the significant reduction in size and quality, the cloned region is still revealed, although by fewer matching keypoints. This example shows that image forensics algorithms differ in their robustness to the processing steps applied by SMPs, and this should be taken into consideration when doing image authentication.

Now, let’s move to the undisputed king of robustness: Geometrical Analysis algorithms. This family of image authentication algorithms is based on detecting inconsistencies in the physical/geometrical properties of what is shown in the image.

When you create a forgery, it is often hard to figure out the way shadows, perspective, and reflections should be faked to be consistent with the other elements in the picture. Unfortunately for us (and luckily for the forgers), the human brain is not good at detecting slightly wrong shadows or perspectives.

However, researchers have figured out ways to help us in making an objective analysis of the consistency of the geometrical properties of an image.

The most desirable property of algorithms based on geometrical and physical analysis is their outstanding robustness to post-processing. Since these algorithms do not rely on statistical properties of pixels, they are not affected by processing, unless it degrades the image to a nearly useless quality.

In other words: if a shadow is wrong, it stays wrong. You can upload the image wherever you like, you can even take screenshots of it, print the screenshot, then take a screenshot of the printing and upload it to Facebook: that shadow stays wrong.

The most notable example of this class of algorithms is Shadows Analysis [4], which is available under the Geometrical Analysis filter category in Amped Authenticate. We published a dedicated article on Forensic Focus a year ago on how to detect tampered images on social media via Shadows Analysis, so here we will just give a quick overview of how the Shadows filter can be used, without diving too deep into the details.

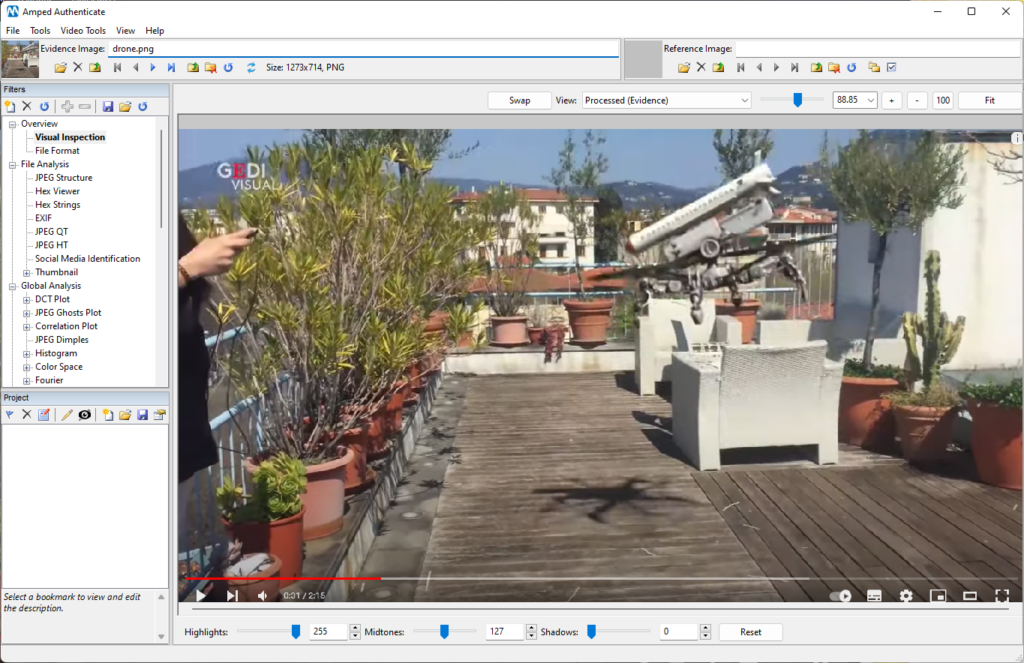

This time we’ll use a video that went viral on YouTube a few years ago during the Covid pandemic: the Italian artist Giacomo Costa created a satirical video where a missile-armed drone was used to shoot cars that violated the lockdown. The full video is available at this link.

Of course, none of it was real. Common sense was probably enough to debunk it, yet many people didn’t get the joke. Anyway, let’s take the challenge. We take a screenshot of the video (which is usually an awful thing to do in forensic analysis, but we do it on purpose here) and load it into Amped Authenticate.

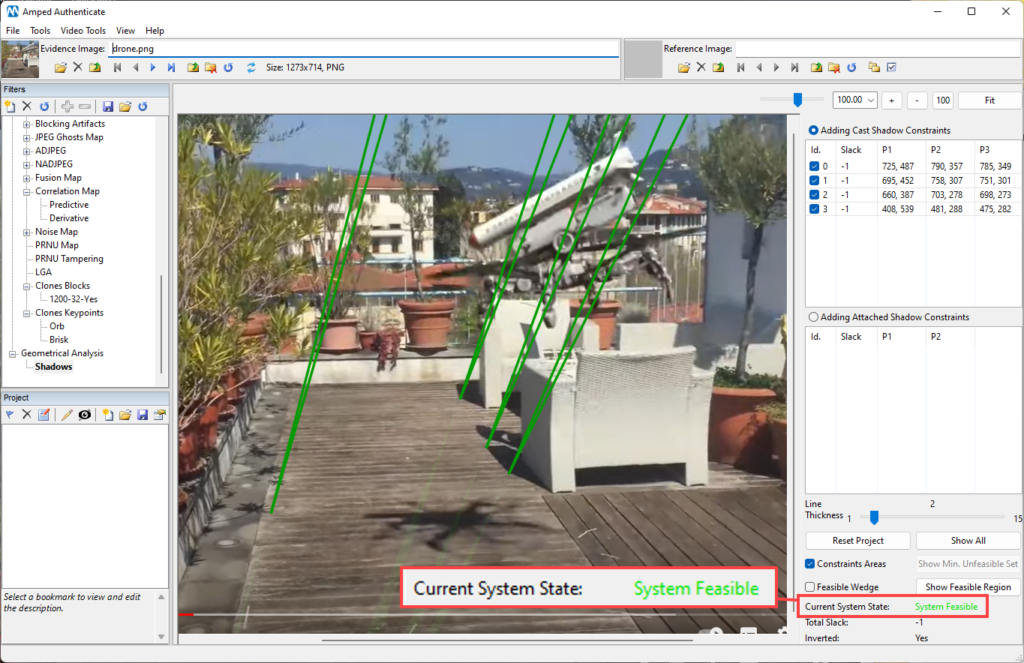

Now, we use the Shadows filter to draw some wedges that connect the shadows of innocent objects in the scene to the parts of the objects that generate them:

As you can see, after adding the shadow constraints of the innocent objects, the system state is “feasible”: it means that there exists an intersection among all wedges, which indicates that the shadows are consistent.

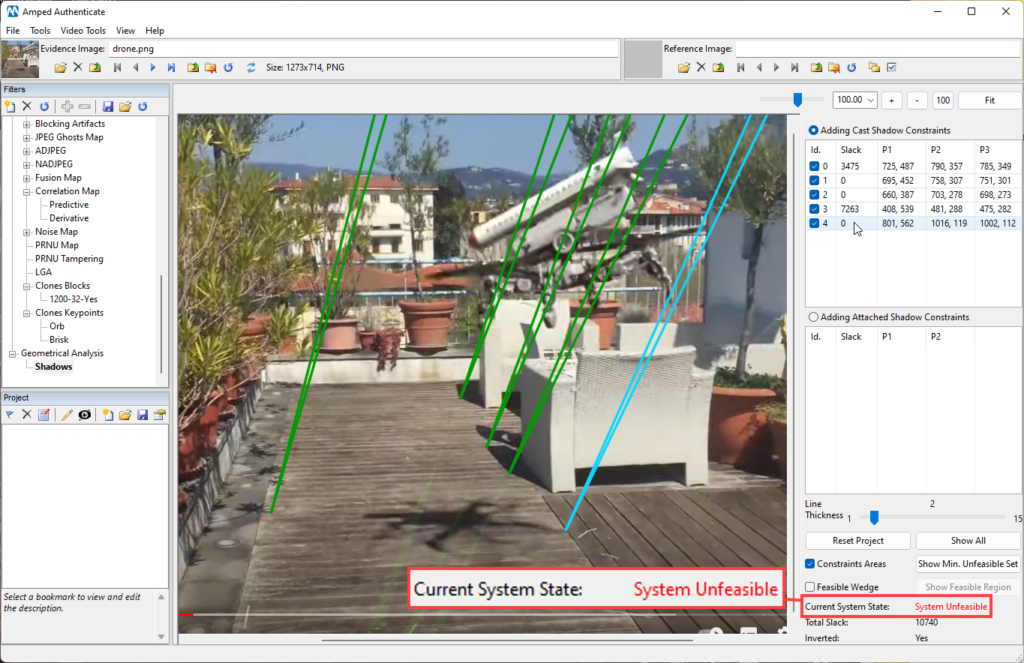

Now let’s add a wedge over the right-most part of the drone (highlighted in cyan below):

The drone-related shadow’s wedge has brought the system to an unfeasible state! This indicates that at least one of the selected shadows is not consistent with the others, and in this case there’s not much to guess about which one is responsible for that.

Notice, however, that the drone’s shadow wedge is off just by a tiny amount compared to the others (it points nearly in the same direction): it means the artist did an excellent job, and it was nearly impossible to detect the inconsistency with the naked eye.
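The feasibility test behind this kind of analysis can be sketched as a small geometry problem: each wedge is the intersection of two half-planes, and the system is feasible when all wedges share a common region. The Python sketch below checks this by clipping a large box with every half-plane. It is a simplified illustration under our own assumptions (wedges narrower than 180 degrees, light source in front of the camera, made-up coordinates), not the implementation used in Authenticate or the full method of [4].

```python
from typing import List, Tuple

Point = Tuple[float, float]
HalfPlane = Tuple[float, float, float]  # (a, b, c) meaning a*x + b*y <= c
Wedge = Tuple[Point, Point, Point]      # (apex, first direction, second direction)


def wedge_half_planes(apex: Point, dir_from: Point, dir_to: Point) -> List[HalfPlane]:
    """Wedge opening counter-clockwise (y-up frame) from dir_from to dir_to."""
    ax, ay = apex
    (fx, fy), (tx, ty) = dir_from, dir_to
    return [
        (fy, -fx, fy * ax - fx * ay),   # on or left of the ray along dir_from
        (-ty, tx, -ty * ax + tx * ay),  # on or right of the ray along dir_to
    ]


def clip(polygon: List[Point], plane: HalfPlane) -> List[Point]:
    """Keep the part of a convex polygon that satisfies the half-plane."""
    a, b, c = plane
    result: List[Point] = []
    for i, p in enumerate(polygon):
        q = polygon[(i + 1) % len(polygon)]
        fp = a * p[0] + b * p[1] - c
        fq = a * q[0] + b * q[1] - c
        if fp <= 0:
            result.append(p)
        if (fp < 0 < fq) or (fq < 0 < fp):  # edge crosses the boundary line
            t = fp / (fp - fq)
            result.append((p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])))
    return result


def polygon_area(polygon: List[Point]) -> float:
    """Shoelace formula; returns 0.0 for an empty or degenerate polygon."""
    n = len(polygon)
    twice_area = sum(
        polygon[i][0] * polygon[(i + 1) % n][1] - polygon[(i + 1) % n][0] * polygon[i][1]
        for i in range(n)
    )
    return abs(twice_area) / 2


def is_feasible(wedges: List[Wedge], bound: float = 1e4) -> bool:
    """True if all wedges share a region of non-zero area inside a large box."""
    region: List[Point] = [(-bound, -bound), (bound, -bound), (bound, bound), (-bound, bound)]
    for apex, dir_from, dir_to in wedges:
        for plane in wedge_half_planes(apex, dir_from, dir_to):
            region = clip(region, plane)
    return polygon_area(region) > 1e-9


# Two made-up wedges that both contain the point (100, 100).
background: List[Wedge] = [
    ((0.0, 0.0), (1.0, 0.8), (0.8, 1.0)),
    ((50.0, 0.0), (0.7, 1.0), (0.3, 1.0)),
]
# A made-up third wedge that opens in the opposite direction.
suspicious: Wedge = ((0.0, 50.0), (-1.0, 0.2), (-1.0, -0.2))

print(is_feasible(background))                 # True
print(is_feasible(background + [suspicious]))  # False
```

Because the test only depends on where the user places the wedges, not on pixel statistics, the outcome is the same whether the wedges are drawn on the original image or on a resized and recompressed copy, as long as the shadows are still visible.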

It’s worth remarking that not only did this picture come from an SMP (YouTube), but it is also a screenshot of a video! This stresses once more the outstanding robustness of geometrical analysis filters. Most image forensics algorithms based on pixel analysis would fall short in analyzing a screenshot of a video from YouTube: too much has happened since the pixels were originally captured and then edited!

Conclusion

Image authentication is a challenging task, and it only gets worse when dealing with images from Social Media Platforms. Indeed, processing steps between the time pixels are captured/altered and the time of analysis tend to act as an implicit counter-forensic tool.

For this reason, being able to understand whether the questioned image comes from an SMP is particularly important. First, because it can provide important clues from an investigative point of view; second, because authenticating an image requires reconstructing its digital lifecycle as much as possible, and passing through an SMP is an important part of it; finally, because it can steer the rest of the authentication process, for example by suggesting which algorithms to trust more than others.

With Amped Authenticate, you’re well-equipped and ready to tackle this challenge in all its aspects!

Visit the Amped Blog to find out more.