Digital investigations have undergone a geometric progression of complexity since my first fledgling technology investigations during the 90s. In those early years, a competent digital forensics professional only needed to know how to secure, acquire and analyze the floppy disks and minuscule hard drives that represented 99% of data sources at the time.

Since those halcyon days of Norton Disk Edit for deleted file recovery and text searching, there has been a veritable explosion of methods and places to store data. The initial challenges were focused mainly on training the investigators in a new field and the progression in size of available storage for consumers (and therefore investigative targets). While seizing thousands of floppy disks required immense effort to secure, duplicate and analyze, it was still the same data we were used to, just inconveniently stored and frequently requiring assistance from outside resources (thank you Pocatello, Idaho lab).

The evolution and explosion of information have had a direct impact on the field of investigations. To set the stage for the second half of this two-part investigations blog, in this article I’d like to look back on what I feel are some of the major changes that have occurred over the past 30-odd years.

LET’S CONTINUE OUR TOUR

By the turn of the century, hard drives, initially as small as 10-20 MB, grew to a ‘staggering’ 10 GB in a high-end computer. Flash media in the form of thumb drives and compact flash cards began to hit the market around the same time, quickly becoming the preferred storage medium for the newly minted digital cameras and tablet computers. Some of this media was small enough to be hidden in books, envelopes and change jars.

Cellular telephones, originally used only for voice communications, quickly advanced to transmit and store data in the form of messages, pictures and even email. As data became more portable, and therefore easier to lose or have stolen, encryption schemes arose that enabled normal consumers to adopt data security strategies that had previously only been used by governments and their spy agencies.

As data speeds increased, so too did the volume of data created and transmitted, requiring ever more novel methods of storage. At about this time, remote computing moved quickly from dial-up network services like AOL and CompuServe, to using those services as an entrance ramp of sorts to the internet, to faster direct internet connections. Those connections eliminated the need for the AOLs of the world in their original role; they became instead content destinations for users reaching the internet over rapidly growing broadband access.

FOLLOW THE DATA

Each step in this transformation required investigators to learn the new ways that data moved, how it was stored and by whom. Just learning who an AOL screen name belonged to required numerous acquisitions and legal action. Service and content providers alike had to be compelled to divulge these small pieces of data in order to determine where connections were being made from and sometimes by whom. High-tech investigators became one of many pieces of the dot com phenomenon.

Data protection services sprang up with the various dot com enterprises; securing data frequently involved transmitting backup data to remote servers. These servers were rented or given away to anyone who wanted them, adding to the complexity of identifying where in the world a given user’s data resided. After determining where the data resided, there were at least another two layers of complexity for the investigator: knowing what legal process was required to acquire the remote data and proving who placed the data on the remote servers.

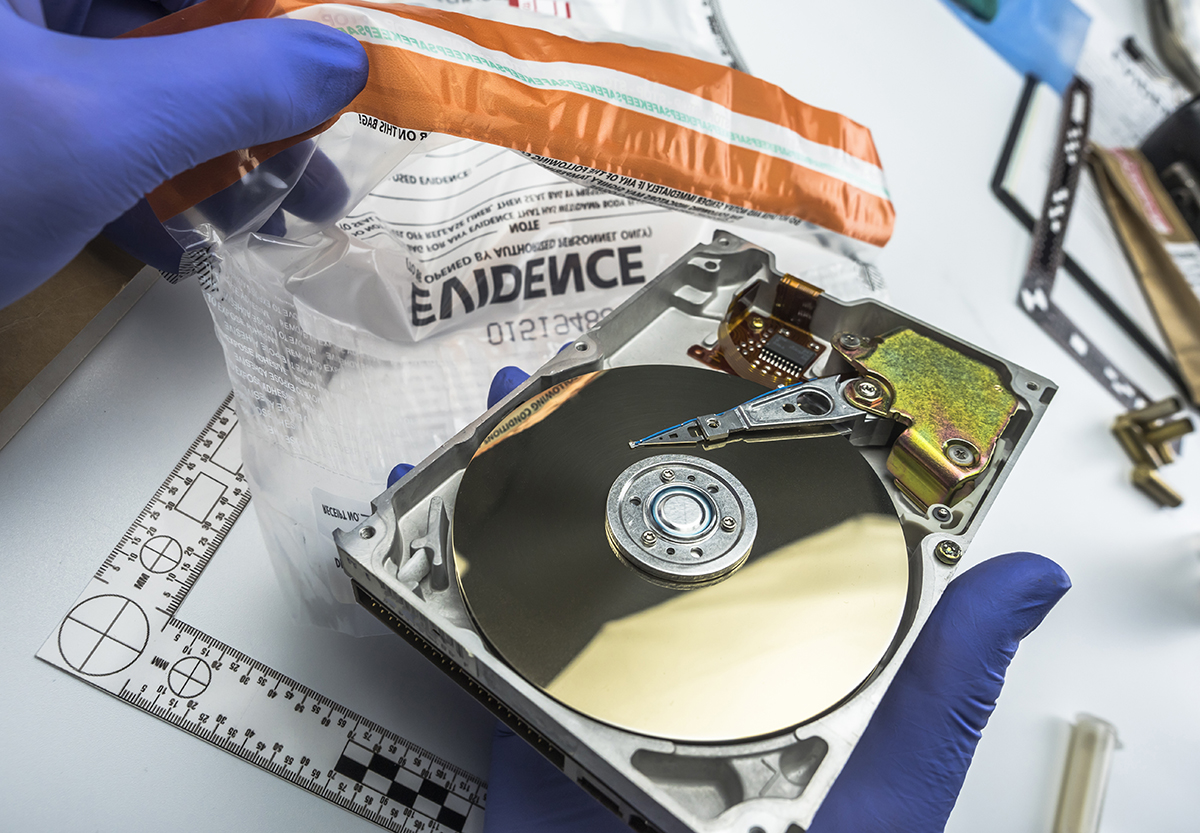

As data quantity exploded, the need for more advanced software to analyze this data became pressing. Several software offerings sprang up in the early days that, unlike Disk Edit, were created for the express purpose of reviewing quantities of digital evidence in a manner that was forensically sound. Most early digital forensic tools were expensive, complicated and slow, but they represented an important step in the growing field of digital forensics. The early offerings of both corporate and open-source digital forensic software were anemic compared to today’s digital processing giants.

In some instances, the introduction of 100,000 files was sufficient to bring some tools to their knees, necessitating that forensic cases be analyzed in batches of evidence to avoid taxing the software. Thankfully, this is largely a thing of the past, as products like Nuix Workstation will chew through ten million items without a hiccup, much less a major crash.

Before we knew it, we weren’t just analyzing static data sitting on a local storage device. Network data investigation had to be added to the investigator’s arsenal to determine how data moved across networks, from where and by whom. Along with remote storage services, online communication services exploded across the internet, and suddenly the high-tech criminal had acquired ready access to victims from the very young to the very old for a variety of crimes.

This drastic shift to remote, anonymous communication represented a very new and very real threat that had the added complexity of making not only the criminals difficult to identify, but their victims as well. The traditional transaction involving a citizen walking through the entrance of a police station to report a crime still happened, but new internet crimes meant that when criminals were caught, it was no longer the conclusion of a long investigation. Frequently, it represented the beginning of trying to identify and locate the many victims who didn’t know where or how to report the crime. All of this was because the crimes were facilitated by, or the evidence recorded on, the growing catalog of digital storage.

DEVICES TOO

As digital communication grew, so did the devices used to facilitate it. Cellular phones made the steady shift from plain telephones to a new category referred to commonly as ‘feature phones.’ These phones incorporated digital messaging utilities, including instant messaging, mobile email and access to portions of the internet through basic web browsers.

With the proliferation of feature phones, the real need for mobile device analysis sprang into existence almost overnight. Text messages on a flip phone were easy to photograph and catalog, but feature phones had far more idiosyncratic interfaces, requiring investigators to seek out technical solutions to the problem of megabytes of evidence locked in a device that was as non-standard as you could get.

For each manufacturer of cellular devices, there was a different operating system, storage capability and feature set. None of the existing computer forensic tools could acquire or analyze the wide assortment of available handsets. The cherry on the top of these early ‘smart’ phones was the seemingly random shape, size, placement and pin structure of the cables used to charge them. Many phone models came with dedicated companion software for the home computer that enabled backup or access from the computer.

Those same unique charging cables became unique data transfer cables connected to unique software on the host computer system. It was at this time that the first cellular forensic tools appeared. These systems didn’t appear at all like modern cellular forensic tools. They required extra software, hardware devices called ‘twister boxes’ and a literal suitcase of data transfer cables. Much like the early days of digital disk forensics, cellular forensics was a laborious and highly technical enterprise that required a great deal of training and experience to pull off.

Everything changed again in June 2007 with the release of what many consider to be the first true smartphone: the iPhone. Not long after, in November 2007, Android was introduced in beta and the cellular arms race was on. If data quantity and location were issues before, they were soon to become immensely more serious as the public rapidly adopted the smartphone and began carrying essentially an always-connected, powerful computer in their pockets and purses.

If the high-tech investigation world was difficult before, it was about to become immensely more so. About the only beneficial thing that smartphones did for investigators was that, over a 6-8 year period, they killed the feature phone and with it the suitcase of unique cables. A top-shelf cellular forensic professional can safely carry five cables to handle the vast majority of phones in use: the original iPhone plug, which is still found in the wild; the newer Apple Lightning cable; and each of the USB flavors (mini, micro and USB-C).

But, as you’ll see in part two of this series, that’s about the only positive for investigators. Things have continued to get much more complicated.