This is the second part of “How to Deal with Structured Data.” In case you missed it, the previous part is here.

Before I move on to transforming proprietary databases, let me add a point about packaged application data that I forgot to include in the previous post:

In other matters or collections, it may not be possible to use the live implementation of the application package or to work with the end client's staff. While this is not ideal, it is still manageable, and each instance may call for a different solution or methodology. In the extreme, it may be necessary to replicate the native environment in isolation, using replacement computers rebuilt from forensic images of all the original systems.

This can be a very labour-intensive approach, as you need to understand the original configuration and then create a mirror configuration to run in isolation. Years ago, this would have meant setting up a room full of computers, each one replacing a machine in the original configuration. Fortunately, today that room can often be replaced by one big computer, with each physical machine replaced by a virtual machine running on it. I know from experience that this virtualized replica works for small environments, as my team has done this on a few occasions in the past.

As for a large network configuration, we haven’t had to go to this extreme for a large eDiscovery matter, but my team has used this method to test hosting system upgrades by building a replica of our multi-subnet Ringtail and Relativity environments, and even to test our business continuity/disaster recovery processes.

Every matter will come with its own nuances, but at the end of the day, you will need access to an environment, and to people who know the application, to obtain the desired data for the document review process.

Proprietary Databases – Transforming Structured Data

What follows is a set of steps that can be used to transform structured data into documents that can be used inside a review platform. This is by no means the only methodology; in fact, I prefer a slightly more labour-intensive approach that yields “prettier” documents. But these are the basics, and they will get you through the first time you encounter this type of data. Generally, the process is:

- Get the structured data into one or more CSV or XML structured files that can be loaded into Excel

- Merge the fields of each structured data table into a single formatted text file

- Break the formatted text file(s) into 1 file per record

- If necessary, join the related (family) text files together

Step 1

Generally, proprietary applications store their data in some form of database architecture, and most common database systems provide a method of exporting that data to flat files. These flat files are typically XML formatted, CSV formatted, or in a format that with some effort can be turned into a CSV.

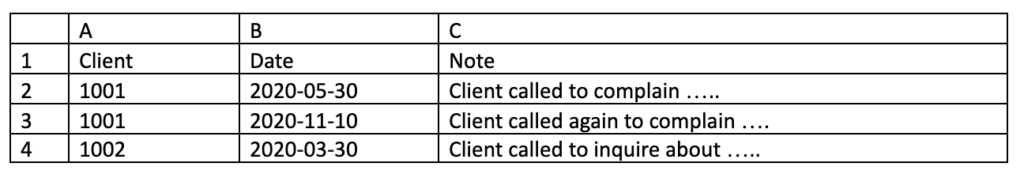

For this step and forward, let’s assume we have a client activity tracking application that has two database tables. The first table tracks who the client is (client #, name, address, etc.) and the second table tracks the interactions with three fields (client #, interaction date, discussion notes). If the database is SQL-based, we can get an export for each table in CSV format. The key takeaways for this step are that we get two CSV files, one from each table, and that structurally, the records are connected by the client number value.
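As a rough sketch of what that export could look like when scripted, the Python below dumps each table to CSV. It uses an in-memory SQLite database purely as a stand-in for the real system, and the table names, column names and sample rows are all hypothetical; a real proprietary database would need its own driver or export tool.

```python
import csv
import io
import sqlite3

def export_table_to_csv(conn, table, out):
    """Write every row of `table` to the file-like object `out` as CSV."""
    # Table names cannot be bound as SQL parameters, so only pass trusted names.
    cursor = conn.execute(f'SELECT * FROM "{table}"')
    writer = csv.writer(out)
    writer.writerow([col[0] for col in cursor.description])  # header row
    writer.writerows(cursor)

# Hypothetical stand-in for the client activity tracking database.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE clients (client_no INTEGER, name TEXT, address TEXT);
    CREATE TABLE interactions (client_no INTEGER, interaction_date TEXT, notes TEXT);
    INSERT INTO clients VALUES (1001, 'Jane Smith', '12 Main St');
    INSERT INTO interactions VALUES (1001, '2019-04-01', 'Called about invoice');
    INSERT INTO interactions VALUES (1001, '2019-04-03', 'Follow-up, "urgent"');
""")

# One CSV per table, held in memory here; open real files for a real export.
exports = {}
for table in ("clients", "interactions"):
    buffer = io.StringIO()
    export_table_to_csv(conn, table, buffer)
    exports[table] = buffer.getvalue()
```

Using the `csv` module rather than joining values with commas by hand means commas, quotes and line breaks inside the discussion notes are escaped properly.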

Step 2

We now have two CSV versions of the tables that we can load into Excel. I like using Excel for this step as it provides three features.

Firstly, I can use it to create a new field if the exported data requires some computation of fields to obtain a value that is not directly stored in the database (e.g. an invoicing system may only store the line items, quantity and unit price, but in Excel, I can generate an invoice total by summing up the quantity*unit price across all the line items for a single invoice number).
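For readers who would rather script that kind of computed field than build it in Excel, here is a minimal sketch of the invoice total calculation. The invoice numbers, quantities and prices are invented sample data, and `invoice_totals` is just an illustrative helper name.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical exported line items: (invoice number, quantity, unit price).
line_items = [
    ("INV-001", 2, Decimal("10.00")),
    ("INV-001", 1, Decimal("5.50")),
    ("INV-002", 3, Decimal("4.25")),
]

def invoice_totals(items):
    """Sum quantity * unit price across all line items of each invoice."""
    totals = defaultdict(Decimal)  # a missing invoice starts at Decimal("0")
    for invoice_no, quantity, unit_price in items:
        totals[invoice_no] += quantity * unit_price
    return dict(totals)

totals = invoice_totals(line_items)
```

`Decimal` is used instead of floating point so that currency amounts do not pick up rounding noise.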

Secondly, I can use a variety of formulas to verify the content of each column, or even identify empty columns that can be ignored. Finally, I can use a variety of formulas that ultimately give one cell of a formatted text that I can cut/paste into a text editor. For example:

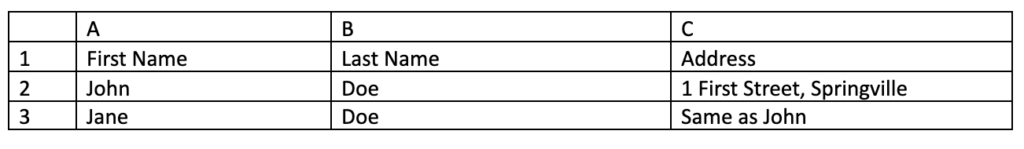

If I put a formula in D2 that is:

="Name: "&B2&", "&A2&CHAR(254)&"Address: "&C2

Then copy that to D3 and down

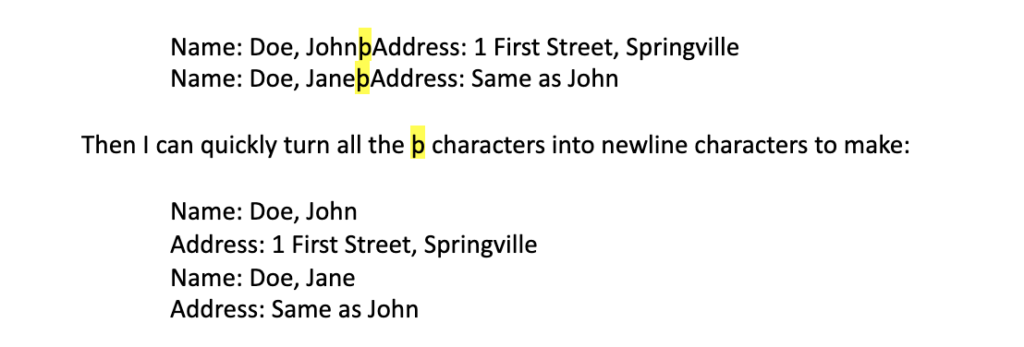

Then paste the results of those formulae into a good text editor like UltraEdit, I get:

And if I copied that formula down through all the records, I could get one large text file of Name and Address lines. Note that it is also possible to embed other control characters in the output string that the text editor can use with macros for formatting. For example, a table like:

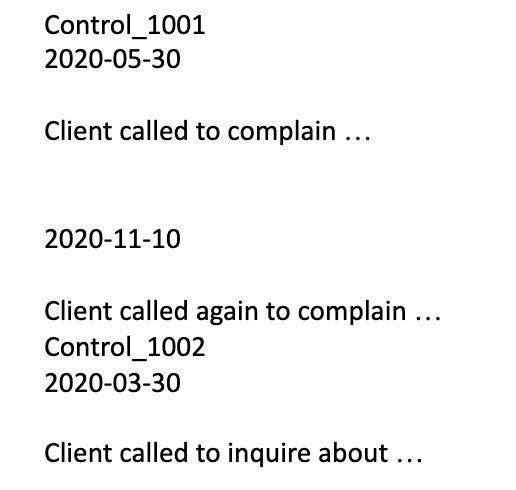

The formula in D2: =IF(A2=A1,"~^","Control_"&A2)&"~^"&B2&"~^~^"&C2 then copied down, pasted into UltraEdit and after a couple of replace commands, we get the following text document:

Note that this example is one where multiple rows in Excel are combined into single records in the text file, each having the “Control_####” line at the start of the record.
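To make the mechanics of that formula concrete, here is a small Python sketch that mirrors it row by row. Two things are assumptions on my part: the sample rows (an ID in column A, with a date and a note standing in for columns B and C) are invented, and the "couple of replace commands" are taken to mean replacing each `~^` marker with a line break.

```python
def control_cell(prev_id, row_id, b, c):
    """Mirror of =IF(A2=A1,"~^","Control_"&A2)&"~^"&B2&"~^~^"&C2 for one row."""
    head = "~^" if row_id == prev_id else f"Control_{row_id}"
    return f"{head}~^{b}~^~^{c}"

def table_to_text(rows):
    """Build the pasted text: one formula result per line, markers expanded."""
    cells = []
    prev_id = None  # plays the role of the header row above the first data row
    for row_id, b, c in rows:
        cells.append(control_cell(prev_id, row_id, b, c))
        prev_id = row_id
    # Assumption: the editor's replace step turns each ~^ into a line break.
    return "\n".join(cells).replace("~^", "\n")

# Hypothetical rows; the first two share an ID and so form one record.
rows = [
    ("1001", "2019-04-01", "First call"),
    ("1001", "2019-04-03", "Second call"),
    ("1002", "2019-04-02", "Site visit"),
]
text = table_to_text(rows)
```

Only the first row of each ID emits a `Control_` line, which is why consecutive rows with the same ID end up inside a single record.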

And as above, one thing that is really handy when dealing with multiple tables is to make the first output line of the formula for each row based on a structure that has the control information. In our two-table example, I would build the first line of each record to contain the client number for the client table, and the client number and date for the interactions table. The lines might look like this for the two tables respectively:

“Control_Client#” and “Control_Client#_YYYYMMDD”

We would do this to set up for step 3, where we need some formatted structure to use to split the files. In the name/address example above for the Excel formula, the string ‘Name:’ could be used as the control string, but more complicated tables likely require a specifically formatted string.
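As a sketch of how those two control lines could be generated outside of Excel, the helpers below build one of each. The function names and sample values are hypothetical; only the `Control_Client#` and `Control_Client#_YYYYMMDD` patterns come from the example above.

```python
from datetime import date

def client_control_line(client_no):
    """First line of a client record: Control_<client #>."""
    return f"Control_{client_no}"

def interaction_control_line(client_no, interaction_date):
    """First line of an interaction record: Control_<client #>_<YYYYMMDD>."""
    return f"Control_{client_no}_{interaction_date:%Y%m%d}"

parent = client_control_line(1001)
child = interaction_control_line(1001, date(2019, 4, 1))

# The shared client number is what later lets the two be tied together.
# Matching on the trailing underscore avoids confusing client 100 with 1001.
belongs_to_parent = child.startswith(parent + "_")
```

Writing the date as YYYYMMDD also means the interaction records for one client sort chronologically by their control line.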

So after steps 1 and 2, we now have one or more large documents that are unstructured versions of the tables’ data. How do we get them into the review platform as families of documents? Stay tuned for the next post 😊

Read part nine here.

About The Author

Harold Burt-Gerrans is Director, eDiscovery and Computer Forensics for Epiq Canada. He has over 15 years’ experience in the industry, mostly with H&A eDiscovery (acquired by Epiq, April 1, 2019).