Presenter: Jad Saliba, CTO Magnet Forensics (formerly JADsoftware)

Transcript

Jamie: Okay, welcome to the first Forensic Focus webinar. My name is Jamie Morris and I’m delighted to be joined by the founder and CTO of JADsoftware, Jad Saliba. This is actually the second time we’ve recorded this webinar – we ran into some audio issues yesterday, as anyone who joined us will know. So a big thank you to Jad for agreeing to re-record today. Hopefully we should end up with a much better presentation as a result. Alright, without any further ado – if you’re ready, Jad, I’ll hand over to you now.

Jad: Okay. Thanks, Jamie, and thanks for letting us do this over again. And thanks to anyone that’s tuning in to watch this, especially if you were here yesterday for the first recording and had some issues with the audio. Hopefully this recording will be a lot more clear. So, thanks for joining us. We’re going to be talking about finding evidence in an online world, and talk about some of the challenges that you face, and that I used to face, as a forensic examiner. And my background is law enforcement, so that’s the perspective I’m coming from, but I think everything that we’ll talk about today applies to anyone that’s working in digital forensics.

So just a little bit about my background. I started off as a teenager very much into computers, writing code, self-taught at that point, writing C command line utilities to do things like save boot sectors, clear partition tables, and just experimenting with things like that. I went to college for computer science and then worked in the software industry at a company called Open Text. I had always had a dream of being a police officer, I wanted to help, you know, catch bad guys and investigate crime. So I became a police officer and did that for 7 years, and some of that time was spent in the Tech Crimes Unit, which is what we call our Digital Forensics Unit here in Canada. And while I was there it was really great to be able to marry the technical background that I had with law enforcement and the investigative side that I had learned as a police officer, to fight computer crimes. And while I was there I started JADsoftware as a part time project at home, doing research on different internet artifacts, and started writing code again, and that’s kind of blossomed now into a much bigger thing, and we have a great, talented team of developers helping us take our software to the next level.

So, in September last year I made the tough decision of leaving the police force and going full-time with JADsoftware. So that was a tough decision, I really enjoyed policing, but I couldn’t really do both any more, and I felt that I really wanted to take the software to the next level, so I had to make that decision. But I do feel like I’m still in that field in some ways, and helping all of you that are either in law enforcement or doing corporate investigations, kind of, you know, find the evidence.

So today we’re going to discuss a few challenges that are facing us in digital forensics. These are based on experiences that I’ve had, on feedback we get from customers at conferences and trade shows that we go to, and on research that I’ve done. And I think these have been kind of the themes that we’ve heard over and over again: overwhelming data that’s coming through the labs, being able to find the key evidence, and technology changes.

So first challenge – overwhelming data. We’re getting more devices per case now, and I think everyone would agree about that. Back in, you know, five, ten years ago, it was much less data coming through as far as number of hard drives, number of mobile devices, how big those devices were, and more people now are owning multiple devices. So, instead of just owning a desktop computer at home, they’ve got a laptop, maybe a desktop at home, tablet, and some sort of smartphone. So that’s one of the contributors to the overwhelming amount of data that’s coming through.

Larger storage devices – hard drives are much cheaper now. They continue to get cheaper, they’re much larger. In 2007 I believe the first 1TB drive was introduced, now in 2011, just last year, a 4TB drive was released, and they’re much cheaper now. So people are downloading a lot more things and they need more storage space, so they’re buying more hard drives, and larger, and it’s easy to obtain these.

We’re also living in the digital age now, and I read a research paper that described 1999 to 2007 as kind of the golden age of forensics, and that was, like I just described, when you’d have typically one hard drive, one computer per person. They were small hard drives, there weren’t a lot of different internet sites to account for, or to have to recover different artifacts from, and it was just kind of, in some ways, a simpler time for forensics. And now that’s all changed, things are much bigger, there’s more data, there are more different types of websites out there that people are using, and things are changing quickly. And everything is digital now, everyone is communicating over their mobile phones, or on the internet, through Facebook, email, and more and more communication is done on the internet.

So, one consideration for dealing with overwhelming data is empowering the front line. And I think this is kind of a general statement, and some people will have trouble agreeing with it or accepting it. And I think we have to be careful in how we do this, but we do need a paradigm shift as all this data continues to increase, otherwise we’re just not going to be able to keep up. And we need to find ways to allow front line officers or detectives to do some sort of either triage or examination of data, depending on what the case is, to help reduce the load on the Tech Crimes Unit. I know some agencies are using their traditional forensics branch, we called it an Identification Branch in Canada, to take pictures of text messages and those kinds of things on mobile phones for cases that may not be as important as others, or they’ve given them a [unclear] to use, to do quick downloads from certain phones. Budgets aren’t growing, and I know some agencies are actually losing examiners, who are getting pushed back to the road or other departments.

So, I think it’s inevitable that we need to help the front line help us in what we’re doing. And part of that is triaging data. If we can figure out which devices have the data that is important to the case, and just focus on those, that can save a lot of time. If you are doing a search warrant or entering a business, to look at the computers, and there’s 15, 20 computers, instead of grabbing all those computers, if we can do some triage on the scene of those systems and find the systems that are most relevant to what we’re dealing with, and just grab those, or even if you have to grab them all, knowing which ones to start on can really accelerate your investigation. So using tools that can do quick scans of a drive, or device, and give you an idea of what’s on there before you dive in and do your full examination, are very useful.

And in with that, automated tools. So, automated tools save you time and allow you to multitask. So while the tool is running a search or doing some sort of process for you, you can work on other parts of the case. And the examiner obviously becomes especially important when using automated tools. It’s very important to verify the findings from those tools, it’s very important to use other tools to cross-validate, or go to the locations of where the data was recovered, and just manually verify it for yourself, so that when you have to go on the stand or elsewhere to stand behind your findings, you can say that you did verify and it wasn’t just push-button forensics.

So, a screenshot from the latest version of our software. This is the first screen that you would have, and you can essentially just add drives, files – so things like live RAM dumps, or you know, the page file or certain files you’ve exported from a case, or a folder full of files that you’ve exported, or just point it at the image that you’ve obtained of a hard drive – and you can just add all these in, and they get queued up and searched one at a time. So that’s kind of what we’re trying to do to help combat, make things simpler this way, to combat the overwhelming data that’s coming through.

Challenge number 2 – investigator pressure, and I think this isn’t anything new, there’s always been a lot of pressure from administration or investigators for results. Non-technical people sometimes don’t understand the work that’s involved in examining a hard drive or doing an internet investigation, and they don’t understand what’s possible versus not possible, depending on what they’re asking for. An example is, we had a case of a video chat on Chatroulette, and the investigators were hoping for a copy of that video from the suspect’s hard drive, and unfortunately, of course, that doesn’t get saved anywhere – maybe traces here and there, but the entire video is not saved. And it’s hard for them sometimes to wrap their minds around that or to understand it’s just not there. So that can be a challenge.

Turnaround time – different cases require different amounts of time to examine, and I think that’s pretty obvious – some can take a few days, depending on the nature of the case, and some can take months. The overwhelming data that I spoke about earlier is increasing the average turnaround time. I had a case involving a large number of computers, and investigators kept changing what their parameters were for what they wanted to find, and there was over a quarter of a million emails involved, so I had to keep re-examining, re-filtering data, and giving them different reports. So that took quite a bit of time in that case.

Case backlogs – also I [unclear] talk to any of you about that, we’ve all got backlogs and I know some agencies are facing up to two years of backlogged cases. And after two years, or even after a year, and sometimes less, data can become less relevant to the case. Maybe the case has been resolved, or the data is just out-of-date now. That’s a challenge when you’re facing these backlogs, and there’s a bit of a mental impact on the examiners.

So, things that can help with that – is knowing where to look for different types of data. So for internet artifacts, getting live RAM captures is really important, there’s a lot of great data that can be found there that sometimes isn’t found anywhere else on the hard drive. The page file and hibernation file, those are closely inter-related with the RAM, so those can have a lot of great information in them as well, especially if you’re not able to get that live RAM capture. The master file table, [unclear], temporary internet files, those are all really good places to look.

Knowing what can be found – a point that I’ll make later. Doing research ahead of time: if someone’s asking for a certain type of data, and you’ve already done some research, then without spending a lot of time you’ll know that it’s either possible to find and you know where to look for it, or it’s not possible, and you can go from there. So that’s important to know.

And having a good toolbox, I think just like having the right tools in a traditional toolbox makes all the difference when you’re doing home repairs or working on a car, having the right tools in your forensics toolbox can also make a big difference on every case. Sometimes you need multiple tools to do different things or to verify results, and especially I think with mobile devices and things like that, you need to throw a few different tools at the device sometimes to get what you need. So that’s very important.

I’m going to go through some examples of some different types of internet artifacts that you might find and, just to give you an idea of what you can find out there, and what some of the different types of data mean.

So on this first screen here, you’ve got an example of Facebook chat, and highlighted at the first line there is the profile ID of the logged-in user. So regardless of who sent or received the message, this is the ID of the user that was logged in at that time, and in this case it’s the sender of the message. So just below that is the text of the message, and that’s the chat message that was sent, and then you’ve got the time, and that’s in UNIX or Epoch time, UTC, and this time value comes from the Facebook servers, so regardless of if you changed your time on your local machine or what time zone you’re in, it won’t modify this time value, which is really good.

The next time value below that is client time, it’s the time the message was sent, but that’s coming from the client. So in a web browser, it’s generally just a few milliseconds behind the time value, but the problem comes in when someone’s using a 3rd party program to do the Facebook chat, and this client time can be changed, or sometimes it’s not supported properly, so you could get varying data in this field here. So it’s less reliable to use that way, as it can be modified by the user.
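
As a rough illustration of working with these two time values, here is a minimal Python sketch. It assumes both values are Unix timestamps in milliseconds (an assumption, the unit is not stated above), and the sample numbers and function names are hypothetical rather than taken from a real artifact.

```python
from datetime import datetime, timedelta, timezone

# Unix epoch as a timezone-aware UTC datetime.
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def epoch_ms_to_utc(value_ms: int) -> datetime:
    """Convert a Unix timestamp in milliseconds to an aware UTC datetime."""
    return EPOCH + timedelta(milliseconds=value_ms)


def client_lag_ms(server_ms: int, client_ms: int) -> int:
    """Return client time minus server time, in milliseconds."""
    return client_ms - server_ms


# Hypothetical values, for illustration only.
server_time = 1340000000000  # time value supplied by the server
client_time = 1340000000250  # time value supplied by the client

print(epoch_ms_to_utc(server_time).isoformat())
print(client_lag_ms(server_time, client_time))
```

A large or negative difference between the two values would be one hint that the client time came from a third-party program or a machine with a changed clock, which is why the server value is the safer one to rely on.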

Next, after that, are the From and To IDs of the Facebook sender and recipient, and you can look these up on Facebook. So if all you have is the ID, you can go to a URL on Facebook, replace the ID with one of these IDs, and get some information about the user. And at the bottom are just the From and To names of the people involved in the conversation. So Facebook chat used to be found all over the hard drive. They made some changes last year, and now a lot less of it is hitting the hard drive, so again live RAM becomes very important. I am seeing some of these fragments in the page file and hibernation file. So you can still find it, but there’s just not as much as there used to be.

On this next screen we’ve got Windows Live Messenger, and the first thing I’ve highlighted is the message date. So that’s the date and time the message was sent, and that’s local time. On the next line is the date and time again, but it’s in UTC time. So that can be useful for finding the time zone offset that the user was on. Below that are the friendly names of the From and To users in this message. The unfortunate thing about friendly names is it’s kind of a nickname that the user can set, and this can be changed as often as they like, so sometimes it’s tough to tie down to a specific person, unless they don’t change their name throughout their conversations. At the bottom is the text of the message. So these are fairly easy to find. If they’re being saved, you’ll find them in the My Documents folder for the user, and they’re easy to search for in an [unclear].
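
The time zone offset mentioned above is simply the local time minus the UTC time for the same message. A minimal Python sketch, using hypothetical timestamps that are assumed to have already been parsed out of a log entry:

```python
from datetime import datetime, timedelta


def utc_offset(local_time: datetime, utc_time: datetime) -> timedelta:
    """Return the offset of local time from UTC for the same instant."""
    return local_time - utc_time


# Hypothetical values for a single message, for illustration only.
local_stamp = datetime(2012, 6, 18, 14, 30, 0)  # local date and time
utc_stamp = datetime(2012, 6, 18, 18, 30, 0)    # same message in UTC

offset = utc_offset(local_stamp, utc_stamp)
print(offset.total_seconds() / 3600)  # offset in hours
```

With these sample values the local clock is four hours behind UTC. If the offset differs between messages, that may point to a daylight saving change or a changed system clock, so it is worth checking more than one message.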

The next screen is Gmail Webmail, and this is essentially a fragment of data that you’ll find that relates to the view that you see when you log into your Gmail and you’re looking at your Inbox view. So the type of data that’s included here is essentially what you’re seeing on that screen. So the first thing that I’ve highlighted here is a message read indicator. And in this case that yP indicates the message was read, and if it was zF, then it would be an unread message. That can be useful sometimes. Next, after that, are the email addresses that were involved in the conversation. So in this case we have one, and this is the sender of the email. Sometimes you could have multiple email addresses in here, and they’d be the addresses that were involved in this conversation. Below that is the subject line of the email, and then after that is a snippet of the body of the mail. So this is kind of the first part of the email, and you can see that in your Inbox view, just the first part of the conversation that’s in that email. This email has an attachment, so you can see the filename there, and then after that you’ve got dates and times. So this is the local date and time, and again, you’ve got date and time in UTC, in the Unix format, so again a nice way to see what the time zone was.
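
The read indicator described above can be sketched as a simple lookup. This assumes only the two marker values mentioned here (yP and zF); anything else is reported as unknown rather than guessed, and the function name is hypothetical.

```python
# Marker values as described above; other values are not covered here.
READ_MARKERS = {"yP": "read", "zF": "unread"}


def read_status(marker: str) -> str:
    """Map an Inbox-view marker to a read status, or 'unknown'."""
    return READ_MARKERS.get(marker, "unknown")


print(read_status("yP"))
print(read_status("zF"))
```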

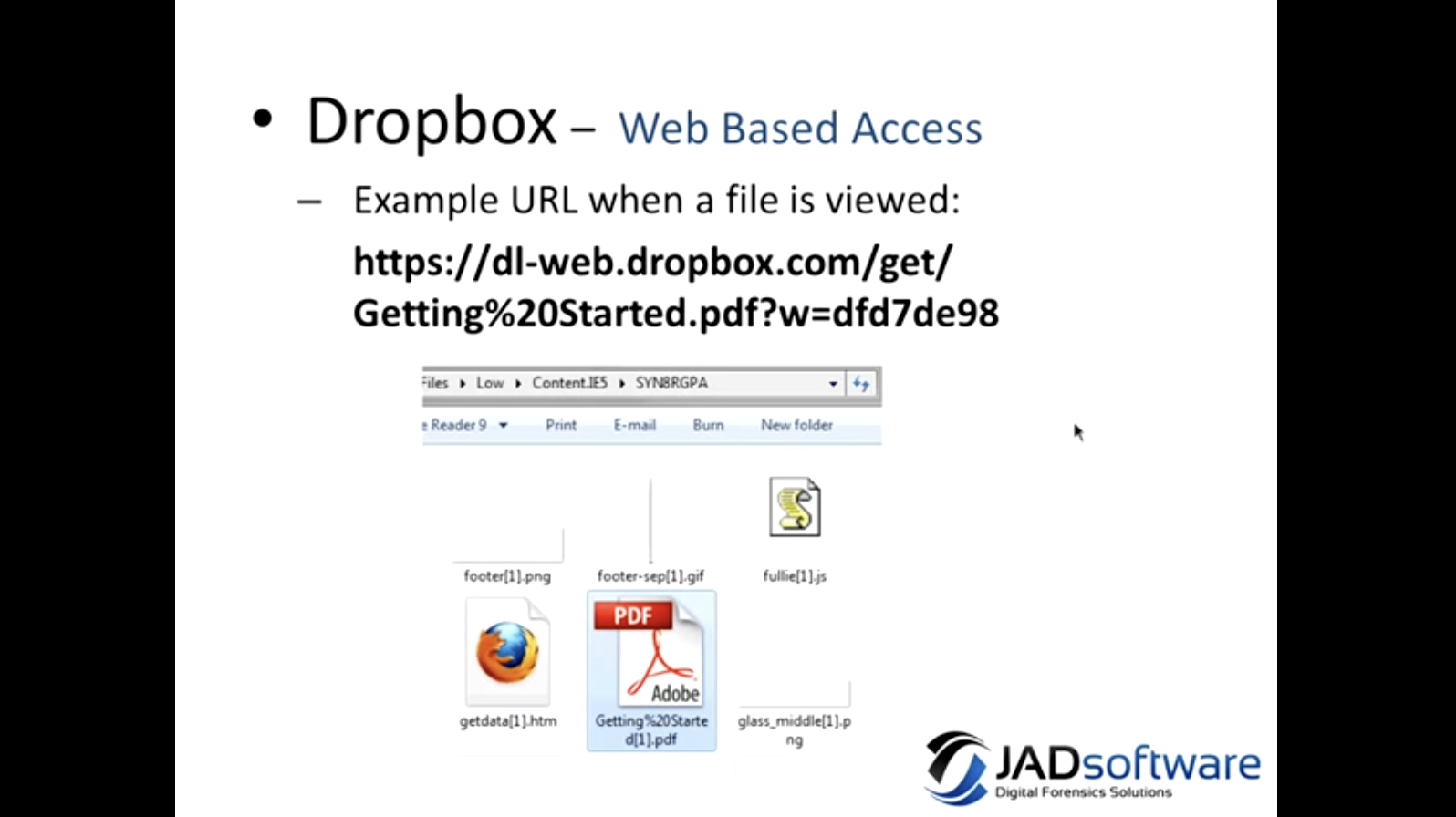

The next thing is Dropbox, and you can access Dropbox in a couple of different ways, either on your desktop or through their web portal. And when you are accessing it through the web browser, files that are viewed get cached in the Temporary Internet Files. So if you’re seeing in the web history a URL that looks like this one here, it’s worth looking in the web cache to see if the file is there as well. And it’s easy to see what the file name is in the URL, so something to look at.
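
Pulling the file name out of a URL like this can be sketched in a few lines of Python. The URL below is a made-up placeholder, not the real Dropbox URL layout, and the sketch assumes the file name is the last segment of the URL path.

```python
from pathlib import PurePosixPath
from urllib.parse import unquote, urlparse


def filename_from_url(url: str) -> str:
    """Return the decoded last path segment of a URL."""
    path = unquote(urlparse(url).path)  # drop query string, decode %20 etc.
    return PurePosixPath(path).name


# Hypothetical URL, for illustration only.
example_url = "https://files.example.com/u/12345/Quarterly%20Report.pdf?dl=1"
print(filename_from_url(example_url))
```

The recovered name can then be used as a search term against the web cache to see whether a copy of the file was kept.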

This is an artifact that gets left behind when you’re viewing files that you have on your Dropbox through the web. So the first item that I have circled there is the database ID, and this is essentially like the Dropbox user ID. Next is the filename that they have on their Dropbox, and the file type, so this is a pdf file, and then a link to the file, and obviously you’d need to have the user’s credentials to log in to actually get this file. After that, file size, and the date and time modified in UNIX time, and then the date and time modified again, this time in local time. There’s also a file version ID or number, and this is a number that Dropbox uses to keep track of the versioning of files. So if you update this pdf file, it’ll keep a copy of the previous version. So if you’re seeing multiple artifacts and this file version has changed, that would indicate that the file has been modified.
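
The version check described above can be sketched as grouping recovered artifacts by filename and flagging any file that shows more than one version ID. The record layout and field names here are hypothetical, chosen only to mirror the fields listed above.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DropboxArtifact:
    """Hypothetical record holding a few of the fields described above."""
    filename: str
    version_id: int
    modified_unix: int


def modified_files(artifacts):
    """Return {filename: sorted version IDs} for files seen with more than one version."""
    versions = defaultdict(set)
    for artifact in artifacts:
        versions[artifact.filename].add(artifact.version_id)
    return {name: sorted(ids) for name, ids in versions.items() if len(ids) > 1}


# Hypothetical recovered artifacts, for illustration only.
recovered = [
    DropboxArtifact("report.pdf", 101, 1339000000),
    DropboxArtifact("report.pdf", 102, 1340000000),
    DropboxArtifact("photo.jpg", 55, 1338000000),
]
print(modified_files(recovered))
```

Here only `report.pdf` is flagged, because it appears with two different version IDs.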

So this is a screenshot from our software, IEF, and it just shows all the artifacts that I’ve covered now. You can just have them checked off here, and do a search and you can recover them. There’s more than that obviously – if you scroll down there’s a number of other types of things, like peer-to-peer and web history. This is a screenshot of our report viewer, and this is showing Dropbox artifacts that were recovered. And this is from the screenshot that I just showed of the Dropbox artifacts, and it just shows you how it gets laid out for you there, and parsed out into the relevant different fields.

So the last challenge that we’re going to talk about today is technology changes. And one of those is solid state drives. So solid state drives have a feature called “trim”, that essentially reduces the amount of deleted data in unallocated space, and I won’t go into the details of how it works, but it’s essentially done to keep the speed of the solid state drive high as it gets full, due to limitations of the SSD technology. So it’s good for people that have solid state drives, it’s not good for forensic investigators that are trying to recover deleted data from unallocated space. And these drives are getting larger and cheaper, so more and more people are adopting them. RAM sizes are increasing, I would say the average size in a laptop now, for, you know, 500 to 600 dollars, is around 4-6 GB, but that is increasing, and RAM tends to follow Moore’s Law in some ways, and the average increase is about 4 to 5 times every 4 to 5 years for RAM, so as time goes by, in another 4-5 years, we could be seeing really large amounts of RAM in just the average home laptop or computer.
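
To put rough numbers on that RAM estimate, here is a small Python sketch that applies the figures quoted above (4-6 GB today, growing 4 to 5 times per 4-5 year period). It is a back-of-the-envelope projection under those assumptions, not a forecast, and the function name is hypothetical.

```python
def project_ram_gb(current_gb: float, growth_factor: float, periods: int = 1) -> float:
    """Project RAM size after a number of growth periods (one period is 4-5 years)."""
    return current_gb * growth_factor ** periods


# Low end: 4 GB growing 4x. High end: 6 GB growing 5x.
low = project_ram_gb(4, 4)
high = project_ram_gb(6, 5)
print(f"{low:g} to {high:g} GB after one period")
```

On those figures an average laptop would have somewhere between 16 and 30 GB of RAM after one period, which is a lot more volatile data to capture on scene.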

Emergence of new applications and artifacts – I don’t think this is news to anyone. The internet landscape changes quickly, sites that are popular today are not popular tomorrow, and vice versa, and artifacts change format or become less plentiful. And Facebook chat’s a good example of that. You used to be able to find thousands and thousands of messages on the hard drive for Facebook chat, and now it’s much less so because of how Facebook has changed, how their chat works. So time is needed to do research on these new artifacts.

The cloud is getting popular – it’s a big buzzword right now, but people are adopting it. If you look at stats, people are signing up for SkyDrive, for Dropbox, iCloud, in very large numbers. And as the cloud is used more, less data gets left on the hard drive, and some applications are starting to store more data on the cloud than on the hard drive. So Yahoo! Messenger is a good example – with their latest version, chats are not stored on the hard drive any more, they’re stored in Yahoo!’s cloud servers. So even if you have logging turned on, you’re not going to find those chat logs on the hard drive any more. Google Talk has been doing that for a long time, and they do it out of a convenience thing for the user.

Encryption is also kind of something that’s growing and becoming more popular. TrueCrypt is free to use, it’s easy to use, if someone wants to put full disk encryption on their laptop, it’s not really that inconvenient or hard to set up any more, and more and more people are using it to protect data, sometimes for legitimate reasons and sometimes not. So it’s a very tough thing to crack if you don’t have the password or if they haven’t used a simple password. So that’s a challenge.

Some considerations for these items. With solid state drives there are some areas on the hard drive that are not affected by trim, and one of those is the master file table. So a lot of times, small bits of data can be found there, and even if they’re deleted, they’re not going to be affected by the trim technology that SSDs have. And [file slack] is also protected that way, and you can find again chat messages, web history, things like that, there are smaller bits of data in file slack, and that’s not going to get wiped by solid state technology. It’s important to remember live files – files that haven’t been deleted. There’s still a lot of great information to be found there, in the page file, in the hibernation file, and those are obviously not affected by the hard drive technology. Live RAM becomes more important as well, as RAM sizes increase and things like solid state drives are becoming popular.

So, I think it’s more important than ever now, if you’re going on the scene and the computer’s on, to start doing those RAM captures, just to have it, even if you’re not sure exactly what you’re looking for there. Once you have that capture, then you can always go back to it and look for data or do keyword searches or run tools on that RAM dump to find data that might be in there. A lot of times there are types of data that don’t get left on the hard drive, they’re only found in RAM. So it’s your only chance to get at some of those, and I think it’s fairly easy to do. FTK Imager has built-in live RAM capture now, and our software has a very easy RAM capture. There’s a command line utility that’s free, called DumpIt, that’s easy to use as well. So it’s a great thing to do if you’re going on scene.
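
A keyword search over a RAM dump, as mentioned above, can be sketched in plain Python. This is a minimal illustration and not how any of the tools named here work internally: it reads the dump in chunks so a large capture does not need to fit in memory, and it carries a small overlap between chunks so a keyword that straddles a chunk boundary is still found. The function name and parameters are hypothetical.

```python
def find_keyword_offsets(path, keyword: bytes, chunk_size: int = 1 << 20):
    """Return the byte offsets of every occurrence of `keyword` in a file."""
    if not keyword:
        raise ValueError("keyword must not be empty")
    overlap = len(keyword) - 1  # bytes carried over between chunks
    offsets = []
    with open(path, "rb") as dump:
        base = 0    # file offset of the first byte in `buf`
        buf = b""
        while True:
            chunk = dump.read(chunk_size)
            if not chunk:
                break
            buf += chunk
            start = 0
            while (idx := buf.find(keyword, start)) != -1:
                offsets.append(base + idx)
                start = idx + 1
            # Keep only the tail that could begin a match spanning two chunks.
            keep = buf[-overlap:] if overlap else b""
            base += len(buf) - len(keep)
            buf = keep
    return offsets
```

For example, `find_keyword_offsets("memdump.raw", b"facebook.com")` would return the offsets of that byte string in a capture file called `memdump.raw` (a hypothetical file name). Text in memory is often stored as UTF-16 as well, so it may be worth searching for `"facebook.com".encode("utf-16-le")` too.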

As I mentioned previously, doing some research is helpful when you’re doing your exams, to know what can be found, what can’t be found. But most people I think are very busy just keeping up with their case backlogs, writing reports, and so on. So I think it’s very important to rely on each other and on your colleagues to get this information. Spending time on discussion forums, blogs, and just getting on the phone with someone that maybe works in another jurisdiction that might have faced the problem you’re facing, that can be a really big resource. And I’ll show you some of the blogs and resources that I think are really great to keep on top of.

With the cloud being more popular and leaving less data on the hard drive, there are still tracks that get left behind when you’re using the cloud, and I showed you that Dropbox example. So that’s a great indicator of activity – you can get filenames, dates and times, modifications, and things like that. And that can be a really great investigative tool, and it can be great for obtaining further information, so maybe for a subpoena or warrant, and getting that original evidence from the cloud provider. So there are still things to be found, and good information that can come from cloud usage.

And finally, live system analysis. So with encryption it becomes more important as well to do that on scene, kind of triage, see what’s on the screen, run a tool to check for encryption and verify that there is no encryption before pulling the plug. And if there is, then you have your opportunity to do a live image of the drive using FTK Imager or some other tool. It’s not the most optimal situation, but it’s better than unplugging the computer or pulling the battery and going back to the lab and having nothing. And we ran into a situation like this when I was working with the police, where we’d got a search warrant, and the user did have TrueCrypt, full disk encryption on the laptop. And by checking for encryption before pulling the battery, we were able to realize that the encryption was there, and imaged the drive before shutting down, and there was a lot of really great data that came out of that case. So it’s something to think about.

This is a screenshot of the RAM capture that our triage product has, and it’s as simple as just browsing to where you want to save the RAM dump and clicking Start. And it’ll run on 32-bit or 64-bit systems, and we’ve tested it on systems up to 96 GB of RAM, without any issues. So it’s something to think about doing when you’re going on scene. Here’s just some recommended sites and blogs that I try to stay on top of and I think that a lot of people know about and respect, and these people have volunteered their time to do research and to share it with the community, and I think it’s a really great resource for everyone, as well as the mailing lists that are at the bottom there. You know, lot of great people that are willing to volunteer their time to help each other out. So those are good things to kind of bookmark and go to every so often.

That’s all we have today. Just wanted to provide a couple of links there to get a trial of our internet evidence finder software, we’ll send you a key to get full functionality for a couple of weeks, so you can try it out on a case that you’re working on now, or maybe something that you’ve worked on in the past. And we’re also offering a 10% discount until August 1st for people who’ve watched the webinar. So just contact our sales team for details on that, and they’ll help you out.

So, I do want to thank you for your time today, for watching this little presentation, and hopefully you found it useful in some way. I also want to really thank Jamie from Forensic Focus for putting this on and for all his help and time. So thank you very much.

Jamie: Jad, that was great, thank you very much indeed.

Now, a few questions came in yesterday while we were recording the live version. Would it be okay if we just ran through one or two of them again just now?

Jad: Sure, that sounds good.

Jamie: Okay, let me bring those up on my screen here. I think the first one you touched on just at the end of the presentation, but it’s – can you recommend a solution to acquire RAM and page file?

Jad: So yeah… FTK Imager is a great tool, it’s free. I think everyone is familiar with it. It’s been around for a while and it’s great for imaging, but it can do RAM captures as well now. I mentioned our software and showed a screenshot there, and the DumpIt utility. I don’t have a link for it here, but if you just google that you can find it, and it’s a command line tool, small footprint and easy to use as well.

Jamie: Okay, second question – in IEF, is there a way to use distributed computing to accelerate processing?

Jad: Okay, we don’t have support for distributed computing at this time. We are looking at that, and we are also adding multi-threading to the software so we can take advantage of all the CPU cores that you might have on your workstation. With the latest release we have increased speed, in some cases 50 to 75%, so we’re really optimizing things and taking advantage of a new platform that we’re on now, for the software. So it is much faster now, even without doing any of that kind of stuff yet, but we are looking at distributed computing and multi-threading.

Jamie: Okay, great. And then just one final question – are cloud storage providers taking any proactive steps to monitor the content of storage, such as hash analysis for known CP images?

Jad: Yeah, so, I don’t know if they’re being that clever yet, I think the day is coming and I think they are starting to be more cognizant of these kinds of things and that they do have a bit of a responsibility to monitor some of the data. I do know that they are looking at data that gets uploaded. We had a case that involved Flickr where Flickr had actually contacted our agency saying that someone in our area had uploaded indecent images of children on to his Flickr account. So I’m not sure how they came to finding those files or being aware of them, but obviously they are keeping tabs on the files in some way.
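
For readers unfamiliar with the term in the question, hash analysis in general means computing a cryptographic hash of each file and comparing it against a set of hashes of known files. The Python sketch below shows that general idea only. It is not a description of what any cloud provider does, and the function names and the choice of SHA-256 are assumptions made for illustration.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_known_files(root, known_hashes):
    """Return paths under `root` whose SHA-256 digest is in `known_hashes`."""
    matches = []
    for path in sorted(Path(root).rglob("*")):
        if path.is_file() and sha256_of_file(path) in known_hashes:
            matches.append(path)
    return matches
```

An exact hash match only catches byte-for-byte copies of a known file, so a resized or re-saved image would not match with this approach.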

Jamie: Alright, well, that was the last question. I think it just remains for me to thank Jad once again for re-recording today’s webinar, but also to thank everyone who joined us, whether you were listening live yesterday or you’re listening to this recording at a later date. I hope you found it useful. As always, if you want to give me any feedback, you can contact me by email on admin@forensicfocus.com, or you can use the contact form at the Forensic Focus site.