In this video from DFRWS-EU 2022, Jenny Ottmann revisits the discussion on quality criteria for “forensically sound” acquisition of main memory and proposes a new way to capture the intent to acquire an instantaneous snapshot from a single target system: one that allows a certain flexibility in when individual portions of memory are acquired, but at the same time requires consistency with causality (i.e., cause/effect relations).

Jenny Ottmann: Today, I’m going to talk about the definitions we developed in our paper for the quality criteria of memory images, or snapshots; I’ll use these two terms interchangeably. A small warning: this is going to be far more theoretical than the talk we just heard, but I’ll try to keep it from getting too mathematical.

The motivation for this topic is best understood by looking at main memory analysis. When we analyze main memory images, we often want to know things like which processes were running, what was in their process memory, and which network connections were open.

For this information, we rely on data structures used by the operating system. In the case of the Linux kernel, for example, this is the task list.

This is all great if the data structures are consistent, but often main memory images cannot be taken from a system in a frozen state. While the image is being taken, concurrent processes make changes to the memory.

One effect of this, which many people may have heard about, is page smearing: inconsistencies between the entries in the page tables and the actual contents of the page frames. A simplified example can be seen here, with two memory regions, r1 and r2, on the Y axis and the progression of time on the X axis.

Our memory acquisition process starts and first acquires the first region, here in the orange square. Then, due to concurrent activity, the acquisition process stalls while other things happen, and suddenly we get a new list element, for example in our task list, and a pointer to this new element.

Now we acquire the second region, which contains the pointer to the list element. This means our memory image holds an inconsistent state: a pointer, for example a next pointer, that points to a memory region containing random data or something like an older list element. This is a state that never existed in memory.

Because this issue is not new, people have been thinking about what defines the quality of a memory image, especially in situations where we can’t freeze the system. Ten years ago, Vömel and Freiling came up with three quality criteria: atomicity, correctness, and integrity.

Briefly, atomicity looks at the influence of concurrent activity on the quality of the memory image; correctness asks whether the acquisition process actually works correctly, that is, whether everything that is there is copied and all values are copied correctly; and integrity, the last one, captures the degree to which the memory acquisition program itself influenced the memory contents.

A few years later, more recently, Pagani and others criticized atomicity in particular for being difficult to evaluate in practice and instead suggested another criterion, which they call time consistency. They, too, consider these inconsistencies during memory acquisition a big problem that strongly affects the quality of memory analysis.

So instead of focusing on concurrent activity or on causal relationships, as the atomicity criterion does, time consistency asks whether the contents of our memory image coexisted like this in memory at some point in time; that is, whether an instantaneous snapshot could have returned the same contents.

This motivated us to rethink the criteria that Vömel and Freiling formulated, and perhaps also to integrate the concept of time consistency into the formalizations, because we think this abstract way of thinking about the issue might help identify interesting ways to evaluate the quality of memory acquisition tools.

In the following, I’m going to talk first about atomicity and then about integrity, and how they are interconnected. For this, we need a model and a way to visualize memory. The model used by Vömel and Freiling is influenced by concepts from distributed computing.

What we see here are two memory regions, r1 and r2, as in the example at the beginning, and the arrows denote how they change over time. The contents of memory regions are changed by processes; we see p1 and p2 in this example. Each time a process accesses a memory region, for example with a read or a write, this is denoted as an event.

Events are ordered by time, of course. So in r2, we see that e2 happened before e3. But there is a second kind of connection as well, namely when a process first accesses one region and then another, as here between e1 and e3. This happened-before relation is also a causal relationship, or at least a potential one: e1 could actually be the cause of e3.
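To make the happened-before relation concrete, here is a minimal Python sketch. It assumes, as our reading of the diagram rather than the paper’s exact formalization, that two accesses are directly ordered when they touch the same region or are made by the same process, and it then takes the transitive closure of those edges. The `Event` class, its fields, and the `happened_before` function are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    name: str
    process: str  # process that performs the access
    region: str   # memory region that is accessed
    time: int     # global time, used only to order events


def happened_before(events):
    """Return all pairs (a, b) such that event a happened before event b."""
    # Direct edges: a precedes b on the same region or in the same process.
    hb = {
        (a.name, b.name)
        for a in events
        for b in events
        if a.time < b.time and (a.region == b.region or a.process == b.process)
    }
    # Transitive closure (Warshall's algorithm).
    names = [e.name for e in events]
    for k in names:
        for i in names:
            for j in names:
                if (i, k) in hb and (k, j) in hb:
                    hb.add((i, j))
    return hb


events = [
    Event("e1", process="p1", region="r1", time=1),
    Event("e2", process="p2", region="r2", time=2),
    Event("e3", process="p1", region="r2", time=3),
]
hb = happened_before(events)
print(sorted(hb))  # [('e1', 'e3'), ('e2', 'e3')]
```

Here e1 and e2 are unordered, so they are concurrent, while both happened before e3: e1 through the process p1, and e2 through the region r2.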

These arrows become interesting later for atomicity. But what does it look like when we take a snapshot in our model? A memory snapshot, or memory image, is a cut through the space-time diagram; that is the diagram I have been describing. Here, our cut is the orange line: from its point of view, everything to the left of the cut lies in the past and everything to the right lies in the future.

These space-time diagrams will keep us company for the rest of this talk. Let’s first look at atomicity, or, more generally, consistency. The ideal case can be seen here: we have our two memory regions, and we take the snapshot of both at exactly the same time.

In this model, the acquisition process needs to acquire not only the memory contents but also the time at which they were acquired. So in this ideal snapshot, the saved time is the same for both memory regions.

But as we have seen, these ideal circumstances often cannot be achieved. Instead, taking the snapshot may take some time. Here we see that region one is copied earlier than region two.

What Vömel and Freiling called atomicity could also be called causal consistency, because the deciding factor is whether causal relationships, like the one here between e1 and e2, are completely contained in the snapshot: for every effect, or possible effect, the snapshot must also contain its possible cause.

Here everything is okay because we have both the cause, e1, and the effect, e2. But in this example we see a snapshot that is not atomic. Here we first acquire region one, then event e1 happens, then event e2, and then region two is acquired.

Again, this could happen because of concurrent activity or, in a more realistic example, because there are many memory regions in between, so it simply takes some time until we get to the causally connected region.

In this example, event e1 lies in the future from the perspective of the snapshot, or memory image, and e2 lies in the past.

So now an event that, from the perspective of the snapshot, lies in the future influences an event that lies in the past. This can have consequences like those we saw at the beginning.

Imagine that e1 actually added a new list element and e2 changed an existing pointer to point to this element: we would be missing this connection, and in the worst case we would get a pointer to a wrong list element or some other confusing value.
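A minimal sketch of the causal consistency check, assuming a snapshot is represented by its cut, a mapping from each region to the time it was acquired, and that the happened-before pairs are already known. An event lies in the cut’s past if it occurred before its region was copied. All names and times are hypothetical and only mirror the list example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    name: str
    region: str
    time: int


def in_past(event, cut):
    """An event lies in the cut's past if it happened before its region was copied."""
    return event.time < cut[event.region]


def is_causally_consistent(events, cut, hb):
    """Every cause of an effect in the cut's past must also lie in the past."""
    by_name = {e.name: e for e in events}
    return all(
        in_past(by_name[cause], cut) or not in_past(by_name[effect], cut)
        for cause, effect in hb
    )


# List example: e1 allocates a new element in r1, e2 then links it in r2.
events = [Event("e1", "r1", time=2), Event("e2", "r2", time=3)]
hb = {("e1", "e2")}  # same process, so e1 happened before e2

ideal = {"r1": 5, "r2": 5}    # both regions copied at the same instant
smeared = {"r1": 1, "r2": 4}  # r1 copied before e1, r2 copied after e2

print(is_causally_consistent(events, ideal, hb))    # True
print(is_causally_consistent(events, smeared, hb))  # False
```

In the smeared cut, the effect e2 is in the past while its cause e1 is in the future, which is exactly the dangling-pointer situation.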

So that is one way to think about it: a causal model. Another way is to ask which memory contents coexisted in memory at a certain point in time. This is what we call quasi-instantaneous consistency. Instead of looking at causal dependencies between events, we check whether the memory contents ever coexisted in memory.

In this example, the snapshot first copies r2 and then r1, and in between two events happen, e1 and e2. To check whether the snapshot is quasi-instantaneously consistent, we search for a point in time at which an instantaneous snapshot, one taken while the system is frozen, would have produced the same result. In this example, that could be the gray line here.

So what does it look like if the snapshot isn’t consistent? Here we also first acquire region two and then region one, but the problem is that event e1 happens after region two has already been copied, then event e2 happens, and only then is region one copied.

So, at least in the timeframe we are observing, there is no state in which the memory contents produced by event e2 coexisted with memory contents from before e1 happened. Therefore we get a violation, and this could again result in something like the list inconsistency, where we end up missing a link or seeing wrong links.
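The search for a hypothetical instantaneous snapshot can be sketched as follows, assuming each region’s history is a time-sorted list of `(time, value)` pairs that starts at time 0. Because contents only change when a write happens, it is enough to test the write times within the observed timeframe as candidate instants. The function names and both scenarios are illustrative assumptions, not the paper’s formalization.

```python
from bisect import bisect_right


def value_at(history, t):
    """Contents of a region at time t; history is a sorted list of (time, value)."""
    times = [time for time, _ in history]
    return history[bisect_right(times, t) - 1][1]


def take_snapshot(histories, acquired_at):
    """Copy each region at its own acquisition time."""
    return {r: value_at(h, acquired_at[r]) for r, h in histories.items()}


def witness_instant(histories, snapshot):
    """Return an instant at which all snapshot contents coexisted, or None."""
    # Contents only change at write times, so those are the only candidates.
    candidates = sorted({time for h in histories.values() for time, _ in h})
    for t in candidates:
        if all(value_at(h, t) == snapshot[r] for r, h in histories.items()):
            return t
    return None


# Consistent case: e1 writes r1 at time 2, e2 writes r2 at time 3.
consistent = {"r1": [(0, "a0"), (2, "a1")], "r2": [(0, "b0"), (3, "b1")]}
# Inconsistent case: e1 writes r2 at time 2, e2 writes r1 at time 3.
smeared = {"r1": [(0, "a0"), (3, "a1")], "r2": [(0, "b0"), (2, "b1")]}
acquired_at = {"r2": 1, "r1": 4}  # r2 is copied first, then r1

print(witness_instant(consistent, take_snapshot(consistent, acquired_at)))  # 2
print(witness_instant(smeared, take_snapshot(smeared, acquired_at)))        # None
```

In the first history the snapshot matches the state of memory at time 2; in the second, r2 is copied before e1 while r1 already reflects e2, so no instant fits.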

These are our consistency definitions, and they are connected to each other. One unsurprising result is that instantaneous consistency implies both quasi-instantaneous consistency and causal consistency.

This is because if we freeze the system and take the memory snapshot, copying every region at the same time, then the point in time at which an instantaneous snapshot would have produced the same result is exactly that point in time.

And if the cut is a vertical line, there can also be no arrows from the future into the past. More interesting is the relationship between quasi-instantaneous consistency and causal consistency.

Here we see an example of a snapshot that is atomic but not quasi-instantaneous. It’s a very simple example: it must be atomic because there is no causal relationship between e1 and e2. As far as causality is concerned, the two processes are concurrent.

But it’s the same example we saw earlier for a snapshot that is not quasi-instantaneous: we cannot find a hypothetical instantaneous snapshot that would have produced the same result as what we see in our snapshot.

But what about the other way around? Is every quasi-instantaneous snapshot also causally consistent? For this, we need to consider something I have mostly ignored so far: events can be read or write events. When we think about the implications between the definitions, we first need to decide which kinds of events we are observing, or want to observe.

In this example, if e1 is only a read access, the snapshot is not causally consistent because the cause of e2 is missing. But it is still quasi-instantaneously consistent, because a read doesn’t change the memory contents, so we can find a hypothetical instantaneous snapshot that would have produced the same contents, for example the gray line here.

If we say that we are only observing modifying events, the situation changes. In this case, if e1 happens, the snapshot is neither causally consistent nor quasi-instantaneously consistent. So we know that every time such a cause is missing, we will actually notice it when we search for the hypothetical instantaneous snapshot.
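The following sketch, under the same kind of simplified model, marks each event as a read or a write and runs both checks on this example: r1 is copied first, e1 touches r1, e2 writes r2, and r2 is copied last. It assumes the happened-before pair (e1, e2) is given, integer times, and that a region’s copy at time t reflects writes strictly before t; the name of the last write stands in for the value it stored. Every identifier is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    name: str
    region: str
    time: int
    is_write: bool


def contents(region, t, events, initial):
    """Contents of a region at time t: the latest write strictly before t wins."""
    writes = [e for e in events if e.region == region and e.is_write and e.time < t]
    # The name of the last write stands in for the value it stored.
    return max(writes, key=lambda e: e.time).name if writes else initial[region]


def causally_consistent(events, cut, hb):
    past = {e.name for e in events if e.time < cut[e.region]}
    return all(cause in past or effect not in past for cause, effect in hb)


def quasi_instantaneous(events, cut, initial):
    snapshot = {r: contents(r, t, events, initial) for r, t in cut.items()}
    # Times are integers, so e.time + 1 is the first instant that includes e.
    candidates = [0] + [e.time + 1 for e in events]
    return any(
        all(contents(r, t, events, initial) == v for r, v in snapshot.items())
        for t in candidates
    )


initial = {"r1": "a0", "r2": "b0"}
cut = {"r1": 1, "r2": 4}  # r1 is copied first, r2 last
hb = {("e1", "e2")}       # e1 may have caused e2

for e1_writes in (False, True):
    events = [Event("e1", "r1", 2, e1_writes), Event("e2", "r2", 3, True)]
    print(e1_writes,
          causally_consistent(events, cut, hb),
          quasi_instantaneous(events, cut, initial))
# False False True
# True False False
```

With a read-only e1, the missing cause violates causal consistency but leaves quasi-instantaneous consistency intact; once e1 writes, both checks fail.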

There’s just one limitation to this. We also need to be sure that after e2 in the example has happened, no further events change the memory contents of r1 back to their original state, because in that case we could miss the fact that causal consistency is no longer achieved.

All right. Now that we have thought a lot about consistency, let’s change focus a bit and move on to integrity. As I said, consistency is mostly about the influence of concurrent activity on the snapshot contents. Integrity, on the other hand, is about the influence of the acquisition program itself on the memory contents.

Vömel and Freiling defined integrity with respect to a point in time t, which could, for example, be the time at which the acquisition program started. Put simply, they said that between t and the acquisition of each memory region, no events are allowed to happen.

More precisely, no modifying events are allowed to happen: if e1 here happened, this snapshot would no longer satisfy integrity. We call this restrictive integrity because it doesn’t allow any modifications to occur, but we thought there might be use cases for a more relaxed definition.

It is still defined with respect to a point in time t, but modifications are allowed as long as, when the contents of a memory region are finally acquired, they are the original contents that existed in memory at time t. This may seem a little arbitrary, but think of cases in which we acquire memory from an outside source but still cannot freeze the system.

For example, if we operate at a higher privilege level than the operating system, we might have to change parts of memory but also be able to change them back before they are acquired.

The integrity definitions are also connected to each other. One obvious relation is that restrictive integrity implies permissive integrity: if no events at all are allowed to change memory contents, then we know we are acquiring exactly the contents that existed in memory at time t.

Then there is another implication, namely between integrity and correctness. I haven’t talked about correctness today, but this one is easy to understand, because both integrity definitions compare the snapshot contents to the actual memory contents.

So if our acquisition program worked incorrectly, for example by not acquiring all memory regions or by acquiring ones where there are zeros, the snapshot would never be evaluated as satisfying integrity. Therefore we can evaluate integrity alone and don’t need a separate evaluation for correctness.
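Both integrity variants can be sketched as predicates over region histories. This assumes t = 0 is the start of the acquisition program and, as our interpretation of the talk, that restrictive integrity means permissive integrity plus the absence of writes between t and each region’s acquisition. The scenario of a privileged tool that modifies r1 and restores it, and the faulty tool that never copies r2, are invented for illustration.

```python
def value_at(history, t):
    """Contents of a region at time t; history is a time-sorted list of (time, value)."""
    return [v for time, v in history if time <= t][-1]


def permissive_integrity(histories, snapshot, t):
    """Every copied region holds exactly what it contained at time t."""
    return all(snapshot[r] == value_at(h, t) for r, h in histories.items())


def restrictive_integrity(histories, acquired_at, snapshot, t):
    """Additionally, no write may hit a region between t and the moment it is copied."""
    untouched = all(
        not any(t < time <= acquired_at[r] for time, _ in h)
        for r, h in histories.items()
    )
    return untouched and permissive_integrity(histories, snapshot, t)


# r1 is modified by the acquisition tool at time 2 and restored at time 3.
histories = {"r1": [(0, "x"), (2, "tool data"), (3, "x")], "r2": [(0, "y")]}
acquired_at = {"r2": 1, "r1": 5}
snapshot = {r: value_at(h, acquired_at[r]) for r, h in histories.items()}
faulty = {"r1": "x", "r2": None}  # a broken tool that never copied r2

print(permissive_integrity(histories, snapshot, t=0))                # True
print(restrictive_integrity(histories, acquired_at, snapshot, t=0))  # False
print(permissive_integrity(histories, faulty, t=0))                  # False
```

The temporary modification only breaks the restrictive variant, while the faulty copy fails integrity outright, which is why a separate correctness check is unnecessary in this model.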

Lastly, integrity and consistency are also related. Let’s first look at the relation between integrity and quasi-instantaneous consistency. Here we see a snapshot that is quasi-instantaneously consistent because we can find a point in time at which these memory contents coexisted in memory, for example here, the gray line.

But assuming that these events actually changed the memory contents, integrity with respect to t isn’t satisfied. So not every quasi-instantaneously consistent snapshot satisfies integrity.

The other way around, however, we do have an implication. If a snapshot satisfies integrity, it must be at least quasi-instantaneously consistent, because under both integrity definitions the acquired values are those that existed in memory at time t.

So t is exactly the gray line we are searching for: the point at which a hypothetical instantaneous snapshot would have had the same memory contents as our snapshot. So we get this implication here.
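Written out, the argument is a one-line existence proof. In the notation below, which is ours rather than the paper’s, $S(r)$ is the content the snapshot holds for region $r$, $M_t(r)$ is the content of $r$ in memory at time $t$, $\mathrm{PI}_t$ is permissive integrity with respect to $t$, and $\mathrm{QI}$ is quasi-instantaneous consistency. Since restrictive integrity implies permissive integrity, the permissive case suffices.

```latex
\begin{aligned}
\mathrm{PI}_t(S) &:\iff \forall r:\ S(r) = M_t(r) \\
\mathrm{QI}(S)   &:\iff \exists t'\ \forall r:\ S(r) = M_{t'}(r) \\
\mathrm{PI}_t(S) &\implies \mathrm{QI}(S) \qquad \text{(witness } t' = t\text{)}
\end{aligned}
```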

As I said, the implication between quasi-instantaneous consistency and causal consistency is limited by which kinds of events are observed. Because of this, the implication between integrity and causal consistency also only holds if we apply the same limitation.

There is one more connection: restrictive integrity implies causal consistency if we are only observing modifying events, without any further limitations, because if no modifying event happens at all, there can be no missing causes of effects.

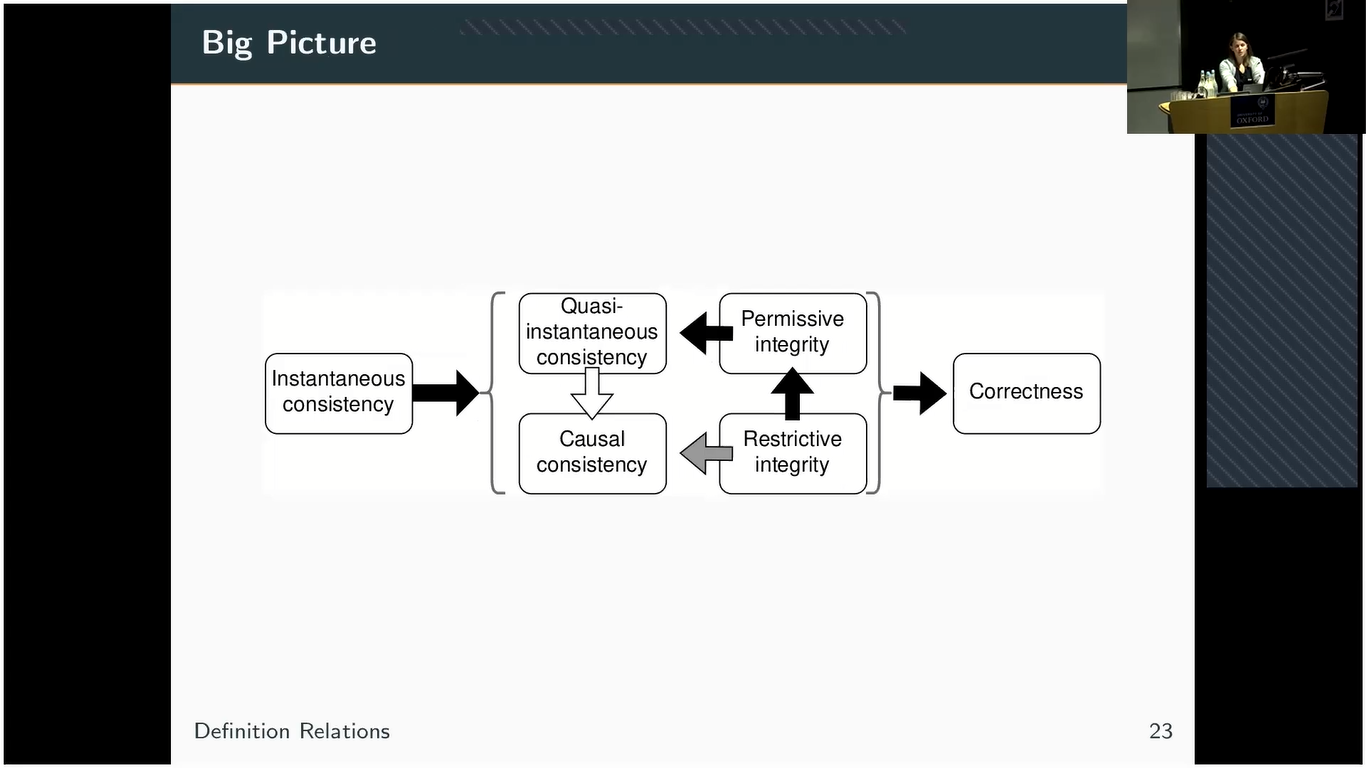

So, in the end, this leads us to the big picture. What becomes clear from it is that it’s really difficult to define consistency completely independently of integrity, which might be a weakness. It also shows that if we can evaluate integrity, we don’t have to evaluate correctness separately.
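The big picture can be encoded as a small directed graph of the implications stated in this talk, as we read them; chaining edges reproduces derived results, such as restrictive integrity implying correctness. Edges that only hold under the event-observation limitation carry a condition and are excluded unless explicitly assumed. The node labels, the `IMPLICATIONS` table, and the `closure` function are our own illustrative choices.

```python
# Implications stated in the talk, as (premise, conclusion, condition).
# A condition of None means the implication holds unconditionally.
IMPLICATIONS = [
    ("instantaneous", "quasi-instantaneous", None),
    ("instantaneous", "causal", None),
    ("quasi-instantaneous", "causal", "only modifying events observed, no restoring writes"),
    ("restrictive integrity", "permissive integrity", None),
    ("permissive integrity", "correctness", None),
    ("permissive integrity", "quasi-instantaneous", None),
    ("restrictive integrity", "causal", "only modifying events observed"),
]


def closure(assume_conditions=False):
    """All (premise, conclusion) pairs derivable by chaining implications."""
    edges = {(a, b) for a, b, cond in IMPLICATIONS if cond is None or assume_conditions}
    changed = True
    while changed:
        new = {(a, d) for a, b in edges for c, d in edges if b == c} - edges
        edges |= new
        changed = bool(new)
    return edges


print(("restrictive integrity", "correctness") in closure())  # True
print(("permissive integrity", "causal") in closure())        # False
print(("permissive integrity", "causal") in closure(True))    # True
```

The last two lines show why integrity only implies causal consistency under the limitation: the chain runs through the conditional edge from quasi-instantaneous to causal consistency.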

Okay, those were the main definitions we came up with. Now that we have worked in this abstract theoretical realm for a while, it would of course be interesting to move a bit closer to the real world again.

As future work, we would like to perform practical tool evaluations using the two consistency definitions, causal consistency and quasi-instantaneous consistency. We may also take another look at integrity, because prior investigations into it could perhaps be improved.

Thank you for your attention. I’m happy to take any questions you have.

Session Chair: Thank you very much. So are there any questions in the audience or in the chat? Yes, there is one.

Audience Member 1: Thank you for your presentation and the good explanation of integrity. I was wondering whether you take into account what I think is an inversely proportional relation between relevance and volatility? Typically, things that change a lot are forensically not that relevant. How do you see that?

Jenny: You mean…sorry.

Audience Member 1: Well, if a trace is only in memory for, let’s say, microseconds, I typically find it’s not that relevant on the scale of user activity, which is more about chats or killing or theft. So the relevance of very short-lived traces in the scope of acquisition is limited. What are your views on that?

Jenny: I think that’s true. This was mainly an abstracted view of these concepts. Still, I think it’s interesting to understand what’s happening: if you only have a few seconds to take a memory image, what can you get, how likely is it that you end up with a memory snapshot you can actually work with and analyze well, and what can you do to increase its quality? I’d be happy to talk about this more later if you’re interested.

Session Chair: Okay. Another question? Actually, I have one myself. You talked about doing practical tool evaluations. Do you already have a plan for how to proceed?

Jenny: Kind of. We have been discussing this quite a bit, because especially in the case of atomicity it is difficult. If you think about these causal relationships, you can’t really track all the processes of a system, at least a realistic one, and see how they interact with all the memory regions and how these connections arise. It is possible, but I think it takes a lot of time.

So we have been thinking about taking only a part of the system, focusing on a certain part of the operating system’s memory, or perhaps moving to some kind of test program where we control everything. Then we could do test runs in which we evaluate how many inconsistencies we get in that small part and try to connect this to other inconsistency indicators, for example when we use Volatility and see that the process list is inconsistent with another source for how many processes were running.

Session Chair: Okay. Thank you very much. Yes, there is another question.

Audience Member 2: Thank you for that. Have you done any research into targeted acquisition of areas of memory? Rather than doing a linear acquisition, starting at zero and going to the end, is there any benefit to jumping around within memory to image parts of it and gain atomicity?

Jenny: We haven’t done any research or experiments in this direction, but Pagani and others, in the paper I mentioned, suggested that instead of performing a linear acquisition, we start with memory areas we know are important.

For example, the kernel memory where the process lists and so on are located, and then continue with the rest of the address space. So, starting with the important things and then continuing. I think that’s a good idea and should help quite a bit.

Session Chair: Okay, there’s another one. Yes. Give me a second.

Audience Member 3: Hello. There is a lot going on in memory when you try to acquire a memory image, so how do you identify the events that cause inconsistencies, given that there could be a lot of events going on? Would you create a profile or something like that to determine that if this has happened, integrity breaks, and if it hasn’t, it doesn’t?

Jenny: So you mean trying to define situations that make certain types of inconsistency more likely? I think that’s an interesting idea. I’m not exactly sure at the moment how we would go about it, but yes.

Audience Member 3: Yes, because it’s about how you measure all these different properties, right? If you cannot identify the events, when they happen, and whether they cause inconsistencies, then measuring those properties would be very hard, even impractical.

Jenny: Yes, that is actually a problem when you think about, for example, these process list inconsistencies. In theory you can also get them in a frozen system, because if you freeze it at the wrong time, the operating system might be in the middle of changing something.

Then you also get a pointer that points to unhelpful or old data. So maybe it makes sense to work with a virtualized environment and compare an actually instantaneous snapshot to one acquired over time, to get an idea of this.

But I think it’s an interesting topic to think about, and maybe we can talk about it a bit more later.

Audience Member 3: Thank you.

Session Chair: Thank you very much. So we will now start our next coffee break. We will meet again at half past three in this room or virtual. See you then.