The remarkable progress of artificial intelligence is changing many aspects of our lives. Recent large language models can generate entire essays that can be indistinguishable from those written by humans. Likewise, off-the-shelf software can now synthesize visually realistic but entirely fake photos from a simple text prompt. This strongly undermines the credibility of imagery as admissible evidence that something actually happened in real life.

The technology behind so-called “diffusion-based” image synthesis solutions, such as Midjourney, Dall-E and Stable Diffusion, is very advanced and beyond the scope of this short article. In a nutshell, it relies on deep neural networks and two processes. The forward diffusion process progressively adds noise to an image until only noise remains. The reverse diffusion process starts from noise and removes it step by step, recovering progressively cleaner intermediate versions of the image. This denoising can be conditioned on external data, such as a text prompt, which allows the user to “steer” the reconstruction towards a specific semantic concept, for example “a dog sitting on a ball in the middle of the street”. Here’s what that prompt produced on Midjourney (in approximately 30 seconds).
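To make the forward process concrete, the sketch below implements the closed-form noising step popularized by the original DDPM work: `x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise`. Several choices here are illustrative assumptions and are not claimed to match any of the tools mentioned above: the linear noise schedule (1,000 steps, beta from 1e-4 to 0.02), the function names, and the random 64×64 array standing in for an image. The reverse process needs a trained neural network and is not shown.

```python
import numpy as np


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t (assumed linear schedule)."""
    return np.linspace(beta_start, beta_end, num_steps)


def forward_diffuse(x0, t, alpha_bar, rng):
    """Sample x_t from q(x_t | x_0) = N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I)."""
    noise = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise


betas = linear_beta_schedule()
# Cumulative product: scaling factor of the original signal variance at step t.
alpha_bar = np.cumprod(1.0 - betas)

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(64, 64))  # stand-in "image", pixels scaled to [-1, 1]

for t in (0, 250, 500, 999):
    xt = forward_diffuse(x0, t, alpha_bar, rng)
    # Correlation with the original image falls towards 0 as noise takes over.
    corr = np.corrcoef(x0.ravel(), xt.ravel())[0, 1]
    print(f"t={t:4d}  alpha_bar={alpha_bar[t]:.5f}  corr(x0, xt)={corr:.3f}")
```

Running it shows `alpha_bar` shrinking from almost 1 to almost 0, meaning the image is gradually replaced by noise. A generator is trained to run this process backwards, optionally conditioned on a text prompt.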

One way diffusion-based image generators can convert text into an image is to map both image pixels and text prompts into a common “semantic latent space”. When you provide a text prompt, the model can interpret the meaning of the words in that space and decode it into an image. Furthermore, multiple textual concepts can be combined within the latent space and then decoded to obtain an image in which those concepts appear together, just like “dog”, “ball”, and “street” in the example above. In loose terms, the brain works in a similar way: whether you read the word “cat” or see a picture of a cat, the same neurons associated with the concept of “cat” will eventually be stimulated.

Detecting AI-Generated Images

Moving on from image creation, how can we detect whether an image is AI-synthesized or real? Academic researchers are trying many different approaches, most of which rely on AI to classify an image as real or synthesized. This is an interesting approach, and Amped Software is also conducting research in this area. Nevertheless, a widespread issue affects all AI-based detectors, even the most accurate ones: once they provide an answer, that’s the end of the process. You either trust it or you don’t, because no explanation is offered of how that conclusion was reached.

Instead, this article focuses on a different approach: using geometrical analysis to check whether elements in the image are consistent with each other. When creating images, diffusion models may struggle to preserve the physical and geometrical consistency of the scene. Therefore, if the inspected image meets the required criteria, Amped Authenticate can be used to quickly spot anomalies and reach a robust decision. Two approaches are presented here, shadow analysis and reflection analysis, although others could also be considered.

Revealing AI-Generated Images Via Shadow Analysis

Amped discussed how to use Amped Authenticate’s Shadows filter in a previous How-to article. However, for the sake of clarity, a brief refresher follows. First of all, let us establish the working hypotheses: this tool is based on published research [Kee14] and can be applied to images in which the scene is known to be lit by a single, point-like light source. For instance, it can be used for outdoor images captured on a sunny day, or for indoor images where the scene is illuminated by a single light bulb or spotlight. LED strips, multi-bulb chandeliers and similar extended or multiple light sources are ruled out.

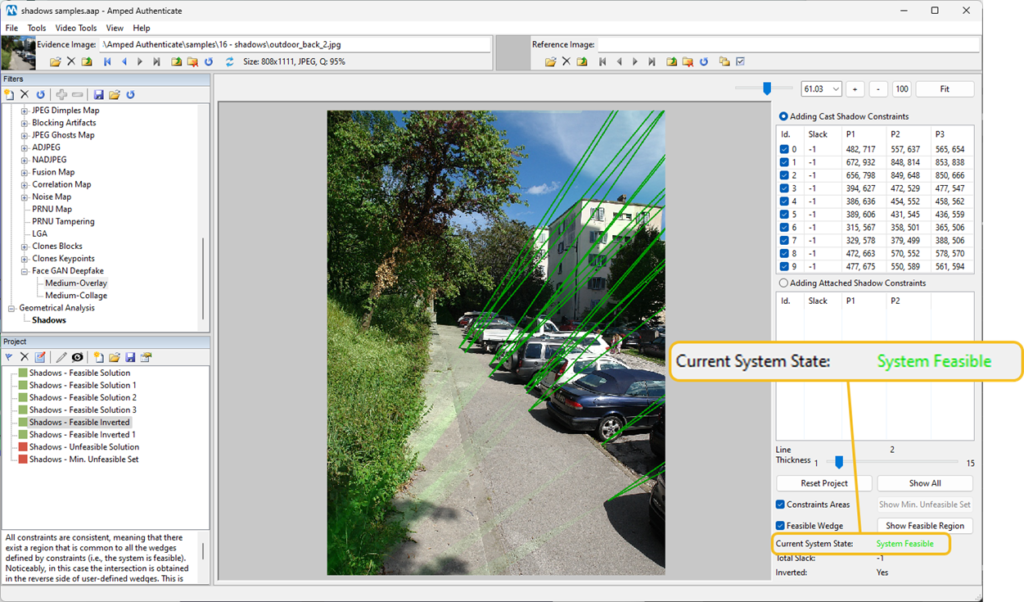

Now, let’s perform a shadow analysis on this image and treat it as a case study:

The image is loaded into Amped Authenticate and the Shadows filter is selected from the Geometrical Analysis category.

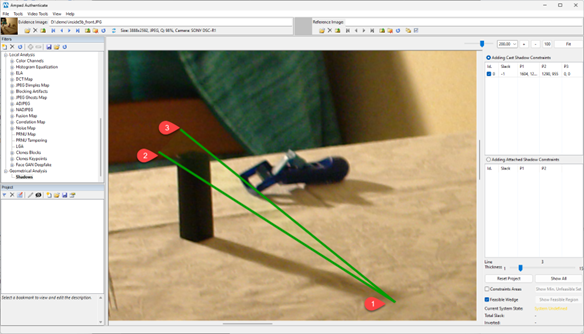

Let’s tick the “Constraints Area” checkbox and click on the tip of a shadow. Then, let’s click on two additional points on the object, bounding the part of it that could have cast that shadow point (its “dual source”), thus defining a green wedge:

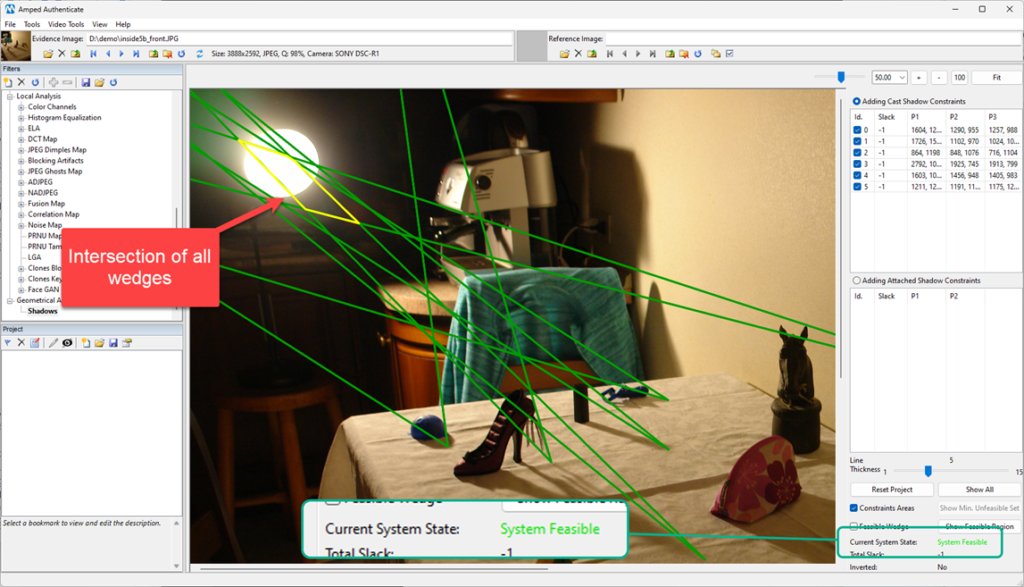

The same operation is repeated for several shadows, each time adding a new constraint and thus a new wedge. For an authentic image, all wedges are expected to intersect in a common region (technically, the “system is feasible”). This region should contain the projection of the light source onto the image plane. If you’re lucky enough to have the light source visible in the image, then the admissible region must contain it. This is indeed the case in this example, as shown in the inset of the example below:

However, the tool can be safely used even when the light source is not visible in the image, as in the example below, where the system is still feasible.

For a complete guide on how best to configure and use the Shadows tool, Amped recommends the article they published a few months ago.
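Each wedge amounts to two linear inequalities on the unknown projected light position, so checking whether all wedges share a common region is a linear feasibility problem, following the linear-constraint formulation of [Kee14]. The sketch below illustrates that idea and is not Amped Authenticate’s implementation. It assumes each constraint is given as a shadow point plus two object points in pixel coordinates, and that the light source is in front of the camera (a light behind the camera flips the wedges, a case this sketch does not handle). It uses SciPy’s `linprog`; the function names and the toy coordinates are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog


def _cross(u, v):
    """z-component of the 2D cross product u x v."""
    return u[0] * v[1] - u[1] * v[0]


def wedge_halfplanes(shadow_pt, obj_pt1, obj_pt2):
    """Return (A, b) such that a point x lies inside the wedge iff A @ x <= b.

    The wedge has its apex at the shadow point; its two sides pass through
    the object points bounding the region that may have cast that shadow.
    """
    s = np.asarray(shadow_pt, dtype=float)
    d1 = np.asarray(obj_pt1, dtype=float) - s
    d2 = np.asarray(obj_pt2, dtype=float) - s
    sign = np.sign(_cross(d1, d2))  # +1 if d2 is counter-clockwise from d1
    if sign == 0:
        raise ValueError("the object points are collinear with the shadow point")
    # Inside: sign * cross(d1, x - s) >= 0 and sign * cross(x - s, d2) >= 0.
    # Both are linear in x; rewrite them in linprog's "A @ x <= b" form.
    A = np.array([
        [sign * d1[1], -sign * d1[0]],
        [-sign * d2[1], sign * d2[0]],
    ])
    b = np.array([-sign * _cross(d1, s), -sign * _cross(s, d2)])
    return A, b


def shadows_feasible(constraints):
    """constraints: list of (shadow_pt, obj_pt1, obj_pt2) tuples.

    Returns (feasible, point): whether all wedges share a common region and,
    if so, one point of it (a candidate projected light position).
    """
    blocks = [wedge_halfplanes(*c) for c in constraints]
    A = np.vstack([blk[0] for blk in blocks])
    b = np.concatenate([blk[1] for blk in blocks])
    # Zero objective: we only ask whether some point satisfies every inequality.
    res = linprog(c=np.zeros(2), A_ub=A, b_ub=b,
                  bounds=[(None, None), (None, None)], method="highs")
    return res.status == 0, (res.x if res.status == 0 else None)


# Two overlapping wedges, e.g. (9, 5) lies in both.
consistent = [((0, 0), (1, 0), (0, 1)), ((10, 0), (9, 1), (10, 1))]
print(shadows_feasible(consistent)[0])    # True

# A third wedge that is disjoint from the first makes the system infeasible.
inconsistent = consistent + [((5, -1), (4, -2), (6, -2))]
print(shadows_feasible(inconsistent)[0])  # False
```

The zero objective keeps the sketch minimal; a more robust variant could maximize the slack of the inequalities to find a point well inside the common region rather than on its border.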

Now, let us return to the main topic: shadow analysis of AI-generated images. Amped used three mainstream image synthesis systems (Dall-E2, Midjourney, and Stable Diffusion) to generate a few outdoor scenes, then analyzed their shadows, with interesting results.

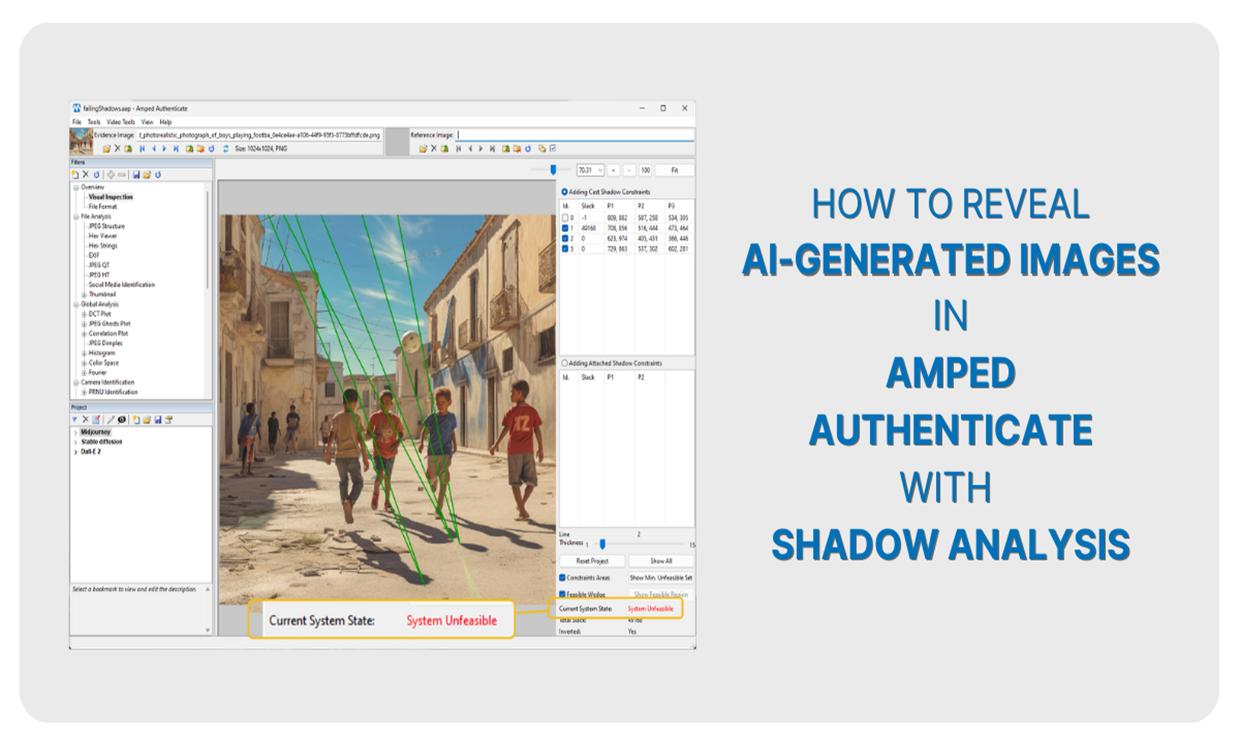

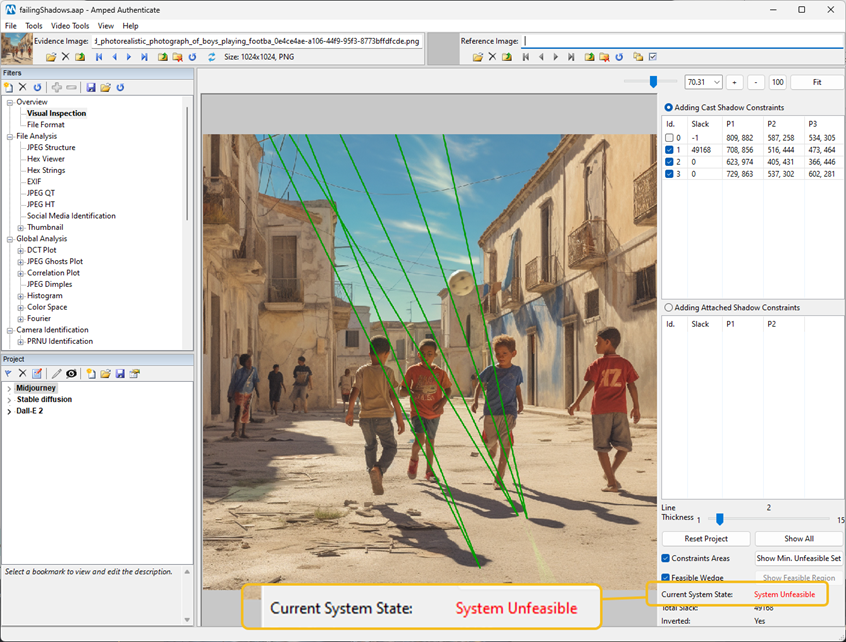

Let’s start with this image created with Midjourney using the following prompt: “photorealistic photograph of boys playing football in a street on a sunny day”:

As you can see, the shadows in this image appear very neat and look quite authentic at first sight. However, Amped Authenticate can demonstrate that they are not!

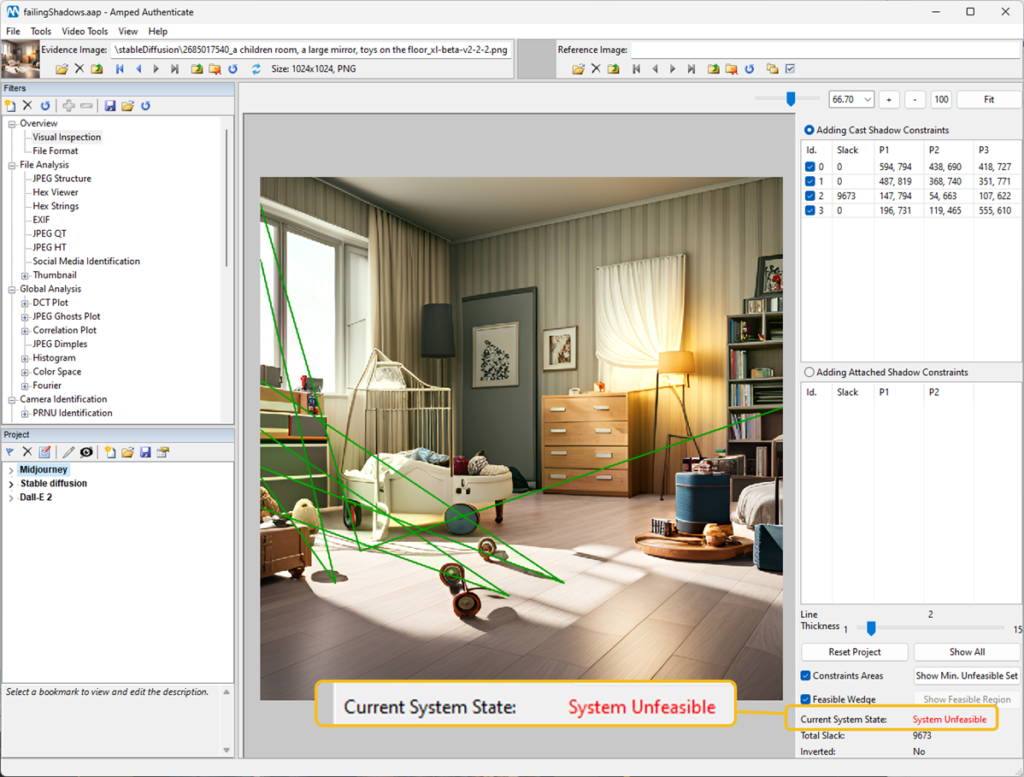

To test Stable Diffusion, Amped used the following prompt: “A children’s room, toys on the floor, sunlight”. There’s a small lamp in the image, but its light is visibly negligible compared to the sunlight coming through the window.

In this example too, the shadows turn out not to be authentic, in particular the one cast by the cradle.

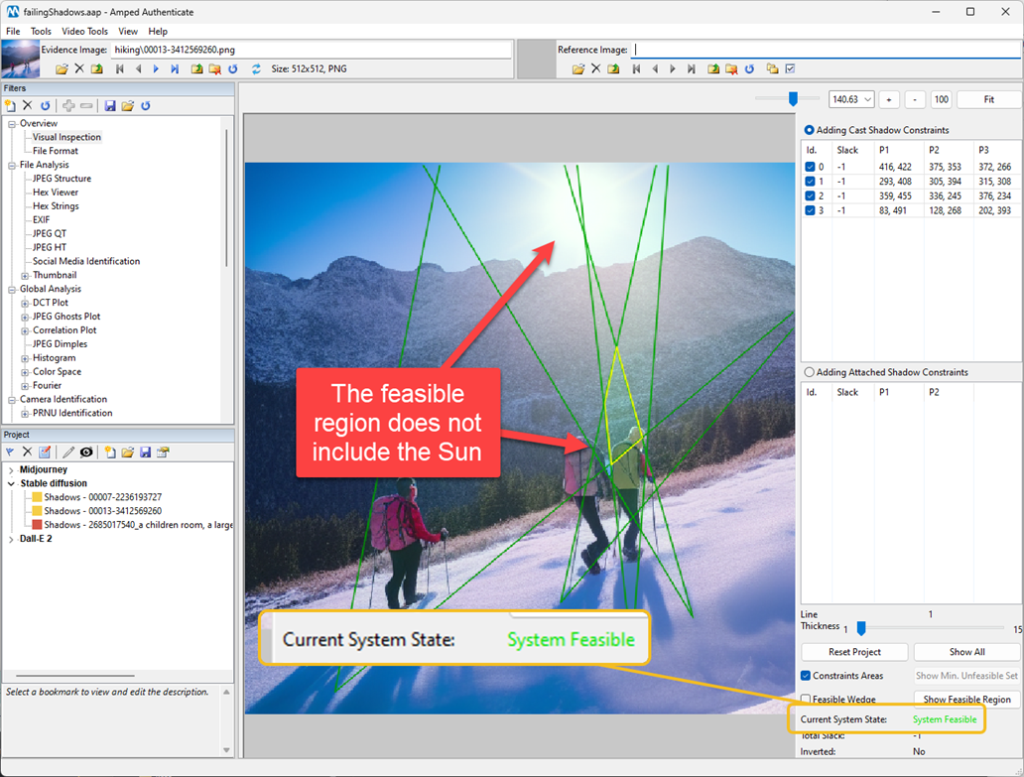

With Stable Diffusion, Amped also found some interesting examples where the constraints identify a feasible region that does not include the visible light source, as in the image below. So, despite the system being feasible, this image can be marked as not authentic.
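When the light source is visible, the check can be made explicit: its pixel position must fall inside every wedge, not merely somewhere in the image. The hypothetical helper below (plain NumPy, using the same wedge definition as in the Shadows refresher, with the light assumed to be in front of the camera) lists the constraints that a given light position violates. An empty list means the position is consistent with all of them. The coordinates are toy values, not measurements from the images in this article.

```python
import numpy as np


def _cross(u, v):
    """z-component of the 2D cross product u x v."""
    return u[0] * v[1] - u[1] * v[0]


def in_wedge(point, shadow_pt, obj_pt1, obj_pt2, tol=1e-9):
    """True if `point` lies in the wedge whose apex is the shadow point and
    whose sides pass through the two object points."""
    s = np.asarray(shadow_pt, dtype=float)
    d1 = np.asarray(obj_pt1, dtype=float) - s
    d2 = np.asarray(obj_pt2, dtype=float) - s
    v = np.asarray(point, dtype=float) - s
    sign = np.sign(_cross(d1, d2))  # wedge orientation
    if sign == 0:
        raise ValueError("the object points are collinear with the shadow point")
    return bool(sign * _cross(d1, v) >= -tol and sign * _cross(v, d2) >= -tol)


def violated_constraints(light_xy, constraints):
    """Indices of the (shadow_pt, obj_pt1, obj_pt2) constraints the light violates."""
    return [i for i, (s, p1, p2) in enumerate(constraints)
            if not in_wedge(light_xy, s, p1, p2)]


constraints = [
    ((0, 0), (1, 0), (0, 1)),
    ((10, 0), (9, 1), (10, 1)),
]
print(violated_constraints((9, 5), constraints))    # []
print(violated_constraints((20, 20), constraints))  # [1]
```

Here the two wedges do overlap, so the system is feasible, yet a light source visible at (20, 20) would still violate the second constraint: the same situation as the image above.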

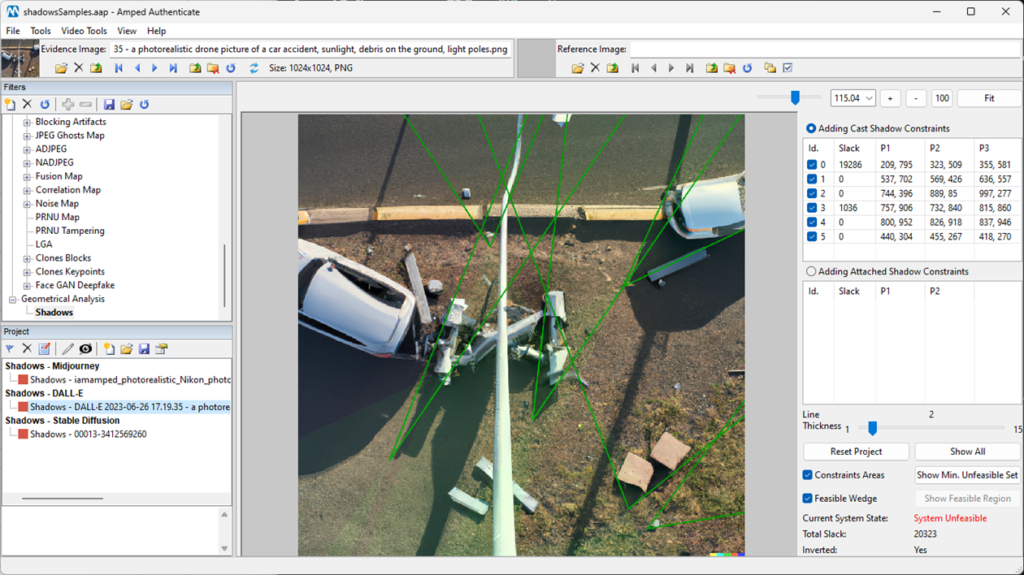

As for Dall-E2, OpenAI’s image synthesis solution, Amped tried to generate a picture of a car accident taken from a drone:

Once again, the image has plenty of shadows, and they look authentic in terms of orientation and shape. However, when Amped performed a technical analysis, they spotted something not quite right:

Additional samples could be provided, but the bottom line should now be clear: AI-generated images often contain physically inconsistent shadows that can nonetheless fool the human eye. When these images are analyzed with Amped Authenticate’s Shadows tool, however, the inconsistencies can be spotted, helping to establish their AI origin.

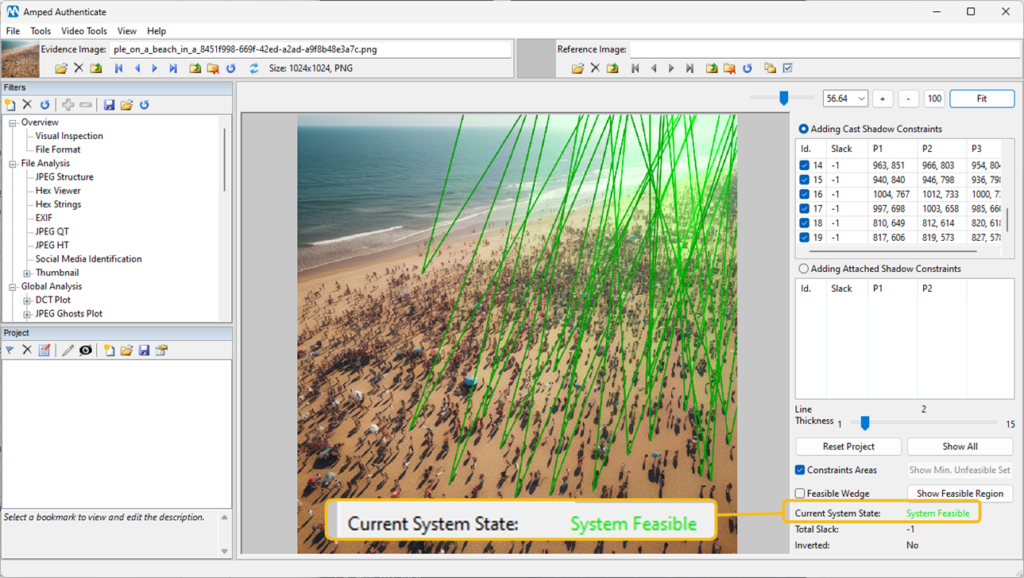

Of course, Amped does not suggest that all AI-generated images contain inconsistent shadows. For example, Amped asked Midjourney to generate an image of lots of people on the beach on a sunny day. Despite drawing over 20 shadow constraints on the image in Amped Authenticate, Amped could not spot any inconsistency.

According to Amped’s experimental observations, diffusion models tend to create plausible shadows when the shadows are a little blurry and not very detailed. They are more likely to fail with sharp shadows and with objects casting complex shadows (such as the cradle in the example above).

It is worth noting that, while shadow analysis can only be performed when the required criteria are met, it has a significant advantage over AI-based fake detection systems: it can be easily explained. When such a system classifies an image as “fake”, it generally offers no explanation beyond a confidence score, and it may be difficult to explain to a layperson, such as a jury or a trier of fact, how that result was reached. With shadow analysis, the results are explainable and justifiable.

Revealing AI-Generated Images Via Reflection Analysis

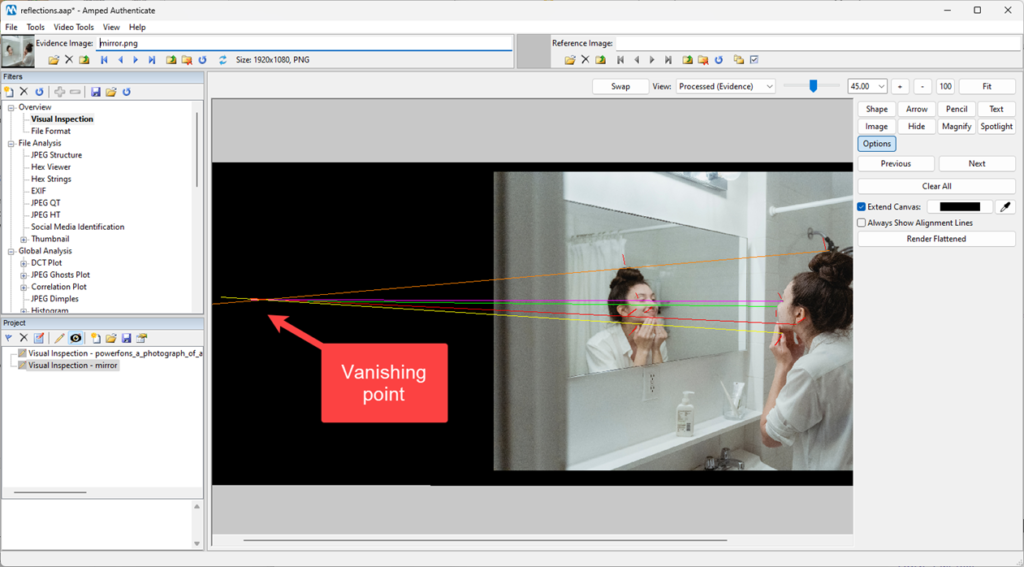

Checking the consistency of reflections is an image authentication technique that was proposed well before AI-synthesized images were a credible threat [Obrien12]. The idea is that when objects are reflected on a planar surface, lines connecting a point on the object to the corresponding point in the reflection should converge to a single vanishing point, as shown in the example below.

When creating fake images, it is hard even for expert human forgers to keep reflections geometrically consistent, so it is not surprising that AI systems struggle to generate convincing reflections.

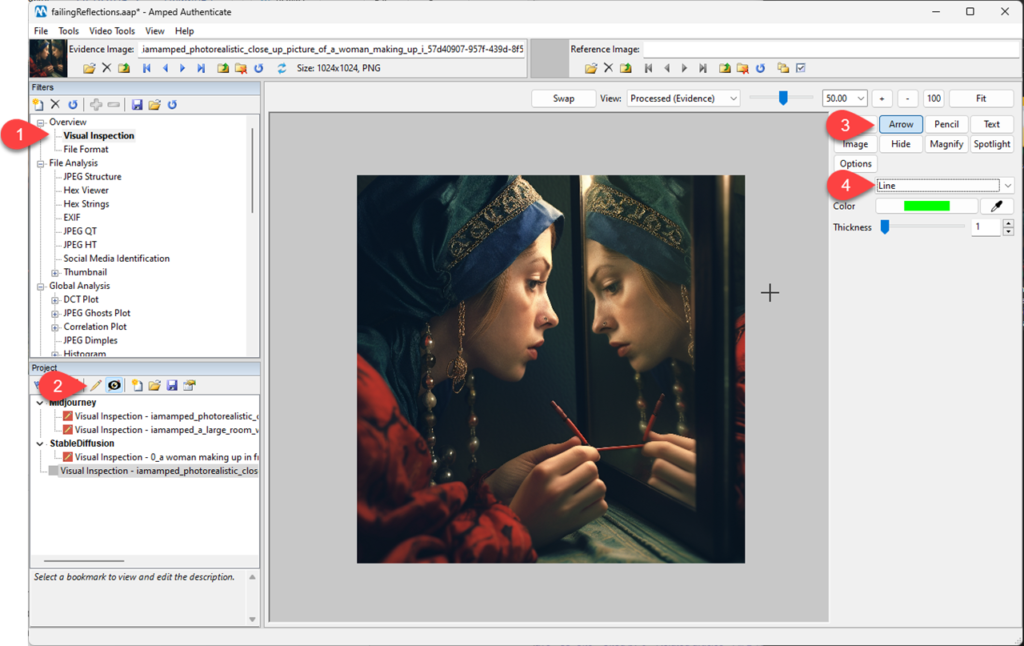

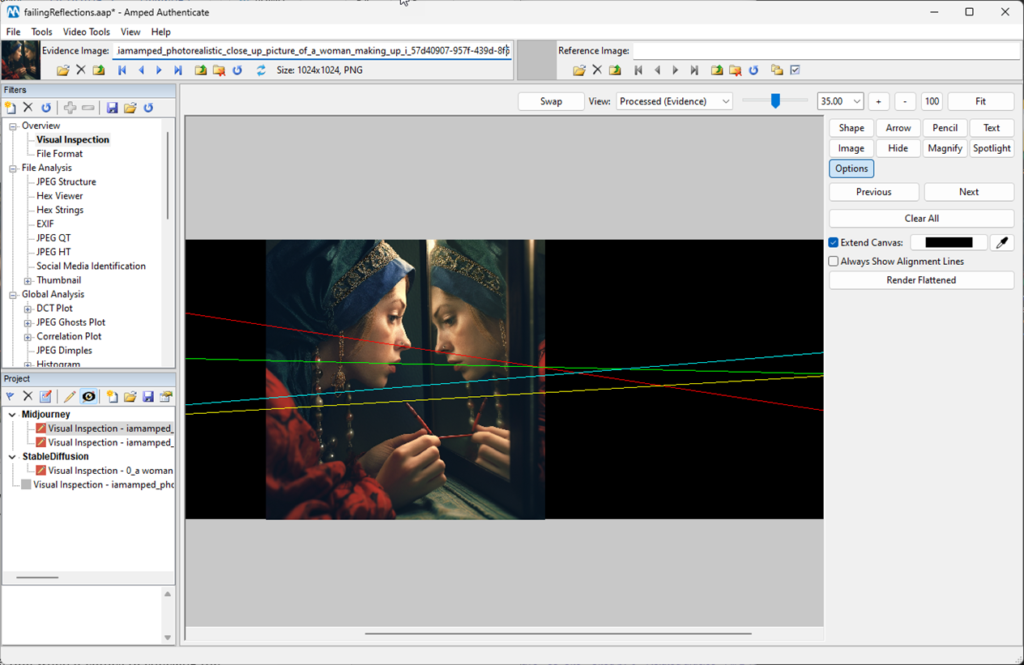

For example, the following image is what Amped obtained from Midjourney when they asked for a picture of a woman doing her makeup in front of a mirror:

In this case, it is easy to see that the reflection is not authentic. However, to obtain tangible proof, you can use Amped Authenticate’s Annotate tool to draw lines connecting landmark points to their reflections. If the image is authentic, these lines should all intersect in a single point.

Just load the image in Amped Authenticate, navigate to the Visual Inspection filter and press the pencil button in the Project panel. Then, select the Arrow tool from the pop-up panel on the right and set the Head to “Line”:

You can now draw multiple lines, each connecting a landmark point on the face to the corresponding point in the reflection, and change their color if you wish. You can also extend the lines all the way to their estimated point of intersection, as shown in the example below:

Clearly, the lines do not converge to a single point, not even approximately: they are noticeably scattered. The reflection is therefore geometrically inconsistent, which proves that the image is not authentic.
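The visual check can also be backed by a number. Given the pixel coordinates of each landmark and its reflection, one can estimate the point closest, in the least-squares sense, to all connecting lines, together with each line’s perpendicular distance from it. Lines from a consistent reflection should all pass near that point. This is a minimal sketch: it is neither the formulation of [Obrien12] nor a feature of Amped Authenticate, the function name is hypothetical, and the coordinates are toy values. Any threshold on the distances would have to be chosen for the image resolution and the accuracy of the clicked points. Nearly parallel lines (vanishing point very far away) make the estimate ill-conditioned.

```python
import numpy as np


def vanishing_point(pairs):
    """Least-squares intersection of the lines through (landmark, reflection) pairs.

    pairs: list of ((x, y), (x_reflected, y_reflected)) in pixel coordinates.
    Returns (point, distances): the estimated common point and the
    perpendicular distance of every line from it.
    """
    normals, offsets = [], []
    for p, r in pairs:
        p = np.asarray(p, dtype=float)
        d = np.asarray(r, dtype=float) - p
        n = np.array([-d[1], d[0]]) / np.linalg.norm(d)  # unit normal of the line
        normals.append(n)
        offsets.append(n @ p)  # line equation: n . x = n . p
    N = np.vstack(normals)
    c = np.array(offsets)
    point, *_ = np.linalg.lstsq(N, c, rcond=None)
    distances = np.abs(N @ point - c)  # |n . x - n . p| is the point-to-line distance
    return point, distances


# Toy data: three landmark/reflection pairs whose lines all pass through (100, 50).
consistent = [((0, 0), (50, 25)), ((0, 100), (50, 75)), ((200, 0), (150, 25))]
vp, dist = vanishing_point(consistent)
print(vp, dist.max())

# Adding a pair whose line misses that point makes the spread obvious.
vp_bad, dist_bad = vanishing_point(consistent + [((0, 50), (10, 40))])
print(vp_bad, dist_bad.max())
```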

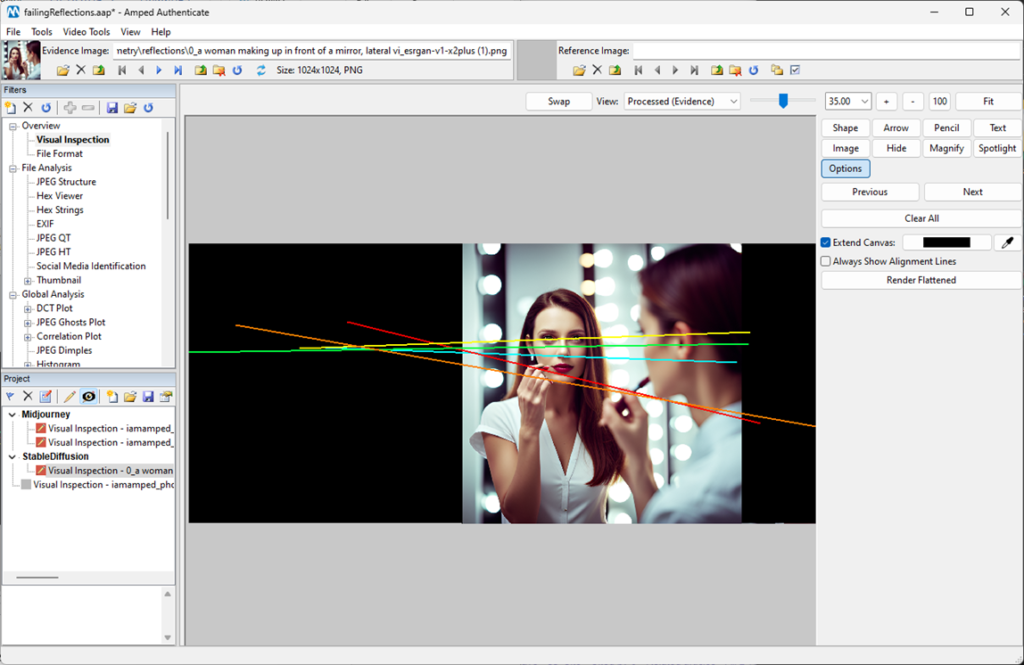

Amped tried the same experiment with Stable Diffusion and obtained a very similar result.

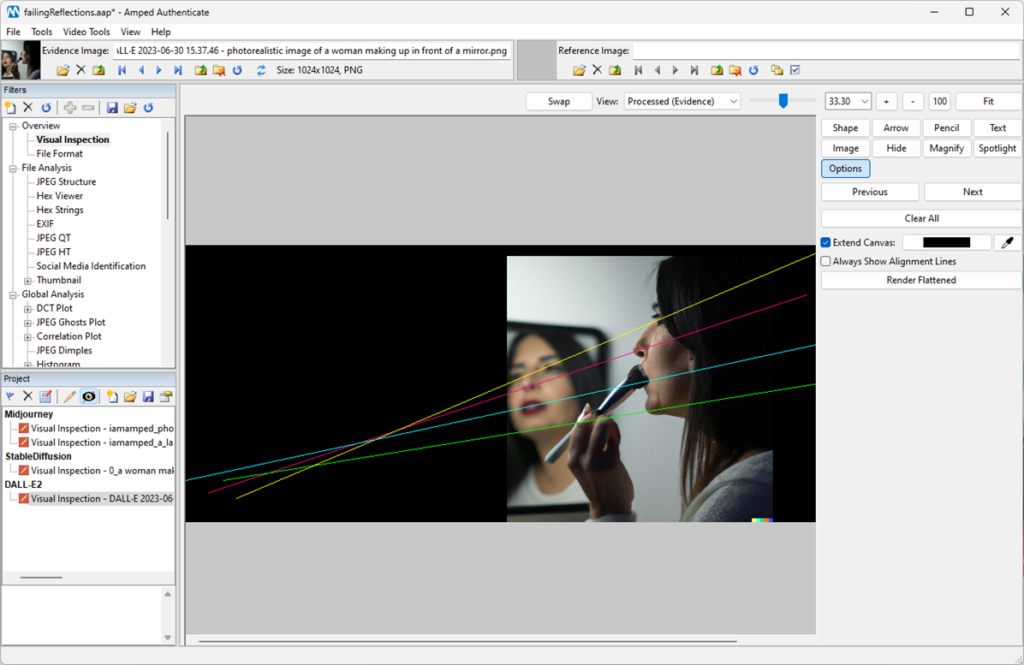

Furthermore, to complete the experiment, Amped tasked Dall-E2 with a similar prompt. The results they obtained are shown below:

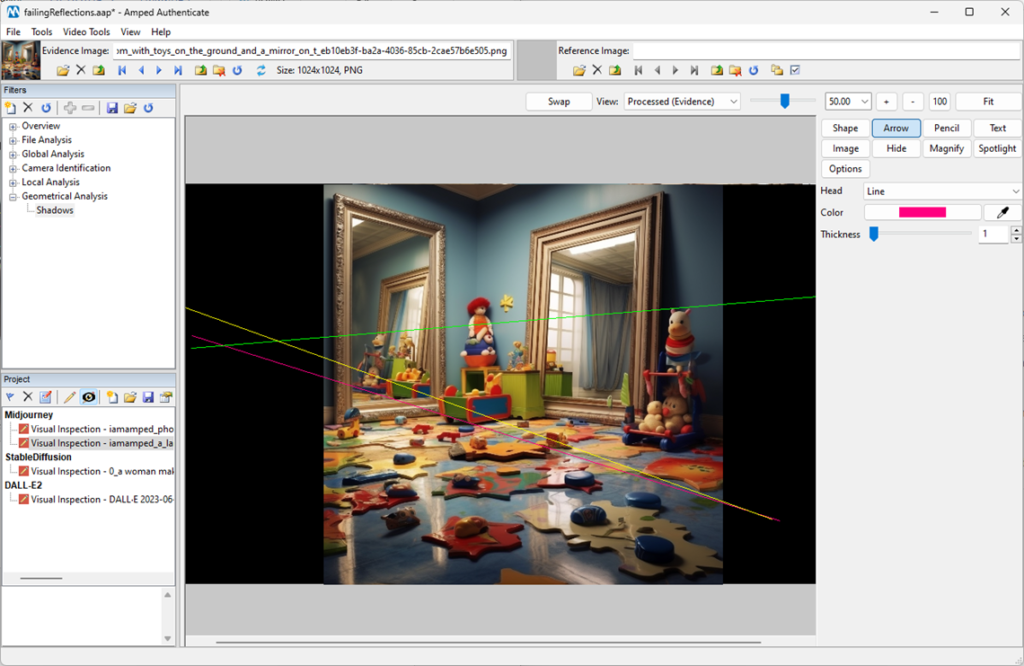

Amped repeated the experiment with a room full of toys and obtained some interesting results:

Unlike with shadow analysis, Amped could not find any image with convincing reflections from any of the tested generative systems. This is not surprising, since creating consistent reflections is a significantly harder task, even for a human, than creating consistent shadows.

Conclusion

AI-generated images are often of impressive quality and appear real, so the ability to detect them is increasingly important. In this tutorial, Amped has demonstrated how, under certain circumstances, shadow and reflection analysis can be a powerful ally in revealing synthesized images.

Notably, a study has shown that the human eye is not a reliable tool for checking the consistency of reflections and shadows [Nightingale19]. Technical analysis, by contrast, can often reveal inconsistencies in an indisputable way.

Amped Authenticate features a dedicated, powerful tool for shadow analysis, which generates meaningful and justifiable results. Although it does not yet include a dedicated tool for reflection analysis, Amped Authenticate offers a powerful annotation tool that can be very helpful for the task. Furthermore, both the Shadows filter and the Annotate tool are fully documented in the generated report, providing all the details you need to explain your conclusions.

Hopefully, you enjoyed this article. And when you’re dealing with AI-synthesized images, don’t forget to check the geometrical and physical consistency of objects.

Bibliography

[Kee14] Kee, E., O’Brien, J. F., & Farid, H. (2014). Exposing photo manipulation from shading and shadows. ACM Transactions on Graphics, 33(5), Article 165.

[Obrien12] O’Brien, J. F., & Farid, H. (2012). Exposing photo manipulation with inconsistent reflections. ACM Transactions on Graphics, 31(1), Article 4.

[Nightingale19] Nightingale, S. J., Wade, K. A., Farid, H., & Watson, D. G. (2019). Can people detect errors in shadows and reflections? Attention, Perception, & Psychophysics, 81, 2917-2943.