Hans: So, our keynote for today. Actually, I ran into Emre Tinaztepe from Binalyze in December (online, of course). It was an interesting online meeting. I had to understand their technology.

And I’m sure Emre’s going to tell us about his vision, about the vision of his company, a company that is growing fast with an interesting view of how we should do digital forensics for incident response.

And I liked it very much. I’ve seen some of his performances, and I thought that would be very well suited for our conference for digital forensics and incident response in many ways.

So, Emre, I would like to give the floor to you and thank you again for attending, for being here as our keynote speaker.

Emre: Thanks a lot, Hans. Hello everyone. Before I start, I’d like to thank all the organizing team for inviting me. It’s a real privilege to be here.

Let me share my screen. I hope you can see it now. So, today we’ll be talking about the traditions and reality in modern digital forensics and incident response. And as Hans briefly mentioned I’ll be doing my best to evaluate this industry in terms of the enterprise context.

And here’s the agenda: we will first be talking briefly about the history of digital forensics, and then what has changed in the last 40 years, because it’s a 40-year-old practice. And then we’ll be touching on enterprise security, because most of the time in the enterprise context, alerts that are created by our products are the initial precursor.

So, we need to investigate machines, and I’ll be talking about my personal eureka moment, which was the moment I was fully persuaded that we need to have a different approach. And then we will be quickly stepping into enterprise forensics, which includes memory forensics and live forensics, and also touching on the debates about the modern approach. This is an emerging industry and we have some proof of that.

And then finally we’ll be having some incident response perspective, and final words.

Before we start, let me briefly introduce myself. My name is Emre Tinaztepe, I’m the founder and CEO of Binalyze. Prior to Binalyze, I was the Malware Analysis Team Leader at Comodo and Director of Development at Zemana. I’m based in Estonia, the land of unicorns.

And I had a chance to spend 10 years in reverse engineering malware and teaching classes as well. 15 years of endpoint security and 25 years of software development. When I talk about digital forensics, I generally say I had to learn digital forensics the hard way, because I was required to learn it.

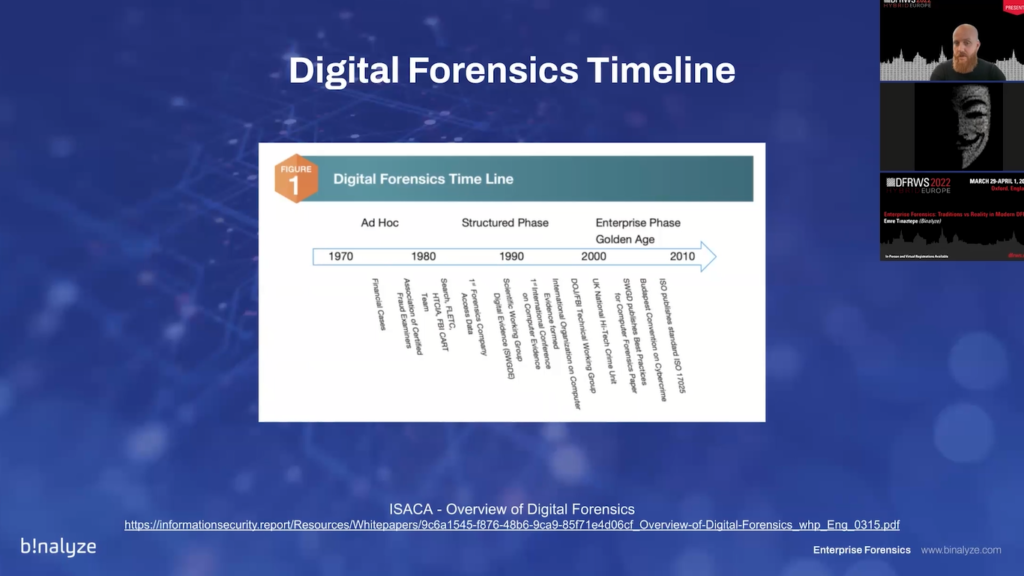

So let’s first start with the digital forensics timeline in order to set a baseline for our evaluation of this industry. When you take a look at ISACA’s Overview of Digital Forensics paper, you’ll see that it’s split into three phases: the first one is the ad hoc phase; the second one is the structured phase; and the last one is the enterprise phase (even though that’s not the enterprise in our modern understanding). So, in these three phases, you’ll quickly realize that it started almost 40 years ago.

And when you take a look at this part, which was between the 1970s and 1980s, the original motivation was fighting against financial crime. And it’s exactly the same nowadays, actually, because cyber criminals are chasing money. And just as was the case 30, 35 years ago, it’s still all about money. And nowadays it’s mostly ransomware.

So, if you pay attention, you quickly realize it was created by law enforcement, and the original term that was coined was “computer forensics”, because that was the source of evidence at that time. And as I mentioned previously, the motivation was fighting against financial crime.

In 1984, we had the initial version of the Computer Analysis and Response Team, which was called CART. And just a year later, the UK Metropolitan Police started a fraud squad. And again, with the sole idea of fighting against cyber crime. And then we started to see the standardizations, mainly.

When you take a look at the types of digital forensics, what started as computer forensics 30, 35 years ago has quickly diverged and split into subcategories. Now we have network forensics…is there a question? You can hear me, right?

Moderator: Yeah, we can hear you.

Emre: Perfect. So it quickly became divided into subcategories, like network forensics, mobile device forensics, digital image forensics, digital audio and video forensics. And some of them we’ll be discussing today: live forensics, memory forensics, etc. What you see here, and the reason why we have this many types of forensics, is because wherever there is data, we have the need for forensics, and it’s becoming much more comprehensive as time passes.

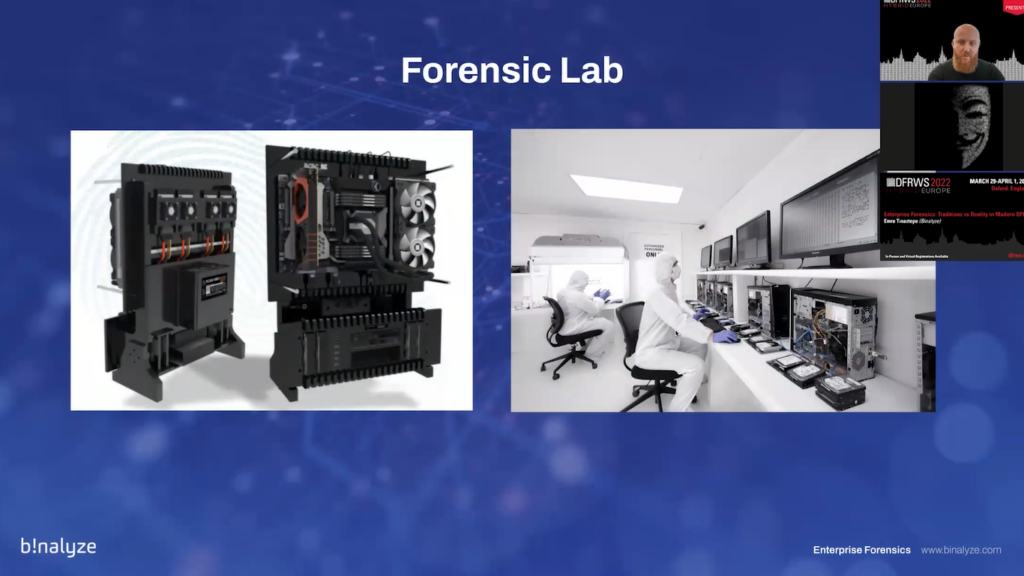

The first thing to pay attention to is where forensics is done. Of course this part is a bit exaggerated, because it also includes data recovery. But I don’t know if you’ve had a chance to step into a digital forensics lab.

The first thing that you’ll realize is that it’s a really serious industry. It’s a really serious process. And again, it was invented by law enforcement. And the second thing you’ll notice is that it’s mostly hardware dependent, because in order to perform forensics in the traditional way, you are required to have powerful machines.

And when you take a look at the image on the left, the first thing you realize is it’s not covered, so it’s an open machine. And the reason is these machines are burning like crazy because they need to be powerful. They’re processing terabytes of images in order to provide you a timeline, so they are specifically designed for that purpose.

And the way traditional forensics is done, even now, the first step is unplugging the drive. And the reason I wrote in parentheses “if you can, when you can” is because nowadays, most of the time, it’s quite hard to access the hard drive.

And when you unplug the drive, you need to attach it to a disc duplicator. And the traditional method requires you to have two copies. You need to wait for hours in order to image a two-terabyte hard disc.

And then you need to take this to the lab and process that disc image when you can, because again, it takes a lot of time. And then if you have any indicators of compromise, and if you find any lateral movement, you need to go back to step one and repeat this process n times. So basically it’s not feasible anymore.
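To get a feel for why repeating that process n times does not scale, here is a rough back-of-the-envelope sketch. The 150 MB/s sustained throughput and the assumption that the two copies are written one after the other are illustrative guesses, not figures from the talk.

```python
TB = 10**12  # decimal terabyte
MB = 10**6   # decimal megabyte

# Assumed sustained throughput of the duplicator (hypothetical, not from the talk).
ASSUMED_THROUGHPUT = 150 * MB  # bytes per second


def imaging_hours(disk_bytes: float, throughput: float, copies: int = 2) -> float:
    """Hours needed to write `copies` sequential images of one disk."""
    return copies * disk_bytes / throughput / 3600


one_machine = imaging_hours(2 * TB, ASSUMED_THROUGHPUT)
print(f"one 2 TB disk, two copies: {one_machine:.1f} h")

# Every machine reached through lateral movement repeats the whole process.
for n in (1, 5, 20):
    print(f"{n:>2} machines: {n * one_machine:.0f} h of imaging alone")
```

Under these assumptions a single machine already costs most of a working day before any analysis starts, and the cost grows linearly with every additional machine.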

The original source for digital forensics was hard drives when it started 35 years ago. And if you take a look at the sizes of the evidence, you’ll quickly realize that in 1984, we had an average disc size of 10 megabytes. And in around 10 years this size increased to 10 gigabytes, maximum.

And then in 2006, it was quite common (I remember those days) to see 200-gigabyte hard drives. And nowadays we can easily order a 20-terabyte disc from Amazon. So if you step back and take a look at the increase in size, you’ll see that there was a 2-million-times increase in the size of the evidence in the last 35 years.
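The 2-million-times figure can be checked with a few lines of arithmetic. This sketch assumes decimal units (1 MB = 10^6 bytes, 1 TB = 10^12 bytes), which the talk does not specify, and takes the 35-year span as quoted.

```python
import math

MB = 10**6
TB = 10**12

disk_1984 = 10 * MB   # average disc size quoted for 1984
disk_today = 20 * TB  # disc size quoted for today

growth = disk_today // disk_1984
print(f"growth factor: {growth:,}x")

# How many doublings that represents, and the implied doubling period
# over the roughly 35 years mentioned in the talk.
doublings = math.log2(growth)
print(f"doublings: {doublings:.1f}, one every {35 / doublings:.1f} years")
```

Seen this way, evidence size has doubled roughly every year and a half to two years, while the imaging workflow itself has stayed essentially the same.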

And if we ask the question, “how often, and when, do we use digital forensics in the enterprise context?”, it is mostly for validating an alert, because we have millions invested in products that create alerts in our enterprises. And when you have an alert, nowadays you’re required to use forensic methodology almost every day.

And when you validate an alert, it may end up with a compromise assessment or it may end up in an internal investigation. This is just one of the use cases. But the answer is, nowadays we are required to use digital forensics almost every day.

The takeaway from this first section is: digital forensics has been around for quite many years, and it’s heavily dependent on the hardware. The original reason why we are having this presentation is digital forensics was not invented for enterprise investigations. It was invented by the law enforcement agencies.

And those were the times we had only 10 megabytes, some 100 megabytes of disc sizes, and speed was not that much of a problem. But nowadays speed is of the essence, and the traditional method cannot be positioned as the first step anymore.

As I mentioned previously, why we need digital forensics in enterprise context is mostly related to enterprise security. So that’s why we need to quickly take a look at the enterprise security as well. My background is in enterprise security. So, I had a chance to work in this industry for more than 10 years.

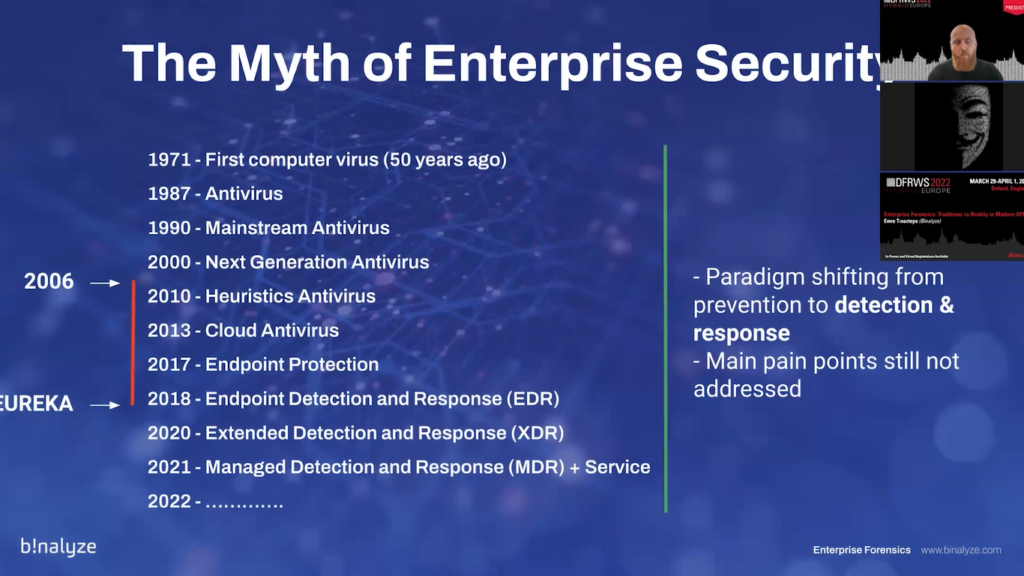

And these are the times: I started in 2006, and in the following 10 years I had a chance to see how the technology was evolving. But most of the time it was rebranded by adding some additional layers of protection. Like, it originally started with signature-based detection, and then we had heuristic antiviruses.

And then in a few years (in two, three years), we started to talk about cloud antiviruses, which were mainly checking the signature in the cloud and not on the machine. And then it quickly evolved into a new term, which was called endpoint protection.

So from traditional mainstream antivirus, that was a new product line. And these were the times, like 2018, when I had my eureka moment, which was the time EDR was getting popular. And in just two years’ time, we started to see another version of this technology that is now being named XDR. And in around a year, now we are talking about MDR, which is mainly XDR plus service. (Let me make this smaller.)

So the original takeaway from here is there’s a paradigm shift from prevention to detection and response, and unfortunately main pain points are still not addressed, because the enterprise security is still based on the monitoring and logging.

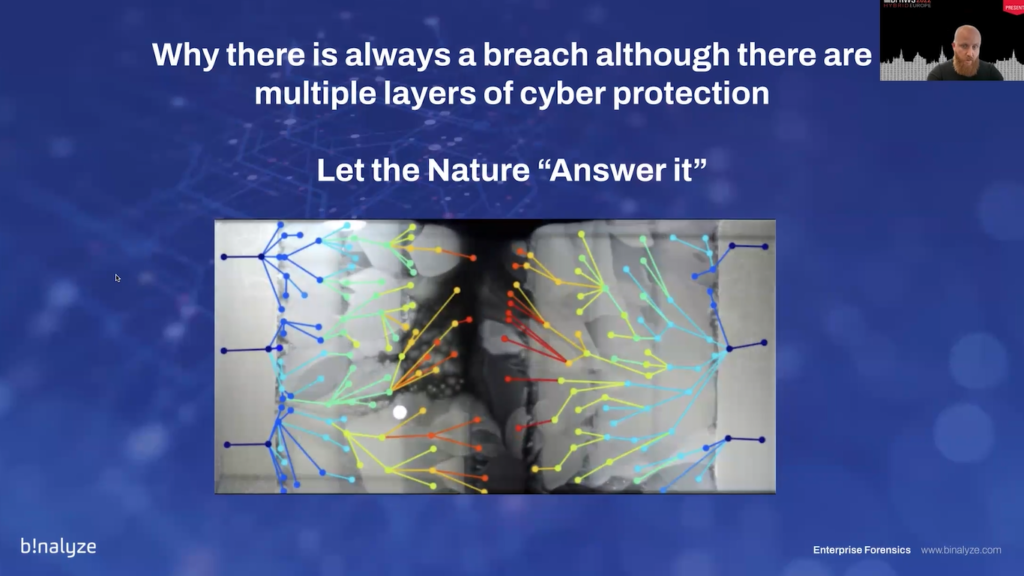

When I had my eureka moment…we have a short GIF on this slide that quickly describes that eureka moment. So, around four years ago I was sent this video by a friend of mine, and it was really mind-blowing, because it summarized my last 10 years in just a one-and-a-half-minute video.

This is an experiment made by the Harvard Medical School, and what they test here is the evolution of bacteria. What you see here on the slide is a Petri dish that is divided into nine bands. And on the right and left sides, we have zero level of protection. And in the inner bands, we have 1 times, 10 times, 100 times and 1,000 times stronger antibiotics.

So what they’ll do here is they will release the bacteria from both sides and observe how it behaves when it faces these levels of protections. When I first watched this video, it was the exact summary of the enterprise security at that time.

So as you quickly see when the bacteria hits this area that is not protected (you can think of it as our enterprise layers, so we have layers of defense starting from the firewalls and going into our inner defenses), it quickly spreads here.

And what you would expect is just because we have antibiotics here, you would expect it to protect this layer, but that’s not the case. As you see, we have some breaches here. And if you keep going, you’ll see that bacteria gains resistance on each layer.

And then in the middle, we have bacteria that can survive in an area that is protected by thousand-times-stronger antibiotics. And this is called a superbug. When you have it in your body, there is no chance of living.

And that’s what enterprise forensic provides you. So when I first saw this picture, that was the moment I decided to stop working on the enterprise security and invest into…by using the experience we gathered, invest into the new methodologies that would help enterprises quickly get an idea, get a picture of what happened in their enterprises.

If you take a look at how many days it takes to identify a breach, you’ll quickly see that it’s around 200 days. And it may be open to discussion why it takes so long. But even when we know that we are breached, it still takes more than two months to contain that breach.

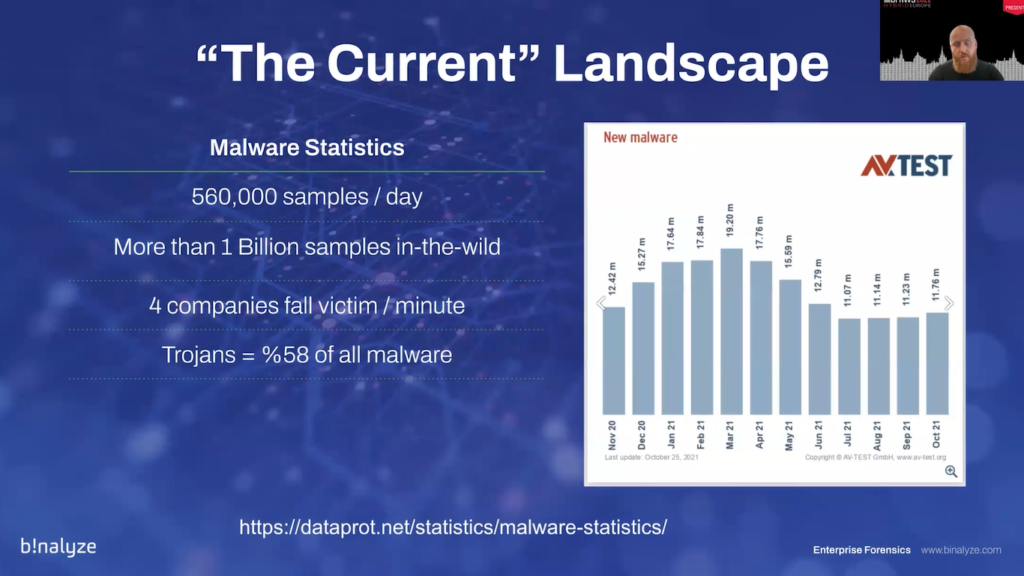

One of the reasons it takes such a long time to identify and contain a breach is because we are under a heavy storm of malware samples. So every single day there are more than 500,000 samples in the wild. And we have 1 billion samples in the wild in total. And every minute we have four companies falling victim to cyber attacks, and Trojans account for 58% of all malware.

When I was persuaded that whatever we do, there will be a breach, the latest advancements in rootkits and malware were also a key factor, because I was finally persuaded that 100% security against a software-based attack is not possible.

And those were the times we were seeing highly advanced rootkits like Rustock, TDL4, which was at that time called “indestructible botnet”. And it was literally making fun of the investigators, analysts, because it was outputting quotes from Star Wars.

Normally that’s not something you would expect from a malware developer, because the more traces they leave on the system, the higher the chance of being detected. But they weren’t scared at all. They were making fun of the investigators.

And then there were more advanced malware families like Duqu, Flame and Gauss, and now we have state-sponsored APT groups developing even more advanced techniques. And this was a funny coincidence: while I was preparing the presentation for this talk, I saw this on Twitter. Now we even have methods for how to bypass antiviruses, and we even have books for that.

So the takeaway from this section is there are no silver bullets in enterprise security, and it’s basically exactly the same as the bacteria experiment. So, just like the case with the bacteria experiment, when you have the antibiotics, when you have defense in depth in your organization, you will be protected 99% of the time.

But that will leave you with a 1% window that will be open to the attackers. If it’s not an outsider, you will be facing an insider. We are hearing more and more news about APT groups bribing employees, and a breach costs millions of dollars nowadays. So this is the reason I think it’s time to assume that we are already breached.

So, we talked about traditional digital forensics, and then the reason why we had to use it in the enterprise context. Let’s talk about memory forensics, which was around five years ago, when we were still heavily using it in our incident response engagements.

And the reason is, it was much faster. Those were the times we needed a new method to speed up the process. And the reason was, when you had to choose between a 2-terabyte disc image and a 4-gigabyte RAM image, it was always easier to go for the RAM image. And the way it works is it parses the raw memory. That way it reveals the truth.

And it was feasible at that time (I’m talking about like 5 years ago), and it’s still feasible to some extent. There were some products like HBGary, Volatility and Rekall, and these are mostly command-line based with really poor UX, because the experience is: you run a command, you get some output, mostly in a text file, and then you need to find whatever you are looking for (some indicators) by searching inside those text outputs.

Under the hood, the way these products work (there are also some open source alternatives) is by parsing low-level structures that are mostly called opaque. And the reason is the operating system developer companies do not want you to touch those structures because they don’t want to be responsible for documenting them. They are the internal workings of the operating systems.

It makes these products quite powerful because it can reveal a lot of stuff, but at the same time, it’s the biggest weakness because these products are fragile. And the reason is, if you ask someone who does this on a daily basis, the first thing you will hear (and that’s what I was having at the same time), the first thing you’ll hear is “we ran product X and it gave an error”.

And when you ask why, it’s mostly because there are some changes in the operating system, or it faced some memory compression. I mean…there are always some commands not supporting the latest versions of the operating system, because all the inner workings of that command are based on research on the previous operating system’s artifacts, etc.
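The fragility described here can be illustrated with a toy parser. Everything in this sketch is hypothetical: the build numbers, the field offsets and the record layout are invented for illustration and do not correspond to any real operating system structure or to how any named product is implemented.

```python
import struct

# Hypothetical layouts: OS build -> (offset of PID field, offset of name field).
# Real tools derive such offsets from reverse engineering or debug symbols.
KNOWN_LAYOUTS = {
    1000: (0x10, 0x20),
    1001: (0x18, 0x28),  # a field was inserted, so everything shifted by 8 bytes
}


class UnsupportedBuild(Exception):
    """Raised when no layout is known for the given OS build."""


def parse_process_record(raw: bytes, build: int) -> dict:
    """Read a PID and a process name from an undocumented record."""
    if build not in KNOWN_LAYOUTS:
        raise UnsupportedBuild(f"no layout known for build {build}")
    pid_off, name_off = KNOWN_LAYOUTS[build]
    (pid,) = struct.unpack_from("<I", raw, pid_off)
    name = raw[name_off:name_off + 16].split(b"\x00", 1)[0]
    return {"pid": pid, "name": name.decode("ascii", "replace")}


def make_record(build: int, pid: int, name: bytes) -> bytes:
    """Build a fake 64-byte record the way the given OS build would lay it out."""
    pid_off, name_off = KNOWN_LAYOUTS[build]
    buf = bytearray(64)
    struct.pack_into("<I", buf, pid_off, pid)
    buf[name_off:name_off + len(name)] = name
    return bytes(buf)


record = make_record(1001, 4242, b"evil.exe")
print(parse_process_record(record, 1001))  # correct layout
print(parse_process_record(record, 1000))  # stale layout: silently wrong values
try:
    parse_process_record(record, 1002)     # newer build: the tool just errors out
except UnsupportedBuild as exc:
    print("error:", exc)
```

The stale-layout case is arguably worse than the outright error, because the parser returns plausible-looking but wrong values without complaining.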

So, in summary, even though it’s powerful, it’s not scalable or collaborative, because it’s getting really hard to get a RAM image, which can be more than 100 gigabytes on an average server machine nowadays, and you cannot upload and parse them while the clock is ticking.

So, again, the takeaway from this section is that memory forensics is still applicable. I don’t want to go brutal on that, but it’s not feasible, let’s say, to use it as the first step. And how I see memory forensics is that it sits in between the traditional methods and the new methodology.

So it still has its use. But it’s not the first step anymore. And also it’s facing the same problems as the traditional method, because the size of RAM is increasing at quite a pace.

As the next step we started to use live forensics. Actually, live forensics was already in use some 15 years ago, and there were really cool products providing this kind of functionality, products that used to provide you with some visibility that you could get remotely from different systems.

So, it’s not something new to be honest. But when I asked the question to myself, “what happened to these products?” is I think they were victim of non-repudiation, and I’ll be giving more information on that in the upcoming slides.

Live forensics is the fastest way of extracting information from a live system, in a way that you are not required to fight against the undocumented operating system structures. So, it’s quite stable. You’re not required to deal with terabytes of data, and you’re not required to wait for ages for getting the visibility you need for starting and finishing an investigation.

But these products, which 15 years ago were doing a really good job, we don’t see them anymore. And one of the first questions people were asking when they saw such a product was “aren’t you overwriting evidence?” Actually, when you take a look at memory forensics, the same question should be asked of memory forensics, because in order to get a RAM image from a machine, there are a number of ways.

The first one is, if you’re up against a virtual machine, you can get the VMEM file, which is the virtual memory file. So that way you’re not required to run anything on the machine itself. Or there are some devices which provide you direct memory access, but they are, again, highly hardware dependent, because you need to be plugging some device into the machine, and that talks directly with the RAM, so you don’t touch anything else.

Or there are some extreme methods, like freezing the RAM (I’ve never seen anyone using that), but it’s still a method. So there are some methods, but the most widely used one is running a RAM dump binary inside the operating system. But just because we have the RAM image as that evidence source, I think people are mostly treating it as if it were a traditional disc image.

But actually that’s the exact same case. So, the answer to “aren’t you overwriting evidence with live forensics?” is yes. But only as much as every investigator does. Just like you see in this crime scene, the investigator has to step inside the crime scene investigation site, and they need to collect evidence so that they can label it.

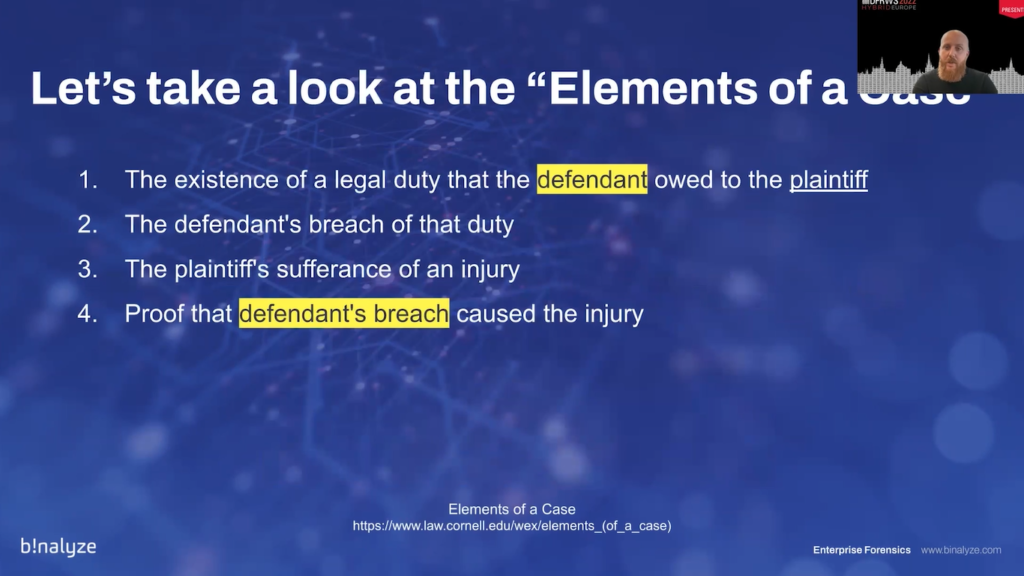

The second, most widely asked question is: “is the evidence accepted at the court?” Again, if you go back to, like, 40 years ago these are remnants of the law enforcement. And if you take a look at the elements of the case, you’ll see that there are… it may change depending on the jurisdiction, but most of the time you have four main elements.

And the first one is the existence of a legal duty that the defendant owes to the plaintiff. (Pay attention to this defendant part here.) And the last one is you need to have proof that the defendant’s breach caused the injury.

So, in order to have a case that can be (that should be) taken to court, you need to have a defendant in place. But unfortunately, except for some cases that may require you to go for a deep-dive investigation, court is a remnant of the traditional forensics perspective, because 95% of the time, maybe even more, you’ll be facing an APT group that is targeting your enterprise, you’ll be facing a ransomware gang (which is nowadays called technical debt collectors) or an insider, which may require an in-depth investigation.

So the answer is: you won’t be required to go to court, most of the time. And in enterprise investigations, our priority number one is to stop bleeding and having the business continue its activity.

So, all in all, court is not a priority, until the case escalates. And even to understand if a case should escalate or not, you still need to go for the fastest methods, and then you can proceed with the memory forensics and full disc imaging.

So the takeaway from this section is: go traditional only when you need to, because nowadays no one has time to acquire full disc images as the first step.

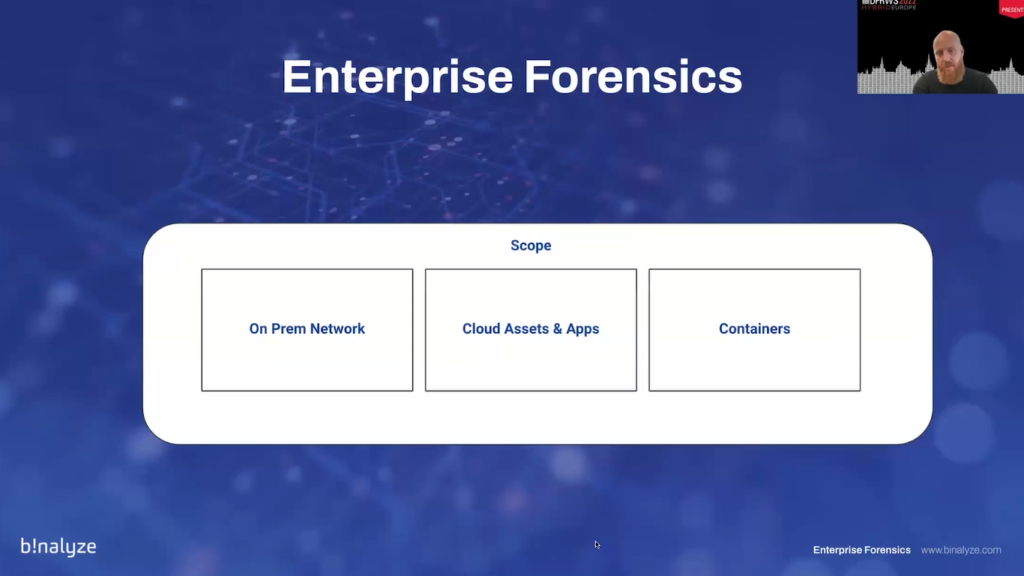

After having a brief summary of the digital forensics, live forensics, memory forensic, now we can welcome enterprise forensics. Enterprise forensics is the new approach for running a corporate investigation at scale. And it includes “computer forensic”, as it was coined 35 years ago.

It also contains live and memory forensics and network forensics in a way that is remote and fast. It’s non-obtrusive, so you can run it on, like, thousands of machines without disturbing your users. It’s scalable, it’s collaborative and enrichable.

When I say enrichable, it means you can start with one machine and without any hassle, you should be able to enrich it by adding more and more endpoints or assets, in this case.

When you take a look at the scope of it, it’s not only on-prem networks anymore, because when you define an enterprise, you will quickly see that an average enterprise nowadays has a cloud side, which requires you to add cloud assets and applications into your investigations, because that’s what the cyber criminals are targeting most of the time.

And also containers, which are quite new, and which come with a lot of advantages, but at the same time come with a lot of disadvantages when it comes to investigations.

Compared to traditional forensics that was invented, like, decades ago, the enterprise forensics is fast. You are not required to be physically present on the machine. You can perform it remotely, it’s proactive, and it’s integrated, which means the problem of having skilled people that are able to investigate and collect evidence from a site is fixed by this technology, because you can quickly integrate it with your already existing products that are creating alerts, and let these products collect evidence for you regardless of the time. It’s signal based and it’s 24/7, so you’re not limited with the working hours, which is one of the biggest problems with the traditional forensics.

When you take a look at the industry, it’s an emerging industry on its own. Even at SANS, which is the most reputed organization when it comes to creating cybersecurity courses, you’ll see that there is a new course that’s called “Enterprise Cloud Forensics and Incident Response”, and that was around four or five months ago.

It was still in beta, and the description is quite eye-catching. It says “the world is changing and so is the data we need to conduct our investigations.” And here, like, this part is also quite interesting: “many examiners are trying to force old methods for on-premise examination onto cloud hosted platforms.”

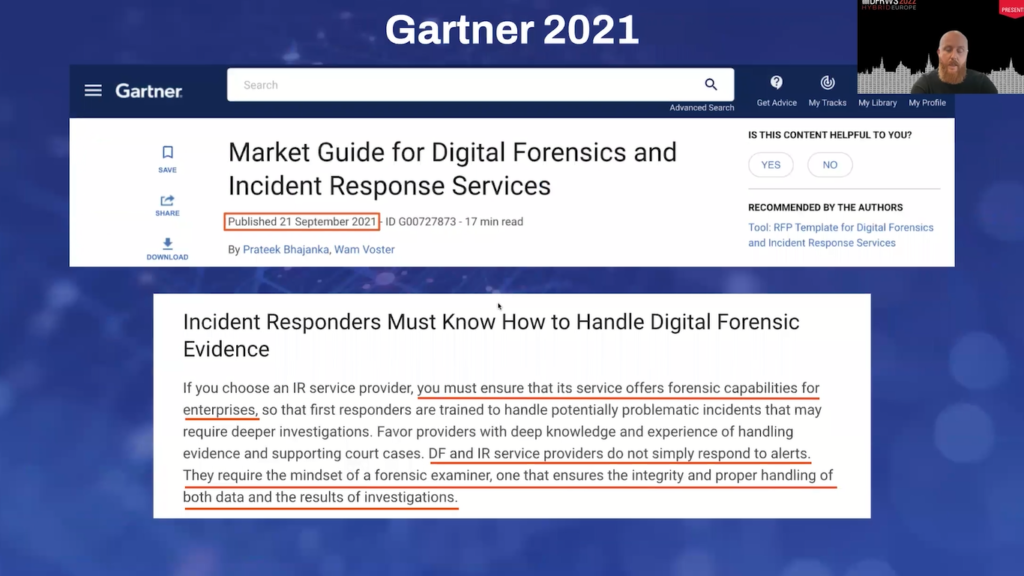

So this is one of the problems. And if you take a look at the Gartner reports in the last two years, you’ll quickly see that more and more clients are asking to go deeper into incidents, and they’re asking for detailed forensic reporting, because without having the forensic report, which is mainly the source of the visibility, you cannot reduce the time to contain a breach and the time to remediate an active incident.

And this Gartner report is the latest one that was published a few months ago. Again, it states that even when choosing a service provider for incident response and digital forensics, you should be ensuring that they provide forensic capabilities for enterprises. And basically it’s not “nice to have” anymore, it’s a must have, and it requires the mindset of a forensic examiner.

At the same time there are some advancements on the law side. We are seeing more and more countries introducing laws for enforcing enterprises to be compliant with the laws.

This one was accepted very recently, just a few weeks ago, by the US government. And it requires critical service infrastructure providers to report a breach within 72 hours. So it’s quickly becoming a case like GDPR.

And this one is from, I think, this week, just a few days ago. It applies to all the EU governments, and it requires them to share incident-related information with CERT-EU without any delay.

So, what we see here based on what we have seen on the industry, is we are now in a mindset shift from cybersecurity to cyber resilience because we have to accept that there will be a breach, and cybersecurity focuses on the security aspect of cyber, while the resilience also accepts the fact that you may be breached but you have sufficient means to get back to normal and learn from it and improve your resilience.

This is the reason enterprise forensic is a tactical solution for cyber resilience. And the reason is without the visibility you cannot fight. And it’s just like fighting with your eyes closed (unless you’re a Kung Fu master).

And most of the time when you have an alert (our current products are already creating alerts, by the way; we already have millions invested in these products, and we already have precursors), we don’t have people with the software skills to respond to these alerts. And “let’s get the logs” is the old approach; it’s not feasible anymore. And you cannot log everything from a machine; it’s practically impossible nowadays.

And forensics is not a side bonus feature. So, you may have some products with some level of forensic capabilities, but unfortunately it’s not a “nice to have” feature anymore. And as you have seen in the first few slides, it’s an industry that is quite old. It’s an industry on its own, so it’s not a bonus feature.

At this stage, you may be asking yourself, “but we have an EDR”. And again, these products are monitoring-based products and the visibility they’ll provide you is limited. I strongly suggest watching this video that was recorded by FalconForce. It shows you the level of visibility and how many months of visibility you can get from each product.

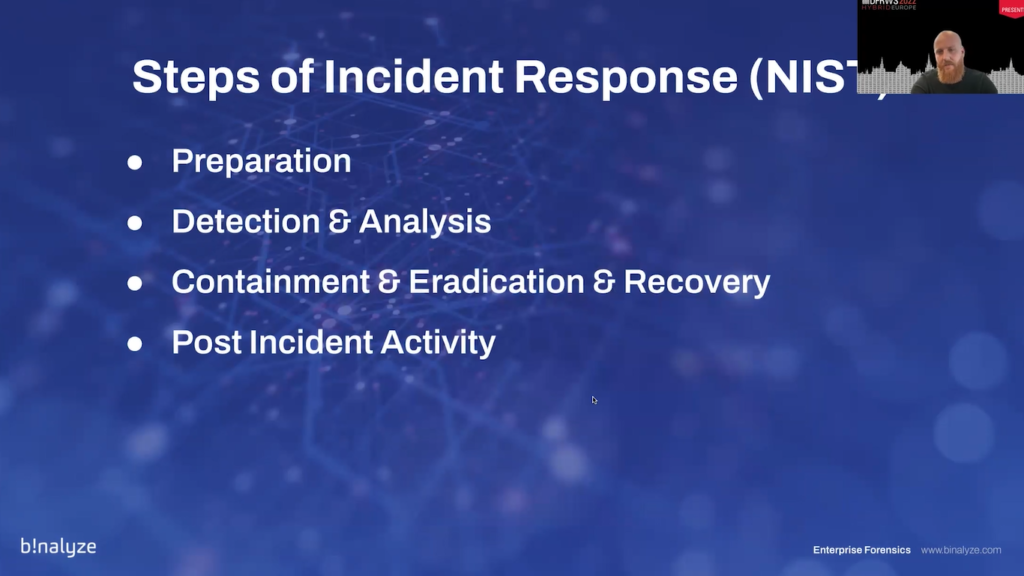

From the incident response perspective, enterprise forensics can be easily evaluated against the steps of the NIST incident response plan.

The first one is preparation. At this stage, knowing your assets is the first step, because without knowing what type of assets you have, or how many assets you have, you cannot respond to a breach. Remember Hafnium, SolarWinds. Without answering the question of how many machines we have that can be affected by this global exploit, you cannot respond.

So, you should be investing into tooling that will stop you from asking these questions, sending emails internally, and you should be integrating and automating platforms so that you can do more with less.

I think the quick way of understanding how prepared you are is asking yourself these questions: How many IIS servers do you have in your network? How many of these are running (let’s say) version 5? How much visibility can you get from an asset, if you want to investigate it right now? How long will it take to get a full picture of a cyber crime scene? (This may include multiple machines.)

And how far can you go back in time, if you need to see more? That will give you the answer that you need to invest in forensic capability. And what are your blind spots? Do you have visibility into cloud assets? Do you have visibility into remote locations or containers? These are critical questions to ask.
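The readiness questions above are easy to answer once asset data lives in something queryable rather than in email threads. The following is a minimal sketch over a made-up in-memory inventory; the `Asset` fields, hostnames and versions are all hypothetical, and a real deployment would pull this from an asset management or forensics platform.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Asset:
    hostname: str
    location: str              # "on-prem", "cloud", "remote" or "container"
    software: dict             # product name -> version string
    forensics_reachable: bool  # can evidence be collected from it right now?


# Hypothetical inventory, for illustration only.
INVENTORY = [
    Asset("web-01", "on-prem", {"IIS": "10.0"}, True),
    Asset("web-02", "on-prem", {"IIS": "5.0"}, True),
    Asset("web-03", "cloud", {"IIS": "10.0"}, False),
    Asset("db-01", "on-prem", {"PostgreSQL": "14"}, True),
    Asset("job-7f3", "container", {"nginx": "1.21"}, False),
]


def running(product: str, version_prefix: str = "") -> list:
    """Assets running `product`, optionally limited to a version prefix."""
    return [
        a for a in INVENTORY
        if a.software.get(product, None) is not None
        and a.software[product].startswith(version_prefix)
    ]


def blind_spots() -> Counter:
    """Count assets we cannot currently investigate, grouped by location."""
    return Counter(a.location for a in INVENTORY if not a.forensics_reachable)


print("IIS servers:", len(running("IIS")))
print("IIS version 5:", [a.hostname for a in running("IIS", "5")])
print("blind spots:", dict(blind_spots()))
```

The point of the sketch is the shape of the questions, not the data model: if each of these takes more than a query to answer, that is a preparation gap.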

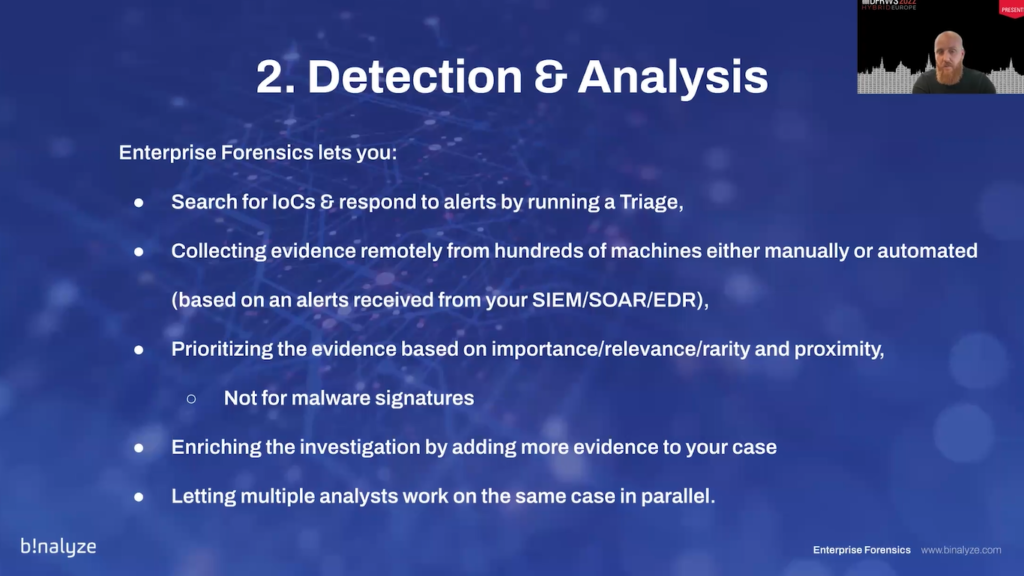

On the detection and analysis side, the importance of enterprise forensics shows itself by reducing the time to analyze and detect, because global security operation centers are facing talent wars, and the greatest need in these organizations is attack detection and analysis.

So, we should be eliminating noise from the signal, which is the most time-consuming task, most of the time. And most of our level one and level two analysts are spending their time searching for the needle in the haystack. And this is giving attackers time, by the way, while we are trying to navigate ourselves in the incident.

So enterprise forensics helps you quickly collect the evidence regardless of the location of your assets. And it also helps you prioritize what to look first. So, you’re not searching the needle in the haystack. And the less evidence to look at means less people and faster response time.

On the detection and analysis side, enterprise forensics lets you search for IoCs. So, it’s not only collecting, it’s also searching, like running a triage across your enterprise, and integrating with your SIEM/SOAR or EDR solutions, so that as soon as you receive an alert, you’re not required to respond manually to these alert precursors. And it helps you prioritize the evidence based on importance, relevance, rarity and proximity.

One thing I should emphasize here is that we are not in the age of checking for malware signatures. We need decision support solutions that will guide our security operations centers so that they can make educated guesses. And it helps you enrich the investigation by adding more assets into your case.
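One way to picture prioritizing evidence by importance, relevance, rarity and proximity is a simple scoring function. This is only a sketch under stated assumptions: the four factor definitions, the equal weighting, the shortened hash values and the sample data are all invented here and are not a description of how any product scores evidence.

```python
from dataclasses import dataclass

# Hypothetical indicators of compromise (hashes shortened for readability).
IOC_HASHES = {"aa11", "bb22"}


@dataclass
class Evidence:
    host: str
    path: str
    sha256: str
    seconds_from_alert: float  # distance in time from the triggering alert
    hosts_with_same_hash: int  # how many machines in the fleet carry this file
    asset_criticality: float   # 0..1, how important the host is


def score(e: Evidence, fleet_size: int) -> float:
    """Combine the four factors into one 0..1 score (equal weights, arbitrary)."""
    importance = e.asset_criticality
    relevance = 1.0 if e.sha256 in IOC_HASHES else 0.0
    rarity = 1.0 - e.hosts_with_same_hash / fleet_size
    proximity = 1.0 / (1.0 + e.seconds_from_alert / 3600)  # decays per hour
    return (importance + relevance + rarity + proximity) / 4


def prioritize(items: list, fleet_size: int) -> list:
    """Return evidence sorted so the most interesting items come first."""
    return sorted(items, key=lambda e: score(e, fleet_size), reverse=True)


findings = [
    Evidence("pc-17", r"C:\Program Files\App\app.exe", "cc33", 86400, 950, 0.2),
    Evidence("dc-01", r"C:\Windows\Temp\svc.exe", "aa11", 600, 1, 1.0),
    Evidence("pc-42", r"C:\Users\bob\Downloads\tool.exe", "dd44", 1800, 2, 0.3),
]

for e in prioritize(findings, fleet_size=1000):
    print(f"{score(e, 1000):.2f}  {e.host:<6} {e.path}")
```

With this sample data the IoC hit on the critical host sorts first, the rare recent download second, and the widely deployed application last, which is the kind of ordering that saves an analyst from searching the whole haystack.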

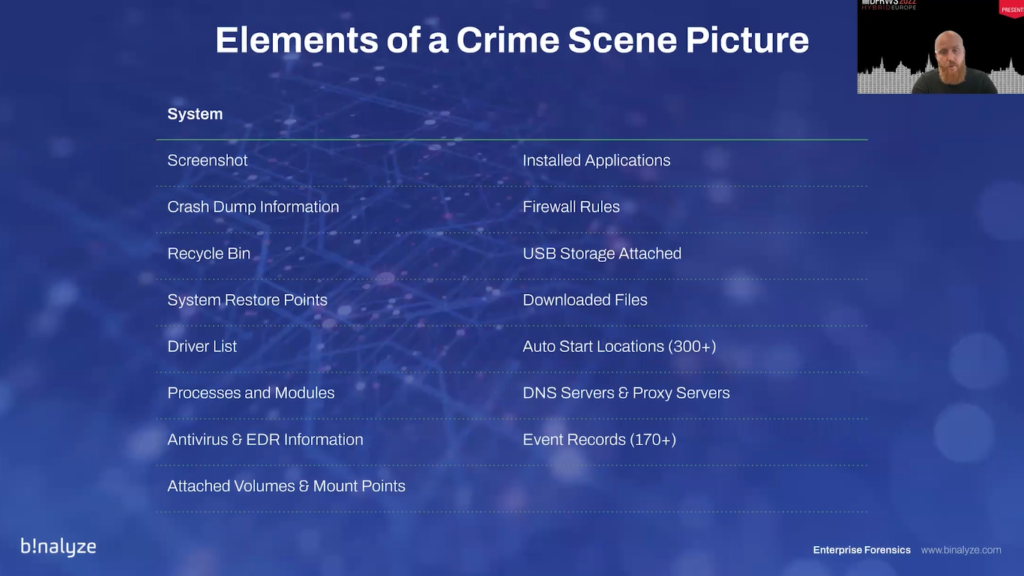

Before we start with the third stage of the NIST IR steps, the artifact of detection and analysis part is the crime scene picture. That’s how I call it.

And the crime scene picture, or cyber crime scene picture, is the picture that you get from a potential asset that has the highest resolution, which is basically a forensic snapshot that contains almost everything you need for an investigation, so that you won’t be required to ask any further details. It’s just like the picture you see on the slides. So, you can start the investigation right away.

There are a lot of evidence that should be inside this cyber crime scene picture. But just to be respectful with the time, we…I’ll be covering the main sections.

There are some system artifacts such as the screenshot of the asset, installed applications, firewall rules, all the running processes, the modules, the auto start locations, which can be more than 300 on an average Windows machine, and event reports that are highly important when it comes to investigating a machine. And, again, for investigating an average incident, you need to be looking at more than 170 event sources.

On the memory side, if you need to dig deeper, you can get a RAM image swap file, page file and hibernation file.

And on the browsers, there are a number of artifacts that starts with the URL visit industry, and that can go down to the downloaded list files: their signatures where they were downloaded from; most visited sites and case files.

On the file system, you may need to have a list of deleted files or the list of all the files on the system. And the registry itself is a goldmine. You can have a lot of artifacts that can prove useful in an investigation.

And on the network, we have artifacts like DNS cache, network shares, like TCP, UDP and ARP tables, and some locations like WMI…it’s mostly used by fileless malware, which got quite popular in recent years. And there are some application artifacts.

So, combining the application artifacts with the system evidence, you can get an even more enriched version of the crime scene investigation. And this can also be enriched further by having a packet capture from the endpoint. This can be NetFlow or a full packet capture. And upon this, you can also embed, like, ingest other sources of evidence like firewall logs, etc.

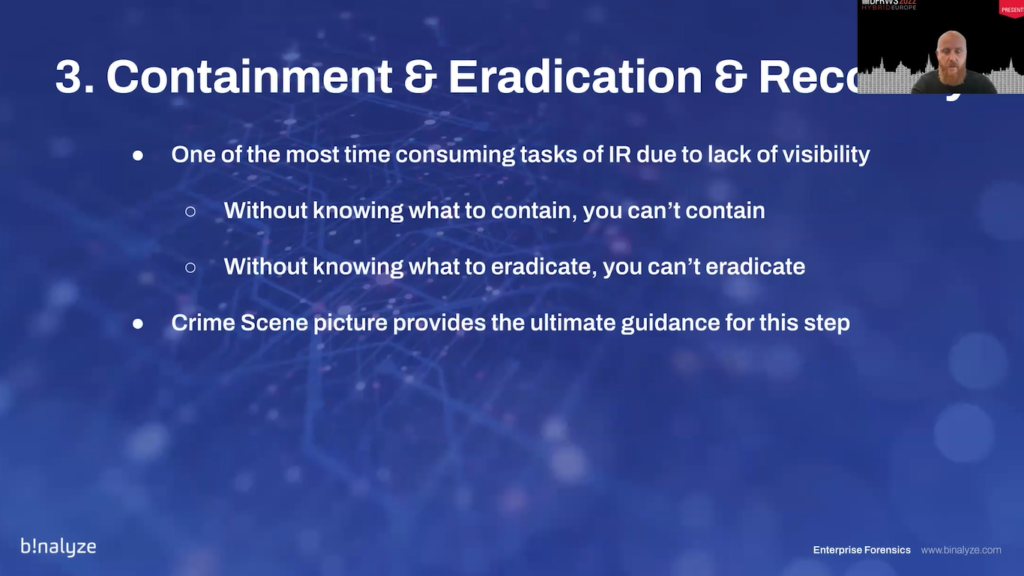

So, on the containment, eradication and recovery stage: this is one of the most time-consuming tasks of incident response (and this is due to lack of visibility). Without knowing what to contain, you cannot contain.

And that’s where enterprise forensics gets into the picture. And without knowing what to eradicate, you cannot eradicate. It’s like getting lost in an incident without knowing where to go, what to do. And this is the moment the crime scene picture provides you with ultimate guidance, so that you can start the containment, eradicate and get back to normal.

On the post-incident activity step, every investigation is a chance to train your team. So enterprise forensics, providing you with a forensic snapshot of a machine in the shortest possible time, remotely, helps you use this for training your team at the same time.

There are some great examples in the US Army, which they call the “Center for Army Lessons Learned”. So we can do the same by using forensic snapshots. So that way we can keep incrementally learning from the investigations.

The key takeaways from my presentation are: digital forensics is not a postmortem job anymore. And also it’s not a “nice to have” capability, it’s a must-have one. It has now become a part of our daily lives, whether you are a security operation center analyst or an MSSP investigator, and visibility is the key for any enterprise investigation, without exception.

And the last one is we have to embrace the modern approaches and rethink our priorities by putting aside the traditional methods of doing digital forensics. And the final word is: “every contact leaves a trace”, which is a famous saying by Edmond Locard. My question is how much of this is visible to you? Thank you for your time.

Hans: Thank you, Emre, for this excellent keynote. I saw some comments in the chat already asking if you can share the links, but I think we will have a PDF of your presentation on the conference website, so that should contain all of the links. Are there any questions here in the room? I think, yeah. I see one question, two questions.

Audience member 1: Hello. Thank you for the presentation. Do you recommend, and do you have advice to deploy enterprise forensics in the middle of an incident?

Emre: Actually, you have to. A great question! You have to, because most of the products we have right now are providing us visibility from the time they were able to detect. But as you have seen in the previous slides, it takes sometimes years, and that’s what I’ve personally experienced in a lot of the engagements we had.

There were even some incidents in which the company was breached by a number of APT groups and they were happily living inside. So, you have to deploy in the middle of the investigation, otherwise you can’t get the visibility and you’ll be limited to the time of the detection. So, you need to go back sometimes to the installation date of the machine. So the answer is, yes.

Hans: We have one more question here.

Emre: Sure.

Audience member 2: Thank you. Thank you for this talk. I have a comment/question on this. I think, at least from my experience, the main two problems with enterprise forensics is data protection. Especially when you say, “okay, we are going to use, like, an agent based approach where we collect all the data before something happens”.

And then when something happens, we can look at it. Maybe that’s a German thing, but everybody gets very nervous when we are collecting data without, like, an active incident. And I think the other point is just, like, storing that amount of data somewhere, especially in very large companies.

If you collect, like, event logs for all the clients and all the servers and stuff like that, and you want to have them ready for, I don’t know how long, like, the longer, the better, you need a whole lot of storage for that. And especially if you want to then, like, correlate this with, like, Splunk or Elasticsearch or something, this gets very expensive.

So, I don’t know, like, what’s your approach on, like, the data protection side of things and like how to maybe show people that this is a security feature and not, like, a security hazard for them?

Emre: Yeah. Great question. Thank you. So the first part, the data protection part. In traditional forensics, it’s quite easy because what you do is you take a bit-for-bit image of a hard drive, and then you calculate the hash, which is a core part of the process.

And then you have a solid image of that drive. Unfortunately that’s not the case in the enterprise forensics because it also uses the volatile data as one of its evidence sources.

So our approach for this problem was using RFC 3161 timestamps, which, in my opinion, are quite underused. It’s a great way of, like, proving that specific evidence existed at that specific time.

There are a number of products that started to use this, and I think it’s the best way so far for proving that you have the data solid as it was acquired at the time of incident.
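To make the hashing step behind this concrete: an RFC 3161 timestamp request carries a hash of the data (the `messageImprint` field), not the data itself, and the timestamping authority signs that hash together with the time. The minimal Python sketch below covers only the local part, computing a SHA-256 digest of an evidence file in chunks. The function names are hypothetical, and building and submitting the actual request is deliberately left out because it depends on the timestamping service and client library in use.

```python
import hashlib
from typing import Iterable


def sha256_of_chunks(chunks: Iterable[bytes]) -> str:
    """Return the hex SHA-256 digest of data supplied as a sequence of chunks."""
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a (possibly large) evidence file without loading it into memory."""
    with open(path, "rb") as handle:
        # iter() with a sentinel keeps reading until read() returns b"".
        return sha256_of_chunks(iter(lambda: handle.read(chunk_size), b""))


if __name__ == "__main__":
    # The resulting digest is what would be placed in the timestamp request.
    print(sha256_of_chunks([b"example evidence bytes"]))
```

Because only the digest leaves the machine, the evidence itself does not need to be shared with the timestamping authority.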

And the second one is…I agree, like, it can quickly become tons of data when you want to collect event logs from thousands of machines. So that’s where the importance of the triage stage gets into the picture.

And that’s why the collaboration and the enrichability of investigations plays an important role. The reason is most of the time we start with a few assets and that’s what most of our customers are doing. So, they start with a number of assets, and that way they’re not required to collect, like, evidence from thousands of machines. Most of the times it’s like 5, 10 machines.

And once they have sufficient evidence for the breach they can quickly run (or they should quickly run) a sweep across the whole enterprise so that they know how many other assets are involved in that breach.

And I think that’s the reason…it’s not only about collecting evidence, prioritizing evidence that saves us from the investigation time. It’s also about the second stage, which is comprised of running a triage and making sure you have full coverage on the enterprise and there are no, like, loopholes in the process.
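The two-stage flow described here (investigate a handful of assets, then sweep the rest of the enterprise for what was found) can be sketched in Python as a simple set intersection. Everything in this sketch is a hypothetical assumption for illustration: the asset names, the shortened hash strings, and the idea that each asset reports a set of file hashes.

```python
from typing import Dict, Set


def sweep(ioc_hashes: Set[str], inventory: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Return, per asset, the IoC hashes that were seen on that asset.

    Assets with no matches are left out of the result.
    """
    hits: Dict[str, Set[str]] = {}
    for asset, seen_hashes in inventory.items():
        matched = seen_hashes & ioc_hashes  # set intersection
        if matched:
            hits[asset] = matched
    return hits


if __name__ == "__main__":
    # IoCs extracted from the first few investigated machines (made-up values).
    iocs = {"aaa111", "bbb222"}
    # Lightweight per-asset inventory gathered during triage (made-up values).
    inventory = {
        "laptop-01": {"aaa111", "ccc333"},
        "server-07": {"ddd444"},
        "laptop-42": {"bbb222", "aaa111"},
    }
    for asset, matched in sorted(sweep(iocs, inventory).items()):
        print(asset, sorted(matched))
```

Only the assets that come back from the sweep then need a full evidence collection, which keeps the data volume far below collecting everything from every machine.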

I think that answers your question.

Hans: Okay. Thank you. And maybe, and also related to the previous question: should a company deploy incident response software during an incident? I agree, right, that’s something that you should do, better late than never. But I think, and I appreciate that of course your company Binalyze develops products in line with this vision.

I think the…what I felt that I learned from talking to you and looking at your company is that the managed part, being able…not having to set up software on workstations in a lab, but actually having your lab in the cloud so that it’s easy to collaborate in the 24/7.

I think people need to, well…it takes a while to get…to really let it sink in and what it means if you can do 24/7 investigations in multiple locations and also collecting and doing ad hoc deployments, I think of the endpoint, I think that’s also crucial in your situation.

Emre: Something to add (thanks a lot, that was a great like aspect of it as well), one of the biggest problems of…when you need to have visibility, it’s not, like, specific to our product, it’s the problem of the industry itself. It’s like you are inside a fire. So…and most of the time it takes a lot of time and effort to deploy something to the assets.

So that’s why it’s always great to have this capability always available as part of your security stack, and apart from using it as a postmortem approach, I can see that it’s now becoming a regular compromise assessment mindset.

So, this shouldn’t be seen as a postmortem solution, a postmortem process. So, “I will be doing this when I need to”. I don’t agree with that. And the recent breaches prove this. You need to be doing this every month, every week, and make this a practice. So, I think we are moving into that kind of a stage where this needs to be on a continuous basis.

Hans: Okay, great. Thank you. Emre. I’m not sure, are there any more questions from the chat? Yep.

Host 2: So, yes. One question from the chat: what is the role of collaboration between enterprises and enterprise forensics?

Emre: Great. So the biggest problem is we have security operation centers, and a medium to large enterprise may have offices in 40-80 countries. And when you have that many countries, that many offices, that many regions then it becomes almost impossible to have a single security operation center.

So, that’s why most of the time these companies, these…even MSSPs, managed security service providers, are working with shifts. So I mean, we are humans, after some stage we need to hand it over to some other team that will be taking over the investigation.

So, for that reason, collaboration is a big part of enterprise forensics because you need to be sure that you are also collaborating on the same case without being limited to any locations, any offices. And that’s why it’s a core part of any investigation right now.

And that’s also one of the biggest problems with the traditional forensics, because in traditional forensics, you’re limited to one image on a single machine that can be investigated by a single investigator.

Of course, there are solutions like deploying these products into cloud and giving them access, but still, it’s not something you can use simultaneously by tens of investigators. So, that’s how it plays an important role in enterprise forensic context.

Hans: Okay. I see one more question on the chat: do you think threat hunting and enterprise forensics will merge?

Emre: Oh, 100%. Yeah. Because the reason is, we are having more and more companies working with CTI providers, and when they receive the alert, when they receive the IoC, or when they find the IoC, the next step is running this process automatically, if possible, or manually. So, I think they are quickly, like, getting closer to each other as the following steps of each other.

Hans: Okay, Emre. We are a little over time, we started a bit later, so that’s not…I wanna thank you. A final round of applause.