By John Patzakis [1] and Brent Botta [2]

Previously, in Forensic Focus, we addressed the issue of evidentiary authentication of social media data (see previous entries here and here). General Internet site data available through standard web browsing, as opposed to social media data provided by APIs or user credentials, presents slightly different but equally compelling challenges, which are outlined below. To help address these challenges, we are introducing and outlining a specific technical process to authenticate collected “live” web pages for investigative and judicial purposes.[3] We are not asserting that this process must be adopted as a universal standard, and we recognize that there may be other valid means to authenticate website evidence. However, we believe that the technical protocols outlined below can be a very effective means to properly authenticate and verify evidence collected from websites while at the same time facilitating an automated and scalable digital investigation workflow.

Legal Authentication Requirements

The Internet provides torrential amounts of evidence potentially relevant to litigation matters, with courts routinely facing proffers of data preserved from various websites. This evidence must be authenticated in all cases, and the authentication standard is no different for website data or chat room evidence than for any other. Under US Federal Rule of Evidence 901(a), “The requirement of authentication … is satisfied by evidence sufficient to support a finding that the matter in question is what its proponent claims.” United States v. Simpson, 152 F.3d 1241, 1249 (10th Cir. 1998).

Ideally, a proponent of the evidence can rely on uncontroverted direct testimony from the creator of the web page in question. In many cases, however, that option is not available. In such situations, the testimony of the viewer/collector of the Internet evidence “in combination with circumstantial indicia of authenticity (such as the dates and web addresses), would support a finding” that the website documents are what the proponent asserts. Perfect 10, Inc. v. Cybernet Ventures, Inc. (C.D.Cal.2002) 213 F.Supp.2d 1146, 1154 (emphasis added). See also Lorraine v. Markel American Insurance Company, 241 F.R.D. 534, 546 (D.Md. May 4, 2007) (citing Perfect 10, and referencing MD5 hash values as an additional element of potential “circumstantial indicia” for authentication of electronic evidence).

Challenges with Current Methods

When examining solutions to capture Internet web pages as evidence, an examiner should be able to preserve and display all the available “circumstantial indicia,” to borrow the Perfect 10 court’s term, in order to present the best case possible for the authenticity of Internet-based evidence collected with investigation software. This includes collecting all available metadata and generating an MD5 checksum or “hash value” of the preserved data.

But HTML web pages pose unique authentication challenges. For instance, merely generating an MD5 checksum of the entire web page, or of just the web page source file, provides limited value because web pages are fluid and dynamic and change constantly. In fact, a web page collected from the Internet twice in immediate succession would very likely yield two different MD5 checksums. This is because web pages typically feature links to many external items that are dynamically loaded upon each page view. These externally linked items take the form of cascading style sheets (CSS), graphical images, JavaScript files and other supporting files. This linked content can be stored on another server in the same domain, but is often located somewhere else on the Internet.

When the web browser loads a web page, it consolidates all these items into one viewable page for the user. Since the web page source file contains only the links to the files to be loaded, the MD5 checksum of the source file can remain unchanged even if the content of the linked files becomes completely different. Therefore, the content of the linked items must be considered when assessing the authenticity of the web page. To further complicate web collections, entire sections of a web page are often not visible to the viewer. These hidden areas serve various purposes, including meta-tagging for Internet search engine optimization. The servers that host websites can either store static web pages or serve dynamically created pages that usually change each time a user visits the website, even though the visible content may appear unchanged. It is with these dynamics and challenges in mind that we formulated our process for authentication of website evidence.
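The point about the source file hash staying the same while a linked item changes can be illustrated with a minimal Python sketch. The file names and byte contents below are hypothetical stand-ins for a captured page and its linked items, not data from any real capture.

```python
import hashlib


def md5_hex(data: bytes) -> str:
    """Return the MD5 digest of data as a lowercase hex string."""
    return hashlib.md5(data).hexdigest()


# Hypothetical page source: it only references the stylesheet and the image.
page_source = (
    b'<html><head><link rel="stylesheet" href="style.css"></head>'
    b'<body><img src="photo.jpg"><p>Hello</p></body></html>'
)

# Two hypothetical visits: identical source, but the linked image was replaced.
visit_1 = {
    "index.html": page_source,
    "style.css": b"p { color: black; }",
    "photo.jpg": b"original image bytes",
}
visit_2 = {
    "index.html": page_source,
    "style.css": b"p { color: black; }",
    "photo.jpg": b"replaced image bytes",
}

source_unchanged = md5_hex(visit_1["index.html"]) == md5_hex(visit_2["index.html"])
image_unchanged = md5_hex(visit_1["photo.jpg"]) == md5_hex(visit_2["photo.jpg"])

print("source hash unchanged:", source_unchanged)
print("image hash unchanged:", image_unchanged)
```

A checksum of the source file alone would report no change between the two visits, even though the page a viewer sees is different.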

Itemized and Dual Checksums: A New Approach

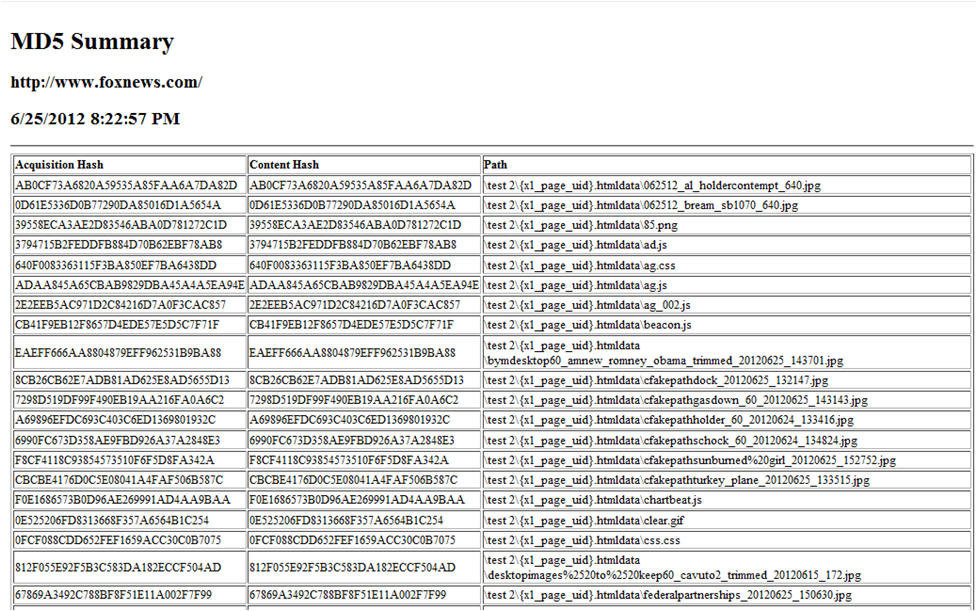

The first step of the process, which we have dubbed “Itemized and Dual Checksums” (IDC), is to generate, at the point of collection, an MD5 checksum log representing each item that constitutes the web page, including the main web page’s source. Then an MD5 representing the content of all the items contained within the web page is generated and preserved. To address the additional complication of the web page’s hidden content, which also must be preserved and authenticated, two different MD5 fields are generated and logged for each item that makes up a web page. The first is the acquisition hash, which is calculated from the information exactly as collected. The second is the content hash, which is based on the actual “body” of a web page and ignores the hidden metadata. By taking this approach, the content hash will show whether the user-viewable content has actually changed, and not just a hidden metadata tag provided by the server. To illustrate, the screenshot above, taken from the metadata view of X1 Social Discovery,[4] a solution designed for investigative professionals to address website capture evidence, shows the MD5 checksums generated for individual objects on a single web page.
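The distinction between the two hashes can be sketched in Python as follows. This is an illustration under stated assumptions, not the implementation used by X1 Social Discovery: the names `BodyTextExtractor`, `acquisition_hash` and `content_hash` are hypothetical, and "content" is assumed here to mean the text inside `<body>`, excluding scripts and styles.

```python
import hashlib
from html.parser import HTMLParser


class BodyTextExtractor(HTMLParser):
    """Collect text found inside <body>, skipping <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.in_body = False
        self.skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag == "body":
            self.in_body = True
        elif tag in ("script", "style"):
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag == "body":
            self.in_body = False
        elif tag in ("script", "style") and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if self.in_body and not self.skip_depth and data.strip():
            self.chunks.append(data.strip())


def acquisition_hash(raw: bytes) -> str:
    """MD5 of the bytes exactly as collected."""
    return hashlib.md5(raw).hexdigest()


def content_hash(raw: bytes) -> str:
    """MD5 of the body text only (an assumed definition of 'content')."""
    parser = BodyTextExtractor()
    parser.feed(raw.decode("utf-8", errors="replace"))
    parser.close()
    return hashlib.md5("\n".join(parser.chunks).encode("utf-8")).hexdigest()


# Hypothetical captures: only a hidden, server-generated meta tag differs.
capture_a = (b'<html><head><meta name="request-id" content="111"></head>'
             b'<body><p>Account suspended</p></body></html>')
capture_b = (b'<html><head><meta name="request-id" content="222"></head>'
             b'<body><p>Account suspended</p></body></html>')

print(acquisition_hash(capture_a) == acquisition_hash(capture_b))  # False
print(content_hash(capture_a) == content_hash(capture_b))          # True
```

For binary items such as images, which have no hidden markup to strip, a tool following this sketch would need its own rule for the content hash; that choice is left open here.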

The time stamp of the capture and the URL of the web page are also documented in the case. By generating hash values of all individual objects within the web page, the examiner is better able to pinpoint any changes that may have occurred in subsequent captures. Additionally, if a specific item appears on the web page, such as an incriminating image, then it is important to have an individual MD5 checksum of that key piece of evidence. Finally, any document file linked on a captured web page, such as a PDF, PowerPoint, or Word document, should also be individually collected, with corresponding content hash values generated.
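One way to use itemized hashes to pinpoint changes between captures is sketched below in Python. The function `diff_captures` and the item names are hypothetical, and each capture is assumed to be reduced to a simple mapping of item name to MD5 value.

```python
import hashlib


def md5_hex(data: bytes) -> str:
    """Return the MD5 digest of data as a lowercase hex string."""
    return hashlib.md5(data).hexdigest()


def diff_captures(earlier: dict, later: dict) -> dict:
    """Compare two {item_name: md5_hex} logs and classify each item."""
    shared = earlier.keys() & later.keys()
    return {
        "changed": sorted(name for name in shared if earlier[name] != later[name]),
        "added": sorted(later.keys() - earlier.keys()),
        "removed": sorted(earlier.keys() - later.keys()),
    }


# Hypothetical itemized logs from two captures of the same page.
first_capture = {
    "index.html": md5_hex(b"<html>page source</html>"),
    "style.css": md5_hex(b"p { color: black; }"),
    "photo.jpg": md5_hex(b"original image bytes"),
}
second_capture = {
    "index.html": md5_hex(b"<html>page source</html>"),
    "photo.jpg": md5_hex(b"replaced image bytes"),
    "banner.gif": md5_hex(b"new banner bytes"),
}

report = diff_captures(first_capture, second_capture)
print(report)
```

With a single whole-page checksum, the examiner would only learn that something changed; the itemized log identifies which objects did.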

As with all forensically collected items, there needs to be a single value that represents the authenticity of the collection as a whole. A single MD5 hash is generated by calculating the hash of the log file that lists each collected item together with its acquisition and content hash values. This gives the collected web page a single MD5 hash value.
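The roll-up into a single value can be sketched in Python as below. The log layout (one tab-separated line per item, sorted by item name) is an assumption made for illustration; the article does not specify the log file format, and `build_log` and `page_hash` are hypothetical names.

```python
import hashlib


def md5_hex(data: bytes) -> str:
    """Return the MD5 digest of data as a lowercase hex string."""
    return hashlib.md5(data).hexdigest()


def build_log(items: list) -> bytes:
    """Serialize (name, acquisition_hash, content_hash) rows into a log.

    Rows are sorted so the log does not depend on collection order.
    The tab-separated layout is an assumed format.
    """
    lines = [f"{name}\t{acq}\t{content}" for name, acq, content in sorted(items)]
    return ("\n".join(lines) + "\n").encode("utf-8")


def page_hash(log: bytes) -> str:
    """Single MD5 value representing the whole collected page."""
    return md5_hex(log)


# Hypothetical itemized entries: (item name, acquisition hash, content hash).
items = [
    ("index.html", md5_hex(b"raw html with hidden tags"), md5_hex(b"visible text")),
    ("photo.jpg", md5_hex(b"image bytes"), md5_hex(b"image bytes")),
    ("style.css", md5_hex(b"p { color: black; }"), md5_hex(b"p { color: black; }")),
]

original = page_hash(build_log(items))

# Changing the hash of any single item changes the page-level hash.
tampered_items = [items[0], ("photo.jpg", md5_hex(b"other bytes"), md5_hex(b"other bytes")), items[2]]
tampered = page_hash(build_log(tampered_items))

print(original != tampered)
```

Because the page-level value is derived from the log rather than from the raw page, it stays tied to the itemized entries that explain it.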

We believe this IDC approach to authentication of website evidence is unique in its detail and can present a new standard, subject to industry adoption.

In addition to supporting these requirements, we also strongly believe this process should be automated so as to support scalability requirements and an investigative workflow. An authentication methodology that requires manual, tedious steps greatly hinders one of the main requirements for effective digital investigations, which is to collect evidence in a scalable and efficient manner. A scalable process should integrate these authentication steps to collect website evidence either through a one-off capture or through full crawling, including on a scheduled basis, and have that information instantly reviewable in native file format through a federated search that includes up to thousands of web pages and items of social media evidence in a single case.

Many real-world investigations require collection from thousands of individual web pages under significant time constraints. The effectiveness of proper collection and authentication can be significantly degraded if the evidence is not collected in an automated manner and cannot be effectively searched, sorted (including by metadata fields), tagged and reviewed in order to expediently identify key substantive evidence as well as all available “circumstantial indicia.” As such, the collected website data should not be a mere image capture or PDF, but a full HTML (native file) collection, to ensure preservation of all metadata and other source information as well as to enable instant and full search and effective evidentiary authentication. All of the evidence should be searched in one pass, reviewed, tagged and, if needed, exported to an attorney review platform from a single workflow.

Conclusion

Itemized and Dual Checksums (IDC) represents a new method for defensible and thorough evidentiary authentication of live Internet website data, while at the same time supporting a scalable and effective collection process. IDC is currently employed by the X1 Social Discovery software, but is disclosed and illustrated here as an “open source” methodology for the benefit of other developers in the industry.

